By Zhang Lei

A key direction of Kubernetes development today is to expose more interfaces and extension mechanisms to developers and users, and to hand more user requirements over to the community to implement. Among these interfaces, CRI is the most mature and the most important. In 2018, the ShimV2 API, led by the containerd community, gave users a more mature and convenient way to integrate their own container runtime on top of CRI.

This speech covers the design and implementation of key technical features, including Kubernetes interface design, CRI, container runtimes, ShimV2, and RuntimeClass, and uses KataContainers as an example to demonstrate how these features are used. This article is based on notes from Zhang Lei's speech at KubeCon + CloudNativeCon 2018.

Hello everyone, I am Zhang Lei, and I currently work at Alibaba Group. Today, I'd like to share the designs of Kubernetes CRI and containerd ShimV2 with you, which show an important direction that the community is heading in. First, let's take a brief look at how Kubernetes works.

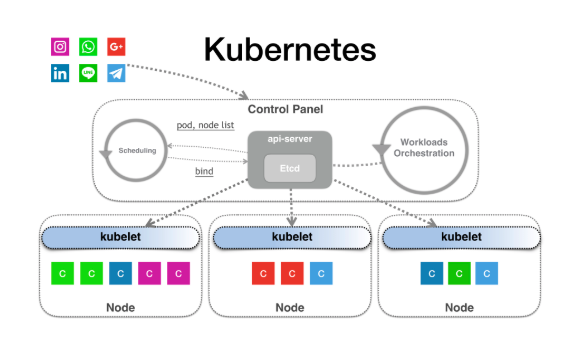

As we all know, Kubernetes has a control plane at the top, which many people also call the Master node. After you submit a workload, that is, your application, to Kubernetes, the API server first saves it to etcd as an API object.

In Kubernetes, the controller manager is responsible for orchestration. It runs a set of controllers, each of which executes a control loop. These control loops perform the orchestration and create the Pods that your applications require. Note that Pods are created, not containers.

Once a Pod appears, the Scheduler, which watches for new Pods, notices it. The Scheduler runs all the scheduling algorithms for the new Pod and writes the result, that is, the name of a Node, into the NodeName field of the Pod object. This is the so-called binding operation. The binding result is then written back to etcd, and that is the whole working process of the Scheduler. So what is the final result after the control plane finishes this round of work? One of your Pods is bound to a Node, which is called scheduling.
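To make the binding step concrete, here is a minimal sketch in Python. It is not the real Scheduler: the `Node` and `Pod` classes, the `free_cpu` field, and the "most free CPU wins" policy are assumptions made up for illustration. The only point it shows is that scheduling ends by filling in the node name on the Pod object.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: int  # free millicores; a stand-in for real scheduling inputs


@dataclass
class Pod:
    name: str
    cpu_request: int
    node_name: Optional[str] = None  # empty until the Pod is bound


def schedule(pod: Pod, nodes: List[Node]) -> Pod:
    """Pick a node for an unbound Pod and record it in node_name (the binding)."""
    # Filter: keep only the nodes that can fit the Pod.
    feasible = [n for n in nodes if n.free_cpu >= pod.cpu_request]
    if not feasible:
        return pod  # the Pod stays pending and node_name stays None
    # Score: prefer the node with the most free CPU (a toy policy).
    best = max(feasible, key=lambda n: n.free_cpu)
    pod.node_name = best.name
    return pod


nodes = [Node("node-a", 500), Node("node-b", 2000)]
bound = schedule(Pod("web", cpu_request=1000), nodes)
print(bound.node_name)  # node-b
```

In the real system the result is written back through the API server rather than mutated in place, but the outcome is the same: a Pod object that names its Node.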

What about the Kubelet? It runs on every node and watches the changes of all Pod objects. When it finds that a Pod has been bound to a Node, and that the Node is itself, the Kubelet takes over all the subsequent tasks.
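The selection rule described above can be sketched in a few lines of Python. The function name `pods_to_run` and the sample objects are hypothetical. The only detail taken from Kubernetes is that the binding lives in the Pod's `spec.nodeName` field.

```python
def pods_to_run(pod_objects, my_node_name):
    """Return the names of Pods bound to this node; skip unbound and foreign Pods."""
    return [
        p["metadata"]["name"]
        for p in pod_objects
        if p["spec"].get("nodeName") == my_node_name
    ]


observed = [
    {"metadata": {"name": "web"}, "spec": {"nodeName": "node-b"}},
    {"metadata": {"name": "db"}, "spec": {"nodeName": "node-a"}},
    {"metadata": {"name": "pending"}, "spec": {}},  # not scheduled yet
]
print(pods_to_run(observed, "node-b"))  # ['web']
```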

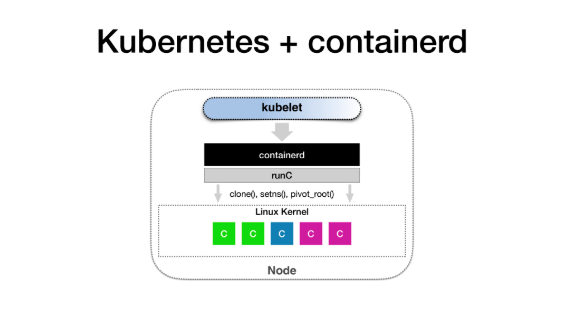

So, what is the Kubelet doing? It's very simple. After the Kubelet obtains this information, it calls the containerd process that you run on each machine and runs every container in this Pod.

At this time, containerd helps you call runC. So in the end, it is actually runC that helps you set up these namespaces and cgroups, and helps you chroot, building a container required by an application. This is the simple principle of how the entire Kubernetes works.
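What runC receives is an OCI runtime bundle whose `config.json` names the namespaces, cgroups, and root filesystem to set up. The sketch below builds a heavily trimmed version of such a config in Python. The helper name, the cgroup path, and the memory limit are assumptions for illustration, and a real config (for example, one generated by `runc spec`) contains many more fields.

```python
import json


def minimal_oci_config(args, rootfs="rootfs"):
    """Build a trimmed-down OCI runtime config for illustration only."""
    return {
        "ociVersion": "1.0.2",
        "process": {"args": list(args), "cwd": "/"},
        # The root filesystem the process is confined to.
        "root": {"path": rootfs, "readonly": True},
        "linux": {
            # Namespaces give the process its own view of the system.
            "namespaces": [
                {"type": t} for t in ("pid", "network", "ipc", "uts", "mount")
            ],
            # Cgroups set the resource boundary (hypothetical path and limit).
            "cgroupsPath": "/demo/container-a",
            "resources": {"memory": {"limit": 256 * 1024 * 1024}},
        },
    }


config = minimal_oci_config(["/bin/sh"])
print(json.dumps(config, indent=2))
```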

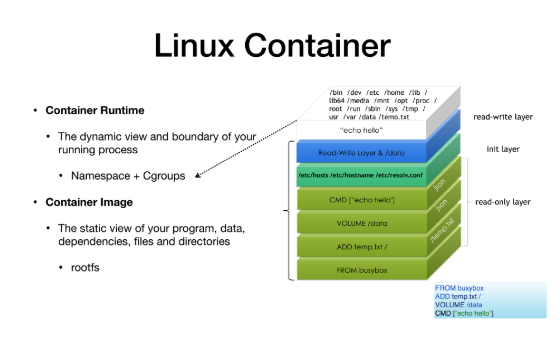

In this case, you may ask a question: what is a container? In fact, it is very simple. The container we usually refer to is the Linux container. You can divide the Linux container into two parts: the Container Runtime and the Container Image.

The so-called Runtime part is the dynamic view and the resource boundary of the running process, so it is built for you by namespaces and cgroups. For Image, you can see it as the static view of the program you want to run, so it is actually a compressed package of your program + data + all dependencies + all directory files.

When these compressed packages are mounted together as a union mount, we call the result the rootfs. The rootfs is the static view of your entire process: it is the world as the process sees it. So this is the Linux container.
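The lookup behavior of a union mount can be imitated with Python's `collections.ChainMap`, where each dictionary stands for one image layer. This is only an analogy under simplifying assumptions: the paths and contents are made up, and real union filesystems also handle deletions (whiteouts) and a writable top layer.

```python
from collections import ChainMap

# Each dict is one image layer: path -> content (all values are made up).
base = {"/bin/sh": "busybox", "/etc/os-release": "alpine"}
deps = {"/usr/lib/libssl.so": "openssl"}
app = {"/app/server": "my-binary", "/etc/os-release": "alpine-patched"}

# ChainMap searches left to right, so the topmost layer is listed first.
rootfs = ChainMap(app, deps, base)

print(rootfs["/etc/os-release"])  # alpine-patched (the upper layer wins)
print(sorted(rootfs))             # the merged view the process sees
```

The process sees one merged directory tree, even though the files come from several separately packaged layers.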

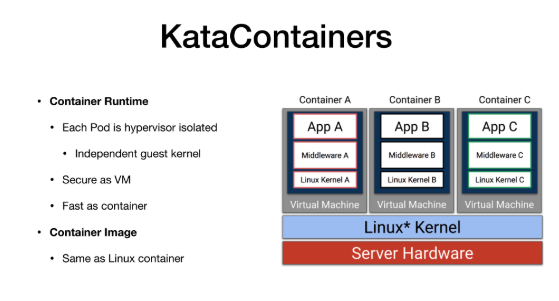

Today, we will also talk about another kind of container, which is quite different from the Linux container above. Its Container Runtime is implemented by a hypervisor using hardware virtualization, just like a virtual machine. Therefore, each KataContainers Pod is a lightweight virtual machine with a complete Linux kernel. KataContainers can provide strong isolation like VMs, but thanks to their optimization and performance design, they are about as agile as containers. This point will be emphasized later. For the Image part, KataContainers are no different from Docker. They use the same standard Linux container images and support standard OCI images, so this part is exactly the same.

You may ask why we need KataContainers. Because we care about security. For example, many financial scenarios, encryption scenarios, and even many blockchain scenarios require a secure Container Runtime, which is one of the reasons why we emphasize KataContainers.

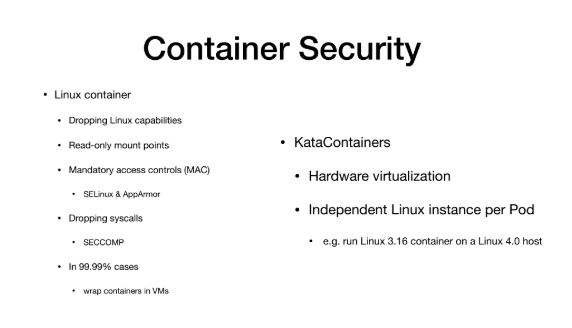

If you are using Docker, how can you use it safely? You may have a series of techniques and tricks. First, you can drop some Linux capabilities to specify what the Runtime can and cannot do. Second, you can use read-only mount points. Third, you can use SELinux or AppArmor to protect the container. Another way is to block some syscalls directly using seccomp.
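As a rough illustration of how these techniques combine, the Python sketch below builds a seccomp profile in the JSON layout Docker accepts and assembles a matching `docker run` command line. It only constructs the data and never runs Docker. The helper names, the chosen syscalls and capabilities, and the file name `profile.json` are assumptions for the example, not a recommended policy.

```python
import json


def seccomp_profile(denied_syscalls):
    """Allow everything by default and return an error for the listed syscalls."""
    return {
        "defaultAction": "SCMP_ACT_ALLOW",
        "syscalls": [
            {"names": sorted(denied_syscalls), "action": "SCMP_ACT_ERRNO"}
        ],
    }


def hardened_run_argv(image, drop_caps, seccomp_path):
    """Assemble a docker run command that applies the four techniques."""
    argv = ["docker", "run", "--read-only"]           # read-only root filesystem
    for cap in drop_caps:
        argv += ["--cap-drop", cap]                   # drop Linux capabilities
    argv += ["--security-opt", f"seccomp={seccomp_path}"]
    argv += ["--security-opt", "apparmor=docker-default"]
    argv.append(image)
    return argv


profile = seccomp_profile({"ptrace", "mount"})
argv = hardened_run_argv("nginx", ["NET_RAW", "SYS_ADMIN"], "profile.json")
print(json.dumps(profile))
print(" ".join(argv))
```

Even in this toy form, the hard part is visible: someone has to decide which capabilities and syscalls go into those lists for each application.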

However, I need to emphasize that all these operations introduce a new layer between your container and the host, because that layer filters and intercepts your syscalls. Therefore, the more layers you build here, the worse your container performance becomes, and the performance impact is passed on to the application.

More importantly, before performing these operations, you need to figure out exactly what to do and which syscalls to drop. This requires specific analysis of specific problems. So how should I tell my users how to do this?

It is easy to talk about, but few people know how to do it in practice. So in 99.99% of cases, people simply run containers inside virtual machines, especially in public cloud scenarios.

KataContainers use the same hardware virtualization as virtual machines and have an independent kernel. Therefore, the isolation provided by KataContainers can be trusted just as much as you trust a VM.

More importantly, each Pod now has an independent kernel, just like a small virtual machine, so the kernel version run by your container can be completely different from the one run by the host machine. This is completely OK, just as it is in a virtual machine. So this is one of the reasons why I emphasize KataContainers: they provide security and multi-tenant capabilities.

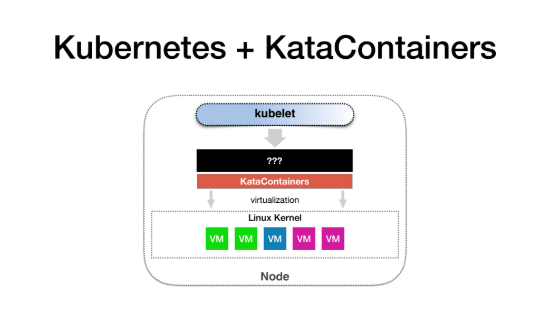

Therefore, naturally, a demand arises, that is, how can we run a KataContainer in Kubernetes?

Let's first see what the Kubelet needs to do. The Kubelet must find a way to call KataContainers just as it calls containerd, and KataContainers are then responsible for setting up the hypervisor and running this small VM. Therefore, we need to think about how to make Kubernetes operate KataContainers in a reasonable way.

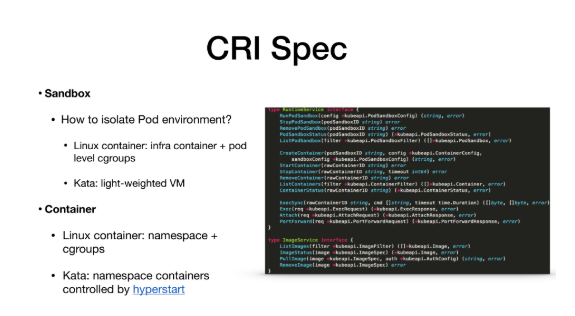

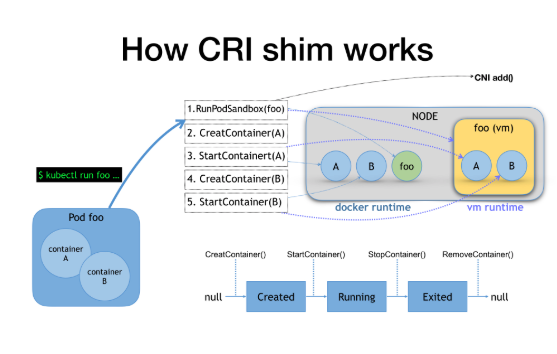

This demand is related to the Container Runtime Interface that we have been promoting in the community, which is abbreviated as CRI. CRI has only one function: it describes, for Kubernetes, what operations a container should have and what parameters each operation should take. This is one of the design principles of CRI. However, it should be noted that CRI is a container-centric API, which has no concept of a Pod. Be sure to keep this in mind.

Why should we design it this way? One reason is that we do not want a project like Docker to have to know what a Pod is and expose a Pod API. That would be an unreasonable requirement. A Pod is always a Kubernetes orchestration concept, which has nothing to do with containers, so that is why we have to make this API container-centric.

Another reason is maintenance. If the concept of a Pod existed in CRI, any subsequent change to a Pod feature might cause a change to CRI, and for an interface, that maintenance cost is relatively high. So if you take a closer look at CRI, you can find that it only specifies some common interfaces for manipulating containers.

Here, I can roughly divide CRI into two parts: the Container and the Sandbox. The Sandbox describes the mechanism through which the Pod is implemented, so it is the part that really depends on the container runtime. For Docker or Linux containers, what actually runs for the Sandbox is a container called the "infra container". It is a very small container that holds the network and the other shared namespaces of the entire Pod.

However, if Kubernetes uses a Linux Container Runtime such as Docker, the Sandbox does not provide you with Pod-level isolation, apart from the Pod-level cgroups. This is where the difference lies: if you use KataContainers, a lightweight virtual machine is created for you in this step.

In the next stage, the Containers API, Docker starts the user containers on the host machine. This is not the case for Kata, which sets up the namespaces required by these user containers inside the lightweight virtual machine corresponding to the Pod, that is, inside the Sandbox created earlier, instead of starting new containers on the host one by one. With this mechanism, once the control plane completes its work, the Pod has been scheduled. At this point, the Kubelet creates and starts the Pod, and finally calls CRI. Before this call, the concept of a Container Runtime does not appear anywhere in the Kubelet or in Kubernetes.

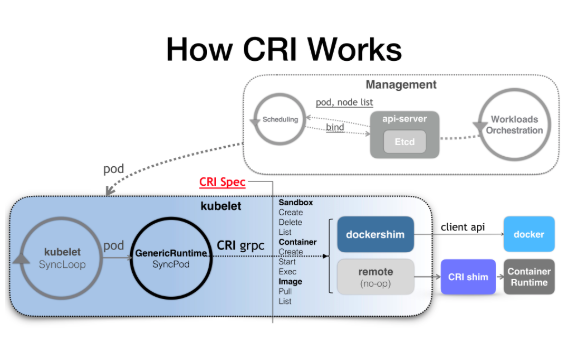

So, in this step, if you use Docker, the dockershim in Kubernetes is responsible for responding to this CRI request. However, if you are not using Docker, you have to run in a mode called Remote, which means that you need to write a CRI shim to serve this CRI request. This is the next topic we will discuss today.

What does a CRI shim do? It translates CRI requests into Runtime APIs. For example, if a Pod has a container A and a container B, after we submit it to Kubernetes, the CRI calls issued by the Kubelet form roughly the following sequence. First, it runs the Sandbox foo. With Docker, this starts an infra container, which is a small container named foo. With Kata, this starts a virtual machine named foo. These are not the same thing.

Then, when containers A and B are created and started, two containers are started on the host with Docker, but with Kata, two small sets of namespaces are started inside the small virtual machine, the Sandbox. Again, the two are not the same. To sum up, to run Kata in Kubernetes, you need a CRI shim, so you need to find a way to create one for Kata.
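The call sequence for the Pod foo can be sketched as follows. The method names mirror the CRI operations `RunPodSandbox`, `CreateContainer`, and `StartContainer`. Everything else (the two shim classes, their fields, and the `kubelet_sync_pod` helper) is a hypothetical toy model meant only to show that the same calls produce different things on the host.

```python
class DockerLikeShim:
    """Toy CRI shim: every sandbox and container is a process on the host."""

    def __init__(self):
        self.host_containers = []

    def run_pod_sandbox(self, pod):
        self.host_containers.append(f"infra-{pod}")  # the small infra container
        return pod

    def create_container(self, sandbox, name):
        pass  # creation details are omitted in this sketch

    def start_container(self, sandbox, name):
        self.host_containers.append(name)


class KataLikeShim:
    """Toy CRI shim: a sandbox is a VM, and containers live inside that VM."""

    def __init__(self):
        self.vms = {}  # sandbox name -> containers running inside the VM

    def run_pod_sandbox(self, pod):
        self.vms[pod] = []  # boot a lightweight VM for the Pod
        return pod

    def create_container(self, sandbox, name):
        pass  # creation details are omitted in this sketch

    def start_container(self, sandbox, name):
        self.vms[sandbox].append(name)  # namespaces inside the existing VM


def kubelet_sync_pod(shim, pod, containers):
    """Issue the same CRI-style calls regardless of the runtime behind the shim."""
    sandbox = shim.run_pod_sandbox(pod)
    for c in containers:
        shim.create_container(sandbox, c)
        shim.start_container(sandbox, c)


docker, kata = DockerLikeShim(), KataLikeShim()
kubelet_sync_pod(docker, "foo", ["A", "B"])
kubelet_sync_pod(kata, "foo", ["A", "B"])
print(docker.host_containers)  # ['infra-foo', 'A', 'B']
print(kata.vms)                # {'foo': ['A', 'B']}
```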

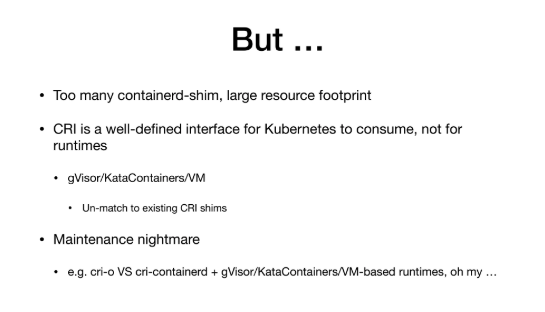

One way is to reuse an existing CRI shim. Which one? For example, CRI-containerd is the CRI shim of containerd. It can respond to CRI requests, so the question is whether these requests can then be translated into Kata operations. This is feasible, and it is one of the ways we use: make KataContainers follow containerd. Its working principle is roughly as follows. containerd has a distinctive design: it runs a containerd shim for each container. Once containers are running, you can see one containerd shim per container on the host.

However, Kata is a container runtime with the Sandbox concept, so Kata needs a Katashim to maintain the mapping between these shims and Kata. Through this mapping, the operations that containerd performs are translated into requests to Kata. This is the method we used before.

But as you can see, this approach has many problems. The most obvious one is that Kata and gVisor both have the Sandbox concept, so the runtime should not start a shim for each container and then map them one by one, which causes significant performance loss. We do not want one shim per container, but one shim per Sandbox.

In addition, CRI serves Kubernetes and reports upward. It helps Kubernetes, but it does not help the Container Runtime. When you do the integration, you may find that your runtime does not fit many of the assumptions of CRI or the way its API is written, especially for VM-based or sandboxed runtimes such as gVisor and KataContainers. Therefore, the integration is more difficult. This is a case of mismatch.

The last problem is that this is very difficult for us to maintain. For example, Red Hat has its own CRI implementation called CRI-O, which is essentially no different from CRI-containerd: both rely on runC to start containers in the end. So why do we need both?

We do not know why. But as a Kata maintainer, I need to write a separate integration for each of them. This is very troublesome, and it means that if there are 100 CRI implementations of this kind, I need to write 100 integrations whose functions are all duplicated.

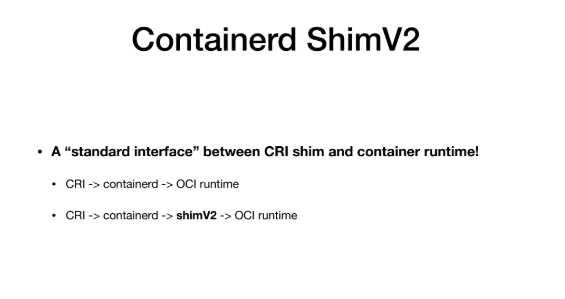

Today, I propose Containerd ShimV2 to you. As mentioned earlier, CRI determines the relationship between Runtime and Kubernetes, so can we have a more detailed API to determine what the real interface between the CRI shim and the following Runtime is like?

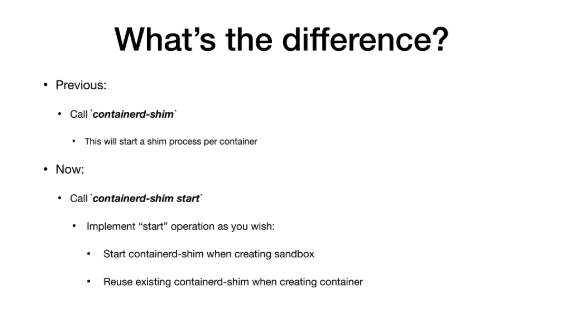

This is why ShimV2 emerged. It is a standard interface between the CRI shim and the container runtime behind containerd. Previously, the path went directly from CRI to containerd and then to runC. Now, it goes from CRI to containerd, then to ShimV2, and from ShimV2 to runC or KataContainers. What are the benefits?

The biggest difference is that, in this way, you can specify one shim for each Pod. At the very beginning, containerd simply started a containerd shim itself to respond to each request. Our new API, however, is written as containerd shim start and stop, and how the start and stop operations are implemented is up to you.

Now, as a maintainer of KataContainers, I can implement the operations in the following way. When "start" is called to create the Sandbox, I start a containerd shim. However, when the API is called next for the containers, which corresponds to the Container API in CRI, I no longer start a new shim. I reuse the one created for the Sandbox, which gives your implementation great flexibility.

Now, you may find that the entire implementation has changed. With this API, containerd no longer insists on starting a containerd shim for each container; that decision is yours to implement. In my implementation, I only create a containerd-shim-v2 for the Sandbox, and then I send all subsequent container-level operations to this containerd-shim-v2. The Sandbox is reused, so this is very different from the previous approach.
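The reuse logic can be sketched like this. It is a toy model in Python, not the Kata shim itself: the class name, its fields, and the returned address string are assumptions for illustration. The idea it shows is that "start" creates a shim only when the Sandbox has none yet, and otherwise hands back the existing one.

```python
class SandboxAwareShimManager:
    """Toy model of a ShimV2-style 'start' that reuses one shim per sandbox."""

    def __init__(self):
        self.shims = {}         # sandbox id -> ids served by that sandbox's shim
        self.shims_started = 0  # how many shim processes were actually launched

    def start(self, container_id, sandbox_id):
        if sandbox_id not in self.shims:
            # First call for this sandbox: launch a new shim.
            self.shims[sandbox_id] = []
            self.shims_started += 1
        # Later calls land here directly and reuse the existing shim.
        self.shims[sandbox_id].append(container_id)
        return f"shim-for-{sandbox_id}"  # stands in for the shim's address


manager = SandboxAwareShimManager()
addresses = [manager.start(cid, "foo") for cid in ("foo", "A", "B")]

print(manager.shims_started)  # 1, where a shim per container would give 3
print(addresses)              # the same address three times
```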

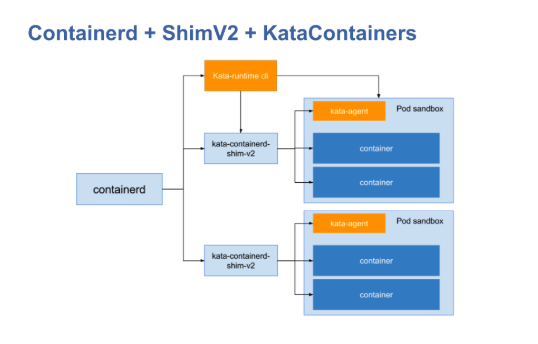

Now, to summarize the contents of this figure, you may find that our implementation is like this:

First, you still use the original CRI-containerd, with runC installed as before, and you additionally install KataContainers on that machine. Next, Kata provides an implementation called kata-Containerd-Shimv2. Previously, we needed to write a large amount of CRI-related code, but we no longer need to. Now, we only focus on how to connect containerd to KataContainers, which is the so-called implementation of the Shimv2 API. This is what we need to do. Specifically, it is a series of APIs related to running a container.
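One practical detail of this wiring is how containerd finds the shim for a runtime. As far as I understand the containerd runtime v2 convention, a runtime type such as `io.containerd.runc.v2` is resolved to a binary named `containerd-shim-runc-v2` on the `PATH`. The helper below is a sketch of that naming rule in Python, written for illustration and not taken from containerd's code.

```python
def shim_binary_name(runtime_type: str) -> str:
    """Map a runtime type like 'io.containerd.kata.v2' to its shim binary name."""
    parts = runtime_type.split(".")
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"unexpected runtime type: {runtime_type!r}")
    # The last two components are the runtime name and the shim API version.
    name, version = parts[-2], parts[-1]
    return f"containerd-shim-{name}-{version}"


print(shim_binary_name("io.containerd.runc.v2"))  # containerd-shim-runc-v2
print(shim_binary_name("io.containerd.kata.v2"))  # containerd-shim-kata-v2
```

So adding a new runtime is mostly a matter of shipping one binary that speaks the ShimV2 API and follows this naming.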

For example, I can perform "create" and "start" operations, and all of these operations are mapped onto Shimv2 for implementation, without having to consider how to map and implement CRI. The high flexibility in the past led to a situation where a lot of CRI shims exist, which is actually a bad thing. Many political and other non-technical reasons are involved, and these are not matters that we as technicians should care about. You just need to think about how to connect to Shimv2 now.

Next, I will show you a Demo of calling KataContainers through CRI + containerd shimv2 (the specific content is omitted)
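The demo itself is not reproduced in these notes. As a rough, non-authoritative sketch of how a Pod can be pointed at Kata with RuntimeClass, the Python snippet below builds two manifests as plain dictionaries. The names `kata` and `foo`, the image, and the command are assumptions. The `node.k8s.io/v1` API version reflects current Kubernetes rather than the alpha version available at the time of the talk, and the handler name must match a runtime configured in containerd on the node.

```python
import json

# RuntimeClass: maps a cluster-level name to a runtime handler on the node.
runtime_class = {
    "apiVersion": "node.k8s.io/v1",
    "kind": "RuntimeClass",
    "metadata": {"name": "kata"},
    "handler": "kata",  # assumed to match a containerd runtime entry
}

# Pod: selects the runtime by name; everything else is an ordinary Pod spec.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "foo"},
    "spec": {
        "runtimeClassName": "kata",
        "containers": [
            {"name": "a", "image": "busybox", "command": ["sleep", "3600"]},
            {"name": "b", "image": "busybox", "command": ["sleep", "3600"]},
        ],
    },
}

for manifest in (runtime_class, pod):
    print(json.dumps(manifest, indent=2))
```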

The current core design idea of Kubernetes is to strip out the complex features that used to be invasive to the main code and decouple them from the core library one by one, by splitting them into separate interfaces and plug-ins. In this process, CRI was the first calling interface in Kubernetes to be split out as a plug-in. This article mainly introduces another way of integrating a Container Runtime based on CRI, namely, CRI + containerd shimv2. In this way, you do not need to write a CRI implementation (CRI shim) for your Container Runtime. Instead, you can directly reuse containerd's support for CRI, and then use containerd ShimV2 to connect to a specific Container Runtime (such as runC). Currently, this integration method has become the mainstream way for the community to connect to lower-layer Container Runtimes. Many container runtimes based on independent kernels or virtualization, such as KataContainers, gVisor, and Firecracker, have also started to connect seamlessly to Kubernetes through ShimV2 and the containerd project.

As we all know, within Alibaba, the Container Runtime used by the Sigma/Kubernetes system is mainly PouchContainer. In fact, PouchContainer uses containerd as its main Container Runtime management engine and implements its own enhanced CRI interface to meet Alibaba's requirements for strongly isolated, production-grade containers. Therefore, after the ShimV2 API was released in the containerd community, the PouchContainer project took the lead in exploring how to connect to lower-layer Container Runtimes through containerd ShimV2, so that other types of container runtimes, especially virtualized containers, can be integrated more efficiently. Since it was open sourced, the PouchContainer team has been actively promoting the development and evolution of the upstream containerd community. In this CRI + containerd ShimV2 revolution, PouchContainer is once again at the forefront of container projects.
