Yan Zhijie is a Senior Algorithm Expert and the Chief Scientist of Man-Machine Interaction at Alibaba Cloud. His research covers voice recognition, voice synthesis, speaker recognition and verification, OCR and handwriting recognition, and machine learning algorithms. He has long served on the expert panels of top academic conferences and journals in voice recognition, and he holds several U.S. and PCT patents.

The following article summarizes his lecture on the acoustic and language models adopted by Alibaba Cloud Voice Recognition Technology, including the LC-BLSTM acoustic model, LFR-DFSMN acoustic model, and NN-LM language model.

Voice recognition plays an important role in AI and man-machine interaction. It provides the voice interaction capabilities of smart IoT home appliances and also serves public services and smart government applications.

A modern voice recognition system typically consists of three core components: an acoustic model, a language model, and a decoder. Although recent research has explored end-to-end voice recognition systems, this three-component architecture remains the most popular and widely used. The acoustic model maps the voice input to probabilities over acoustic units. The language model estimates how probable different word sequences are, so that recognized sentences read more like natural text. The decoder combines the acoustic unit probabilities with the language model scores, searches over candidate word sequences, and outputs the one with the highest overall probability as the final recognition result.
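The decoder's final choice can be illustrated with a toy example. The sketch below assumes the candidate sentences already exist as an n-best list, each with an acoustic and a language model log-probability, and combines them log-linearly; a real decoder instead runs a pruned search over a large graph. The parameter `lm_weight` and all scores are hypothetical, and the sentences are borrowed from the language model example later in this article.

```python
import math
from typing import List, Tuple

# (text, acoustic log-probability, language model log-probability)
Hypothesis = Tuple[str, float, float]

def combined_score(am_logprob: float, lm_logprob: float, lm_weight: float) -> float:
    """Log-linear fusion: acoustic score plus weighted language model score."""
    return am_logprob + lm_weight * lm_logprob

def best_hypothesis(hyps: List[Hypothesis], lm_weight: float = 1.0) -> str:
    """Return the text of the hypothesis with the highest combined score."""
    return max(hyps, key=lambda h: combined_score(h[1], h[2], lm_weight))[0]

# Made-up scores: the acoustic model slightly prefers the wrong sentence,
# while the language model strongly prefers the right one.
hyps = [
    ("shanghai workers are powerful", math.log(0.30), math.log(1e-4)),
    ("shanghai were curse are powerful", math.log(0.35), math.log(1e-8)),
]
print(best_hypothesis(hyps, lm_weight=0.8))  # shanghai workers are powerful
```

With `lm_weight=0.0` the acoustic score alone decides and the wrong sentence wins, which shows why the decoder needs both models.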

Voice recognition has benefited from deep learning, which has become increasingly popular in recent years. Replacing the traditional HMM-GMM acoustic model with an HMM-DNN acoustic model improves voice recognition accuracy by 20%. An NN-LM language model can be combined with a traditional N-Gram language model to further improve accuracy. Acoustic modeling has proven a more natural fit for deep neural networks than language modeling has, and it has therefore attracted more researchers.

This article describes the acoustic and language models adopted by Alibaba Cloud Voice Recognition Technology, including the LC-BLSTM acoustic model, LFR-DFSMN acoustic model, and NN-LM language model. The LC-BLSTM acoustic model improves on the traditional BLSTM model and delivers both high accuracy and low latency. The LFR-DFSMN acoustic model improves on RNN-based acoustic models; its careful design makes training more stable and recognition more accurate. The NN-LM language model is a more recent improvement over the traditional N-Gram language model.

A fully connected Deep Neural Network (DNN) increases the number of layers and nodes in the network to expand its capability to abstract and model complex data. However, DNN has shortcomings. For example, a DNN usually incorporates contextual information by splicing neighboring frames onto the current voice frame, which is not the best way to capture correlations within a voice sequence. A recurrent neural network (RNN) solves this problem to some extent, because its recurrent connections between network nodes let it exploit correlations across the sequence. Researchers then proposed the long short-term memory recurrent neural network (LSTM-RNN), which effectively alleviates the gradient explosion and gradient vanishing that occur in simple RNNs. Later, researchers extended LSTM to bidirectional LSTM-RNN (BLSTM-RNN) for acoustic modeling, so that both past and future context are taken into account.
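Frame splicing can be sketched as follows: each frame's feature vector is concatenated with a fixed number of past and future neighbors. The function name and the edge policy (repeating the first or last frame) are illustrative assumptions.

```python
from typing import List

Frame = List[float]

def splice_frames(frames: List[Frame], left: int, right: int) -> List[Frame]:
    """Concatenate each frame with `left` past and `right` future neighbors.

    Frames beyond the utterance edges are replaced by the first/last frame.
    """
    num = len(frames)
    spliced = []
    for t in range(num):
        window: Frame = []
        for offset in range(-left, right + 1):
            idx = min(max(t + offset, 0), num - 1)  # clamp at utterance edges
            window.extend(frames[idx])
        spliced.append(window)
    return spliced

# Three 2-dimensional frames, spliced with one frame of context on each side.
feats = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
print(splice_frames(feats, left=1, right=1)[0])  # [1.0, 1.0, 1.0, 1.0, 2.0, 2.0]
```

The window is fixed: anything more than `left` or `right` frames away is invisible to the DNN, which is the limitation that recurrent models address.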

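Compared with DNN, BLSTM improves voice recognition accuracy by 15% to 20%. However, because it models the entire utterance, BLSTM has two prominent problems: training converges slowly, and decoding latency is high, since the backward direction cannot be computed until the whole utterance has been received.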

To address these two problems, the academic community proposed the context-sensitive-chunk BLSTM (CSC-BLSTM) method and its improved version, the latency-controlled BLSTM (LC-BLSTM) method. Building on CSC-BLSTM and LC-BLSTM, Alibaba Cloud combines an LC-BLSTM-DNN hybrid structure with training and optimization methods such as multi-machine multi-GPU training and 16-bit quantization for acoustic modeling. Compared with the DNN model, the LC-BLSTM-DNN model reduces the recognition error rate by 17% to 24% relative.

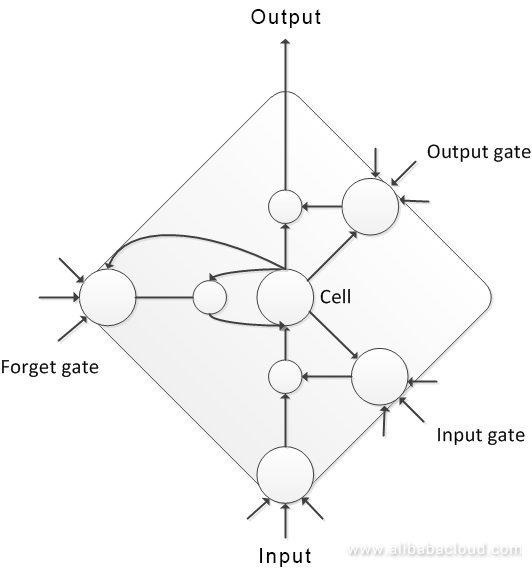

A typical LSTM node consists of a cell and three gates: an input gate, a forget gate, and an output gate. The input and output nodes and the cell are connected to each gate, the input gate and forget gate are further connected to the cell, and the cell has a self-connection. By controlling the states of the gates, the network controls how information is stored over long and short time spans and how errors propagate.
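One LSTM time step can be sketched in pure Python as below. The cell-to-gate connections described above are modeled as element-wise peephole weights (`pi`, `pf`, `po`), following the common Graves-style formulation; the parameter names, sizes, and uniform initialization are illustrative assumptions, not Alibaba's implementation.

```python
import math
import random
from typing import Dict, List, Tuple

Vec = List[float]
Mat = List[List[float]]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def matvec(w: Mat, v: Vec) -> Vec:
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def lstm_step(x: Vec, h_prev: Vec, c_prev: Vec, p: Dict[str, list]) -> Tuple[Vec, Vec]:
    """One LSTM time step with peephole (cell-to-gate) connections."""
    def pre(gate: str) -> Vec:  # W_gate x + R_gate h_prev + b_gate
        wx, rh = matvec(p["W" + gate], x), matvec(p["R" + gate], h_prev)
        return [a + b + bias for a, b, bias in zip(wx, rh, p["b" + gate])]
    i = [sigmoid(z + w * c) for z, w, c in zip(pre("i"), p["pi"], c_prev)]  # input gate
    f = [sigmoid(z + w * c) for z, w, c in zip(pre("f"), p["pf"], c_prev)]  # forget gate
    g = [math.tanh(z) for z in pre("g")]                                    # candidate input
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]     # cell self-connection
    o = [sigmoid(z + w * ct) for z, w, ct in zip(pre("o"), p["po"], c)]    # output gate
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

def init_params(d_in: int, d_hid: int, seed: int = 0) -> Dict[str, list]:
    """Small random weights; sizes and initialization are illustrative only."""
    rng = random.Random(seed)
    def mat(rows: int, cols: int) -> Mat:
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    p: Dict[str, list] = {}
    for gate in "ifgo":
        p["W" + gate], p["R" + gate], p["b" + gate] = mat(d_hid, d_in), mat(d_hid, d_hid), [0.0] * d_hid
    for peep in ("pi", "pf", "po"):
        p[peep] = [rng.uniform(-0.1, 0.1) for _ in range(d_hid)]
    return p

params = init_params(d_in=3, d_hid=4)
h, c = [0.0] * 4, [0.0] * 4
for frame in ([0.1, 0.2, 0.3], [0.0, -0.1, 0.5]):
    h, c = lstm_step(frame, h, c, params)
```

Setting the forget-gate bias very high and the input-gate bias very low keeps the cell state essentially unchanged from step to step, which is the long-term storage the gates make possible.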

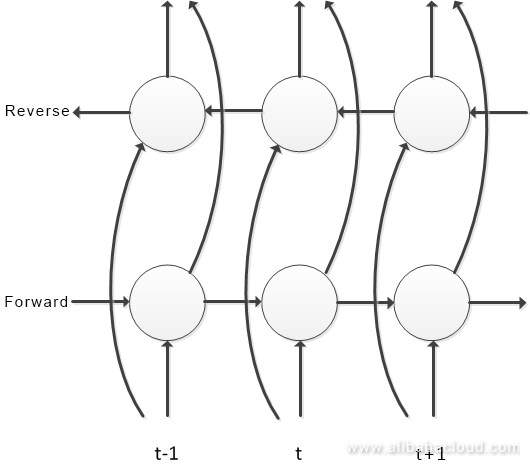

Like DNN layers, LSTM layers can be stacked to create a deep LSTM. To make better use of context, BLSTM layers can be stacked in the same way to create a deep BLSTM, whose structure is shown in the following figure. The network passes information along the timeline in two directions, forward and backward, so the computation at each frame depends on the results for all frames before and after it. When processing a voice signal as a time sequence, the model therefore takes full account of the context of the current frame, which greatly improves the accuracy of phoneme state classification.

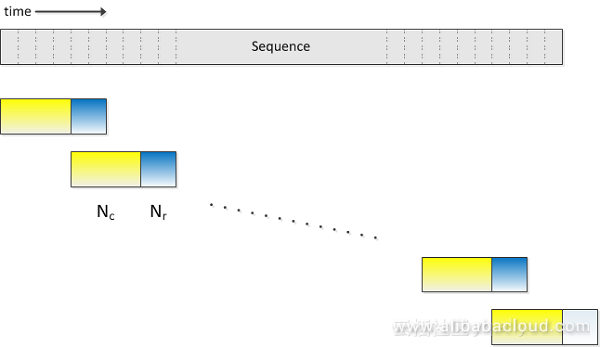

Because the standard BLSTM models the entire utterance, training converges slowly, and decoding suffers from high latency and poor real-time performance. Alibaba Cloud uses LC-BLSTM to solve these problems. Unlike standard BLSTM, which trains and decodes on whole utterances, LC-BLSTM uses an update method similar to truncated BPTT, together with special handling of the data and of the intermediate cell states. As shown in the following figure, each training update uses a short segment of data consisting of a central chunk plus a chunk appended on its right. The right chunk is used only to compute intermediate cell states, and errors are propagated only within the central chunk. In the forward direction, the cell state at the end of the previous segment's central chunk serves as the initial state of the next segment. In the backward direction, the cell state is reset to 0 at the start of each segment. This method accelerates network convergence and improves performance. Decoding processes data in essentially the same way as training, except that the sizes of the central chunk and the right chunk can be adjusted as needed and do not have to match the training configuration.
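The chunking scheme can be sketched for a single bidirectional layer, with a simple scalar recurrence standing in for each LSTM direction. The names `n_center` and `n_right`, the step functions, and the scalar states are illustrative assumptions; a full bidirectional pass is included for comparison.

```python
from typing import Callable, List, Tuple

Step = Callable[[float, float], float]  # (state, input) -> new state; stands in for an LSTM cell

def full_bidirectional(xs: List[float], fwd: Step, bwd: Step) -> List[Tuple[float, float]]:
    """Standard BLSTM-style pass: both directions see the whole utterance."""
    f_states, s = [], 0.0
    for x in xs:
        s = fwd(s, x)
        f_states.append(s)
    b_states, s = [0.0] * len(xs), 0.0
    for t in reversed(range(len(xs))):
        s = bwd(s, xs[t])
        b_states[t] = s
    return list(zip(f_states, b_states))

def latency_controlled(xs: List[float], fwd: Step, bwd: Step,
                       n_center: int, n_right: int) -> List[Tuple[float, float]]:
    """LC-BLSTM-style pass over central chunks plus right-context chunks (single layer).

    Forward: the state at the end of each central chunk initializes the next chunk.
    Backward: the state restarts from 0 at each segment's right edge.
    Outputs are produced only for central-chunk frames.
    """
    out, f_state = [], 0.0
    for start in range(0, len(xs), n_center):
        center_end = min(start + n_center, len(xs))
        right_end = min(center_end + n_right, len(xs))
        f_states = []
        for x in xs[start:center_end]:
            f_state = fwd(f_state, x)
            f_states.append(f_state)
        b_state, b_states = 0.0, {}
        for t in reversed(range(start, right_end)):  # right context feeds the backward state only
            b_state = bwd(b_state, xs[t])
            b_states[t] = b_state
        out.extend((f_states[t - start], b_states[t]) for t in range(start, center_end))
    return out
```

The output for any frame depends on at most `n_center + n_right` frames of input from its chunk onward, so latency is bounded; when the right context covers the rest of the utterance, the sketch reproduces the full bidirectional pass exactly.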

FSMN is a recently proposed network structure that effectively models long-range correlations in signals by adding learnable memory blocks to the hidden layers of a feed-forward fully connected neural network (FNN). Compared with LC-BLSTM, FSMN controls latency better, performs better, and requires fewer computing resources. However, a standard FSMN is hard to train in deep structures, and its training suffers from the vanishing gradient problem. Since deep models have proven to have strong modeling capabilities in many fields, we proposed deep FSMN (DFSMN), an improved version of FSMN, and combined it with the low frame rate (LFR) technique to build an efficient acoustic model for real-time voice recognition. Compared with the LFR-LCBLSTM acoustic model launched last year, the new model improves performance by more than 20% and speeds up training and decoding by 2 to 3 times, greatly reducing the computing resources needed to deploy the system.

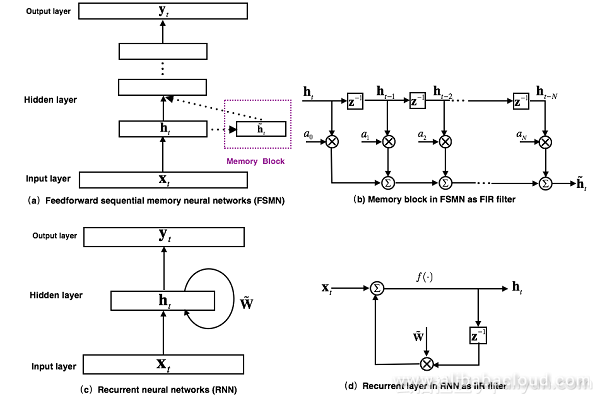

Figure (a) above shows the structure of the original FSMN model. In essence, FSMN is an FNN that adds a memory block to a hidden layer to capture surrounding context and thereby model long-range correlations in time-sequence signals. The memory block uses the tapped-delay-line structure shown in Figure (b) to encode the hidden-layer outputs at the current time and the previous N time steps into a fixed-size representation through a set of coefficients. FSMN borrows from filter design in digital signal processing: any infinite impulse response (IIR) filter can be approximated by a high-order finite impulse response (FIR) filter. From the filter perspective, the recurrent layer of the RNN model in Figure (c) is equivalent to the first-order IIR filter in Figure (d), while the FSMN memory block in Figure (b) is equivalent to a high-order FIR filter. As a result, FSMN models long-range correlations as well as RNN does, while its training is simpler and more stable, because an FIR filter is more stable than an IIR filter.
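The filter view can be made concrete with scalar signals. The sketch below compares a unidirectional scalar memory block, written as an order-N FIR filter, with a first-order IIR recurrence that stands in for a simple recurrent layer (ignoring its nonlinearity). Function names and coefficients are illustrative assumptions.

```python
from typing import List

def fir_memory(h: List[float], coeffs: List[float]) -> List[float]:
    """Unidirectional scalar FSMN memory block: m_t = sum_{i=0}^{N} a_i * h_{t-i}."""
    return [sum(a * h[t - i] for i, a in enumerate(coeffs) if t - i >= 0)
            for t in range(len(h))]

def iir_recurrence(h: List[float], r: float) -> List[float]:
    """First-order IIR filter y_t = h_t + r * y_{t-1}: the filter view of a simple recurrent layer."""
    out, y = [], 0.0
    for x in h:
        y = x + r * y
        out.append(y)
    return out

impulse = [1.0] + [0.0] * 7
print(fir_memory(impulse, [1.0, 0.5, 0.25]))  # [1.0, 0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0]
print(iir_recurrence(impulse, 0.5))            # halves at every step but never reaches 0
```

The FIR response stops after N + 1 frames, while the IIR response never ends. Choosing the FIR coefficients `a_i = r ** i` up to a high enough order reproduces the IIR output on that window, which is the approximation the paragraph relies on.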

FSMN comes in two variants, depending on the memory block's encoding coefficients: scalar FSMN (sFSMN) uses scalars as the memory block encoding coefficients, while vectorized FSMN (vFSMN) uses vectors.

These FSMNs consider only the influence of past information on the current time step, so they are called unidirectional FSMNs. If both past and future information are taken into account for the current time step, the unidirectional FSMN can be extended into a bidirectional FSMN.
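A bidirectional vectorized memory block can be sketched as below: past frames are weighted by look-back coefficient vectors and future frames by look-ahead vectors, element by element. The orders `N1`/`N2` and the zero treatment of out-of-range frames are assumptions for illustration. An sFSMN is the special case where every coefficient vector has identical entries, and a unidirectional FSMN is the case with no look-ahead vectors.

```python
from typing import List

Vec = List[float]

def vfsmn_memory(h: List[Vec], a: List[Vec], c: List[Vec]) -> List[Vec]:
    """Bidirectional vectorized FSMN memory block.

    m_t = sum_{i=0}^{N1} a_i * h_{t-i} + sum_{j=1}^{N2} c_j * h_{t+j}  (element-wise products)
    a: N1 + 1 look-back vectors (a[0] weights the current frame).
    c: N2 look-ahead vectors (c[0] weights frame t + 1).
    Frames outside the utterance contribute nothing.
    """
    T, dim = len(h), len(h[0])
    memory = []
    for t in range(T):
        m = [0.0] * dim
        for i, coef in enumerate(a):
            if t - i >= 0:
                m = [mk + ck * hk for mk, ck, hk in zip(m, coef, h[t - i])]
        for j, coef in enumerate(c, start=1):
            if t + j < T:
                m = [mk + ck * hk for mk, ck, hk in zip(m, coef, h[t + j])]
        memory.append(m)
    return memory

h = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(vfsmn_memory(h, a=[[1.0, 1.0], [0.5, 0.0]], c=[[0.0, 0.25]]))
# [[1.0, 3.0], [3.5, 5.5], [6.5, 6.0]]
```

Each look-ahead vector makes the output at frame t wait for one more future frame, so `N2` directly sets the model's latency.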

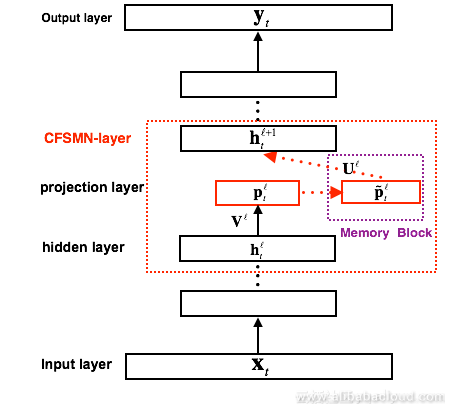

Compared with an FNN, an FSMN feeds the output of the memory block into the next hidden layer as an additional input, which introduces additional model parameters; the more nodes a hidden layer has, the more parameters are added. Combining the idea of low-rank matrix factorization with FSMN yields an improved structure called compact FSMN (cFSMN). The following figure shows the structure of the l-th hidden layer of a cFSMN, including its memory block.

In a cFSMN, a low-dimensional linear projection layer is added after the hidden layer, and the memory block is attached to this projection layer. The cFSMN also modifies the memory block's encoding formula: it explicitly adds the current time step's output to the memory block expression, so that the memory block output alone can serve as the input to the next layer. This effectively reduces the number of model parameters and speeds up network training.
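The parameter saving can be checked with simple arithmetic. The sketch counts the weights that connect one memory-augmented layer to the next, ignoring biases. It assumes a vFSMN feeds both the hidden output and the memory through full matrices, and a cFSMN uses a projection `V`, a memory on the projection whose current-frame term has no coefficient, and an expansion `U`. The layer sizes are hypothetical, and the exact coefficient bookkeeping varies between papers; the total is dominated by the weight matrices either way.

```python
def vfsmn_layer_params(n_hidden: int, n_back: int, n_ahead: int) -> int:
    """vFSMN: W (n_hidden x n_hidden) on h_t, W~ (n_hidden x n_hidden) on m_t,
    plus n_back + 1 + n_ahead memory coefficient vectors of size n_hidden."""
    return 2 * n_hidden * n_hidden + n_hidden * (n_back + 1 + n_ahead)

def cfsmn_layer_params(n_hidden: int, n_proj: int, n_back: int, n_ahead: int) -> int:
    """cFSMN: V (n_proj x n_hidden), U (n_hidden x n_proj), and n_back + n_ahead
    coefficient vectors of size n_proj (the current frame is added with weight 1)."""
    return n_proj * n_hidden + n_hidden * n_proj + n_proj * (n_back + n_ahead)

# Hypothetical sizes chosen only for illustration.
print(vfsmn_layer_params(2048, 10, 10))       # 8431616
print(cfsmn_layer_params(2048, 512, 10, 10))  # 2107392
```

With these sizes the low-rank structure needs roughly a quarter of the weights, because two `2048 x 512` matrices replace two `2048 x 2048` ones.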

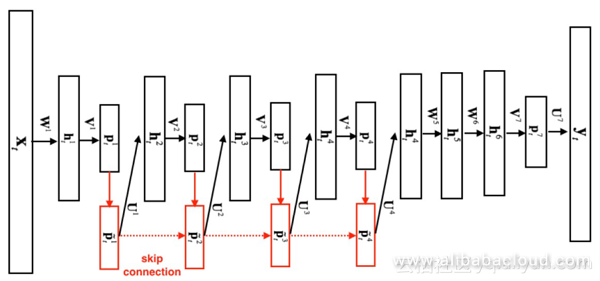

The preceding figure shows the structure of a deep FSMN (DFSMN). The leftmost box is the input layer and the rightmost box is the output layer. By adding skip connections between the memory blocks of a cFSMN, the output of each lower-layer memory block is added directly to the memory block of the layer above. During training, the gradient of a higher-layer memory block is then passed directly to the lower-layer memory blocks, which overcomes the vanishing gradient problem caused by network depth and allows deep networks to be trained stably.
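A DFSMN layer with an identity skip connection can be sketched on one-dimensional features, where every matrix shrinks to a scalar. The toy sizes, the ReLU hidden layer, and the identity form of the skip are assumptions for illustration; the article notes that the skip connection may also apply a linear or nonlinear transformation.

```python
from typing import List

def dfsmn_layer(prev_memory: List[float], u: float, v: float,
                a: List[float], c: List[float]) -> List[float]:
    """One DFSMN layer on one-dimensional features (a toy; real layers use matrices).

    h_t = relu(u * m_prev_t)                                   hidden layer
    p_t = v * h_t                                              linear projection
    m_t = m_prev_t + p_t + sum_i a_i * p_{t-i} + sum_j c_j * p_{t+j}
    The m_prev_t term is the identity skip connection from the layer below.
    """
    T = len(prev_memory)
    p = [v * max(0.0, u * m) for m in prev_memory]
    memory = []
    for t in range(T):
        m = prev_memory[t] + p[t]
        m += sum(ai * p[t - i] for i, ai in enumerate(a, start=1) if t - i >= 0)
        m += sum(cj * p[t + j] for j, cj in enumerate(c, start=1) if t + j < T)
        memory.append(m)
    return memory

def dfsmn_stack(x: List[float], layers: List[dict]) -> List[float]:
    """Apply DFSMN layers in sequence, each reading the memory of the layer below."""
    m = list(x)
    for params in layers:
        m = dfsmn_layer(m, **params)
    return m

x = [0.5, -1.0, 2.0]
# With every projection switched off, the skip path alone carries the input through 20 layers.
silent = [dict(u=1.0, v=0.0, a=[0.5], c=[0.5])] * 20
print(dfsmn_stack(x, silent))  # [0.5, -1.0, 2.0]
```

Because each layer's memory contains the lower layer's memory unchanged, the derivative of the top memory with respect to the bottom one always includes a direct identity term, however deep the stack.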

Compared with cFSMN, DFSMN enables very deep networks to be trained thanks to its skip connections. In a cFSMN, each hidden layer is split into two layers by low-rank matrix factorization, so a network with four cFSMN layers and two DNN layers already has 13 layers in total. Adding more cFSMN layers leads to vanishing gradients and unstable training. The proposed DFSMN uses skip connections to avoid the vanishing gradient problem in deep networks, which keeps their training stable. Note that skip connections can be added not only between adjacent layers but also between non-adjacent layers, and the skip connection itself can apply a linear or nonlinear transformation. In our experiments, we trained DFSMNs with dozens of layers and achieved much better performance than with cFSMNs.

Compared with FSMN, cFSMN reduces the number of model parameters and performs better, and the proposed DFSMN, built on cFSMN, performs better still. The following table compares the performance of BLSTM, cFSMN, and DFSMN acoustic models on a 2,000-hour English task.

The table shows that on the 2,000-hour task, the DFSMN model achieves a 14% lower error rate than the BLSTM model, a significant improvement in acoustic model performance.

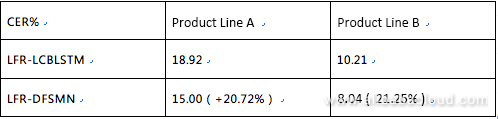

The input to a traditional acoustic model is the acoustic features extracted from each frame of the voice signal, where each frame typically covers 10 ms, and each input frame has a corresponding output target. A recent study proposed a low frame rate (LFR) modeling scheme, which binds temporally adjacent voice frames together as one input and predicts the average of their output targets. Experiments showed that binding three (or more) adjacent frames does not degrade model performance. The input and output rates thus drop to one third or less, which greatly improves the efficiency of acoustic scoring and decoding in a voice recognition system. Combining LFR and DFSMN, we built an LFR-DFSMN acoustic model for voice recognition. After several groups of experiments, we chose a DFSMN with ten cFSMN layers and two DNN layers, using LFR input and output, which reduces the frame rate to one third. The following table compares its recognition results with those of our best released LC-BLSTM baseline.

Thanks to LFR, recognition runs three times as fast. The preceding table shows that in large-scale industrial applications, the LFR-DFSMN model achieves a 20% lower error rate than the LFR-LC-BLSTM model, showing better modeling capability for large-scale data.
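LFR input and target preparation can be sketched as follows, binding every three 10 ms frames so that the network runs once per 30 ms. Reading "average output target" as the mean of the group's one-hot labels is our interpretation, and the tail-padding policy and function names are assumptions.

```python
from typing import List

def lfr_inputs(frames: List[List[float]], n: int = 3) -> List[List[float]]:
    """Concatenate every n consecutive frames into one LFR input frame.

    A short final group is padded by repeating the last frame (an assumed policy).
    """
    out = []
    for start in range(0, len(frames), n):
        group = [frames[min(start + k, len(frames) - 1)] for k in range(n)]
        out.append([value for frame in group for value in frame])
    return out

def lfr_targets(labels: List[int], num_classes: int, n: int = 3) -> List[List[float]]:
    """Average the one-hot targets of each group of n frames into one soft target."""
    out = []
    for start in range(0, len(labels), n):
        group = labels[start:start + n]
        soft = [0.0] * num_classes
        for label in group:
            soft[label] += 1.0 / len(group)
        out.append(soft)
    return out

frames = [[float(t)] for t in range(7)]  # seven 10 ms frames, one feature each
print(lfr_inputs(frames))                # [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 6.0, 6.0]]
```

Seven input frames become three network steps, which is where the roughly threefold reduction in acoustic scoring and decoding work comes from.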

A language model (LM) models a language. A linguistic expression can be viewed as a sequence of characters, where different sequences express different meanings; here the units are words. The language modeling task is to estimate the probability, or plausibility, of a given sequence.

P(Shanghai workers are powerful)>P(Shanghai were curse are powerful)

Take this sentence as an example. A language model judges whether "Shanghai workers are powerful" or "Shanghai were curse are powerful" is more plausible, and clearly the first sentence is more probable. We want the language model to assign probabilities that match this expectation: the first sentence should receive a higher probability than the second.
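The probability a language model estimates can be written with the chain rule. The second step below is the Markov assumption used by the N-gram models discussed next, which keep only the previous N - 1 words of history:

$$
P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1}) \approx \prod_{i=1}^{n} P(w_i \mid w_{i-N+1}, \ldots, w_{i-1})
$$

For example, ignoring sentence-boundary markers, a bigram model (N = 2) scores the first sentence as P(Shanghai) P(workers | Shanghai) P(are | workers) P(powerful | are).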

The traditional n-gram model, based on word frequency statistics, simplifies the model structure and computation through the Markov assumption. It is estimated by counting and applied by table lookup. Thanks to simple estimation, stable performance, and convenient computation, it has been in use for more than thirty years. However, the Markov assumption truncates the modeled history, so long histories cannot be captured. In addition, estimation based on word frequencies makes the model non-smooth, and low-frequency words may be poorly estimated. With the third wave of neural networks (NNs), researchers began applying NNs to language modeling.
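Estimation by counting can be sketched with a bigram model. The tiny training corpus is made up, and add-one (Laplace) smoothing is used only because it is the simplest fix for unseen bigrams; production systems usually rely on stronger smoothing such as Kneser-Ney.

```python
import math
from collections import Counter
from typing import List

class BigramLM:
    """Bigram model estimated by counting, with add-one (Laplace) smoothing."""

    def __init__(self, sentences: List[str]):
        self.histories, self.bigrams = Counter(), Counter()
        for s in sentences:
            words = ["<s>"] + s.lower().split() + ["</s>"]
            self.histories.update(words[:-1])
            self.bigrams.update(zip(words[:-1], words[1:]))
        self.vocab = {w for s in sentences for w in s.lower().split()} | {"</s>"}

    def logprob(self, sentence: str) -> float:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        v = len(self.vocab) + 1  # +1 counts one <unk> type shared by all unseen words
        return sum(
            math.log((self.bigrams[(prev, cur)] + 1) / (self.histories[prev] + v))
            for prev, cur in zip(words[:-1], words[1:])
        )

corpus = ["Shanghai workers are powerful",
          "workers in Shanghai are hard working",
          "the workers are powerful"]
lm = BigramLM(corpus)
good = lm.logprob("Shanghai workers are powerful")
bad = lm.logprob("Shanghai were curse are powerful")
print(good > bad)  # True
```

The history is cut to one word, so the model cannot tell whether "powerful" was preceded by "Shanghai workers" or by anything else ending in "are"; that is the truncation the paragraph describes.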

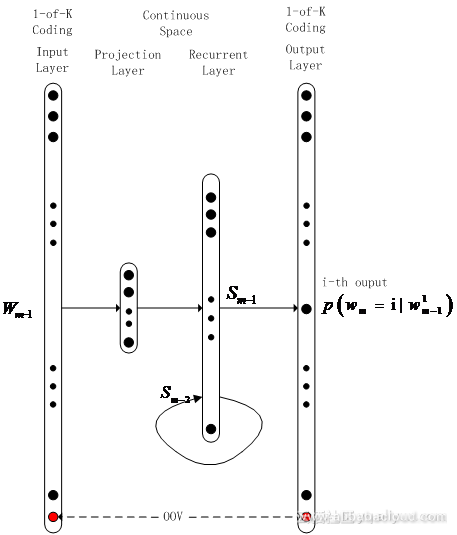

RNN is a typical modeling structure that can, in theory, model sequences of unlimited length through recursion, with full connections between layers enabling seamless modeling. This overcomes the weakness of n-gram models in sequence modeling. Researchers proposed LSTM to overcome the shortcomings of the basic RNN architecture and further improve modeling performance.

Large-scale language modeling systems based on NNs must cope with the storage and computing resources that large vocabularies demand. Real-life online systems typically use large vocabularies, and as the vocabulary grows, the storage and computation of the basic RNN architecture grow in proportion. Researchers have tried several approaches. The most direct solution is to shrink the vocabulary by clustering words, which greatly reduces its size but degrades performance. Filtering out low-frequency words is an alternative, but it also degrades performance. We found that the main factor limiting speed and performance is the number of output-layer nodes, and developed a better method. The input layer keeps the large vocabulary, because the projection layer keeps the cost of a large input layer low. Only the output-layer vocabulary is compressed, which minimizes the loss, filters out low-frequency words, ensures that model nodes are fully trained, and improves performance.
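The split between a full input vocabulary and a compressed output vocabulary can be sketched as follows. How the authors handle words outside the output list is not stated; a single shared `<OOS>` output node is one common choice and is an assumption here, as are the function names.

```python
from collections import Counter
from typing import Dict, List, Tuple

def build_vocabularies(corpus: List[List[str]],
                       output_size: int) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Keep every word at the input; keep only the most frequent words at the output.

    Words outside the output shortlist share one <OOS> node (a hypothetical choice).
    """
    counts = Counter(word for sentence in corpus for word in sentence)
    input_vocab = {word: i for i, (word, _) in enumerate(counts.most_common())}
    shortlist = [word for word, _ in counts.most_common(output_size - 1)]
    output_vocab = {word: i for i, word in enumerate(shortlist)}
    output_vocab["<OOS>"] = len(output_vocab)
    return input_vocab, output_vocab

def output_id(word: str, output_vocab: Dict[str, int]) -> int:
    """Map a target word to its output node, falling back to <OOS>."""
    return output_vocab.get(word, output_vocab["<OOS>"])

corpus = [["the", "cat", "sat"], ["the", "dog", "sat"], ["the", "cat", "ran"]]
inp, out = build_vocabularies(corpus, output_size=3)
print(len(inp), len(out))  # 5 3
```

The per-step output cost scales with `output_size` times the hidden dimension, so shrinking only the output list attacks the dominant cost while rare words can still be read at the input.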

Compressing the vocabulary improves modeling performance and reduces storage and computation. Because the compression rate is limited, however, computation must be reduced further by other means. Several methods are available. LightRNN, for example, uses a clustering-like method that places the vocabulary in a 2D table through embeddings, so each word is identified by a row and a column; the output only has to cover the rows and columns, which cuts computation to roughly the square root of the original. When there are many output nodes, the softmax is a major source of computation, because its denominator sums over all nodes. If the denominator is replaced by a constant, only the required nodes need to be computed, which speeds up testing. Variance regularization makes this possible: it greatly accelerates the forward pass with minimal accuracy loss, provided the training speed is acceptable. To accelerate training as well, sampling methods such as NCE, importance sampling, and BlackOut can be used, where only the positive samples (nodes labeled 1) and negative samples drawn from a sampling distribution are computed during training. This avoids computing every output node, and the speed-up is significant.
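The constant-denominator idea can be sketched as below. The sketch assumes variance regularization adds the batch variance of the log normalizer log Z to the training loss, and that the constant used at test time is an estimate of log Z from training; the function names are hypothetical, and the sampling-based training methods are not shown.

```python
import math
from typing import List

def log_partition(scores: List[float]) -> float:
    """log Z = log(sum_k exp(s_k)), computed stably by subtracting the maximum."""
    top = max(scores)
    return top + math.log(sum(math.exp(s - top) for s in scores))

def exact_logprob(scores: List[float], word: int) -> float:
    """Full softmax: needs the score of every output node."""
    return scores[word] - log_partition(scores)

def approx_logprob(scores: List[float], word: int, log_z_const: float) -> float:
    """Constant denominator: in a real network only scores[word] would be computed."""
    return scores[word] - log_z_const

def variance_penalty(batch_scores: List[List[float]]) -> float:
    """Variance of log Z over a batch; adding gamma * penalty to the loss pushes log Z toward a constant."""
    log_zs = [log_partition(s) for s in batch_scores]
    mean = sum(log_zs) / len(log_zs)
    return sum((z - mean) ** 2 for z in log_zs) / len(log_zs)

rows = [[1.0, 2.0, 3.0], [3.0, 1.0, 2.0]]
const = log_partition(rows[0])  # in practice estimated on training data
print(exact_logprob(rows[1], 0), approx_logprob(rows[1], 0, const))
```

When training drives the penalty toward zero, every row has nearly the same log Z, and subtracting the constant gives almost the exact log-probability at the cost of a single output node.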

Imagine a developer building an intelligent telephone customer service or intelligent conference system who needs voice recognition to convert speech into text. The developer faces a dilemma. One option is to spend a lot of time and money building voice recognition from scratch, but accumulating technology takes a long time in an AI field where internet giants have invested enormous human, material, and financial resources. The other option is to use the out-of-the-box, one-size-fits-all voice recognition interfaces that internet giants offer online. This option takes less time, but the developer cannot be sure how accurate the speech-to-text conversion will be, because these providers rarely optimize for the scenarios the developer cares about.

Is there a way to obtain the best voice recognition results with minimal investment? The answer is yes. Building on the industry-leading intelligent voice interaction technology developed by Alibaba DAMO Academy, Alibaba Cloud changes the supply model of traditional voice technology providers: through cloud-based self-learning for voice recognition, it gives developers a set of methods to optimize recognition for their own service scenarios. Developers can quickly learn to apply voice recognition through self-service learning while drawing on the resources of a large internet company, and can easily achieve industry-leading recognition accuracy in their scenarios. This is a new supply model for voice recognition in the era of cloud computing.

Like other AI technologies, voice recognition depends on three core elements: algorithms, computing power, and data. Building on the intelligent voice interaction technology developed by Alibaba DAMO Academy, Alibaba Cloud has been pushing algorithms forward in the international research community and has open-sourced its latest research result, the DFSMN acoustic model, so that researchers around the world can reproduce the best results and keep improving on them.

Computing power is an intrinsic strength of cloud computing. On the Alibaba Cloud ODPS-PAI platform, we built a hybrid CPU, GPU, FPGA, and NPU training and serving platform optimized for voice recognition, which handles the massive volume of voice recognition requests on Alibaba Cloud. As for data, we provide out-of-the-box scenario models trained on massive data, covering e-commerce, customer service, government, and mobile phone input.

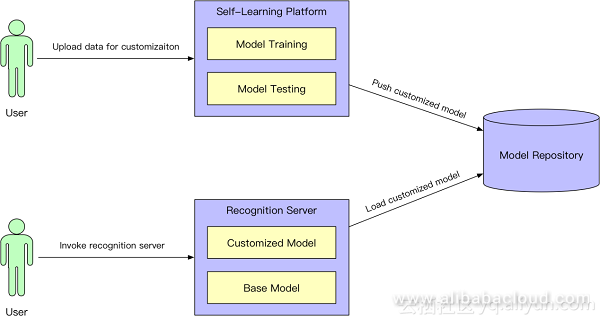

Out-of-the-box models must be customized and optimized to achieve the best accuracy in a developer's own scenarios. Such customization is routine work for voice technology providers, but they cannot guarantee its cost, duration, or controllability. Alibaba Cloud provides a self-learning platform with which developers can customize, optimize, and launch voice recognition models quickly and at low cost. Building on powerful cloud infrastructure, Alibaba Cloud has extensively reworked the tool platforms, services, and technologies used to customize Voice Messaging Service. Developers only need the simple self-learning tools provided by Alibaba Cloud, together with their own scenario knowledge and data, to achieve the best results in specific scenarios and keep iterating as needed, without worrying about the underlying technologies and services.

Alibaba Cloud Intelligent Voice Self-learning Platform provides a one-click, self-service voice optimization solution with revolutionary features. It greatly lowers the barrier to intelligent voice optimization, allowing business staff without technical expertise to significantly improve voice recognition accuracy in their services.

Alibaba Cloud Intelligent Voice Self-learning Platform can optimize, test, and launch service-tailored models within minutes and optimize service-related buzzwords in real time, cutting the delivery period of traditional customization and optimization, which otherwise lasts several weeks or months.

The optimization effect of Alibaba Cloud Intelligent Voice Self-learning Platform has been fully verified by many internal and external partners and projects. It has helped many projects resolve usability issues and achieve results that competitors using traditional optimization methods could not.

For example, developers can use the following self-learning methods to customize models in fields of their interest:

Service-related buzzword customization

Many dedicated scenarios require better recognition of special words. (Note: errors occur in two modes. In Mode I, other words are mistakenly recognized as special words; in Mode II, special words are mistakenly recognized as other words.) Real-time buzzword loading lets you set levels for buzzwords in real time, enhancing their recognition.
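The article does not describe how real-time buzzword (often called hotword) loading works internally. As a generic illustration only, the sketch treats each buzzword's level as a log-score bonus applied when re-ranking an n-best list; the function name, data, and bonus scheme are hypothetical, and a production decoder would more likely apply such a bonus during the search itself.

```python
from typing import Dict, List, Tuple

def boost_buzzwords(nbest: List[Tuple[str, float]],
                    levels: Dict[str, float]) -> List[Tuple[str, float]]:
    """Add each buzzword's bonus (its 'level') to a hypothesis' log score, then re-rank."""
    rescored = []
    for text, score in nbest:
        bonus = sum(levels.get(word, 0.0) for word in text.split())
        rescored.append((text, score + bonus))
    return sorted(rescored, key=lambda item: item[1], reverse=True)

nbest = [("book a flight to oslo", -12.0), ("book a flight to osaka", -12.5)]
print(boost_buzzwords(nbest, {"osaka": 1.0})[0][0])  # book a flight to osaka
```

The level trades off the two error modes in the note: too small a bonus leaves Mode II errors, while too large a bonus starts turning other words into the buzzword (Mode I). In this example any level above 0.5 flips the result.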

Class-based buzzword customization

In many cases, the same pronunciation or attribute must be recognized differently depending on the context. Contacts and place names are typical examples. For example, the Chinese personal names 张阳 and 章扬 share the pronunciation "Zhang Yang" but must be recognized as different people so that the right contact is identified. Similarly, the Chinese places 安溪 and 安西 share the pronunciation "Anxi" but must be distinguished to navigate to the intended place. In the spirit that everyone deserves respect, Alibaba Cloud Intelligent Voice Self-learning Platform provides customization for the contacts class and the place name class to achieve accurate, differentiated recognition.
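The platform's class customization is not described in detail. As a generic illustration, a class-based language model replaces class members with a class token, scores the resulting template, and then scores the word within the class, here uniformly over the user's own list. Because homophones such as 张阳 and 章扬 receive identical acoustic scores, the class list is what separates them. The template dictionary stands in for a real n-gram over class tokens, and all names and scores are hypothetical.

```python
import math
from typing import Dict, List

def class_lm_logprob(words: List[str], template_logprob: Dict[str, float],
                     classes: Dict[str, List[str]]) -> float:
    """Score a hypothesis with a toy class-based model.

    Class members are replaced by their class token to look up the template score,
    then scored within the class (uniform over the member list here).
    """
    tokens, in_class = [], 0.0
    for w in words:
        for name, members in classes.items():
            if w in members:
                tokens.append(name)
                in_class += math.log(1.0 / len(members))
                break
        else:
            tokens.append(w)
    return template_logprob.get(" ".join(tokens), float("-inf")) + in_class

template = {"call <contact>": math.log(0.2)}
contacts = {"<contact>": ["张阳", "李明"]}  # this user's contact list
print(class_lm_logprob(["call", "张阳"], template, contacts) >
      class_lm_logprob(["call", "章扬"], template, contacts))  # True
```

A different user whose list contains 章扬 instead would get the opposite ranking from the same acoustics.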

Service-specific model customization

You can input field-specific text, such as industry or company profiles, customer service chat records, and the general vocabulary and terminology of a field, to quickly generate a custom model for that industry, and then invoke the custom model flexibly as needed.

With these methods, developers can focus on collecting knowledge and data in the vertical fields they know, without worrying about voice algorithms or engineering details. This new cloud-based mode of providing voice technology benefits developers and improves their business results.
