By: Jeremy Pedersen

If you have been following this Friday Blog series for a while, you may have noticed a pattern: articles tend to fall into one of three categories:

This week is different. This week, I'm playing the most dangerous game. This week, I'm predicting the future.

Why bother? Smarter people than I have made plausible-sounding predictions that turned out to be dead wrong. Still, I think it's important to at least try to understand where we might be headed.

I'll start by looking at how we got to where we are today, then I'll make my own guesses about where we go from here.

Let's dive in.

"The Cloud", as we know it today, is simply a shared set of computing resources, available on-demand.

If we wanted, we could trace "the cloud" all the way back to the first multi-user, time-sharing computer systems at major universities in the 1960s and 70s.

I'm not going to go that far back. Instead, let's start in the 1990s, around the time of the Dot-com bubble.

For many years, "corporate" or "industrial" computing meant buying special-purpose hardware and operating systems from companies like IBM or Sun Microsystems. Large enterprises bought fixed amounts of hardware, kept it for years, and rarely shared it with anybody outside their organization. And it was expensive.

Internet companies couldn't afford to do things this way: the up-front costs were enormous, and the systems weren't flexible or easy to scale. Instead, many of them used freely available operating systems like Linux or BSD and ran their businesses on common, readily available PC hardware with Intel (x86) processors.

Often, these systems were less reliable and less performant than the ones Sun or IBM were offering, but they were good enough for the budding commercial Internet.

These smaller, less reliable systems had to be deployed in clusters, which meant that early Internet companies had to become experts in distributed computing. That pushed them toward architectural patterns that are still very common today and that form part of the best practices used at places like AWS or Alibaba Cloud: redundancy, automated failover, load balancing, and so on.

Around the same time, virtualization software started to become available, making it possible to "trick" one physical machine into running several operating systems simultaneously. One of the earliest commercial systems that did this on standard Intel x86 hardware was VMware Workstation, released in 1999.

This added another key piece to the puzzle: the ability for multiple, isolated users (or systems) to share the same underlying hardware.

With these concepts in place, the modern public cloud formula was just waiting to be discovered: commodity hardware, run at scale, shared among multiple users via virtualization.

Some of the largest companies to survive the Dot-com bubble were using this formula internally by the mid-2000s, and Amazon was one of the first to consider how these internal services might be made available to third parties. The foundational work for what eventually became Amazon Web Services started in 2000 and was largely in place by 2005.

In 2006, AWS launched some of the core services still in use on the AWS cloud today: EC2 (computing) and S3 (storage). Amazon's RDS database service followed in 2009.

In the 15 years since 2006, this model has been extended and tweaked, but the fundamental components are the same: virtualized computing, networking, and storage, available on-demand.

AWS now has lively competition from Google, Microsoft, Alibaba, and even old-guard companies like IBM and Oracle, though their late entry limits their future prospects. There is also a host of smaller companies like DigitalOcean, Linode, and Vultr offering a more limited set of services, but still hewing to the architectural guidelines laid down by the bigger players.

Since 2006, most of the biggest changes have been at the software layer, especially in automation.

All the major cloud providers allow users to create, configure, and destroy cloud resources via a public API, and tools like HashiCorp's Terraform make it possible to script the creation of new on-cloud infrastructure.

It is now common for companies to write code that describes the configuration of their virtual machines, networks, load balancers, and databases, then pass that code to tools which automatically create it all, by calling the cloud provider's public API.
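To make the "describe it, then let a tool create it" idea concrete, here is a minimal sketch in Python. It is not how Terraform or any real provider works internally: `FakeCloudAPI`, `apply_config`, and the resource names are all hypothetical, and the "cloud" is just an in-memory dictionary. What it shows is the general pattern, where the desired configuration is plain data and a tool compares it against what exists, then issues only the API calls needed to close the gap.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloudAPI:
    """Hypothetical in-memory stand-in for a cloud provider's public API."""
    resources: dict = field(default_factory=dict)
    calls: list = field(default_factory=list)  # log of every API call made

    def create(self, name, spec):
        self.resources[name] = dict(spec)
        self.calls.append(("create", name))

    def update(self, name, spec):
        self.resources[name] = dict(spec)
        self.calls.append(("update", name))

    def delete(self, name):
        del self.resources[name]
        self.calls.append(("delete", name))


def apply_config(desired, api):
    """Make the resources behind `api` match `desired`, calling the API only where needed."""
    for name, spec in desired.items():
        if name not in api.resources:
            api.create(name, spec)
        elif api.resources[name] != spec:
            api.update(name, spec)
    # Anything that exists but is no longer described gets destroyed.
    for name in list(api.resources):
        if name not in desired:
            api.delete(name)


# The "code that describes the configuration": a description, not a list of steps.
desired_state = {
    "web-vm": {"type": "vm", "cpus": 2, "memory_gb": 4},
    "web-lb": {"type": "load_balancer", "targets": ["web-vm"]},
    "app-db": {"type": "database", "engine": "mysql"},
}

if __name__ == "__main__":
    api = FakeCloudAPI()
    apply_config(desired_state, api)  # first run creates everything
    apply_config(desired_state, api)  # second run finds nothing to change
    print(api.calls)
```

Running the same description twice makes no extra API calls the second time, which is the property that makes this style of automation safe to repeat.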

Tools like Docker and Kubernetes now make it possible to automate a lot of the steps involved in building and deploying software, too.

A bewildering array of things are now partially or fully automatable, including:

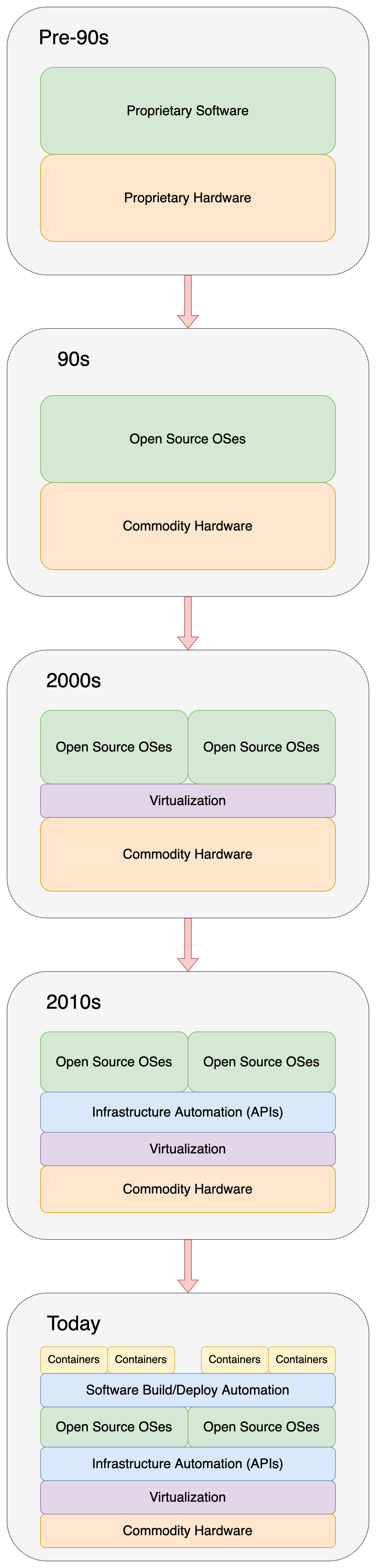

The overarching theme in all of this change has been increased abstraction and increased automation. In pictures, the evolution looks something like this:

Looking at the chart above, one is naturally tempted to continue the progression: surely more abstractions will be added, more layers will become a standard part of the cloud stack.

Those things may indeed happen, but I'm going to use the following three principles in making my own predictions:

Because these principles are so important, I want to take a minute to explain them in detail before I move on to my own predictions about the cloud.

The longer something has been around, the longer it is likely to stay around. This is obviously not true for things that degrade over time (like machines or people), but it seems to be true for systems.

Systems that have been around for a long time have effectively been "battle-tested" by nature, and tend not to be fragile (Nassim Taleb's concept of Antifragility includes this idea).

Imagine that you were an ancient Greek. Could you have predicted airplanes? Atomic bombs? The Internet? Almost certainly not.

However, you could have reasonably predicted that the people of the future would:

Sure, you could not have predicted what types of clothes people would have worn, or what gods they might worship, but you could have reasonably foreseen that these systems would be in place thousands of years hence.

Compare this to predictions made by people in the 1950s or 1960s about people living in the early 2000s. Flying cars! Food in pill form! Vacations in space! Robot servants!

These predictions tried to extrapolate forward from technologies (like nuclear power or spaceflight) that had only just been invented. The error bars on such things are enormous.

Ironically, while predictions from half a century ago tended to vastly overstate the technology of the future, they rarely predicted any social change. If you've got the spare time and want to have a good laugh (or perhaps a good cry?), go look at old copies of Popular Science or Popular Mechanics. Their pages are filled with illustrations of future people, all of whom adhere to the middle-class stereotypes of 1950s and 60s America. They are white, straight, and of European descent. All the interesting discovery and spaceflight is being done by men. Nobody is gay, and remarkably there don't appear to be many Arabs, Indians, Africans, or Asians in the future. Women are still confined to cooking, cleaning, and child-rearing, albeit with the aid of robotic servants and atomic gadgets.

As technology improves and gets refined, it becomes more and more difficult to optimize it further.

Imagine you are writing a piece of software to compress files. At first, it takes 10 seconds to compress a file.

You realize there are some optimizations you can make that will improve its speed: you spend about 2 hours making those improvements. The software now compresses your test file in 5 seconds, a 2x speedup.

Racking your brain and consulting some research articles, you realize further optimizations are possible. After 8 hours of effort, you get the compression time down to 3 seconds.

You have now spent ten hours improving your software. Two hours (20%) of your time was used to get from 10 seconds down to 5, shaving 5 seconds off the initial 10. The remaining 80% of your time was used to shave off just 2 more seconds.

Every second you shave off the running time costs you more and more development time. This is the law of diminishing returns.
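The arithmetic in the compression example can be checked with a few lines of Python. This is only a sketch of the numbers given above (2 hours to go from 10 seconds to 5, then 8 hours to go from 5 seconds to 3); the names `stages` and `hours_per_second_saved` are made up for illustration.

```python
# (hours of work spent in this stage, running time in seconds afterwards)
# The first entry is the starting point: no work done, 10 seconds per file.
stages = [(0, 10), (2, 5), (8, 3)]


def hours_per_second_saved(stages):
    """Development hours spent per second of running time removed, one value per stage."""
    costs = []
    for (_, before), (hours, after) in zip(stages, stages[1:]):
        costs.append(hours / (before - after))
    return costs


costs = hours_per_second_saved(stages)
total_hours = sum(hours for hours, _ in stages)

for (hours, after), cost in zip(stages[1:], costs):
    share = hours / total_hours
    print(f"{hours} h ({share:.0%} of total effort) -> {after} s, "
          f"{cost} h per second saved")
```

The first round of optimization costs 0.4 hours per second saved; the second costs 4 hours per second saved, ten times as much for each second gained.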

You can see this in other areas of technology too: commercial airplanes haven't gotten any faster since the 1960s. Internal combustion engines in cars made 20 years ago are only marginally less efficient than the ones rolling off the assembly lines today. These technologies are up against the law of diminishing returns: further improvements would be too costly to realize or would cause problems in other areas.

There is such a thing as technological inertia.

How good does a new tool, a new method, or a new idea need to be in order to supplant existing ones?

History is littered with technologies that were great, but arrived too late to displace things that were merely good enough and already entrenched.

The modern QWERTY keyboard layout we all learn to type on is a holdover from the typewriter era. There are a few (mostly unverified) explanations floating around for why we use this particular layout, but the real explanation seems to be the simplest one: "because it got popular first".

Many keyboard layouts have been designed in the years since. Some allow you to type faster, or reduce stress on your fingers, or are easier for new users to learn. None of them have been widely adopted. Why? QWERTY is here, and we all know it already.

When predicting the future, we have to remember that some technologies - whatever their drawbacks - have a certain level of inertia associated with them and are therefore hard to replace.

Keeping in mind the principles above, I can make the following predictions with confidence:

Projecting a little further (and with less confidence):

Sticking my neck out even further, I can make a few less grounded predictions:

I could say more, but I'm scared to go any further! I'll leave the wild predictions up to more capable people!

Thanks for joining me again this week. ^_^

Great! Reach out to me at jierui.pjr@alibabacloud.com and I'll do my best to answer in a future Friday Q&A blog.

You can also follow the Alibaba Cloud Academy LinkedIn Page. We'll re-post these blogs there each Friday.
