
During the 2017 Alibaba Singles' Day (Double 11) online shopping festival, transactions peaked at 325,000 per second, payments at 256,000 per second, and database requests at 42 million per second, once again setting new records. During Double 11, the Alibaba Group infrastructure component Dragonfly delivered 5 GB of data files simultaneously to over 10,000 servers, completing this large-scale file distribution flawlessly.

Alibaba Dragonfly is an intelligent P2P-based image and file distribution system. It resolves problems in large-scale file distribution scenarios such as time-consuming distribution, low success rates, and wasted bandwidth. It significantly improves business capabilities such as deployment distribution, data pre-heating, and large-scale container image distribution. Within Alibaba, Dragonfly already handles an average of more than 2 billion distributions per month, distributing 3.4 PB of data, and has become part of Alibaba's infrastructure. Container technology brings convenience to operation and maintenance (O&M), but also presents massive challenges to image distribution efficiency. Dragonfly supports many kinds of container technology, such as Docker and Pouch. With Dragonfly, image distribution can be accelerated by as much as 57 times and network egress traffic from the data source reduced by over 99.5%. Dragonfly can save bandwidth resources, improve O&M efficiency, and reduce O&M costs.

Dragonfly is a P2P file distribution system developed in-house at Alibaba. It is an important component of the company's basic O&M platform and a core strength of Cloud Efficiency, its smart O&M platform. It is also a crucial component of its Cloud Container Service.

Its story, though, begins in 2015.

Following the explosive growth of Alibaba's business, in 2015 the system's daily distribution volume exceeded 20,000, and the scale of many applications began to exceed 10,000, while the distribution failure rate began to increase. The root cause was that the distribution process requires pulling a large volume of files. The file servers couldn't handle the load placed on them. Of course it's easy to talk about adding servers, but once the servers are scaled out, back-end storage becomes the bottleneck. Apart from this, the sheer number of requests from a large number of IDC clients used up huge network bandwidth, causing network congestion.

At the same time, many businesses were moving towards internationalization, and a large number of applications were deployed overseas. Downloads on overseas servers had to go back to origin servers in China, which wasted a large volume of international bandwidth and was also very slow. When large files are transmitted over a poor network, any failure forces a retry, so efficiency is extremely low.

So we naturally thought of P2P technology. Because P2P technology was not new, we also investigated many existing systems in China and overseas. Our conclusion, though, was that the scale and stability of these systems could not meet our expectations. That's how Dragonfly came into being.

To address these shortcomings, Dragonfly set a few objectives at the beginning of the design process:

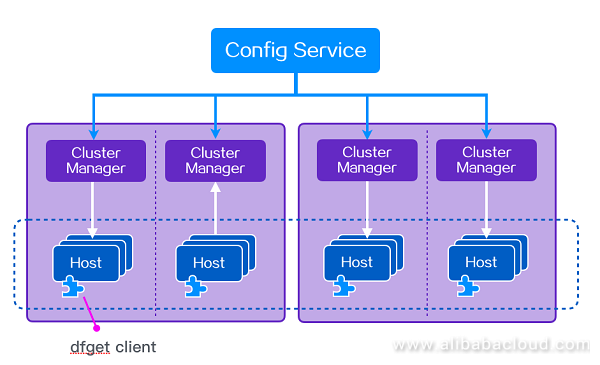

Figure 1. Overall Dragonfly architecture

The entire architecture is divided into three layers. The first layer is Config Service. It manages all the cluster managers. The cluster managers in turn manage all the hosts. The hosts are the terminals. dfget is a client program similar to wget.

Config Service is mainly responsible for such matters as cluster manager management, client node routing, system configuration management, and pre-heating services. Put simply, it is responsible for informing the host of the address lists of the team of cluster managers nearest to it, and periodically maintaining and updating this list, so that the host can always find the cluster manager nearest to it.

The cluster manager has two main duties. The first is to download files in passive CDN mode from the file source and generate a set of seed block data. The second is to construct a P2P network and dispatch the block data designated for mutual transmission between each peer.

dfget is installed on each host. The syntax of dfget is very similar to that of wget. Its main functions include file downloading and P2P sharing.

Within Alibaba, we can use StarAgent to push dfget commands down to a set of machines so that they download files simultaneously. In certain scenarios, that set of machines might be all of Alibaba's servers, so this is highly efficient. Apart from the client, Dragonfly also has a Java SDK, which lets you "push" files onto a set of servers.

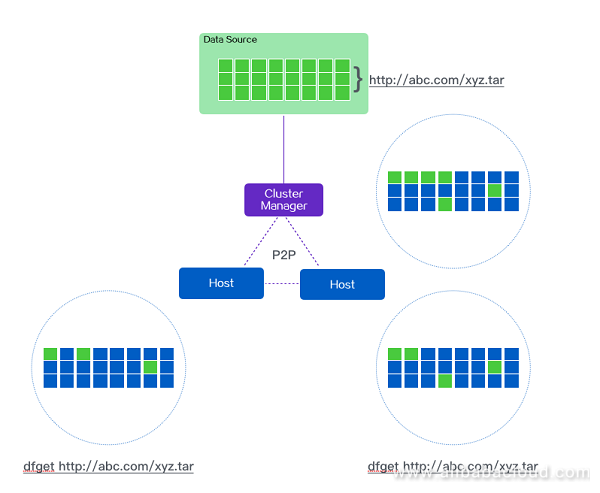

Figure 2 shows how the system interacts when two hosts simultaneously invoke dfget to download the same file:

Figure 2. Dragonfly P2P networking logic diagram

Two hosts and one cluster manager (CM) form a P2P network. First of all, the CM checks whether it has the file cached locally. If it doesn't, it downloads the file from the origin. The file is split into shards, and the CM downloads these shards in multiple threads. At the same time, it provides the shards it has already downloaded to the hosts. After a host has downloaded a shard, it in turn provides it to its peers for download. When every shard has reached all the hosts, the entire download is complete.
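The shard flow described above can be illustrated with a small in-memory simulation. This is only a sketch under simplifying assumptions: the `Node`, `distribute`, and `reassemble` names are hypothetical, shards are handed out one at a time rather than in parallel, and a host always prefers a peer over the CM when any peer already holds the shard.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A participant in the toy network: the CM or a host."""
    name: str
    shards: dict = field(default_factory=dict)  # shard index -> bytes


def split_into_shards(data: bytes, shard_size: int) -> list:
    """Cut the file into fixed-size shards (the last one may be shorter)."""
    return [data[i:i + shard_size] for i in range(0, len(data), shard_size)]


def distribute(data: bytes, shard_size: int, host_names: list):
    """Seed the CM once, then let hosts fetch each shard from a peer if possible."""
    cm = Node("cm")
    # The CM goes back to the origin exactly once and keeps the seed shards.
    for idx, shard in enumerate(split_into_shards(data, shard_size)):
        cm.shards[idx] = shard

    hosts = [Node(name) for name in host_names]
    stats = {"from_cm": 0, "from_peer": 0}
    for idx in sorted(cm.shards):
        for host in hosts:
            # Prefer any other host that already holds this shard.
            peer = next((p for p in hosts if p is not host and idx in p.shards), None)
            if peer is None:
                source = cm
                stats["from_cm"] += 1
            else:
                source = peer
                stats["from_peer"] += 1
            host.shards[idx] = source.shards[idx]
    return hosts, stats


def reassemble(node: Node) -> bytes:
    """Join a node's shards back into the original file."""
    return b"".join(node.shards[i] for i in sorted(node.shards))


if __name__ == "__main__":
    hosts, stats = distribute(b"0123456789", shard_size=4,
                              host_names=["host-a", "host-b", "host-c"])
    print(stats)
```

In this toy model the CM serves each shard only once, and every further copy travels between hosts, which is the effect the P2P network is meant to achieve.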

While a download is in progress, the status of each shard is recorded locally in metadata. If the download is suddenly interrupted, running the dfget command again resumes the transfer from where it stopped.

When the download has finished, the MD5 is also verified to confirm that the downloaded file is identical to the source file. Dragonfly controls the cache duration on the CM side using the HTTP cache protocol. The CM side can also clean its disk periodically, to ensure that it has sufficient space to support long-term service.
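To make the resume behaviour concrete, here is a minimal sketch in Python. It is not dfget's actual on-disk format: the `download` function, the `meta` set of completed shard indices, and the `fail_at` switch used to simulate an interruption are all assumptions made for illustration.

```python
class Interrupted(Exception):
    """Raised to simulate a download that stops part-way through."""


def download(shards: list, local: dict, meta: set, fail_at=None) -> list:
    """Fetch every shard not yet recorded in `meta`.

    shards  : shard contents available at the source
    local   : shard index -> bytes already written locally
    meta    : indices of shards whose download completed
    fail_at : optional shard index at which to simulate an interruption
    Returns the indices fetched during this run.
    """
    fetched = []
    for idx, shard in enumerate(shards):
        if idx in meta:
            continue  # already downloaded in an earlier run
        if idx == fail_at:
            raise Interrupted(f"connection lost before shard {idx}")
        local[idx] = shard
        meta.add(idx)  # record progress only after the shard is stored
        fetched.append(idx)
    return fetched


if __name__ == "__main__":
    source = [b"aa", b"bb", b"cc", b"dd"]
    local, meta = {}, set()
    try:
        download(source, local, meta, fail_at=2)
    except Interrupted as exc:
        print("interrupted:", exc)
    print("resumed with shards", download(source, local, meta))
```

Because progress is recorded per shard, the second run only fetches the shards that were missing when the first run stopped.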

At Alibaba, there are also many file pre-heat scenarios in which it is necessary to push files to the CM side in advance. These include such files as container images, index files, and business optimization files.

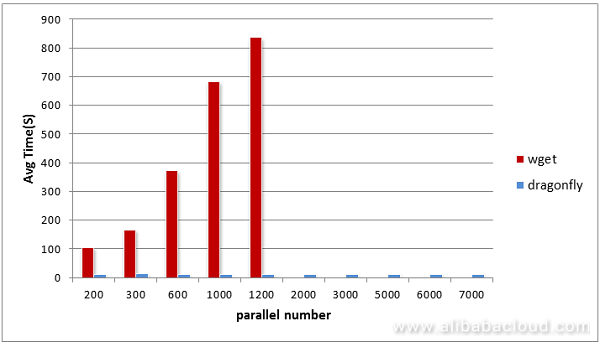

After the first version went online, we conducted a round of tests. The results are shown in the graph:

Figure 3. Comparative graph of results of traditional downloads and Dragonfly P2P download tests

The x-axis shows client volume. The y-axis shows download duration. File source: test target file 200 MB (network adapter card: Gbps). Host: 100 Mbps network adapter card. CM: Two servers (24 core, 64 G, network adapter card: Gbps).

Two problems can be seen from this graph:

The period before Double 11 every year is the peak distribution period. Dragonfly performed flawlessly during Double 11 2015.

After Double 11 2015, Dragonfly reached 120,000 downloads per month and a distribution volume of 4 TB. During that period, other download tools were still used at Alibaba, such as wget, curl, scp, and ftp, as well as small-scale distribution systems built by individual teams. In addition to fully covering our own distribution system, we also promoted Dragonfly on a small scale. By around Double 11 2016, our download volume had reached 140 million per month, and our distribution volume 708 TB.

After Double 11 2016, we set an even higher objective. We hoped that 90% of Alibaba's large-scale file distribution and large-file distribution business would be handled by Dragonfly, and that Dragonfly would become group-wide infrastructure.

We hope to refine the best P2P file distribution system through this objective. In addition, we could integrate all the file distribution systems within the group. Integration could enable even more users to benefit, but integration has never been the ultimate objective. The purposes of integration are:

Whenever the Dragonfly system is optimized, the entire group benefits. We found, for example, that system files are distributed across the entire network every day, and if these files alone were compressed, we could save the company 9 TB of network traffic daily. Transnational bandwidth resources are particularly valuable. If every team used its own distribution system, this kind of global optimization would be out of the question.

So integration is absolutely imperative!

Based on the analysis of large quantities of data, we concluded that the entire group's file distribution volume was approximately 350 million per week, and our proportion at that time was not even 10%.

After half a year's hard work, in April 2017, we finally achieved this objective: a share of more than 90% of the business. The business volume grew to 300 million files per week (broadly in line with the data from our earlier analysis). The distribution volume was 977 TB per week. This weekly figure was greater than the monthly volume of half a year earlier.

Of course, we are bound to point out that this is intricately connected to Alibaba's containerization. Image distribution accounts for approximately one half. Below, we provide an introduction to the way in which Dragonfly supports image distribution. Before discussing image distribution, however, we must of course discuss Alibaba's container technology.

The strengths of container technology need little introduction. From a global perspective, Docker enjoys the greatest share of the container technology market. Apart from Docker, other solutions exist, such as rkt, Mesos Uni Container, and LXC, while Alibaba's container technology is called Pouch. As early as 2011, Alibaba independently developed T4, a container based on LXC. At that time, we had not yet introduced the concept of the image. T4 was used like a virtual machine, though of course it was much lighter than one.

In 2016, Alibaba made major upgrades on the basis of T4, which evolved into today's Pouch. Pouch is already open source. Currently, Pouch container technology covers almost all of the business departments in the Alibaba Group. Online businesses are 100% containerized, at scales as high as several tens of thousands. Image technology has expanded the boundaries of what container technology can be applied to, and in Alibaba's huge application scenarios, how to achieve highly efficient "image distribution" has become a major issue.

Let us return to the image itself. From a macro perspective, Alibaba has container application scenarios of enormous scale. From a micro perspective, the quality of individual application images varies widely.

In theory, with imaging or traditional "baseline" methods, as far as the application's size is concerned, there shouldn't be extremely large differences. In fact, this depends completely on whether the Dockerfile is written well or badly, and on whether the image layering is reasonable. Best practice actually exists within Alibaba, but each team's understanding and acceptance levels are different. There will certainly be differences between good and bad usage. Especially at the beginning, everyone turns out some images of 3 to 4 GB. This is extremely common.

So, as a P2P file distribution system, Dragonfly is well placed to show its strengths, regardless of how large the image is or how many machines it has to be distributed to. Even if your image is built poorly, we still provide highly efficient distribution, and distribution does not become a bottleneck. This allows container technology to be rolled out quickly, and gives everyone ample time to understand and absorb container O&M methods.

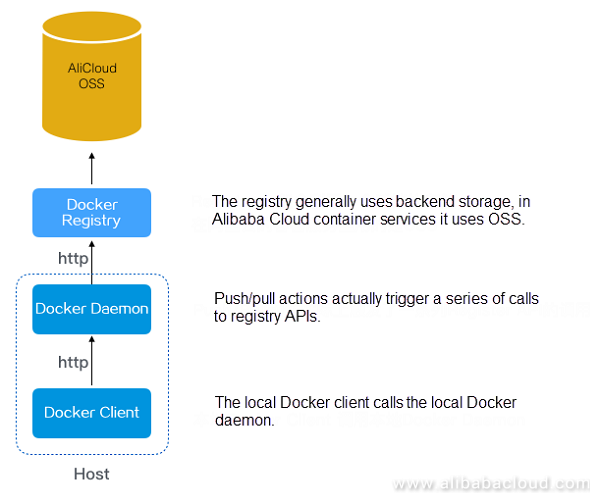

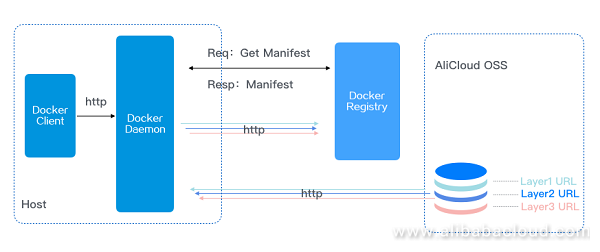

Figure 4. Flow chart of image download

Taking Alibaba Cloud Container Service as an example, traditional image transmission is as the graph shows. Of course, this is the most simplified type of architectural method. Actual deployment circumstances will be much more complex and will also take account of such matters as authentication, security, and high availability.

It can be seen from the above graph that image transmission and file distribution experience similar problems. When 10,000 hosts make simultaneous requests to a registry, the registry will become a bottleneck. Also, when overseas hosts visit domestic registries, such problems as bandwidth wastage, lengthened delays, and declining success rates will occur.

Introduced below is the Docker Pull implementation process:

Figure 5. Docker image layer download

The Docker daemon calls the registry API to fetch the image manifest. From the manifest, it can work out the URL for each layer. The daemon then downloads the image layers in parallel from the registry to the host's local storage.

Ultimately, therefore, the problem of image transmission becomes the problem of concurrently downloading each image-layer file. And what Dragonfly is good at is precisely using P2P mode to transmit each layer's files to local storage.
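The pull sequence can be sketched as follows. This is an illustration, not Docker's implementation: `FAKE_REGISTRY` and `registry_get` are hypothetical stand-ins for HTTP calls, the repository name and digests are made up, and the path shapes follow the Registry HTTP API V2 convention of `/v2/<name>/manifests/<tag>` and `/v2/<name>/blobs/<digest>`.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory registry standing in for real HTTP endpoints.
FAKE_REGISTRY = {
    "/v2/demo/app/manifests/latest": {
        "layers": [{"digest": "sha256:aaa"}, {"digest": "sha256:bbb"}],
    },
    "/v2/demo/app/blobs/sha256:aaa": b"layer-a",
    "/v2/demo/app/blobs/sha256:bbb": b"layer-b",
}


def registry_get(path: str):
    """Stand-in for an HTTP GET against the registry."""
    return FAKE_REGISTRY[path]


def pull(name: str, tag: str, max_parallel: int = 3) -> dict:
    """Fetch the manifest, derive one blob path per layer, download in parallel."""
    manifest = registry_get(f"/v2/{name}/manifests/{tag}")
    digests = [layer["digest"] for layer in manifest["layers"]]
    paths = [f"/v2/{name}/blobs/{digest}" for digest in digests]
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        blobs = list(pool.map(registry_get, paths))  # results keep input order
    return dict(zip(digests, blobs))


if __name__ == "__main__":
    print(pull("demo/app", "latest"))
```

Each layer is an independent file download, which is why the registry becomes the hot spot when many hosts pull at once.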

So, specifically, once again, how do we make this happen?

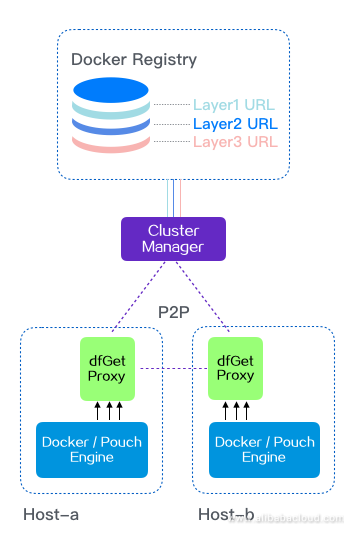

Actually, we activate the dfget proxy at the host. All of the Docker/Pouch engine's commands and requests go through this proxy. Let's look at the graph below:

Figure 6. Dragonfly P2P container image distribution chart

First of all, the docker pull command is intercepted by the dfget proxy. Then, the dfget proxy sends a dispatch request to the CM. After the CM receives the request, it investigates whether the corresponding downloaded file was already cached locally. If it wasn't, then it downloads the corresponding file from the registry and generates seed block data (as soon as the seed block data is generated it can be used). If it was already cached, then block tasks are immediately generated. The requester analyzes the corresponding block task and downloads block data from other peers or supernodes. After all the blocks from a layer finish downloading, the downloading of one layer is also complete. Similarly, after all the layers finish downloading, the whole image has also finished downloading.
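The cache check and block-task steps above can be sketched like this. The `ClusterManager` class, its `dispatch` and `read_block` methods, and the dictionary standing in for the registry are hypothetical names chosen for illustration, and the requester here reads every block from the CM rather than from other peers.

```python
class ClusterManager:
    """Toy CM: caches layer files as blocks and hands out block tasks."""

    def __init__(self, registry: dict, block_size: int):
        self.registry = registry      # url -> bytes, stands in for the registry
        self.block_size = block_size
        self.cache = {}               # url -> list of seed blocks
        self.origin_fetches = 0       # how often we went back to the registry

    def dispatch(self, url: str) -> list:
        """Return block tasks for `url`, seeding the cache on a miss."""
        if url not in self.cache:
            data = self.registry[url]  # back-to-origin download
            self.origin_fetches += 1
            self.cache[url] = [
                data[i:i + self.block_size]
                for i in range(0, len(data), self.block_size)
            ]
        return [(url, idx) for idx in range(len(self.cache[url]))]

    def read_block(self, url: str, idx: int) -> bytes:
        return self.cache[url][idx]


def pull_layer(cm: ClusterManager, url: str) -> bytes:
    """What a requester does: ask for tasks, fetch each block, join them."""
    tasks = cm.dispatch(url)
    return b"".join(cm.read_block(u, idx) for u, idx in tasks)


if __name__ == "__main__":
    cm = ClusterManager({"layer-1": b"abcdefghij"}, block_size=4)
    pull_layer(cm, "layer-1")
    pull_layer(cm, "layer-1")
    print("registry downloads:", cm.origin_fetches)
```

The second request for the same layer is served from the CM cache, so the registry is contacted only once no matter how many requesters follow.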

Dragonfly-supported container image distribution also has a few design objectives:

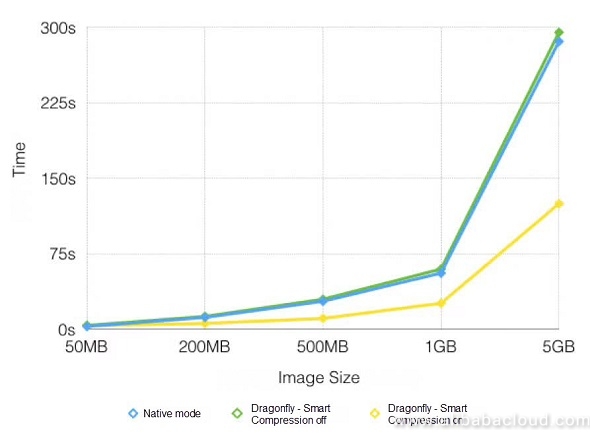

All together, we performed two sets of experiments:

Figure 7. Comparative graph showing different methods for single client

The average elapsed times for native mode and for Dragonfly with smart compression disabled are roughly the same, though Dragonfly's is slightly higher. This is because Dragonfly checks the MD5 value of each block of data during the download, and after the download finishes it also checks the MD5 of the whole file, to ensure that the file is identical to the source. With smart compression enabled, however, Dragonfly's elapsed time is lower than that of native mode.
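The two-level check described here, per block and then for the whole file, can be sketched with Python's standard `hashlib`. The manifest layout (`file_md5`, `block_md5`) and the function names are assumptions for illustration, not Dragonfly's actual metadata format.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    return hashlib.md5(data).hexdigest()


def make_manifest(data: bytes, block_size: int):
    """Source side: split into blocks and record per-block and whole-file MD5."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    manifest = {
        "file_md5": md5_hex(data),
        "block_md5": [md5_hex(block) for block in blocks],
    }
    return manifest, blocks


def verify_and_assemble(blocks: list, manifest: dict) -> bytes:
    """Download side: check every block, then the assembled file."""
    if len(blocks) != len(manifest["block_md5"]):
        raise ValueError("unexpected number of blocks")
    for idx, (block, expected) in enumerate(zip(blocks, manifest["block_md5"])):
        if md5_hex(block) != expected:
            raise ValueError(f"block {idx} failed MD5 check")
    data = b"".join(blocks)
    if md5_hex(data) != manifest["file_md5"]:
        raise ValueError("whole-file MD5 mismatch")
    return data


if __name__ == "__main__":
    manifest, blocks = make_manifest(b"container image layer", block_size=8)
    print(verify_and_assemble(blocks, manifest))
```

Checking each block as it arrives lets a bad block be re-fetched on its own, while the final whole-file check catches ordering or assembly mistakes. The extra hashing is the small overhead mentioned above.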

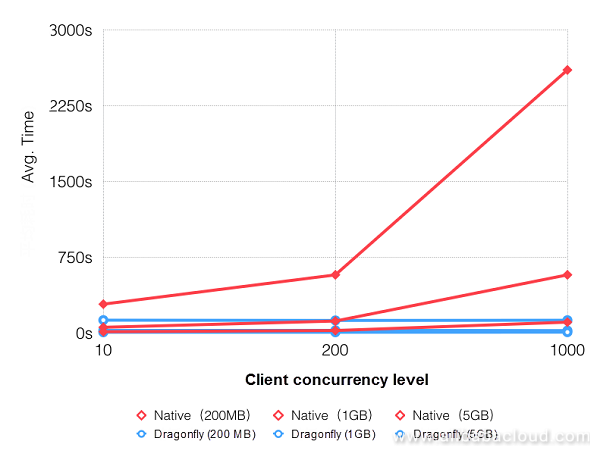

Figure 8. Comparative graph showing different image sizes and concurrencies

We can see from the graph that the gap in elapsed time between Dragonfly and native mode widens significantly as the download scale grows. The maximum speedup is up to 20 times. In the test environment, source bandwidth is extremely important. If the source bandwidth is 2 Gbps, the speedup can reach 57 times.

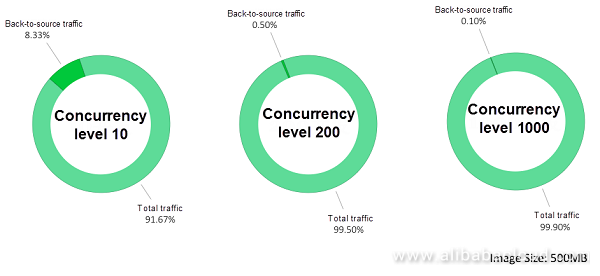

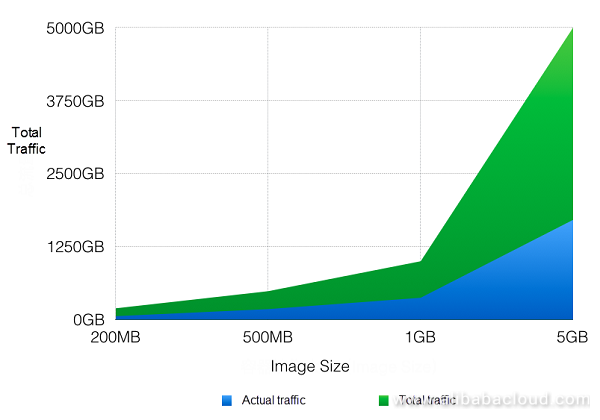

The graph below shows a comparison of overall file traffic (concurrency x file size) and back-to-origin traffic (traffic downloaded through the registry).

Figure 9. Comparative graph of Dragonfly image outward distribution traffic

Distributing a 500 MB image to 200 nodes with Dragonfly uses less network traffic than Docker native mode. The experimental data shows that after Dragonfly is adopted, outbound registry traffic decreases by over 99.5%, and at a scale of 1,000 concurrent downloads, outbound registry traffic can decline by approximately 99.9%.
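These percentages are consistent with a simple model, shown below as a sketch. The assumption, which the experiment description does not state explicitly, is that the registry serves each image roughly once (to the CM) while native mode serves it once per client. The function name and the `origin_copies` parameter are hypothetical.

```python
def back_to_origin_reduction(concurrency: int, origin_copies: int = 1) -> float:
    """Fraction of total traffic (concurrency x file size) kept off the registry.

    Native mode: the registry sends `concurrency` copies of the file.
    P2P mode (assumed): the registry sends only `origin_copies` copies.
    The file size cancels out of the ratio.
    """
    if concurrency < 1 or origin_copies < 0:
        raise ValueError("concurrency must be >= 1 and origin_copies >= 0")
    return 1 - origin_copies / concurrency


if __name__ == "__main__":
    for clients in (200, 1000):
        print(clients, f"{back_to_origin_reduction(clients):.1%}")
```

Under this assumption, 200 clients give a reduction of 1 - 1/200 = 99.5% and 1,000 clients give 1 - 1/1000 = 99.9%, matching the figures reported above.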

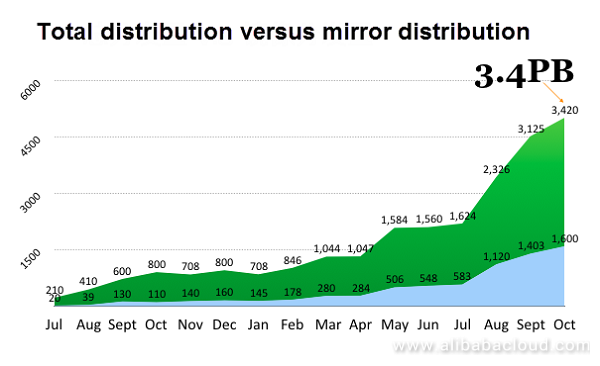

Alibaba has been using Dragonfly for about two years, during which the business has developed rapidly. Current statistics show 2 billion distributions per month, carrying 3.4 PB of data. Container image distribution accounts for almost half of this volume.

Figure 10. Graph showing Dragonfly files at Alibaba versus Dragonfly distribution traffic trends

The largest single distribution at Alibaba was in fact during this year's Double 11 period. Over 5 GB of data files had to be distributed simultaneously to more than 10,000 servers.

Although Alibaba's initial steps in AIOps were not taken early, we are investing massively this year, with applications for many products. Among the applications for Dragonfly are the following:

Traffic control is familiar from road traffic. Under the speed-limit regulations for Chinese roads, for example, the limit on highways without center lines is 40 km/h. On highways with only one motor-vehicle lane in each direction, it is 70 km/h. On expressways it is 80 km/h, and the maximum speed limit on freeways is 120 km/h. These limits are the same for all vehicles, which is clearly not flexible enough. When the road is completely clear, road resources are heavily wasted and overall efficiency is extremely low.

Traffic lights are also a means of controlling traffic. Current traffic lights all operate on fixed timings. They can't make smart judgments based on actual traffic flows. So at the Yunqi Conference held in October last year, Dr. Wang Jian lamented that the longest distance in the world was not that between the North and South Poles, but that between a traffic light and a traffic surveillance camera. They are mounted on the same pole, but have never been connected by data. What the camera sees never becomes the traffic light's action. This wastes cities' data resources and increases their operating and development costs.

One of Dragonfly's parameters controls disk and network bandwidth usage. With this parameter, users can set how much disk or network IO they want to use. Like the fixed speed limits described above, this approach is very rigid. So one of our main ideas for making things "smart" is that parameters of this sort should no longer be set by hand, but adjusted by smart decisions based on business conditions combined with the system's operating conditions. At the very beginning, the result may not be optimal, but after a period of operation and training, it automatically reaches an optimal state. This guarantees stable business operations while making full use of network and disk bandwidth and avoiding wasted resources. (Collaborative project with the iDST team.)

Block job scheduling is the critical factor in whether the distribution rate is high or low. A simple scheduling strategy, such as a fixed-priority scheme, always causes download speeds to fluctuate, easily leading to frequent download spikes and, at the same time, very poor download speeds. We made countless attempts to optimize job scheduling, and ultimately adopted multi-dimensional data analysis and smart prediction to determine each requester's optimal list of follow-up block jobs. The dimensions include machine hardware configuration, geographical position, network environment, and historical download results and speeds. The data analysis mainly uses gradient descent, with other algorithms to be tried later.
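The contrast between a fixed limit and one that adapts to business load can be illustrated with a simple feedback rule. To be clear, this is not the trained decision model described above, whose details are not given here. It swaps in a plain additive-increase/multiplicative-decrease (AIMD) rule, and the function name, thresholds, and step sizes are all assumptions.

```python
def adjust_limit(current_mbps: float, business_util: float,
                 target_util: float = 0.7, step_mbps: float = 10.0,
                 floor_mbps: float = 10.0, ceiling_mbps: float = 1000.0) -> float:
    """Return the next distribution bandwidth limit.

    business_util is the fraction of the link currently used by business
    traffic (0.0 to 1.0). When business traffic is heavy, distribution backs
    off quickly (halves). When the link is quiet, the limit grows gradually.
    """
    if business_util > target_util:
        proposed = current_mbps / 2          # multiplicative decrease
    else:
        proposed = current_mbps + step_mbps  # additive increase
    return max(floor_mbps, min(ceiling_mbps, proposed))


if __name__ == "__main__":
    limit = 100.0
    for util in (0.2, 0.3, 0.9, 0.4):
        limit = adjust_limit(limit, util)
        print(f"business utilisation {util:.0%} -> limit {limit} Mbps")
```

Even this crude rule uses idle bandwidth that a fixed setting would leave unused, while yielding quickly when business traffic needs the link.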
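As a rough illustration of scoring candidate peers from several dimensions, the sketch below fits a linear model by batch gradient descent and then picks the peer with the highest predicted speed. The linear model, the two features (a same-rack flag and a normalized historical speed), the synthetic training data, and all names are assumptions. Only the use of gradient descent comes from the description above.

```python
def fit_linear(samples: list, targets: list, lr: float = 0.1, epochs: int = 2000) -> list:
    """Minimize mean squared error of a linear model with batch gradient descent."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    for _ in range(epochs):
        grads = [0.0] * n_features
        for x, y in zip(samples, targets):
            error = sum(w * xi for w, xi in zip(weights, x)) - y
            for j, xi in enumerate(x):
                grads[j] += 2 * error * xi / len(samples)
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights


def predict(weights: list, features: tuple) -> float:
    return sum(w * xi for w, xi in zip(weights, features))


def best_peer(weights: list, candidates: dict) -> str:
    """Pick the candidate with the highest predicted download speed."""
    return max(candidates, key=lambda name: predict(weights, candidates[name]))


# Synthetic history: (same_rack, normalized past speed) -> observed MB/s.
HISTORY = [(1, 0.2), (0, 0.9), (1, 0.8), (0, 0.1)]
OBSERVED = [50.0, 90.0, 110.0, 10.0]

if __name__ == "__main__":
    weights = fit_linear(HISTORY, OBSERVED)
    peers = {"peer-a": (1, 0.5), "peer-b": (0, 0.7)}
    print("weights:", [round(w, 2) for w in weights])
    print("chosen:", best_peer(weights, peers))
```

A production scheduler would use many more dimensions and fresh data, but the shape is the same: learn how features relate to observed speed, then rank the available sources for each block.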

Smart compression implements an appropriate compression strategy for the part of the file that most merits compression, and can thereby save large volumes of network bandwidth resources.

Based on current measured averages for container images, the compression ratio is 40%. In other words, 100 MB of data can be compressed to 40 MB. At a scale of 1,000 concurrent downloads, smart compression could reduce traffic by 60%.
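One way to read "compress only what merits compression" is a per-block decision: try compressing each block and keep the compressed form only when it is meaningfully smaller. The sketch below uses Python's standard `zlib` for this. The 0.9 threshold, the tag values, and the function names are assumptions, and Dragonfly's actual strategy may differ.

```python
import random
import zlib

RAW, ZLIB = 0, 1  # hypothetical tags telling the receiver how to decode


def encode_block(block: bytes, max_ratio: float = 0.9):
    """Compress a block only if the result is at most max_ratio of its size."""
    compressed = zlib.compress(block)
    if block and len(compressed) / len(block) <= max_ratio:
        return ZLIB, compressed
    return RAW, block  # already dense (for example, a compressed archive)


def decode_block(tag: int, payload: bytes) -> bytes:
    return zlib.decompress(payload) if tag == ZLIB else payload


if __name__ == "__main__":
    text_like = b"layer metadata " * 1000
    rng = random.Random(0)
    dense = bytes(rng.getrandbits(8) for _ in range(4096))
    for name, block in (("text-like", text_like), ("dense", dense)):
        tag, payload = encode_block(block)
        print(name, "compressed" if tag == ZLIB else "sent raw",
              len(block), "->", len(payload))
```

At the 40% average ratio quoted above, every 100 MB sent becomes 40 MB on the wire, which is where the 60% traffic reduction comes from.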

When downloading certain sensitive files (such as classified files or account data files), transmission security must be effectively guaranteed. In this regard, Dragonfly performs two principal tasks:

Dragonfly resolves large-scale file downloading and all kinds of difficult file distribution issues in cross-network, isolated scenarios using P2P technology combined simultaneously with smart compression, smart traffic control, and a wide range of innovative technologies. This significantly improves data preheating, large-scale container image distribution, and other business capabilities.

Dragonfly supports many types of container technology. No alteration has to be made to the container itself. File distribution can be accelerated to as much as 57 times faster than native mode. Registry network outward traffic is reduced by over 99.5%. Dragonfly, carrying PB-grade traffic, has already become an important part of Alibaba's infrastructure to support business expansion and the annual Singles' Day (Double 11) online shopping festival.
