In the financial and banking industry, a high-availability system is essential to ensure longer service uptime, more reliable services, and shorter downtime. Financial enterprises need to ensure high availability of the services that they provide to the public and strive for more 9s (more 9s in the SLA means higher availability, for example, 99.999%). However, as the complexity of software systems continues to increase, failures are inevitable. This makes it necessary for enterprises to implement a holistic, resilient architecture that is designed to deal with failures.
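To make the "more 9s" idea concrete, the following minimal sketch converts an availability percentage into a yearly downtime budget. The function name `allowed_downtime_minutes` is hypothetical, and the calculation assumes a 365-day year.

```python
# Illustrative only: converts an SLA percentage into a yearly downtime budget.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent):
    """Return the downtime allowed per year, in minutes, for a given SLA."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for sla in (99.9, 99.99, 99.999):
        print(f"{sla}% -> {allowed_downtime_minutes(sla):.2f} minutes/year")
```

Each additional 9 cuts the budget by a factor of ten: roughly 526 minutes per year at 99.9%, and only about 5 minutes per year at 99.999%.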

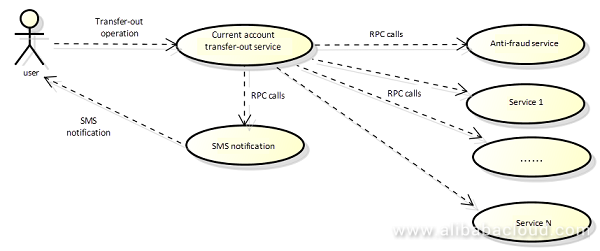

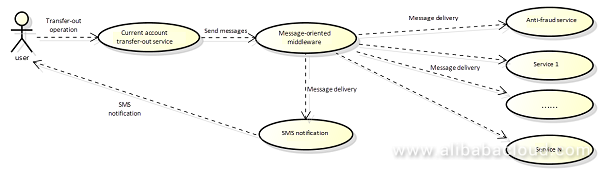

The RPC and RMI integration technologies used by many enterprises rely on synchronous requests, which often degrade the end user experience when the executing side fails, times out, or hits other problems. In addition, many failures cannot be completely eliminated. For RPC and RMI calls, both service consumers and service providers need to be online at the same time, and they need some mechanism to confirm each other's call relationship. These disadvantages led to the implementation of message-oriented middleware (MOM), which can be integrated into an enterprise's architecture to minimize the number of systems affected when a failure occurs.

Message-oriented middleware is a transparent middle layer that is integrated into a distributed system to separate service providers from service consumers.

A message queue (MQ) is a cross-process communication method in which upstream applications send messages to downstream applications through a queue.

MQ decouples upstream and downstream applications. The upstream application sends messages to MQ and the downstream application receives messages from MQ. The upstream and downstream applications no longer depend on each other; instead, they only depend on MQ. Because of the queuing mechanism, MQ can also act as a buffer between the upstream and downstream applications. Messages from the upstream application are held in the queue, and the downstream application pulls them from MQ when it can, which smooths out peak traffic.
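The decoupling described above can be sketched in a single process. This is only an illustration: Python's standard `queue.Queue` stands in for the MQ server, and the names `run_demo`, `upstream`, and `downstream` are hypothetical. A real MQ works across processes and machines.

```python
import queue
import threading


def run_demo(message_count=5):
    """Send messages through an in-memory queue and return what was consumed."""
    mq = queue.Queue()   # stand-in for the MQ server
    processed = []

    def upstream():
        # The upstream application only knows about the queue, not the consumer.
        for i in range(message_count):
            mq.put(f"order-{i}")
        mq.put(None)  # sentinel marking the end of the demo stream

    def downstream():
        # The downstream application pulls messages whenever it is ready.
        while True:
            msg = mq.get()
            if msg is None:
                break
            processed.append(msg)

    producer = threading.Thread(target=upstream)
    consumer = threading.Thread(target=downstream)
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return processed


if __name__ == "__main__":
    print(run_demo())
```

Neither function references the other. Both depend only on the queue, which is the property the paragraph above describes.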

What is decoupling?

High cohesion and low coupling are software engineering concepts. Low coupling implies that individual components are as independent of each other as possible. Simply put, this requires more transparency in calls among modules. The highest level of transparency is when individual calls have no reliance upon each other. To achieve this, we need to reduce the complexity of interfaces, normalize call methods and transmitted information, reduce the dependency among product modules, and improve reusability.

How to decouple?

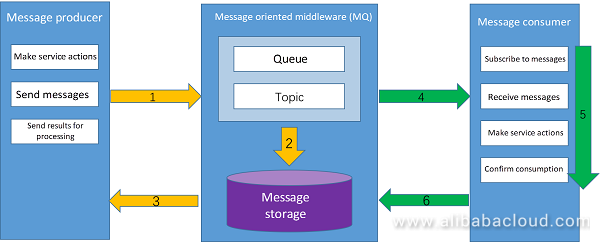

Decoupling in an overall enterprise architecture mainly involves two aspects: one is to simplify and reduce interaction, and the other is to add a middle layer to separate two services. MQ acts as this middle layer (as shown in the following diagram).

With MQ, the producer and the consumer don't have to be aware of each other and they don't have to be online at the same time. The main interaction flow is shown as follows:

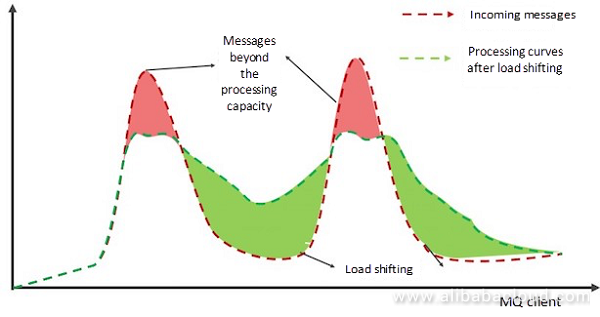

Because a system has busy and idle hours, its QPS can differ by orders of magnitude between them. During marketing activities in particular, traffic can instantly jump beyond the load capacity of the backend systems. In these situations, message-oriented middleware can be used to buffer traffic. The MQ client then pulls messages from the MQ server based on its own processing capacity, which reduces or eliminates the bottlenecks on the backend systems.
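The buffering effect can be illustrated with a small deterministic simulation. It is a sketch under simplifying assumptions: time advances in discrete ticks, the consumer handles a fixed number of messages per tick, and the function name `simulate_backlog` is hypothetical.

```python
from collections import deque


def simulate_backlog(arrivals_per_tick, capacity_per_tick):
    """Return the queue length after each tick for a bursty arrival pattern."""
    backlog = deque()
    history = []
    next_id = 0
    for arrivals in arrivals_per_tick:
        # Producers enqueue everything that arrives in this tick.
        for _ in range(arrivals):
            backlog.append(next_id)
            next_id += 1
        # The consumer pulls at most capacity_per_tick messages.
        for _ in range(min(capacity_per_tick, len(backlog))):
            backlog.popleft()
        history.append(len(backlog))
    return history


if __name__ == "__main__":
    # A burst of 10 messages in the second tick, consumer capacity of 3 per tick.
    print(simulate_backlog([2, 10, 2, 0, 0], capacity_per_tick=3))  # [0, 7, 6, 3, 0]
```

The burst never reaches the consumer at more than 3 messages per tick. The queue absorbs the excess and drains during the quieter ticks that follow.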

Source: https://github.com/alibaba/Sentinel/wiki/

For various reasons, as an enterprise digitizes its operations, it inevitably ends up with software products that come from different vendors and are each designed to solve a specific problem. These products often cannot provide external services because of their closed architectures or a lack of core development capability, which presents integration challenges. This problem can be partially resolved by integrating MQ. With MQ, a process only needs to produce a message, or respond to a message, and connect to MQ. It does not have to establish direct connections to other systems.

To provide resilient financial services, the dependencies between internal and external systems need to be isolated. There are two types of payment notifications: synchronous and asynchronous. For synchronous notifications, API calls may time out because of network failures or because the service provider responds slowly due to insufficient processing capacity. Asynchronous notifications only need to be sent successfully within a specific period, which improves the end user experience, the transaction success rate, and the overall service production efficiency.

All choices are inevitably subject to objective and subjective factors. However, we should select architecture and framework models as objectively as possible and avoid retroactively justifying the selection after we see results. I'll share our MQ model selection process (I am not saying that subjective factors aren't relevant, but an engineer always needs to consider structure and quantification).

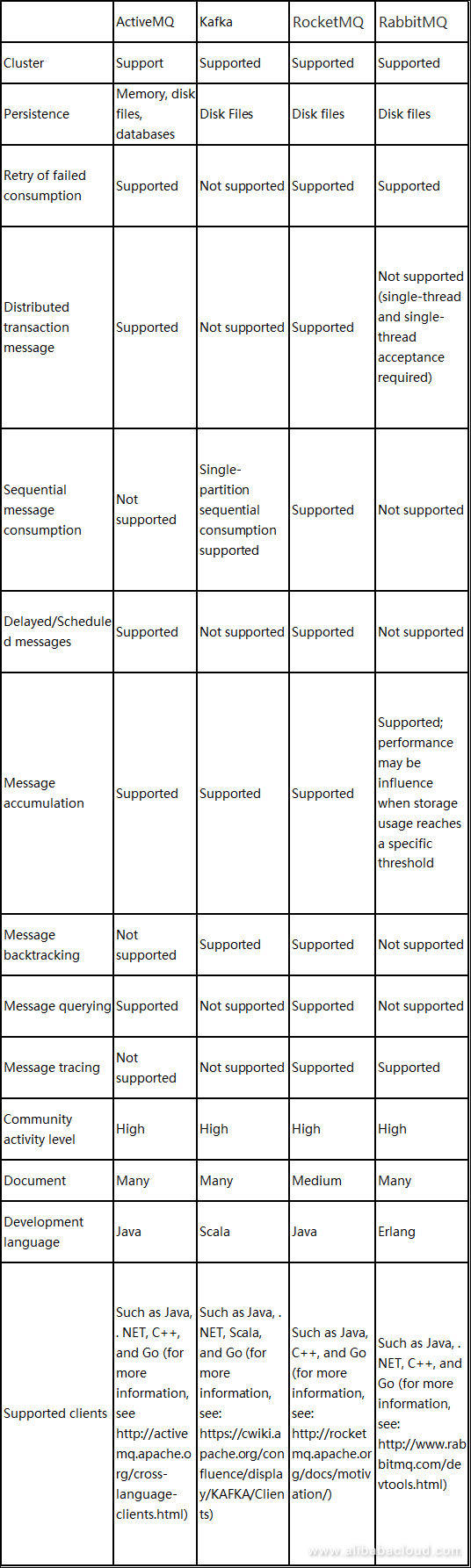

Comparison of product characteristics

We recommend building a POC environment to verify the relevant functionality indicators as well as usability. During testing, set up specific application scenarios based on the features provided by MQ to verify that the service functions work as expected.

Performance testing: Performance testing involves many factors, such as the environment a product runs in, the configuration applied, and the stress testing scripts and reports used. Indicator comparison: In addition to TPS (the sender's TPS and the TPS of the consumers' final processing service), a number of other factors should be compared, such as latency, the number of online connections supported at the same time (data volume of the producer and data volume of the consumer), Topic configuration (the number of topics, and how the number of queues for each Topic relates to the data volume of the producer and of the consumer), and the performance indicators of the server (CPU, memory, disk IO, and network IO).

Fatigue testing: After running at a certain load level continuously for 24 hours, one week, or longer, how stable is the product? How do the server indicators look? Is a slow upward trend observed?

Restart or failure rehearsal: Restart (or kill) some or all instances of NameServer (the registry), Broker, Producer, and Consumer, and simulate disk failures or network failures, then observe the impact on applications. For example, check whether the Apache RocketMQ ™ service can recover, whether the producer and the consumer can restore their services, and whether any messages are missing or duplicated.
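The last check in the rehearsal, whether messages are missing or duplicated, can be automated by comparing the IDs the producer sent with the IDs the consumer recorded. The sketch below assumes the test harness logs both lists. The function name `audit_delivery` is hypothetical and not part of any RocketMQ tooling.

```python
from collections import Counter


def audit_delivery(sent_ids, received_ids):
    """Return (missing, duplicated) message IDs after a failure rehearsal."""
    received = Counter(received_ids)
    # Sent but never seen by the consumer.
    missing = sorted(set(sent_ids) - set(received))
    # Seen more than once by the consumer.
    duplicated = sorted(msg_id for msg_id, count in received.items() if count > 1)
    return missing, duplicated


if __name__ == "__main__":
    missing, duplicated = audit_delivery(["a", "b", "c"], ["a", "c", "c"])
    print("missing:", missing)        # missing: ['b']
    print("duplicated:", duplicated)  # duplicated: ['c']
```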

The following are the reasons why we eventually chose Apache RocketMQ ™:

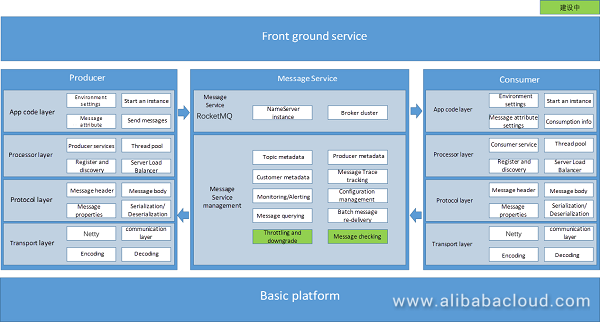

Encapsulation mainly refers to the abstraction and encapsulation of services, technologies, and data. Encapsulation has the following advantages:

Encapsulate norms into the basic code to apply uniform interaction standards inside an enterprise. These norms include:

With naming norms, we can use a name to locate the service scenario it belongs to, such as the corresponding project and module, so that the right people can be brought together quickly to handle unknown problems. Of course, it is also necessary to manage metadata such as topics, producers, and consumers, because a name cannot hold much information. Naming norms also help avoid conflicts. For example, conflicts or misunderstandings among topics can cause message consumption or service transaction problems, and conflicts among consumers' GroupIDs may cause message loss.
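A naming norm like this can be enforced in the encapsulation layer. The following sketch assumes a purely hypothetical convention, `<project>_<module>_<event>` in lowercase, which is not taken from the original article. It shows how a name can be validated and mapped back to its project and module.

```python
import re

# Hypothetical convention: <project>_<module>_<event>, lowercase alphanumerics.
TOPIC_PATTERN = re.compile(
    r"^(?P<project>[a-z][a-z0-9]*)_(?P<module>[a-z][a-z0-9]*)_(?P<event>[a-z][a-z0-9]*)$"
)


def parse_topic_name(name):
    """Return the project, module, and event encoded in a topic name."""
    match = TOPIC_PATTERN.match(name)
    if match is None:
        raise ValueError(f"topic name violates the naming norm: {name!r}")
    return match.groupdict()


if __name__ == "__main__":
    print(parse_topic_name("payment_transfer_completed"))
```

Rejecting nonconforming names at creation time is one way to catch topic or GroupID conflicts before they reach production.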

Encapsulation and customization can improve management capabilities, such as batch-querying messages, batch-resending messages, and message checking.

Apache RocketMQ ™ is important foundational middleware that improves overall service resilience and plays a significant role in various financial transactions.

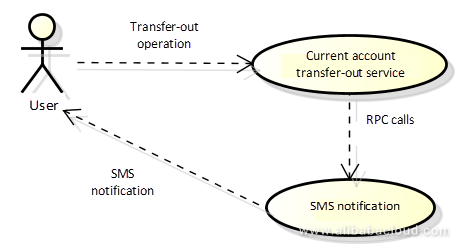

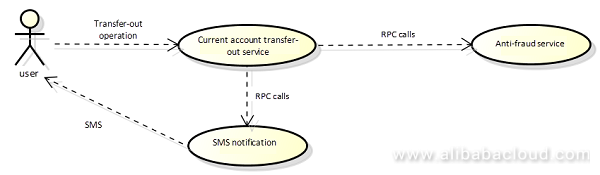

Let's take the current account transfer-out scenario for example.

For connections to external banking systems, asynchronous calls are sometimes needed to obtain response results N seconds after a service request is sent. Before we used the delayed messages provided by Apache RocketMQ ™, the common method was to store the data in a database or Redis cache first and then use scheduled polling tasks to perform the operations. This method has the following disadvantages:

Apache RocketMQ ™ can bring the following advantages:

However, the current version does not support arbitrary delay durations and only allows the delays defined by messageDelayLevel. This requires either planning the delay levels before setting up Apache RocketMQ ™ or extending the Apache RocketMQ ™ delay source code to support a specific time granularity.
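Because only predefined levels are available, an application has to map the delay it wants onto the nearest level. The sketch below assumes the broker uses the commonly documented default `messageDelayLevel` table, so check your own broker configuration. The helper names `parse_levels` and `pick_delay_level` are hypothetical.

```python
# Assumed default broker setting; verify against your broker configuration.
DEFAULT_LEVELS = "1s 5s 10s 30s 1m 2m 3m 4m 5m 6m 7m 8m 9m 10m 20m 30m 1h 2h"
UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def parse_levels(spec=DEFAULT_LEVELS):
    """Convert a messageDelayLevel string into a list of delays in seconds."""
    return [int(item[:-1]) * UNIT_SECONDS[item[-1]] for item in spec.split()]


def pick_delay_level(desired_seconds, spec=DEFAULT_LEVELS):
    """Return (level, actual_seconds) for the smallest level >= desired_seconds.

    Levels are 1-based, matching their position in the configured table.
    """
    for level, seconds in enumerate(parse_levels(spec), start=1):
        if seconds >= desired_seconds:
            return level, seconds
    raise ValueError(f"no delay level covers {desired_seconds} seconds")


if __name__ == "__main__":
    print(pick_delay_level(45))  # (5, 60): the nearest level at or above 45s is 1m
```

Rounding up means a 45-second requirement is served after 60 seconds. If that gap is unacceptable, the level table has to be planned accordingly, as noted above.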

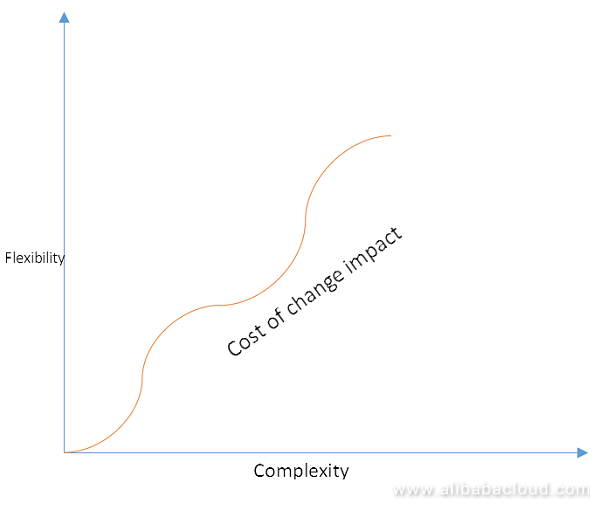

Why don't all calls use MQ when it has so many advantages? Economics teaches us a familiar lesson: benefits come hand in hand with costs.

Disadvantages of MQ:

So in the normal course of software development, we don't have to go looking for places to apply message queues. Instead, when performance bottlenecks occur, we should check whether the service logic includes time-consuming operations that can be processed asynchronously. If it does, use MQ to process them. Otherwise, the blind use of message queues may increase the cost of maintenance and development while providing insignificant performance improvement, which is not worth the investment.

Problems encountered with incorrect usage:

Since failures are inevitable, functions such as self-management, self-recovery, and self-configuration need to be built into applications through a series of mechanisms at design time. As cloud-native architectures become more popular, MQ is not the only option for designing resilient systems that handle heavy load and various failures. However, MQ is still a good choice and worth a try.

