Follow the Apache Flink® Community for making Data Distribution More Efficient in Flink.

Imagine you're a class representative who needs to distribute important study materials to your classmates. If the class is divided into several groups, what would you do? Would you make one copy for each group and let the group leader share it with the members, or make a separate copy for every student? The first method clearly uses less paper and less effort.

Flink's early broadcast variable distribution mechanism was like giving a copy to each student, even when some students were in the same TaskManager (the equivalent of a group). This not only wasted network bandwidth but also hurt overall performance. FLIP-5 was proposed to address this issue.

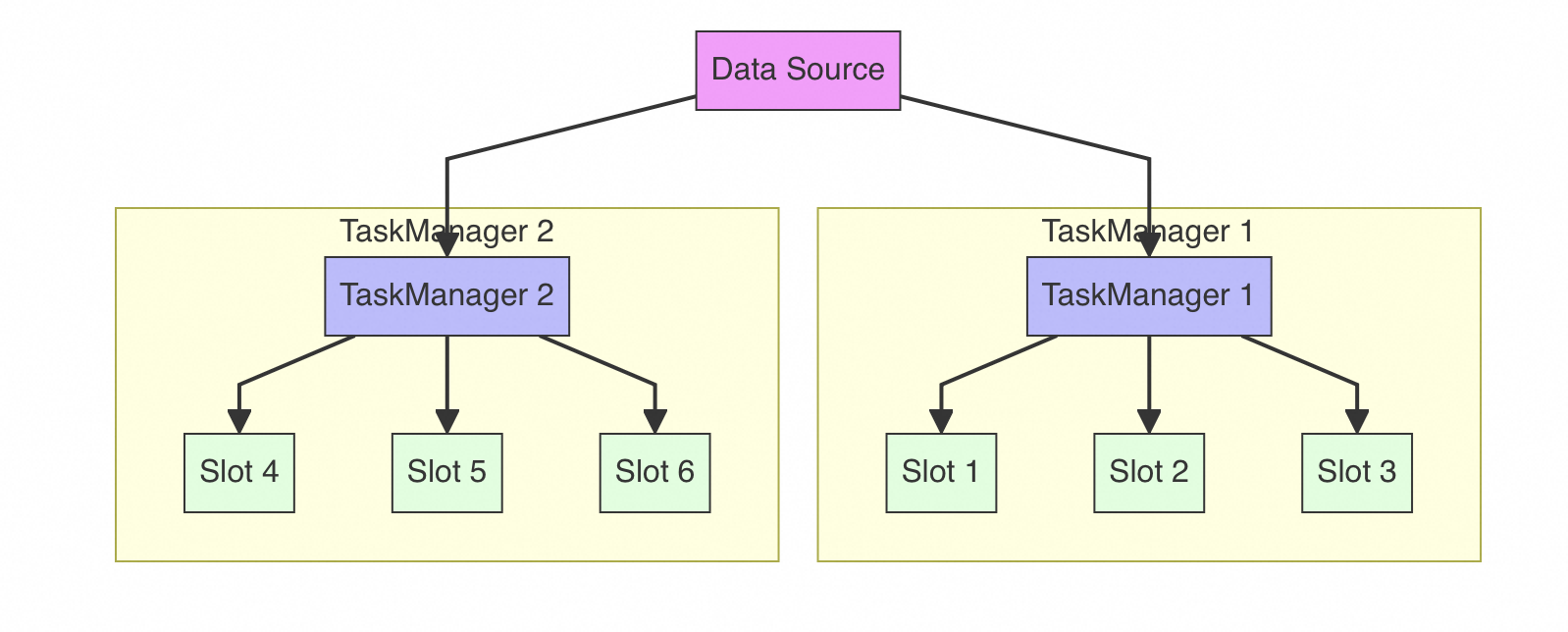

Let's illustrate the current problem with an example:

In the above example, a TaskManager has multiple Slots (processing slots). When using broadcast variables, the same data is sent to each Slot, even when these Slots are on the same TaskManager. This leads to two main problems:

Experimental data shows that when the number of Slots per TaskManager increases from 1 to 16, processing time significantly increases:

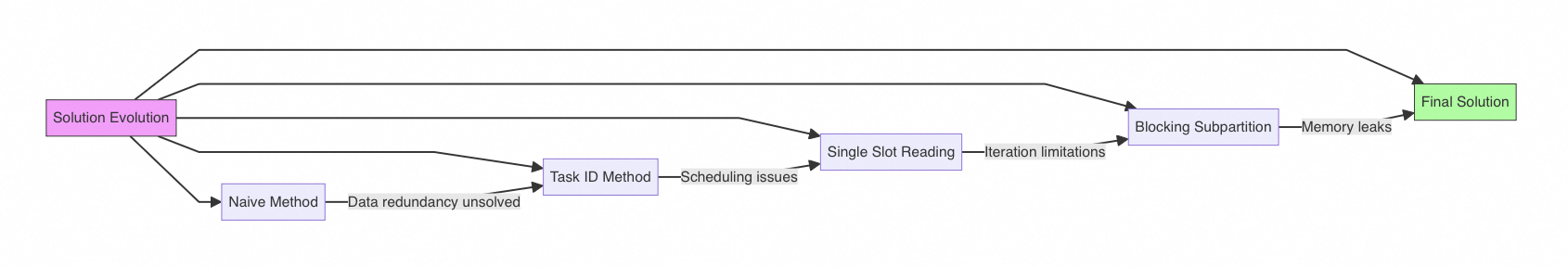
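A rough way to see why slot count matters is to count how many copies of the broadcast data cross the network under each strategy. The sketch below is a back-of-the-envelope model, not Flink code: the function `copies_sent` and the cluster size `TASK_MANAGERS = 4` are hypothetical, and it assumes every receiver gets one full copy of the data.

```python
def copies_sent(task_managers: int, slots_per_tm: int, per_slot: bool) -> int:
    """Count the copies of the broadcast data set that are shipped over the network.

    per_slot=True  models the original behaviour: one copy for every Slot.
    per_slot=False models the idea behind FLIP-5: one copy per TaskManager,
    shared by all Slots on that TaskManager.
    """
    if per_slot:
        return task_managers * slots_per_tm
    return task_managers


TASK_MANAGERS = 4  # hypothetical cluster size, chosen only for illustration

for slots in (1, 2, 4, 8, 16):
    per_slot = copies_sent(TASK_MANAGERS, slots, per_slot=True)
    per_tm = copies_sent(TASK_MANAGERS, slots, per_slot=False)
    print(f"{slots:>2} slots/TM: per-slot={per_slot:>3} copies, per-TaskManager={per_tm} copies")
```

In this simplified model the per-Slot copy count grows linearly with the number of Slots, while the per-TaskManager count stays flat, which matches the direction of the measured trend.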

The research team proposed several solutions. Let's look at the most important ones:

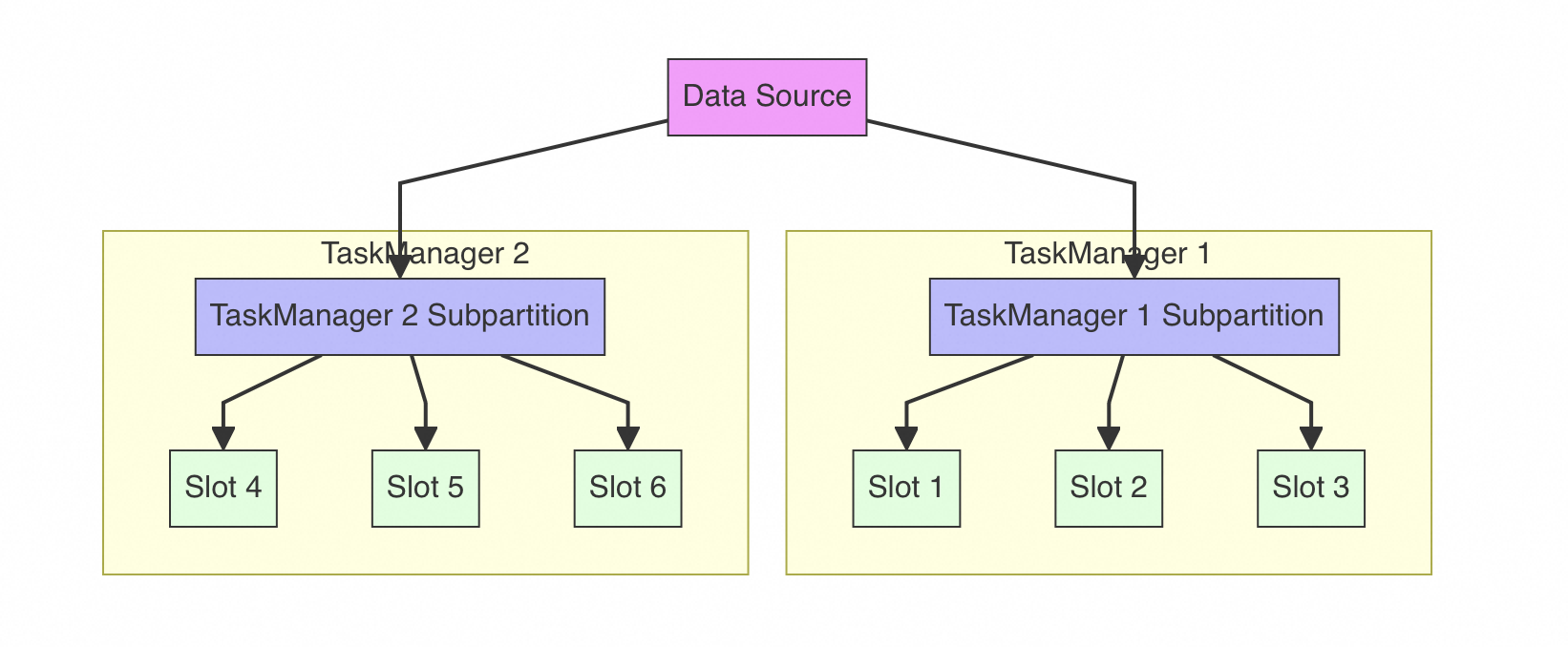

The core idea of the final solution is a complete overhaul of the broadcast data distribution mechanism. Specifically:

Data Sharing Mechanism:

Smart Release Mechanism:

This solution brought significant improvements:

Performance Improvement:

Resource Utilization Optimization:

Better Scalability:

Although this FLIP proposed good optimization ideas, it was ultimately abandoned for several main reasons:

Although FLIP-5 wasn't ultimately adopted, the problems it identified and the solutions it proposed are valuable. It reminds us to pay special attention to data transfer efficiency when handling distributed data. While this specific improvement wasn't implemented, the Flink community continued to optimize broadcast variable performance through other means. This is the charm of the open-source community: finding the most suitable solutions through continuous attempts and discussions.
