By Alex Mungai Muchiri, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

There's a lot of excitement in the data science community right now as more tech companies compete to release products in the area. If you paid attention during the iPhone X launch, you would have noticed some of the cool features that came with the device, such as Face ID, Animoji, and Augmented Reality (AR). These features rely on machine learning frameworks. If you are a data scientist like myself, you are probably wondering how to build such systems.

Enter Core ML, a machine learning framework from Apple for the developer community. It is compatible with Apple products ranging from the iPhone and Apple TV to the Apple Watch. Furthermore, the new A11 Bionic chip incorporates a neural engine for advanced machine learning capabilities. The chip, which also features a custom GPU, ships in the latest iPhone models. The new tool opens up a whole new era of machine learning possibilities with Apple, and it is bound to spur creativity, innovation and productivity. Since Core ML is so significant, we shall evaluate what it is and why it is becoming so important. We shall also see how to implement a model and evaluate its merits and demerits.

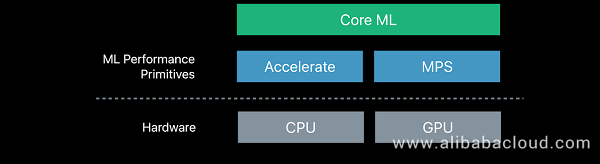

Simply put, the Core Machine Learning framework enables developers to integrate their machine learning models into iOS applications. Core ML runs on both the CPU and the GPU, building on Apple's 'Accelerate' and 'Metal' technologies. Notably, the models run on the device itself, which allows data to be analysed locally. In most cases, locally run machine learning models are more limited in both complexity and productivity than cloud-based tools.

Apple has created machine learning frameworks for its devices before. The two most notable libraries are:

Accelerate and Basic Neural Network Subroutines (BNNS): uses Convolutional Neural Networks to make efficient CPU predictions.

Metal Performance Shaders CNN (MPSCNN): uses Convolutional Neural Networks to make efficient GPU predictions.

Source: www.apple.com

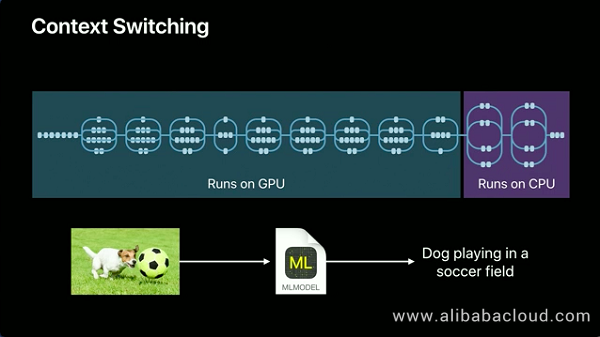

The two previous libraries are still in place; however, the Core ML framework is a higher-level abstraction layered over them. Its interface is easier to work with and is similarly efficient. When using the new tool, you do not need to worry about switching between CPU and GPU for inference and training. The CPU deals with memory-intensive workloads such as natural language processing. The GPU handles computation-intensive workloads such as image processing and identification. Core ML switches between the two as needed, depending on what your app is doing.

Source: www.apple.com

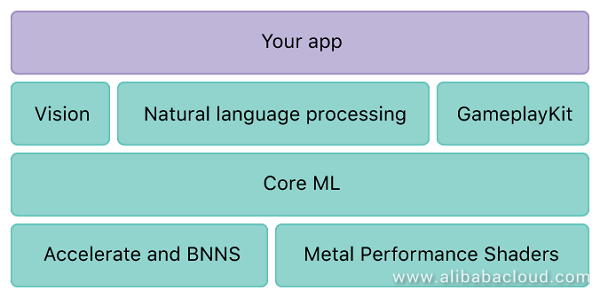

Three libraries are associated with Core ML and form part of its functionality: Vision, Foundation, and GameplayKit.

Apple has done a great deal of work in making the above libraries easy to interface and operationalise with your apps. Putting the above libraries into the Core ML architecture gets us a new structure as below:

Source: www.apple.com

Integrating Core ML into this structure makes for a more modular and scalable iOS application. Since there are several layers, each of them can be used in a number of ways. For more information about these libraries, see the documentation for Vision, Foundation, and GameplayKit. Now let us turn to some practical basics.

The following are the requirements for setting up a simple Core ML project:

sudo easy_install pip

sudo pip install -U coremltools

To download Xcode, log in with your Apple ID and verify your identity by confirming the notification on your Apple device. Choose "Allow", then type the six-digit passcode shown into the website.

After you verify your identity using the six-digit code, you will get a link to download Xcode. Now, we are going to look at how to convert your trained machine learning models to the Core ML standard.

Converting a trained machine learning model transforms it into a format that is compatible with Core ML. Apple provides Core ML Tools for this purpose. However, third-party tools such as the MXNet converter or the TensorFlow converter also work well. It is also possible to build your own tool if you follow the Core ML standards.

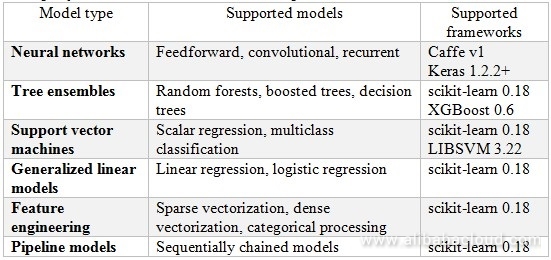

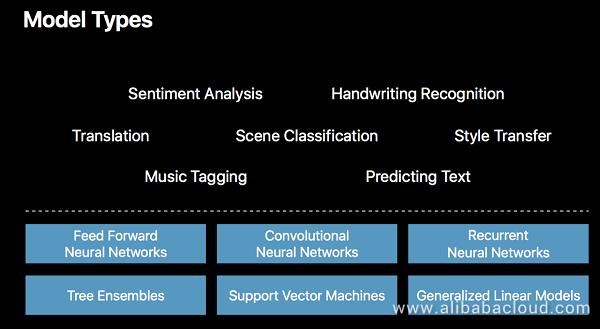

Core ML Tools is written in Python and converts a wide range of model types into a format that Core ML understands. Apple provides a list of supported models and third-party frameworks in the table below.

Third-party frameworks and ML models compatible with Core ML Tools:

Use the converter that corresponds to the third-party framework your ML model was created with, as listed above. To execute the conversion, call the converter's convert method. The resulting model should be saved in the Core ML model format (.mlmodel). For models created using Caffe, pass the Caffe model (.caffemodel) to the coremltools.converters.caffe.convert method, as shown below:

import coremltools

coreml_model = coremltools.converters.caffe.convert('my_caffe_model.caffemodel')

The result after conversion should now be saved in the Core ML format:

coreml_model.save('my_model.mlmodel')

For some model types, you may have to update the inputs, outputs, and labels. In other cases, you may have to declare image names, types, and formats. Each conversion tool comes with its own documentation, as the available options vary from tool to tool. Core ML Tools includes package documentation with further information.
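As a rough sketch of how that extra information might be supplied, the legacy Caffe converter accepts keyword arguments such as `image_input_names` and `class_labels`. The helper names, the input name `data`, and the file names below are placeholders chosen for illustration. The Caffe converter is only present in older coremltools releases, so treat this as an outline rather than a recipe.

```python
def caffe_conversion_options(image_input="data", labels_file="labels.txt"):
    """Collect keyword arguments for the legacy Caffe converter.

    Both default values are placeholders for illustration only.
    """
    return {
        "image_input_names": image_input,  # treat this input as an image
        "class_labels": labels_file,       # text file with one label per line
    }


def convert_caffe_classifier(caffemodel, prototxt, output_path, **options):
    """Convert a Caffe classifier and save it in the Core ML format."""
    # Imported lazily so the helper above works without coremltools installed.
    import coremltools

    coreml_model = coremltools.converters.caffe.convert(
        (caffemodel, prototxt), **options
    )
    coreml_model.short_description = "Converted from Caffe"
    coreml_model.save(output_path)
    return coreml_model
```

A call such as `convert_caffe_classifier('my_caffe_model.caffemodel', 'deploy.prototxt', 'my_model.mlmodel', **caffe_conversion_options())` would then produce a classifier whose input is declared as an image and whose outputs carry class labels.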

If your model is not among those supported by Core ML Tools, you can create your own conversion tool. The process entails translating your model's parameters, such as its inputs, outputs, and architecture, to the Core ML standard. Every layer of the model's architecture has to be defined, along with how it connects to other layers. Core ML Tools includes examples of how to make these conversions and also demonstrates how model types from third-party frameworks are converted to the Core ML format.

There are numerous ways of training a machine learning model and in our case, we shall use MXNet. It is an acceleration library that enables the creation of large-scale deep-neural networks and mathematical computations. The library is desirable for the following reasons:

You will need to run the converter on macOS El Capitan (10.11) and later versions. But before that, install Python 2.7.
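A small pre-flight check can confirm these requirements before installing anything. The sketch below uses only the Python standard library; the function names are made up for this example.

```python
import platform
import sys


def parse_version(text):
    """Turn a dotted version string such as '10.11.6' into a tuple of ints."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())


def meets_converter_requirements(macos_version, python_version):
    """Check the requirements stated above: macOS 10.11+ and Python 2.7."""
    macos_ok = parse_version(macos_version) >= (10, 11)
    python_ok = tuple(python_version[:2]) == (2, 7)
    return macos_ok and python_ok


def current_environment_ok():
    """Apply the check to the machine running this script."""
    # platform.mac_ver() returns an empty release string on non-macOS systems.
    release = platform.mac_ver()[0]
    if not release:
        return False
    return meets_converter_requirements(release, sys.version_info)
```

Calling `current_environment_ok()` returns `False` on any machine that is not running macOS, so the check can also be used in cross-platform setup scripts.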

The command below installs the MXNet framework and the conversion tool:

pip install mxnet-to-coreml

Once the tools have been installed, we can proceed to convert models trained using MXNet and apply them to Core ML. For instance, we are using a simple model that analyses images and attempts to determine their location.

All MXNet models consist of two parts: the model definition (JSON) and the parameters (binary).

Our simple location detection model contains three files: the model definition (JSON), the parameters (binary), and a text file (geographic cells). When Google's S2 Geometry Library is used for training, each line of the text file contains three fields, like so:

Google S2 Token, Latitude, Longitude (e.g., 8644b554 29.1835189632 -96.8277835622). The iOS app would only require the coordinate information.
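To illustrate how an app might keep only the coordinates, here is a minimal sketch that parses lines in the format shown above. It assumes the three fields are separated by whitespace, as in the example line; the function names are hypothetical.

```python
def parse_grid_line(line):
    """Split one 'token latitude longitude' line into its three fields."""
    token, latitude, longitude = line.split()
    return token, float(latitude), float(longitude)


def load_coordinates(lines):
    """Return (latitude, longitude) pairs, dropping the S2 token."""
    coordinates = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        _, latitude, longitude = parse_grid_line(line)
        coordinates.append((latitude, longitude))
    return coordinates


sample = ["8644b554 29.1835189632 -96.8277835622"]
print(load_coordinates(sample))
```

The same function accepts an open file handle, since iterating over a text file yields one line at a time.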

Once everything is all set up, run the command below:

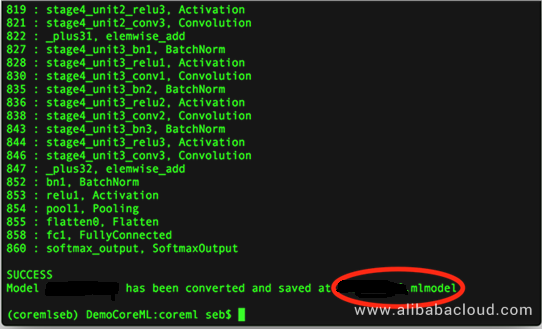

mxnet_coreml_converter.py --model-prefix='RN101-5k500' --epoch=12 --input-shape='{"data":"3,224,224"}' --mode=classifier --pre-processing-arguments='{"image_input_names":"data"}' --class-labels grids.txt --output-file="RN1015k500.mlmodel"

The converter then recreates the MXNet model as a Core ML equivalent, and a SUCCESS confirmation should be displayed. From there, import the generated file into your Xcode project.
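The JSON arguments in the converter command are easy to misquote when typed by hand. For scripted pipelines, one option is to assemble the invocation in Python, as sketched below. The flags and values are the ones used in the command above; the function name is hypothetical.

```python
import json
import shlex


def build_converter_command(prefix, epoch, labels_file, output_file):
    """Assemble the mxnet_coreml_converter.py invocation as an argument list."""
    # Compact separators reproduce the JSON exactly as written in the command.
    input_shape = json.dumps({"data": "3,224,224"}, separators=(",", ":"))
    preprocessing = json.dumps({"image_input_names": "data"}, separators=(",", ":"))
    return [
        "mxnet_coreml_converter.py",
        "--model-prefix=" + prefix,
        "--epoch=" + str(epoch),
        "--input-shape=" + input_shape,
        "--mode=classifier",
        "--pre-processing-arguments=" + preprocessing,
        "--class-labels", labels_file,
        "--output-file=" + output_file,
    ]


command = build_converter_command("RN101-5k500", 12, "grids.txt", "RN1015k500.mlmodel")
# shlex.quote makes the printed line safe to paste into a shell.
print(" ".join(shlex.quote(arg) for arg in command))
```

The returned list can also be handed directly to `subprocess.run`, which avoids shell quoting altogether.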

The next step requires that you have Xcode running on your computer. When you click the newly converted file, you should see its properties, such as its size and name, as well as its parameters as they would be used within your Swift code.

In Xcode, drag and drop the file into your project and tick the Target Membership checkbox. Next, test the application on a physical device or, alternatively, in the Xcode simulator. Keep in mind that you need to use your Team account to sign the app for it to run on a physical device. Finally, build the app and run it on a device. That's it!

Getting started with Core ML is as easy as integrating it with your mobile application. However, trained models can take up a large chunk of your device's storage. For neural networks, you can address the problem by reducing the footprint of the weights. For non-neural networks, you can reduce the application's size by storing the models in the cloud and calling a function to download them instead of bundling them in the app. Some developers also use half precision. Converting to half precision significantly reduces the network's size, most of which comes from the connection weights. However, half precision reduces both the accuracy of floating-point values and the range of values that can be represented.
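The half-precision trade-off can be seen with nothing more than Python's standard `struct` module, which supports the 16-bit IEEE 754 format. This is only an illustration of the size and accuracy effects on a few made-up weights, not the conversion that Core ML Tools performs.

```python
import struct


def to_half_and_back(value):
    """Round-trip a float through IEEE 754 half precision (16 bits)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


weights = [0.1, 1.0 / 3.0, 1234.567]  # made-up example values

full_size = len(struct.pack("<%df" % len(weights), *weights))  # 4 bytes each
half_size = len(struct.pack("<%de" % len(weights), *weights))  # 2 bytes each
print(full_size, half_size)

# Each weight loses some accuracy after the round trip.
for weight in weights:
    restored = to_half_and_back(weight)
    print(weight, restored, abs(weight - restored))

# Values beyond the half-precision range cannot be stored at all.
try:
    struct.pack("<e", 70000.0)
except OverflowError as error:
    print("out of range:", error)
```

Storage is halved, but the larger the weight, the coarser the rounding becomes, and anything above the half-precision maximum is rejected outright.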

You can use Alibaba Cloud to train and optimize the models at scale before exporting them to devices. Core ML simplifies the process of integrating machine learning into applications built on iOS. While the tool runs on the device, there is a huge opportunity to use the cloud as a platform to combine output from various devices and conduct massive analytics and machine learning optimisations.

https://developer.apple.com/documentation/coreml/converting_trained_models_to_core_ml

