This article is compiled from the speech entitled "Overall Architecture Design and Enterprise-Level Features of PolarDB" by Beixia, a core technology expert on Apsara PolarDB. PolarDB supports three architectures: compute-storage separation, HTAP, and three-node high availability. It also provides enterprise-level features for high availability, high performance, and security. This article analyzes the overall architecture and these enterprise-level features.

PolarDB is a cloud-native database developed by Alibaba Cloud, and its source code is open source. The following sections describe the overall architecture and enterprise-level features of open-source PolarDB.

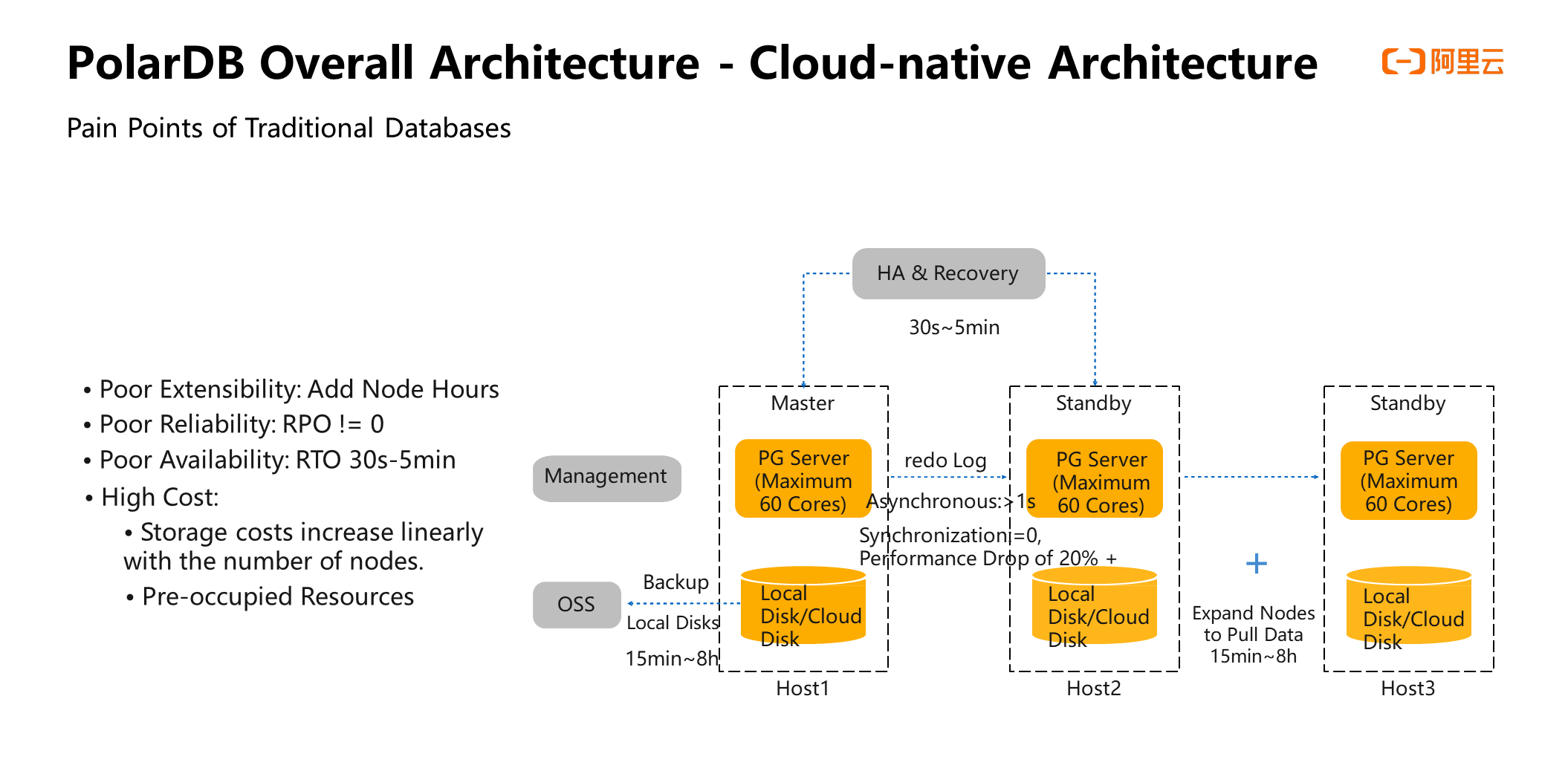

The infrastructure of PolarDB is a cloud-native architecture. A traditional database deployment consists of a primary database, a secondary database, and a standby node, and the primary database replicates redo logs to the secondary database. This traditional architecture has four problems:

① Poor Scalability: Before a new node can be added, the full data set must be copied to it, which usually takes hours or even longer.

② Poor Reliability: If synchronous replication is required between the primary and secondary databases, the performance may be reduced by more than 20%. If asynchronous replication is used, data loss may occur.

③ Poor Availability: After the primary database fails, HA switches over to the secondary database. The database that takes over must replay a large number of redo logs before it can serve requests, which can take minutes.

④ High Cost: The storage cost increases linearly as the number of nodes increases. In addition, some resources need to be reserved.

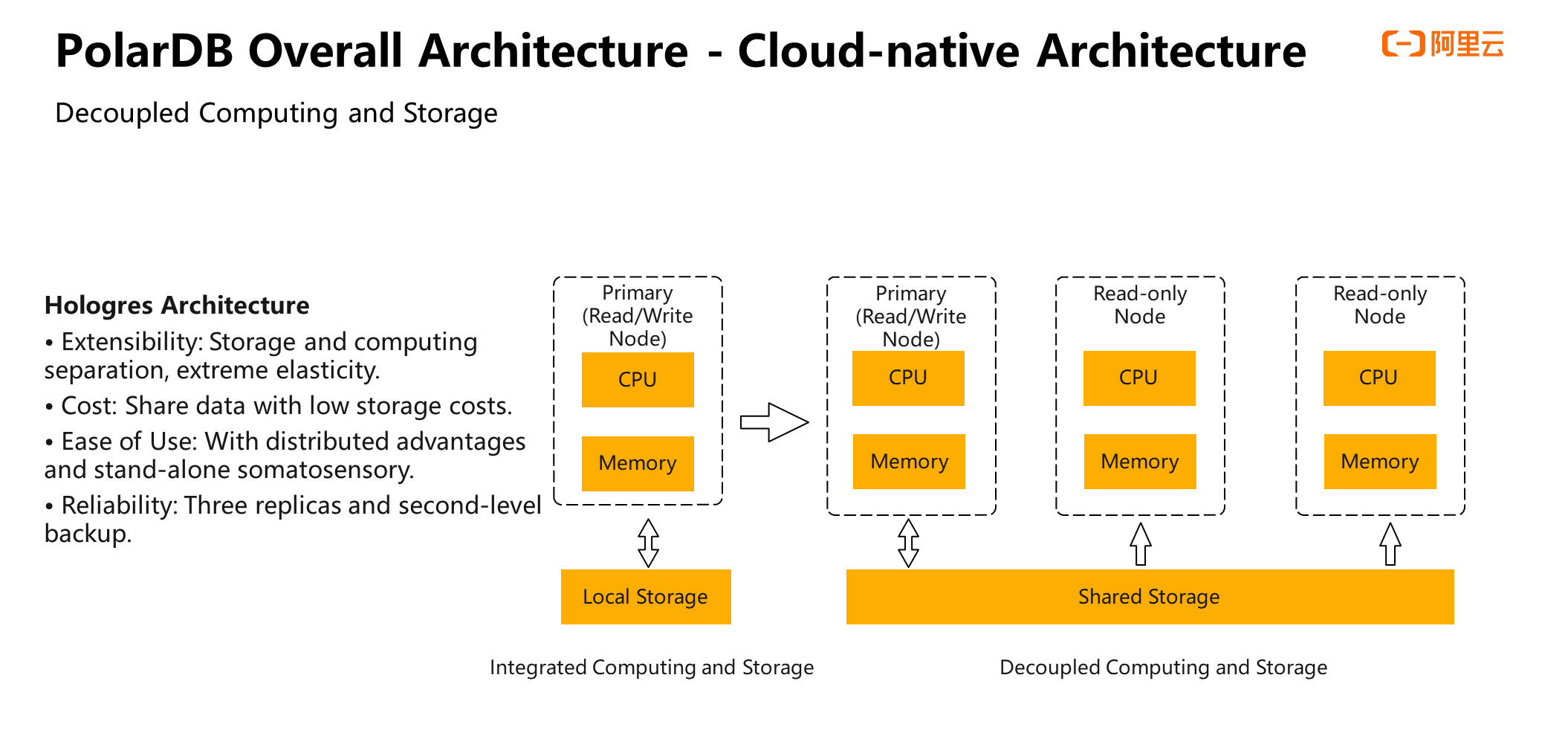

PolarDB proposes a cloud-native architecture to decouple computing and storage resources.

The left side of the preceding figure shows a traditional database whose CPU, memory, and storage are all on a single server, which is called integrated computing and storage. The right side shows the architecture of PolarDB, which is divided into compute nodes and storage nodes. Data is stored in a storage pool composed of storage nodes, and each compute node reads data from the storage pool over a high-speed network.

The advantages of compute-storage separation architecture are listed below:

① Extreme and Elastic Scaling Capability: Storage and computing can be expanded independently.

② Reduced Storage Costs: Data is stored once in the shared storage pool, so the storage cost does not grow with the number of compute nodes.

③ Ease of Use: It combines the advantages of a distributed database with the experience of a standalone database. Each compute node can see all data, so for users, any compute node is equivalent to a standalone database.

④ High Reliability: The underlying shared storage provides three replicas and snapshots that complete within seconds, which offers a convenient way to back up databases.
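The storage-cost argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not PolarDB code: the function names are hypothetical, and it assumes that every node of a traditional deployment keeps a full copy of the data while the shared storage pool keeps three replicas regardless of how many compute nodes attach to it.

```python
def traditional_storage_tb(data_tb: float, db_nodes: int) -> float:
    """Traditional architecture: every database node holds a full copy."""
    return data_tb * db_nodes


def shared_storage_tb(data_tb: float, compute_nodes: int, replicas: int = 3) -> float:
    """Compute-storage separation: data lives only in the storage pool.

    `compute_nodes` is accepted to make the point explicit: it does not
    appear in the formula, because compute nodes hold no copy of the data.
    """
    return data_tb * replicas


if __name__ == "__main__":
    for nodes in (2, 4, 8):
        print(
            f"nodes={nodes} "
            f"traditional={traditional_storage_tb(1.0, nodes)} TB "
            f"shared={shared_storage_tb(1.0, nodes)} TB"
        )
```

Under these assumptions the traditional cost grows linearly with the node count, while the shared-storage cost stays flat as compute nodes are added.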

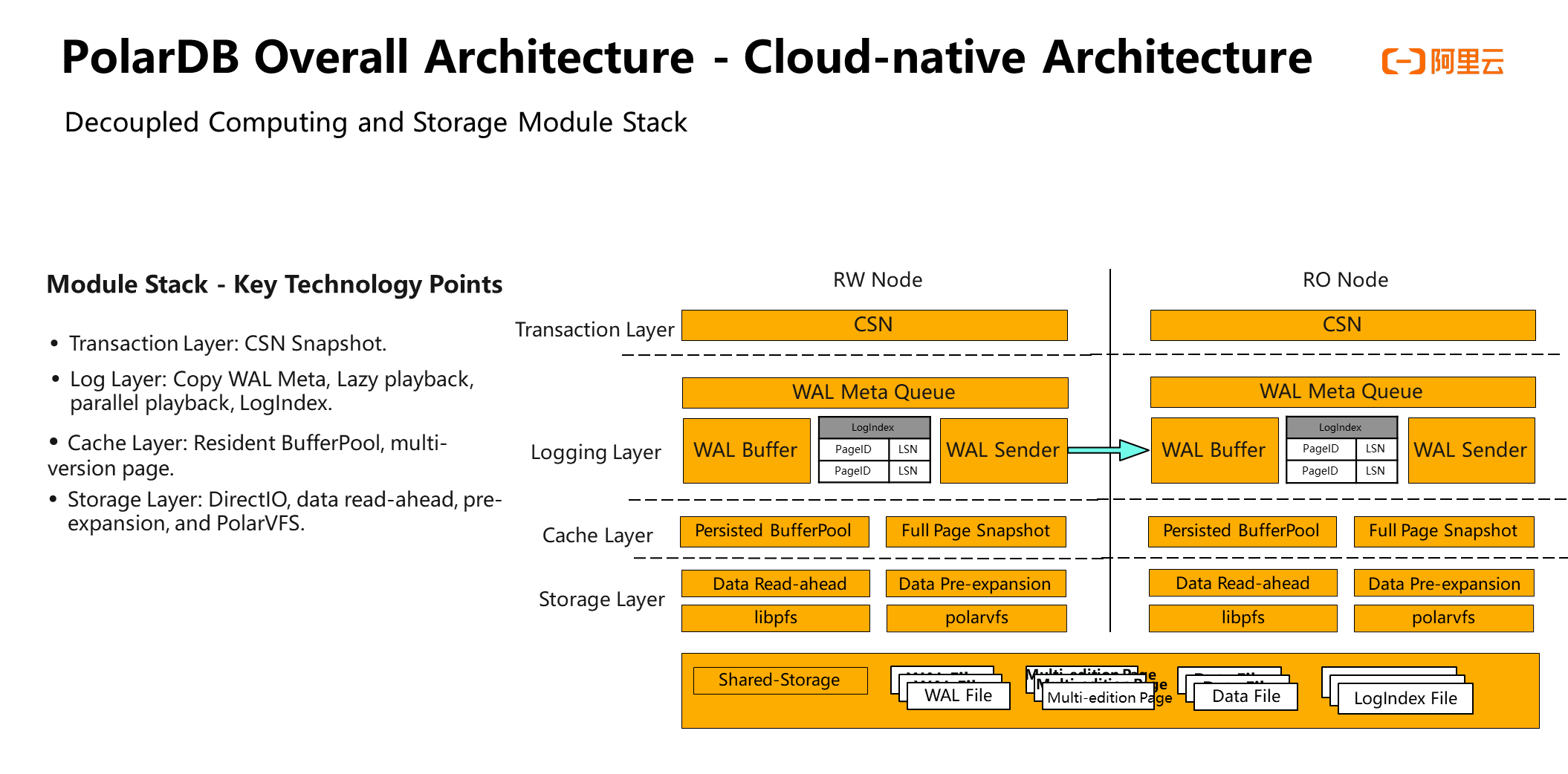

PolarDB not only designs and develops the compute-storage separation architecture but also optimizes each layer of the database module stack.

In the transaction layer, CSN snapshots replace traditional transaction snapshots. In the log layer, core data structures such as LogIndex solve the past-page and future-page problems that are unique to the compute-storage separation architecture, and enable delayed playback and parallel playback. In the cache layer, the resident BufferPool and multi-version pages are implemented. In the storage layer, read-ahead and pre-expansion are implemented for pages under the DirectIO model.
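To illustrate the idea behind LogIndex and delayed playback, here is a minimal sketch. It is not PolarDB's implementation: the class and function names are hypothetical, redo records are reduced to simple key/value updates, and only the "past page" case (a page that is older than the point the reader needs) is modeled. The index maps each page to the redo records that modified it, so a stale page can be brought up to date lazily, at the moment it is read.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Page:
    lsn: int = 0                                  # LSN of the last record applied
    values: dict = field(default_factory=dict)    # simplified page content


class LogIndex:
    """Maps a page id to the redo records that modified it, in LSN order."""

    def __init__(self):
        self._by_page = defaultdict(list)

    def append(self, lsn, page_id, key, value):
        # Assumption: records are appended in increasing LSN order.
        self._by_page[page_id].append((lsn, key, value))

    def records_for(self, page_id, after_lsn, up_to_lsn):
        """Records for one page with after_lsn < LSN <= up_to_lsn."""
        return [r for r in self._by_page[page_id] if after_lsn < r[0] <= up_to_lsn]


def read_page(page, page_id, index, read_lsn):
    """Delayed playback: apply only this page's pending records, only on read."""
    for lsn, key, value in index.records_for(page_id, page.lsn, read_lsn):
        page.values[key] = value
        page.lsn = lsn
    return page


if __name__ == "__main__":
    index = LogIndex()
    index.append(10, "p1", "a", 1)
    index.append(20, "p2", "x", 9)
    index.append(30, "p1", "a", 2)
    stale = Page()                     # a "past page" read from shared storage
    print(read_page(stale, "p1", index, read_lsn=30))
```

Because records are indexed per page, different pages can be replayed independently, which is what makes parallel playback possible in a design of this kind.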

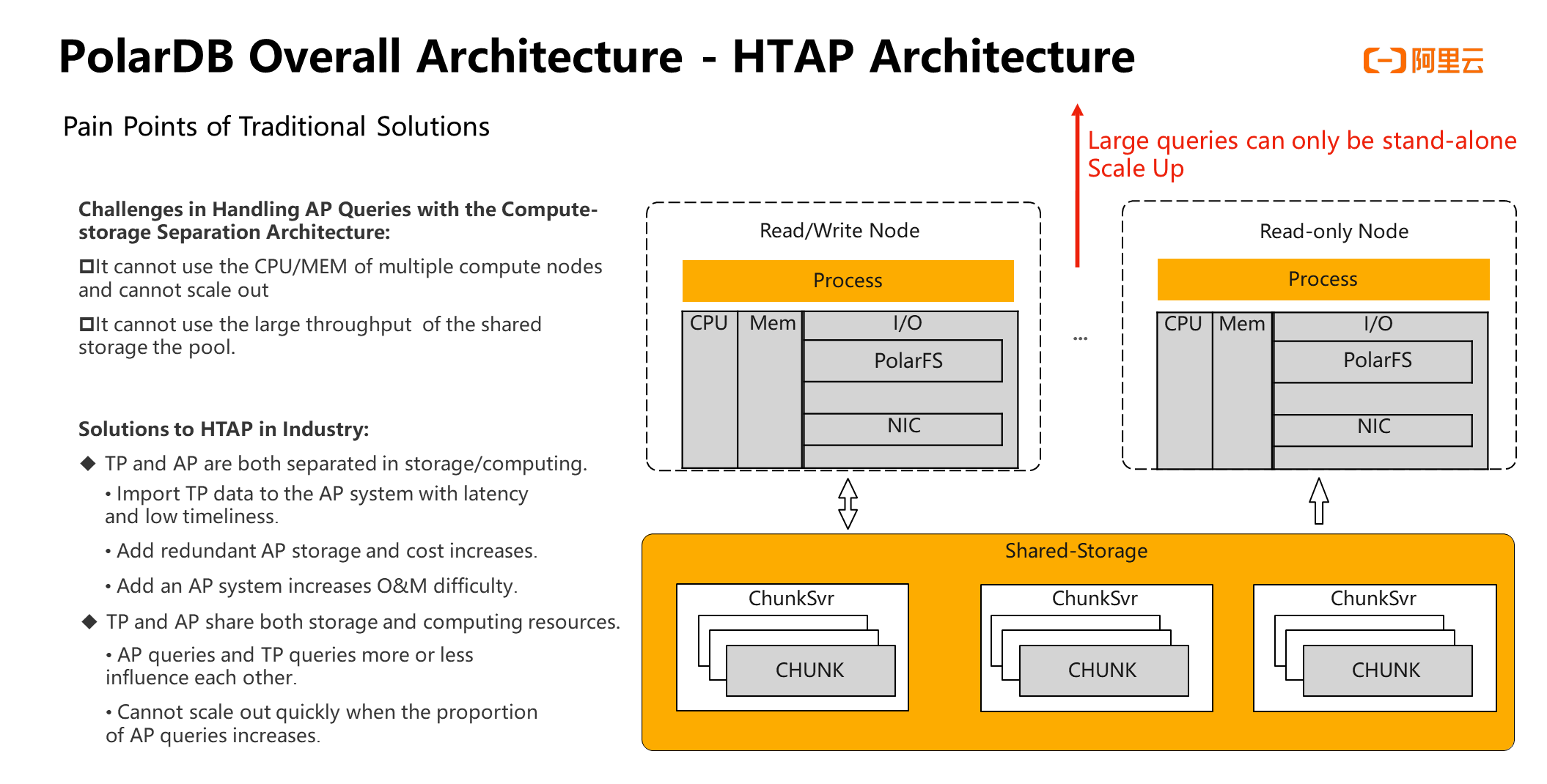

In addition, users often need to perform complex analyses and queries on TP transaction data at night, such as summary reports and reconciliation. Such queries are typically complex SQL statements, but the concurrency is not high, which is a typical OLAP scenario.

When the original compute-storage separation architecture of PolarDB processes such complex SQL statements, only a single compute node executes each statement. As a result, neither the overall computing power of the compute cluster nor the large bandwidth of the storage pool can be fully utilized.

The industry usually offers two types of solutions:

① Deploy an AP system outside the original TP system and import TP transaction data into the AP system through logs. The problem with this solution is that the latency between the two systems is relatively high, which results in low data freshness. In addition, deploying a separate AP system increases storage and O&M costs.

② Execute AP queries on the original TP system. However, the TP and AP workloads then inevitably affect each other, and the AP workload cannot be scaled out flexibly.

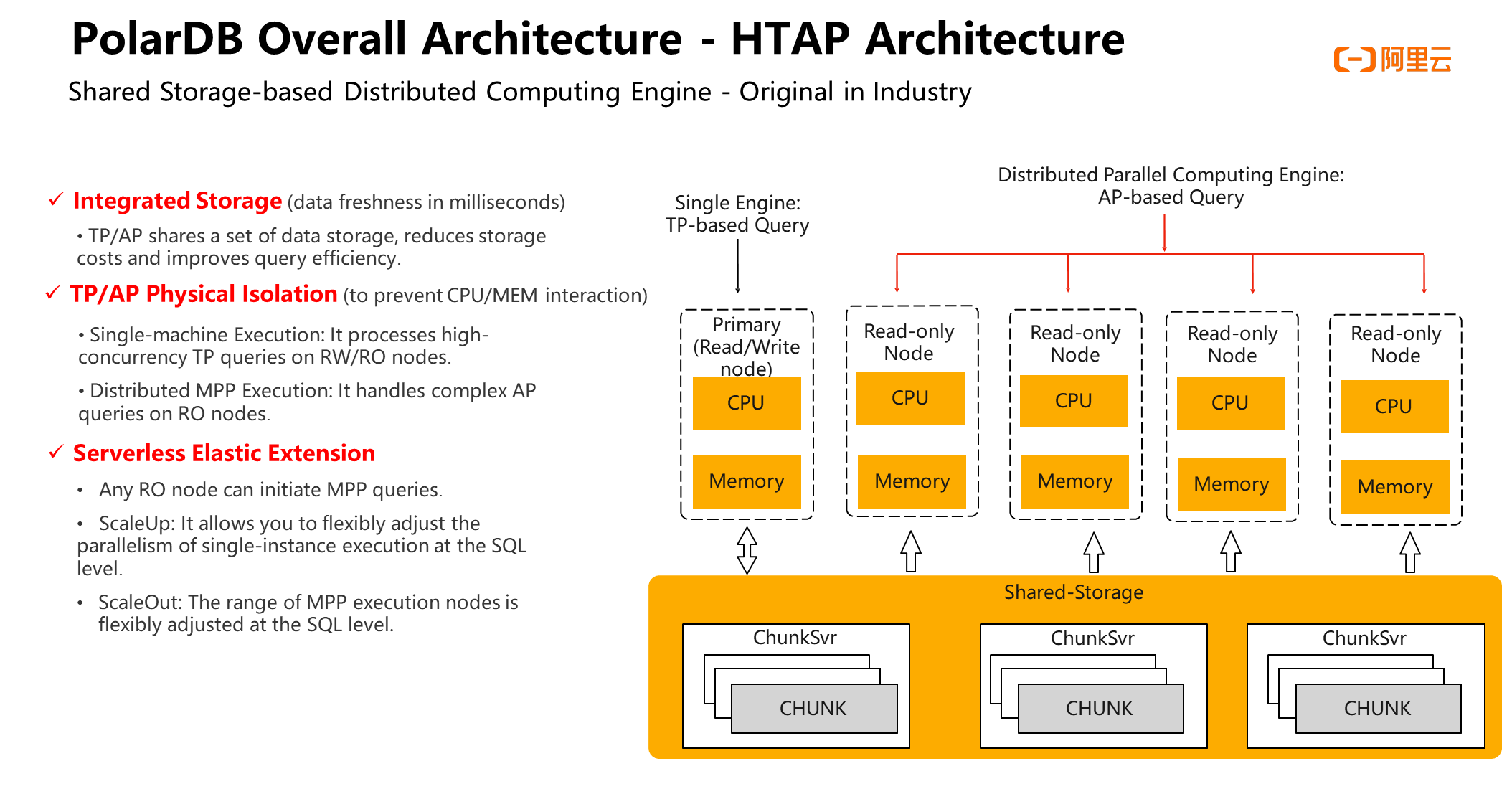

Therefore, PolarDB has developed a distributed computing engine based on shared storage, which is an industry-first solution. This solution has the following advantages:

① Integrated Storage: TP and AP share the data stored on the shared storage. Compared with running two separate systems, this reduces the storage cost and provides millisecond-level data freshness: a row inserted through the TP system can be queried by the AP system within milliseconds.

② Physical Isolation: TP and AP are physically isolated and do not affect each other. Some compute nodes run a standalone engine to process highly concurrent TP queries, while others run a distributed query engine to process complex AP queries.

③ Scaling Capability: When the system runs complex SQL statements and the computing power is insufficient, compute nodes can be added quickly, and the new nodes can quickly join the distributed computing engine cluster.

Compared with a traditional OLAP system, new nodes take effect immediately. Data does not need to be redistributed, and performance improves significantly.

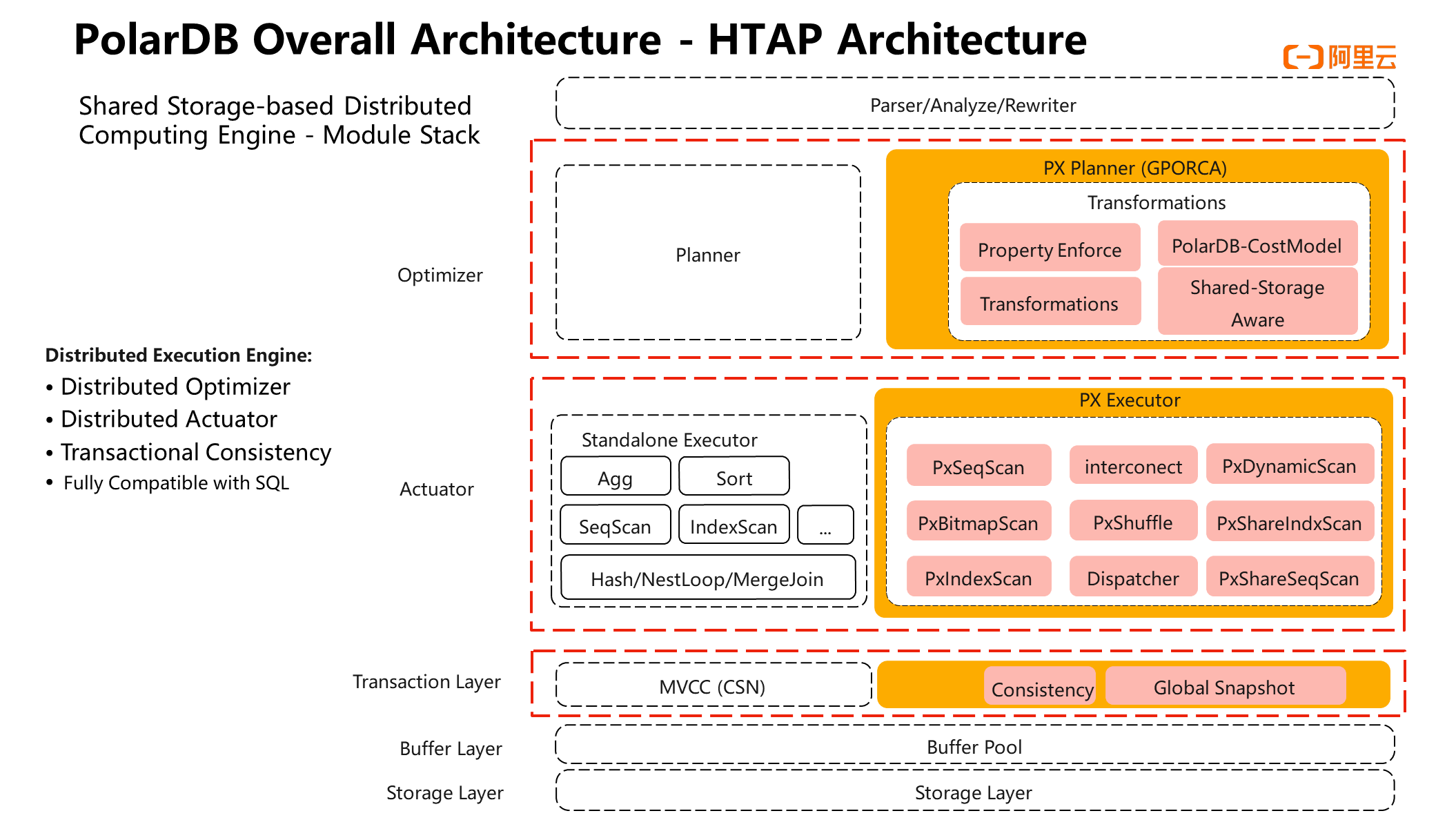

The following modules need to be implemented to implement a complete distributed computing engine on the shared storage:

① Distributed Optimizer: The optimizer generates a distributed execution plan based on the data distribution characteristics. PolarDB's optimizer is built on the GPORCA optimizer framework. GPORCA is designed for shared-nothing systems, whereas data in PolarDB is shared, so the optimizer must be made aware that data is shared and many rules must be added to adapt it to PolarDB.

② Distributed Executor: A complete set of parallelized operators is required to implement the distributed executor. For example, because the underlying data is shared in PolarDB, a sequential scan must be parallelized so that the compute nodes divide the scan work among themselves. These operators are eventually assembled into a volcano execution model.

③ Transaction Consistency: Since distributed execution spans multiple compute nodes, a unified data version and a unified snapshot must be used to determine the visibility of transactions, which ensures that the data queried by each node is globally consistent.

④ Fully Compatible SQL: A lot of compatibility development work is required for SQL standards to enable the new distributed computing engine to be used by users' businesses.
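The parallel sequential scan described for the distributed executor can be sketched as follows. This is an illustration under stated assumptions, not PolarDB's executor: the function names are hypothetical, the shared storage is modeled as an in-memory list of blocks that every worker can read, and threads stand in for compute nodes. Since every worker sees all blocks, the scan is parallelized by giving each worker a disjoint block range.

```python
from concurrent.futures import ThreadPoolExecutor


def block_ranges(total_blocks, workers):
    """Split [0, total_blocks) into contiguous, non-overlapping ranges."""
    base, extra = divmod(total_blocks, workers)
    ranges, start = [], 0
    for w in range(workers):
        size = base + (1 if w < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def scan_range(shared_blocks, block_range, predicate):
    """One worker scans only its own slice of the shared blocks."""
    lo, hi = block_range
    return [row for block in shared_blocks[lo:hi] for row in block if predicate(row)]


def parallel_seq_scan(shared_blocks, workers, predicate):
    """Run the per-range scans concurrently and concatenate the results."""
    ranges = block_ranges(len(shared_blocks), workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda r: scan_range(shared_blocks, r, predicate), ranges))
    return [row for part in parts for row in part]


if __name__ == "__main__":
    blocks = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]
    print(parallel_seq_scan(blocks, workers=2, predicate=lambda row: row % 2 == 0))
```

Note that the block ranges are computed at query time from the worker count. In a sketch like this, adding a worker changes only the ranges and moves no data, which mirrors the point made earlier that new nodes do not require data redistribution.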

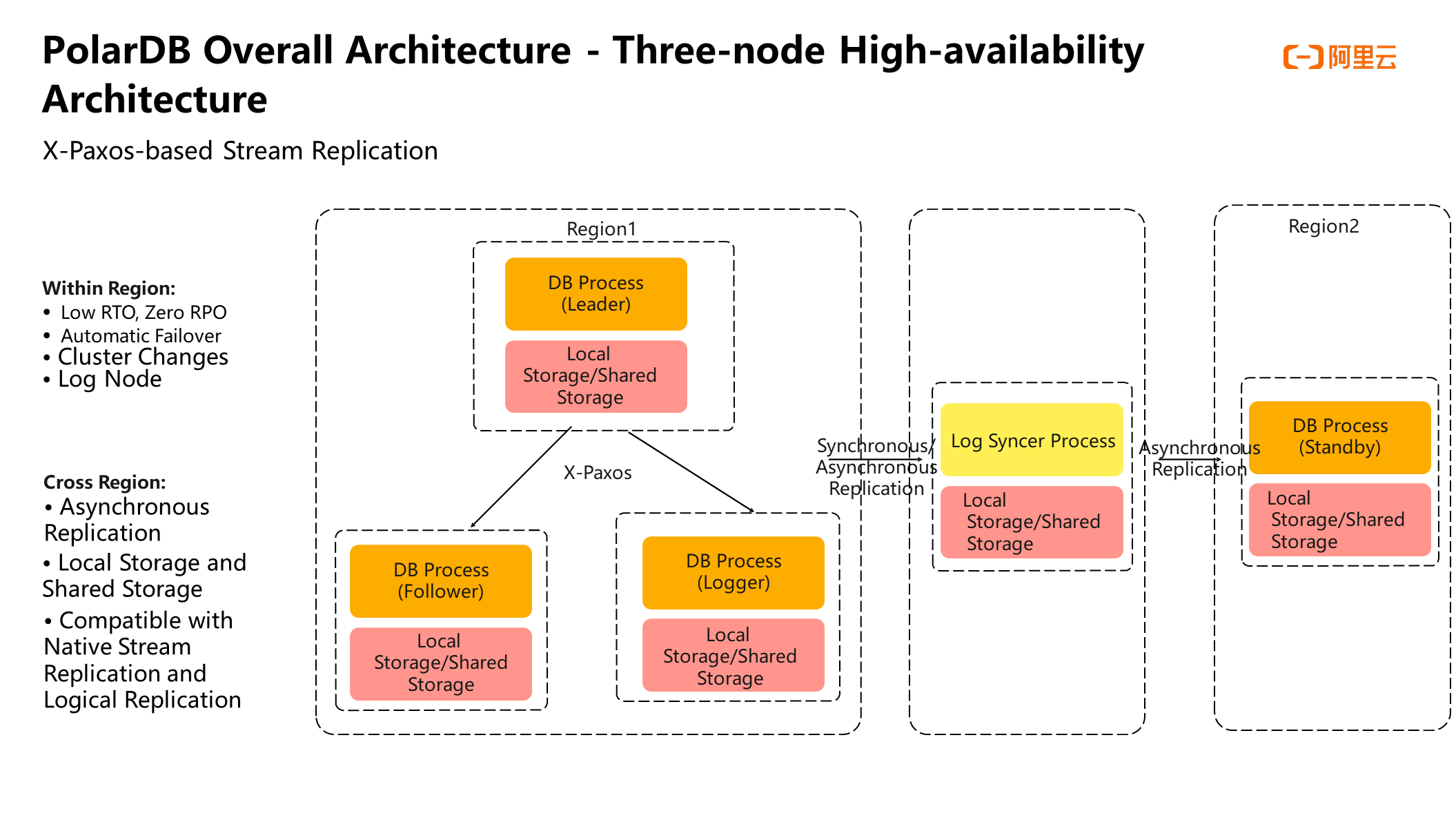

PolarDB can run on a shared storage device in compute-storage separation mode. PolarDB also supports a three-node high availability mode, which can run in local mode without relying on shared storage devices.

First, nodes replicate redo logs through the X-Paxos algorithm, which provides low latency and high availability with RPO=0 within a region.

Second, X-Paxos replication provides automatic failover. When the leader node goes down, the algorithm automatically elects a new leader and recovers without DBA intervention.

In addition, X-Paxos can be used to change cluster membership. PolarDB also implements log nodes, which store only redo logs and no data pages. Two normal nodes plus one log node form a 2.5-replica deployment that reduces costs.
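The 2.5-replica idea can be illustrated with the majority rule that Paxos-style protocols rely on. The sketch below is a deliberate simplification and not X-Paxos itself: the `Node` type and function names are hypothetical, and leader election, terms, and log matching are left out. It shows only that a log node counts toward the commit majority for redo logs even though it stores no data pages.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    stores_data_pages: bool   # False for a log node (redo logs only)
    up: bool = True


def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a majority."""
    return cluster_size // 2 + 1


def can_commit(nodes) -> bool:
    """A redo record is treated as durable once a majority has persisted it.

    Simplification: every node that is up is assumed to acknowledge the record.
    """
    acks = sum(1 for n in nodes if n.up)
    return acks >= majority(len(nodes))


def data_page_copies(nodes) -> int:
    """Full data copies in the cluster; log nodes contribute none."""
    return sum(1 for n in nodes if n.stores_data_pages)


if __name__ == "__main__":
    cluster = [Node("a", True), Node("b", True), Node("log", False)]
    print(can_commit(cluster), data_page_copies(cluster))
    cluster[0].up = False          # one normal node fails
    print(can_commit(cluster))     # the other normal node plus the log node remain
```

In this model the cluster tolerates the loss of any single node while paying for only two full copies of the data pages.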

In cross-region scenarios, log nodes are used to implement a highly available deployment across two regions and three data centers. As shown in the preceding figure, region 1 runs an independent X-Paxos three-node high availability cluster. Region 2 runs an independent DB deployment, and a log node is deployed in another data center in the same city. Synchronous or asynchronous replication can then be used between region 1 and the local log node. Because they are in the same city, the latency is relatively low, which realizes the two-region, three-data-center high availability deployment.

The system is also compatible with native streaming replication and logical replication. You can deploy a standard PostgreSQL database downstream to consume the redo logs.

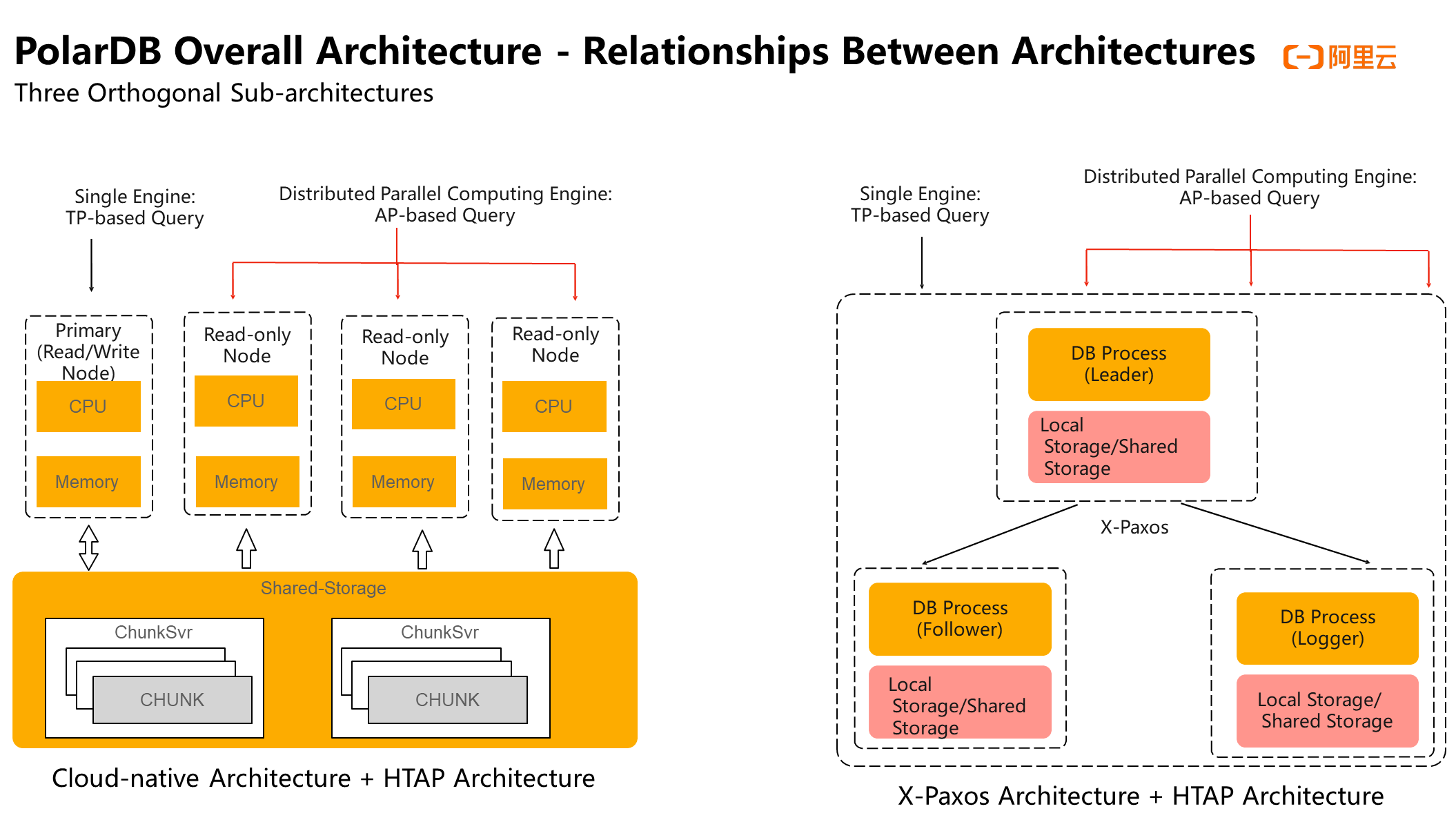

The three PolarDB architectures described above can be combined freely based on your business scenarios. For example, the combination of cloud-native and HTAP meets the needs for elasticity and for both TP and AP services. All three architectures are implemented in a single set of binaries, so users only need to make simple changes in the configuration file to combine them.

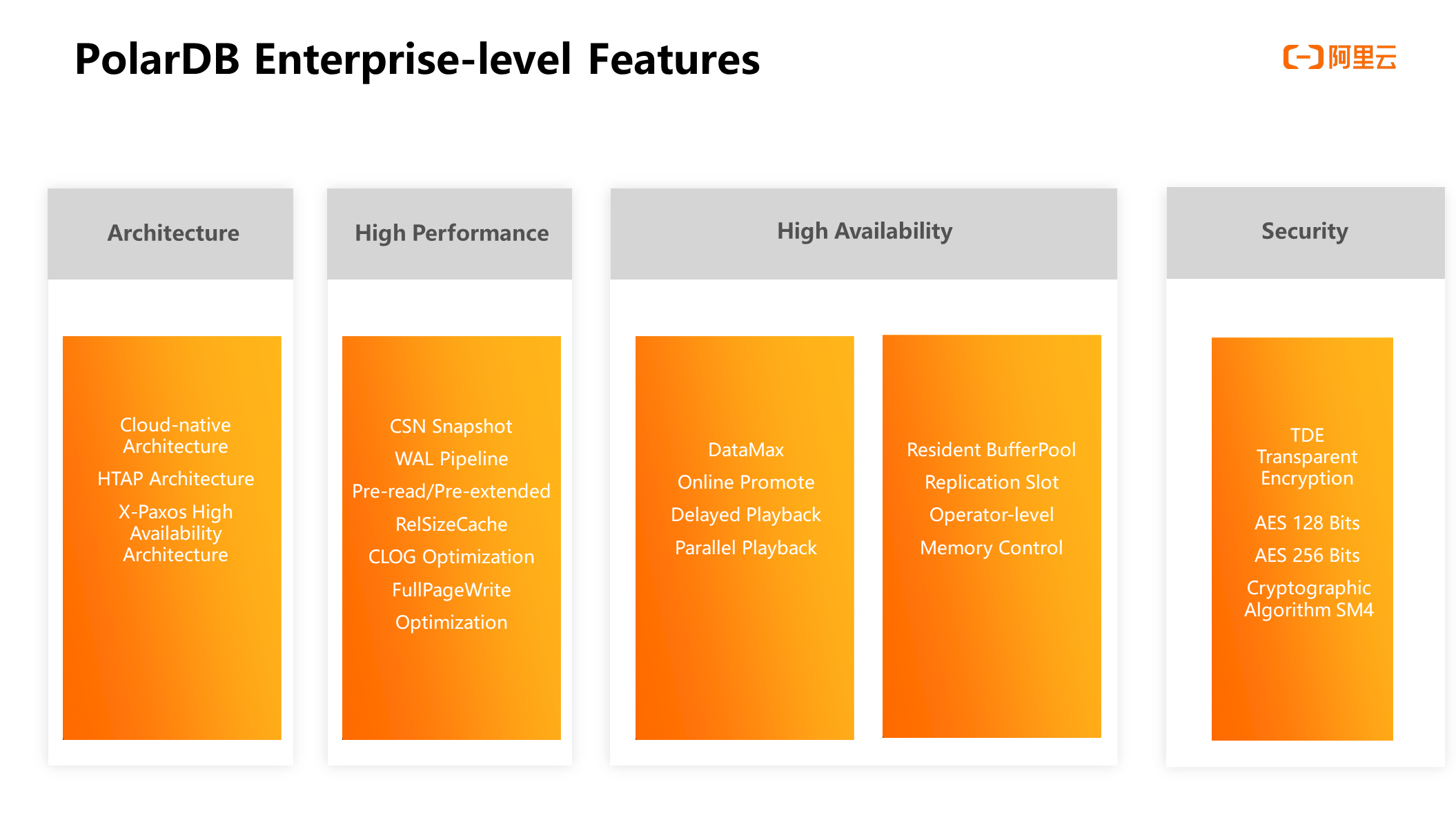

PolarDB provides four enterprise-level features:

① Architecture Support: The three architectures are explained in detail above.

② High Performance:

1) PolarDB implements CSN snapshots and a WAL log pipeline, which resolve the critical-section bottleneck under high concurrency.

2) It optimizes read-ahead, pre-expansion, RelSizeCache, and CLOG. These optimizations target I/O under the DirectIO model. After storage and computing are separated, each storage I/O must reach the backend storage pool over the network, which is different from the native scenario, so a lot of optimization work is needed.

3) The LogIndex core data structure records the redo logs that have modified each page over its history. It solves not only the data correctness problem of past pages and future pages, which is unique to compute-storage separation, but also the partial-write problem of the PostgreSQL database.

③ High Availability:

1) DataMax is implemented. It provides a log mode to support the two-region, three-data-center deployment. In addition, three major features (online promotion, delayed playback, and parallel playback) speed up crash recovery and shorten the unavailable time when the DB process crashes.

2) The resident BufferPool is implemented. After the DB process restarts, the buffer normally has to be reinitialized. As machine configurations grow, buffers become larger and larger, so this initialization takes a lot of time. The resident BufferPool avoids this cost by keeping the buffer available across restarts of the DB process.

3) It preserves replication slots across DB failover. Slot information is stored on the shared storage, which solves the problem of replication slot loss.

4) It implements operator-level memory control and sets an upper limit for the memory of each operator to prevent the entire DB process from crashing due to excessive memory of a single operator.

④ Security: PolarDB provides transparent encryption to ensure that data stored on disks is encrypted. Currently, transparent encryption supports the AES 128-bit, AES 256-bit, and SM4 encryption algorithms.
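The CSN snapshots mentioned under High Performance can be illustrated with a generic sketch of the commit sequence number idea. This is not PolarDB's code: the class and method names are hypothetical, and subtransactions, aborts, and concurrency control are ignored. The point is that a snapshot is a single number rather than a list of in-progress transactions, so taking one requires no scan of the active transaction set.

```python
class CsnLog:
    """Assigns a monotonically increasing commit sequence number (CSN) per commit."""

    def __init__(self):
        self._next_csn = 1
        self._commit_csn = {}   # transaction id -> CSN; absent while in progress

    def commit(self, xid):
        self._commit_csn[xid] = self._next_csn
        self._next_csn += 1

    def take_snapshot(self):
        # The snapshot is just the CSN that the next commit will receive.
        return self._next_csn

    def is_visible(self, xid, snapshot_csn):
        """A transaction is visible if it committed before the snapshot was taken."""
        csn = self._commit_csn.get(xid)
        return csn is not None and csn < snapshot_csn


if __name__ == "__main__":
    log = CsnLog()
    log.commit(100)
    snapshot = log.take_snapshot()
    log.commit(101)                       # commits after the snapshot
    print(log.is_visible(100, snapshot))
    print(log.is_visible(101, snapshot))
```

A visibility check in this model is a single comparison per transaction, which is one reason CSN-based designs reduce contention compared with snapshots that copy the list of running transactions.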

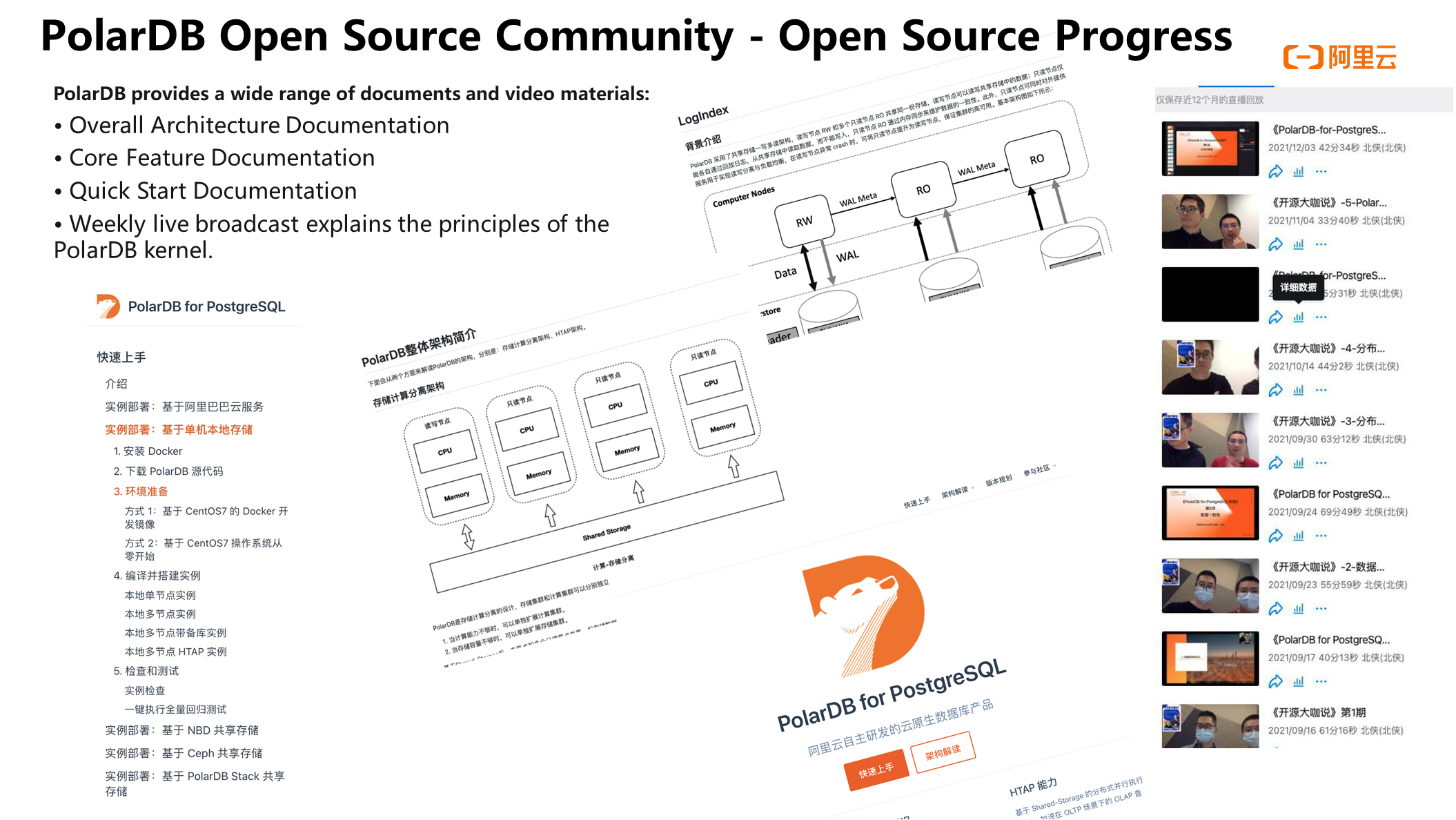

PolarDB is open-source on GitHub.

The source code repository address: https://github.com/ApsaraDB/PolarDB-for-PostgreSQL

In the open-source process, we insist on being 100% compatible with PostgreSQL (community standard) to ensure that users can seamlessly migrate from standard standalone PostgreSQL to PolarDB. We open-sourced all components, including the PolarDB kernel, PolarDB distributed file system, and PolarDB cloud control. The open-source code is the same as the code of the public cloud.

In addition to sharing the code that runs on the cloud, we provide a wide range of documents and video materials, such as architecture documents, core function documents, and quick start documents.

Analyze the Technical Essentials of PolarDB at the Architecture Level

Alibaba Cloud Community - December 20, 2022
