Alibaba Cloud Data Transmission Service (DTS) is a convenient and efficient service for data migration and synchronization. Generally, a complete DTS data migration task includes several stages: pre-check, structure migration, full data migration, and incremental migration. The full data migration stage involves transferring all existing data from the source database to the target database. Given various user scenarios, this article focuses on how to accelerate full data migration using Alibaba Cloud DTS to shorten migration time and ensure the efficiency and stability of data migration.

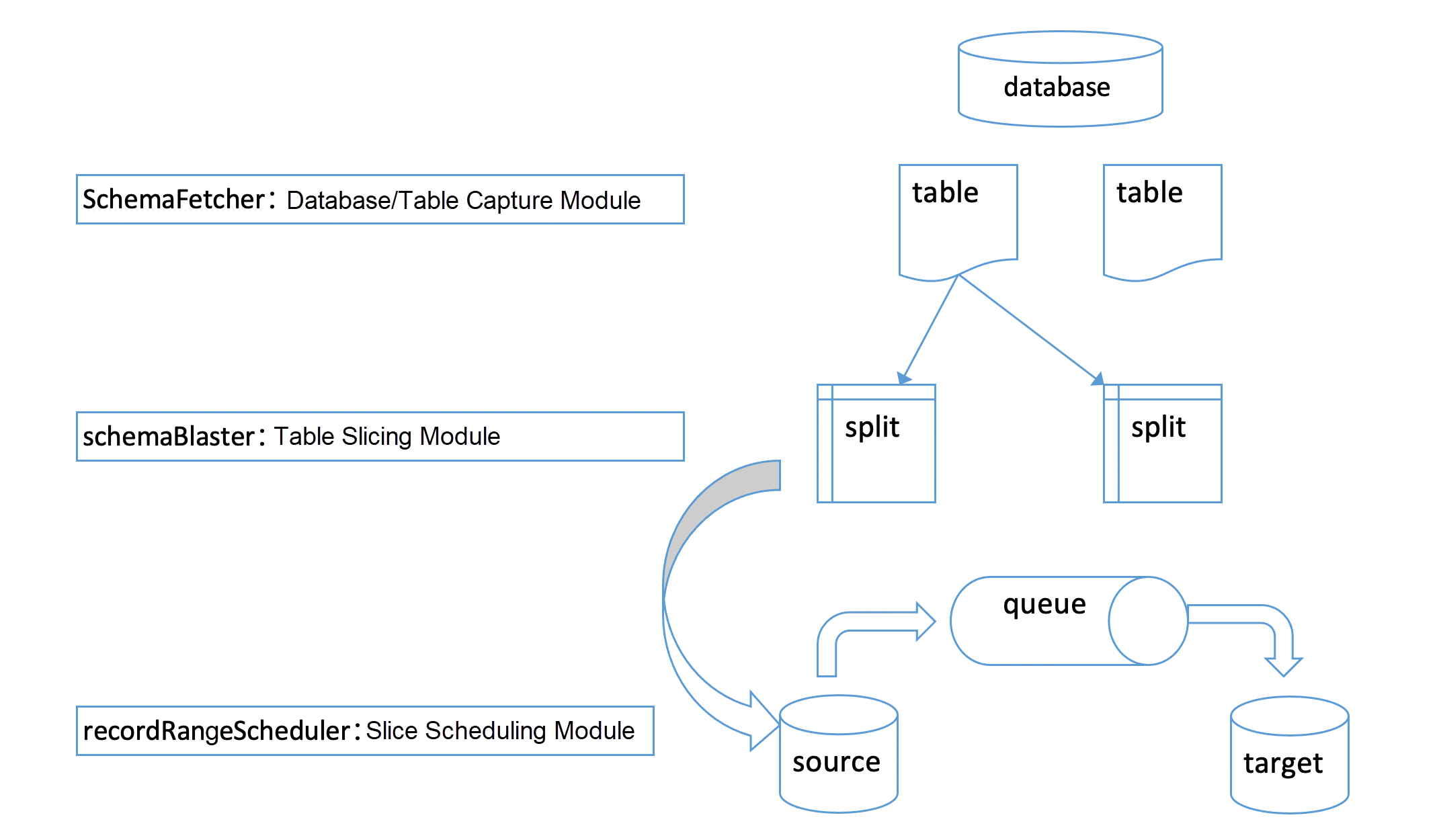

To accelerate DTS full data migration, it is essential to understand how DTS full data migration works. As shown in the following figure, DTS full data migration consists of three main modules: the database/table capture module, the table slicing module, and the slice scheduling module. The database/table capture module retrieves the databases and tables to be migrated based on the dblist and fetches the table structures from both the source and target databases. The table slicing module divides each table into fixed-size slices according to a certain size. The slice scheduling module initiates multiple source threads to pull data corresponding to each slice from the source database and places it into the memory queue inside DTS. It then starts multiple sink threads to pull the data corresponding to each slice from the memory queue and write it to the target database.

According to experience in serving thousands of users, the most common reasons for slow full data migration are as follows:

• Users perceive the full data migration as slow, but the actual task is proceeding normally; they just have a large amount of data and wish to speed up the process.

• The task has actually encountered errors, but because it is retrying, the task has not failed, and the full data migration progress has stopped. Users mistakenly think the full data migration is slow.

o Users' tasks may be affected by special scenarios, such as large fields or high RT at the target end, leading to unexpected full data migration rates.

For the first issue, the following sections will discuss some common methods to accelerate full data migration. You can try these methods. For the second issue, since the task has already failed, you can wait for the retries to stop and then follow the error prompts on the control panel to take appropriate actions to resume the task. Alternatively, you can submit a ticket for assistance from support staff. For the third issue, we need to first identify the cause of the slow full data migration by examining the DTS task logs and the state of the databases, and then apply targeted acceleration strategies.

According to the migration principles described earlier, full data migration primarily involves fetching the database/table structure, slicing tables, pulling data from the source database, and writing data to the target end. The reasons for slow full data migration can be analyzed from these four aspects.

Generally, fetching table structures does not take long, but there are exceptions. To speed up the process of fetching table structures, DTS currently fetches them in batches from the relevant views in information_schema. However, when the number of tables to be migrated is extremely large, for example, in the tens of thousands, the SQL command used by DTS to fetch these structures in batches may get stuck. In such cases, the only current solution is to submit a ticket, and DTS support staff will switch to fetching table structures individually.

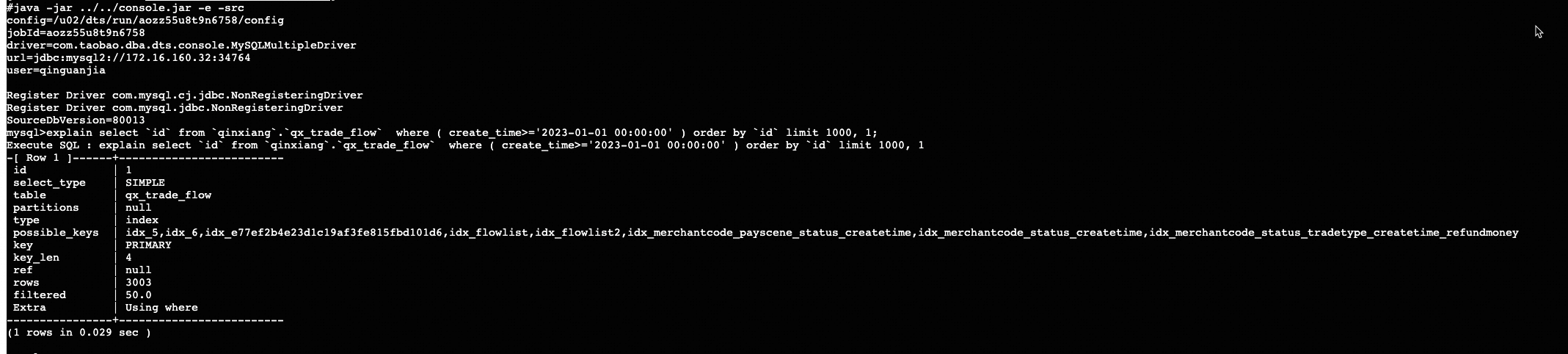

The reason for the slow slicing is that DTS is slow when executing SQL commands to obtain the left and right boundaries of the slice. A common scenario is when users configure filters to migrate only data that meets specific conditions to the target end. When DTS slices tables, it adds this filter condition to the WHERE clause of the slicing SQL command, which can change the execution plan and make the command slow. For example, if a user wants to migrate only data after 2023, the slicing SQL command first sorts by the primary key (ORDER BY) and then sequentially scans the primary key until the number of rows where create_time >=' 2023-01-01 00:00:00 reaches 1,000. Since the distribution of create_time >=' 2023-01-01 00:00:00 tends to be toward the end of the table, the slicing SQL command ends up performing nearly a full table scan, making it slow. Currently, the only solution for this case is to submit a ticket, and DTS support staff will switch to slicing without adding the filter condition.

There are many reasons why data retrieval can be slow, such as a self-managed source database with high RT, or tables with large fields such as text, longtext, and blob, which can lead to longer execution times for SQL commands run by DTS to retrieve data from the source database. You can connect to the source database from the DTS server and execute some simple SQL commands to estimate the RT of the source end. If the high RT is due to network issues, you can ask the user to increase the bandwidth of their dedicated line or consult with network specialists to diagnose the problem. If the high RT is due to the database, then database support staff need to intervene and investigate. If the source database tables contain large fields, you can adjust the slice size and the number of records written per batch. By observing the RPS or BPS metrics of the full data migration and making adjustments, the goal is to ensure that the SQL commands can be executed smoothly and that OOM issues do not occur during full data migration, while maximizing the BPS or RPS as much as possible.

Slow data writing is a common issue, which can be due to the database, the network, or unreasonable default parameter settings in DTS for specific scenarios. You can check the time taken by the sink thread to execute SQL commands on the task process page in Numen. Additionally, you can monitor the DTS logs to determine the time taken to write a batch. Based on the execution time of the sink SQL commands, you can determine whether slow data writing is the reason for slow full data migration. If it is confirmed that data writing is slow, you can perform checks on the target database, such as verifying if the disk space is full, if the target database has reached its performance limits, checking the RT of the target end, identifying any table locks, and assessing if the tables are wide. Alternatively, you can refer to the following methods to accelerate full data migration by observing the RPS or BPS metrics and making adjustments as needed.

Based on the past support experience, you can try the following methods to accelerate full data migration:

• If slow slicing is caused by filters, contact support staff to switch to slicing SQL commands without filter conditions.

• When there are few tables left to migrate, set source.polling.schema.max.missed.times to 1 to reduce the wait times of load balancing.

• If the task gets stuck during slicing but individual slices execute quickly, increase the number of slicing threads with dts.datamove.record.blasters.

• In batch mode, you can try increasing the number of read and write threads and the size of each batch to speed up full data migration:

o Number of read threads: dts.datamove.record.pumper.readers

o Number of write threads: sink.task.number

o Minimum batch size: dts.datamove.record.batch.size.min

o Maximum batch size: dts.datamove.record.batch.size.max

• In bulk copy mode, you can try increasing the mysql.bulk.copy.rows.per.transaction parameter to load more data per transaction.

• When DTS tasks are limited by specifications, you can try increasing the memory size and CPU limit for full data migration tasks to speed up the process.

• In extremely accelerated full data migration scenarios, you can switch DTS tasks to high-specification oxs machines, which will be faster than vpc machines.

Data Transmission Service (DTS) is the best choice for data migration. It supports various database engines to meet the needs for single way and bi-directional synchronization. If you would like to know more about best practices over database migration and synchronization, check out Database Migration on our website.

In-depth Comparison between MySQL MGR and Alibaba Cloud PolarDB-X Paxos

ApsaraDB - March 15, 2024

Alibaba Cloud Indonesia - August 12, 2024

Alibaba Cloud Community - January 4, 2026

ApsaraDB - November 7, 2025

ApsaraDB - July 12, 2023

Ali Ali - May 15, 2025

Data Transmission Service

Data Transmission Service

Supports data migration and data synchronization between data engines, such as relational database, NoSQL and OLAP

Learn More Time Series Database (TSDB)

Time Series Database (TSDB)

TSDB is a stable, reliable, and cost-effective online high-performance time series database service.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn MoreMore Posts by ApsaraDB