Author: Wang Chen

In classic application architecture, a "gateway" often signifies unified access for user requests, authentication, flow control, protocol transformation, and other functions. Gateways like Nginx, Envoy, and Kong are typical representatives of such capabilities. Whether in microservices architecture or cloud-native architecture, the usage logic for these products is relatively straightforward, and the selection criteria are also quite stable.

However, in the era of AI applications, the once-clear definition of a "gateway" is being reshaped. For example, OpenRouter, which has recently grown popular and previously described itself as an LLM Marketplace, has begun positioning itself as an "AI Gateway."

This marks three significant changes:

This places a cognitive burden on developers and enterprise users. In the past, choosing a gateway meant comparing a handful of hard criteria: the standards it follows, its kernel, performance, plugin system, upstream and downstream integration capabilities, observability, and other enterprise-level capabilities. Today, different types of AI Gateway have to be told apart by looking at who uses them and for what. This article clarifies how these two forms of AI Gateway, represented by OpenRouter and Higress, relate to and differ from each other, covering their background, development history, positioning, and functions.

The evolution of every technology product is shaped by its initial form and by its customers' needs, and AI Gateways are no exception. Understanding where Higress and OpenRouter came from and how they evolved therefore helps explain how the two differ today.

OpenRouter launched in 2023 to solve a tedious problem: invoking several mainstream large models and comparing how their APIs perform. It wrapped the APIs of mainstream model vendors such as OpenAI, Anthropic, Google, DeepSeek, Qwen, and Kimi, consolidating previously scattered and structurally inconsistent invocation methods into a single standard (mostly following OpenAI's interface specification). It also provides a single API key management entry point, so developers can call models from multiple vendors through one account. Developers can either bring their own vendor API keys (BYOK) or top up credits and use the platform-provided key (with a 5% service fee).
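To make the idea of a "unified standard" concrete, here is a minimal sketch (not OpenRouter's implementation) that normalizes differently shaped vendor responses into one OpenAI-style body. The field paths follow the publicly documented response formats of OpenAI Chat Completions, the Anthropic Messages API, and Gemini `generateContent`; the function names `extract_text` and `to_openai_shape` are hypothetical.

```python
from typing import Any, Dict


def extract_text(vendor: str, response: Dict[str, Any]) -> str:
    """Return the text output from a vendor-specific response body."""
    if vendor == "openai":
        # OpenAI Chat Completions: choices[0].message.content
        return response["choices"][0]["message"]["content"]
    if vendor == "anthropic":
        # Anthropic Messages API: a list of typed content blocks
        return "".join(
            block["text"] for block in response["content"] if block.get("type") == "text"
        )
    if vendor == "google":
        # Gemini generateContent: candidates[0].content.parts[*].text
        parts = response["candidates"][0]["content"]["parts"]
        return "".join(part.get("text", "") for part in parts)
    raise ValueError(f"unsupported vendor: {vendor}")


def to_openai_shape(vendor: str, model: str, response: Dict[str, Any]) -> Dict[str, Any]:
    """Wrap any supported vendor's output in a minimal OpenAI-style body."""
    return {
        "object": "chat.completion",
        "model": model,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": extract_text(vendor, response)},
                # Simplification: a real adapter would map each vendor's stop reason
                "finish_reason": "stop",
            }
        ],
    }


if __name__ == "__main__":
    messages_style = {"content": [{"type": "text", "text": "Hello from a Messages-style body"}]}
    print(to_openai_shape("anthropic", "example/model", messages_style)["choices"][0]["message"]["content"])
```

A real aggregator also has to translate requests, streaming chunks, tool calls, usage counts, and error codes; this sketch covers only plain text output.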

Fast model invocation and the rapid availability of newly popular models are its core advantages, and they directly address the pain points of the programming community.

Starting from simple unified interface integration, OpenRouter gradually added load balancing between models, ongoing call-performance optimization, call-log queries, API key permission scoping, model availability monitoring, and more. In doing so it evolved from a "model aggregator" into a lightweight "model invocation gateway." By late 2024, OpenRouter explicitly described itself as an "AI Gateway" and extended its capabilities to include model load balancing, rate limiting, and prompt caching, bringing it closer to a traditional gateway.
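Routing across models is one of the gateway-like capabilities mentioned above. The sketch below shows one simple form of it, ordered client-side failover: try the preferred model first and fall back to the next one on error. This is an illustration only, not how OpenRouter routes requests server-side; `call_with_fallback`, `AllModelsFailed`, and the model IDs are hypothetical.

```python
from typing import Callable, List, Tuple


class AllModelsFailed(Exception):
    """Raised when every model in the fallback chain has failed."""


def call_with_fallback(
    models: List[str],
    prompt: str,
    call: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order; return (model, output) from the first success.

    `call(model, prompt)` is a hypothetical function that sends one request and
    raises an exception on failure (timeout, rate limit, provider outage).
    """
    errors: List[str] = []
    for model in models:
        try:
            return model, call(model, prompt)
        except Exception as exc:  # record the failure and try the next model
            errors.append(f"{model}: {exc}")
    raise AllModelsFailed("; ".join(errors))


if __name__ == "__main__":
    def fake_call(model: str, prompt: str) -> str:
        # Simulate an outage on the first-choice model
        if model == "vendor-a/model-x":
            raise TimeoutError("upstream timeout")
        return f"[{model}] {prompt}"

    print(call_with_fallback(["vendor-a/model-x", "vendor-b/model-y"], "hi", fake_call))
```

Failover optimizes for availability; a load balancer that spreads traffic by weight or latency would replace the fixed order with a selection policy.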

The core driving force behind this evolution comes from the genuine needs of enterprise-level customers: calling multiple models with minimal integration cost, experimenting with model output quality, managing token costs, and so on. OpenRouter has therefore consistently focused on "simplifying the invocation experience," which has produced its current form: model aggregation plus a lightweight gateway.

In contrast to OpenRouter's "new species" characteristics, Higress represents the classic route of gateway evolution, developing gradually alongside application architecture.

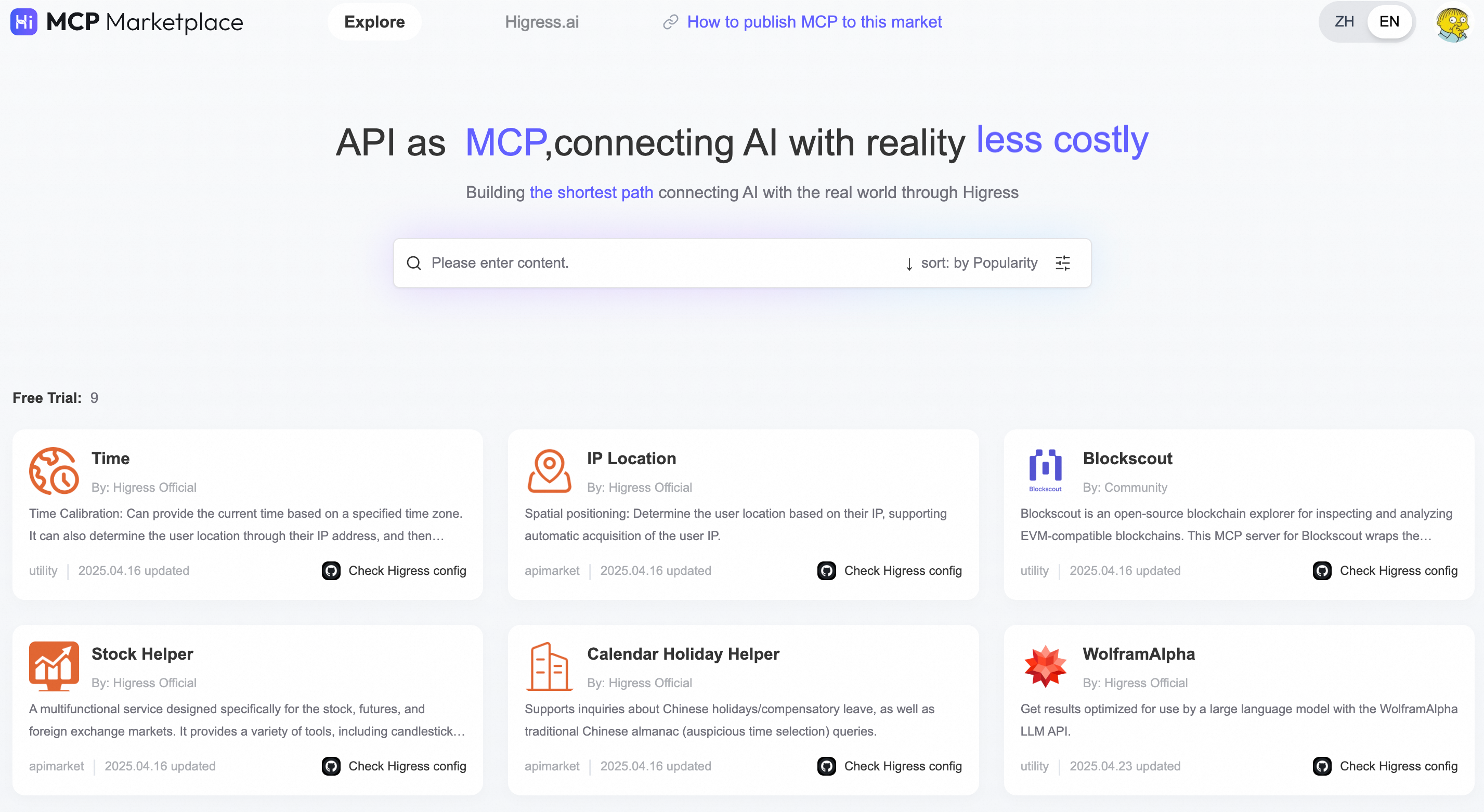

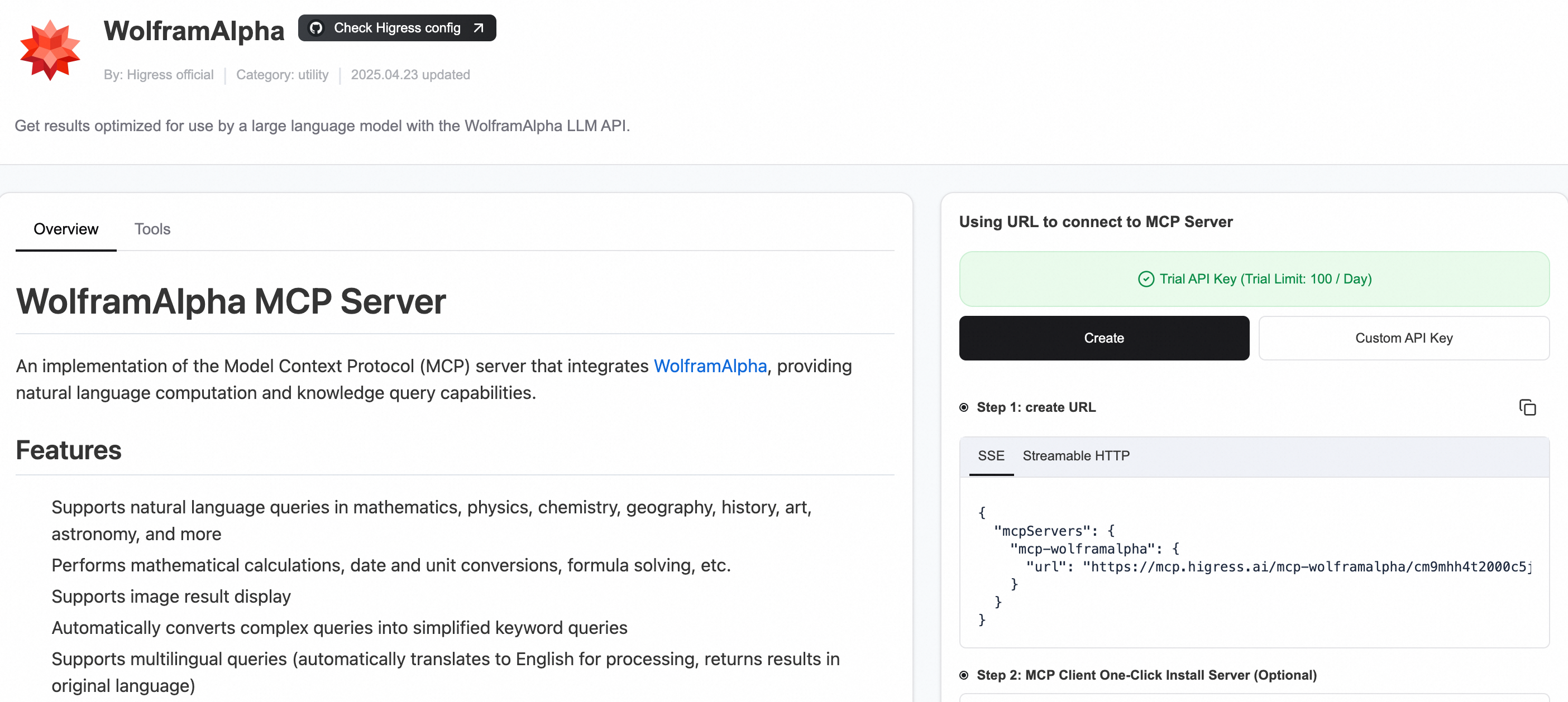

Higress was open-sourced in 2022, initially as a gateway for cloud-native scenarios. It combines Istio's service governance philosophy with Envoy's high-performance data plane and achieves strong extensibility through a WebAssembly plugin architecture. In 2024, as the wave of AI applications arrived, Higress released v1.4 and became the first gateway in China to offer large-model capabilities such as model proxying, security protection, access authentication, observability, caching, and prompt engineering, quickly drawing the attention of AI developers. In 2025, it expanded its open-source offering further: existing APIs can be converted to MCP Servers in bulk without writing code, and MCP protocol handling can be offloaded to the gateway, removing the maintenance work that each new MCP version would otherwise require. It also launched the Higress MCP Marketplace, becoming the only open-source gateway solution for MCP scenarios in China.
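Caching is one of the large-model capabilities listed above. As a rough illustration of the idea (not Higress's plugin code), here is a minimal exact-match response cache with a time-to-live, keyed on a hash of the model name and message list. The class name `ExactMatchCache` and its parameters are hypothetical, and production AI caches often also match semantically similar prompts, which this sketch does not attempt.

```python
import hashlib
import json
import time
from typing import Callable, Dict, List, Optional, Tuple

Messages = List[Dict[str, str]]


class ExactMatchCache:
    """In-memory cache of completion text keyed on (model, messages), with a TTL."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def key(model: str, messages: Messages) -> str:
        # Canonical JSON (sorted keys) so identical requests hash identically
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, model: str, messages: Messages) -> Optional[str]:
        k = self.key(model, messages)
        entry = self._store.get(k)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[k]  # expired: evict and report a miss
            return None
        return value

    def put(self, model: str, messages: Messages, value: str) -> None:
        self._store[self.key(model, messages)] = (self.clock(), value)
```

A gateway would consult `get` before forwarding a request upstream and call `put` after a successful response, trading freshness (bounded by the TTL) for fewer paid model calls.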

The evolution of Higress is an extension driven by upgrades in application architecture. Rather than starting from scratch, it integrates AI capabilities into the foundation of a cloud-native gateway through plugins and integration mechanisms. This approach fits naturally with the need to bring AI into existing applications, which makes it friendlier to enterprise customers.

Although both OpenRouter and Higress promote themselves as "AI Gateways," in terms of product positioning and functions they are not direct competitors. Each has deconstructed and rebuilt "gateway" capabilities from a different starting point.

In summary: OpenRouter is designed for programmers to invoke AI services, while Higress is built for enterprises delivering AI applications. Next, we will explore the positioning and core functions of each project based on their user interfaces.

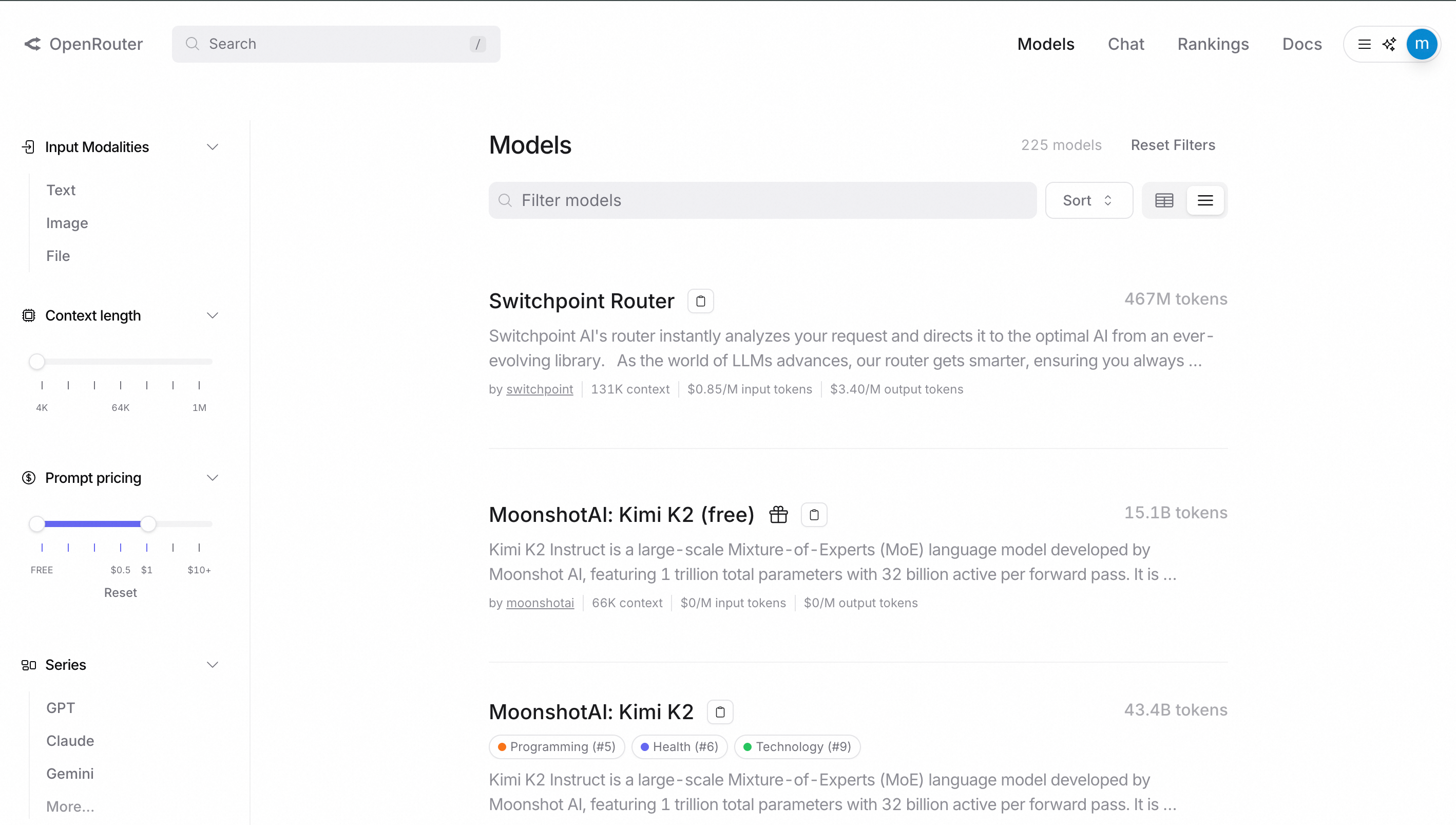

OpenRouter's positioning revolves around "standardized model invocation experience", continuously providing value-added SaaS services. Functionally, OpenRouter focuses on two core dimensions: model aggregation (Model) and invocation experience (Chat).

Model Aggregation (Model): It supports many model vendors behind a unified API, giving users access to hundreds of AI models through a single endpoint, and offers UI-based filters for models such as input type, supported context length, and unit price.

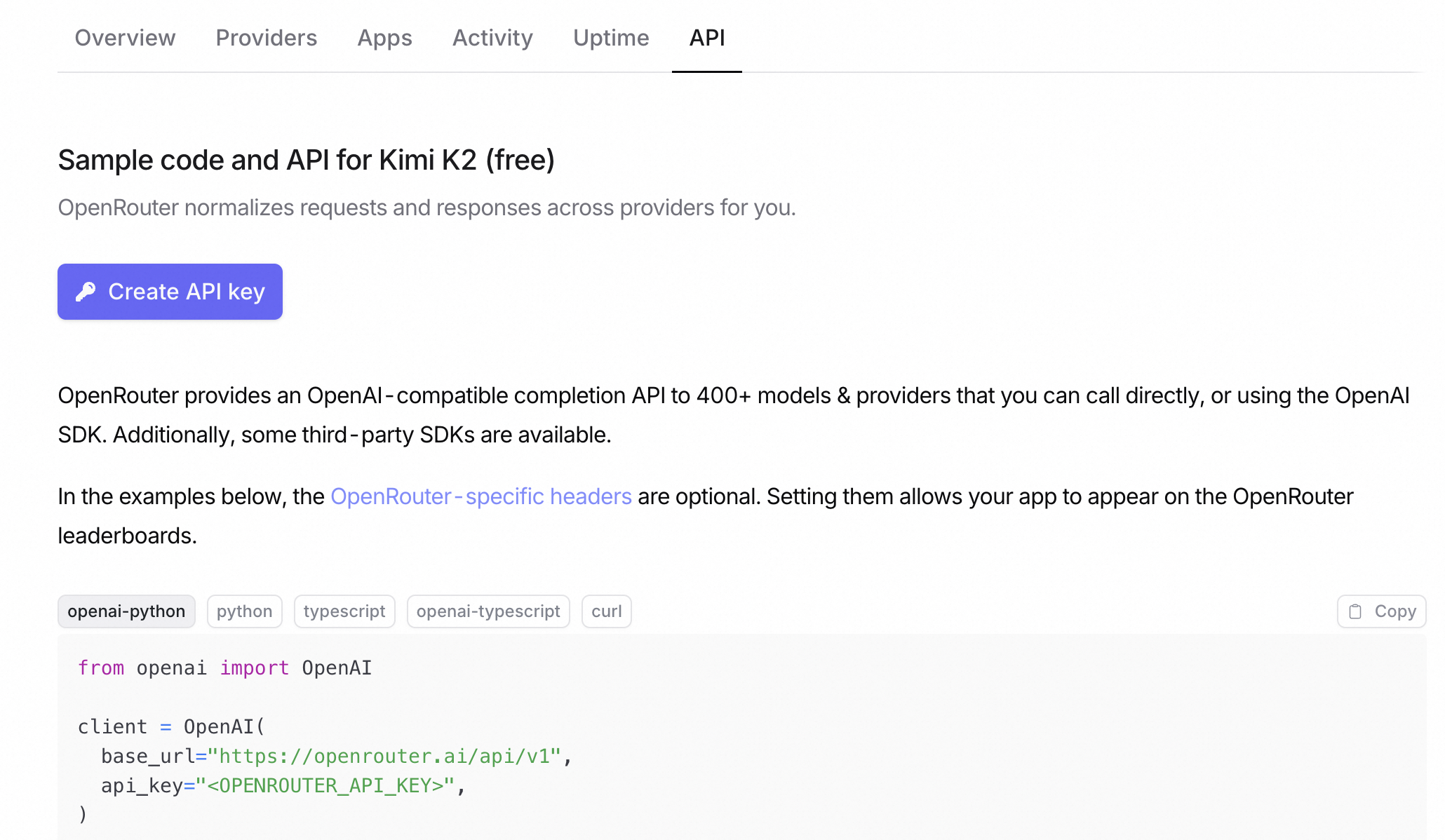
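The filters described above amount to a simple query over model metadata. The sketch below shows that logic under assumed field names; `ModelInfo`, its fields, and the per-million-token price unit are hypothetical and do not reproduce OpenRouter's actual schema.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ModelInfo:
    # Hypothetical metadata fields, chosen for illustration
    model_id: str
    input_modalities: FrozenSet[str]
    context_length: int           # maximum context window, in tokens
    input_usd_per_mtok: float     # price per million input tokens (assumed unit)


def filter_models(models: List[ModelInfo], modality: str,
                  min_context: int, max_input_price: float) -> List[ModelInfo]:
    """Keep models that accept `modality`, offer at least `min_context` tokens,
    and cost at most `max_input_price`; return them cheapest first."""
    matches = [
        m for m in models
        if modality in m.input_modalities
        and m.context_length >= min_context
        and m.input_usd_per_mtok <= max_input_price
    ]
    return sorted(matches, key=lambda m: m.input_usd_per_mtok)
```

Sorting by price after filtering mirrors the common workflow of narrowing by hard requirements (modality, context) first and then optimizing for cost.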

OpenRouter provides OpenAI-compatible completion APIs for more than 400 models across many vendors; they can be called directly over HTTP or through the OpenAI SDK. Several third-party SDKs are also available.

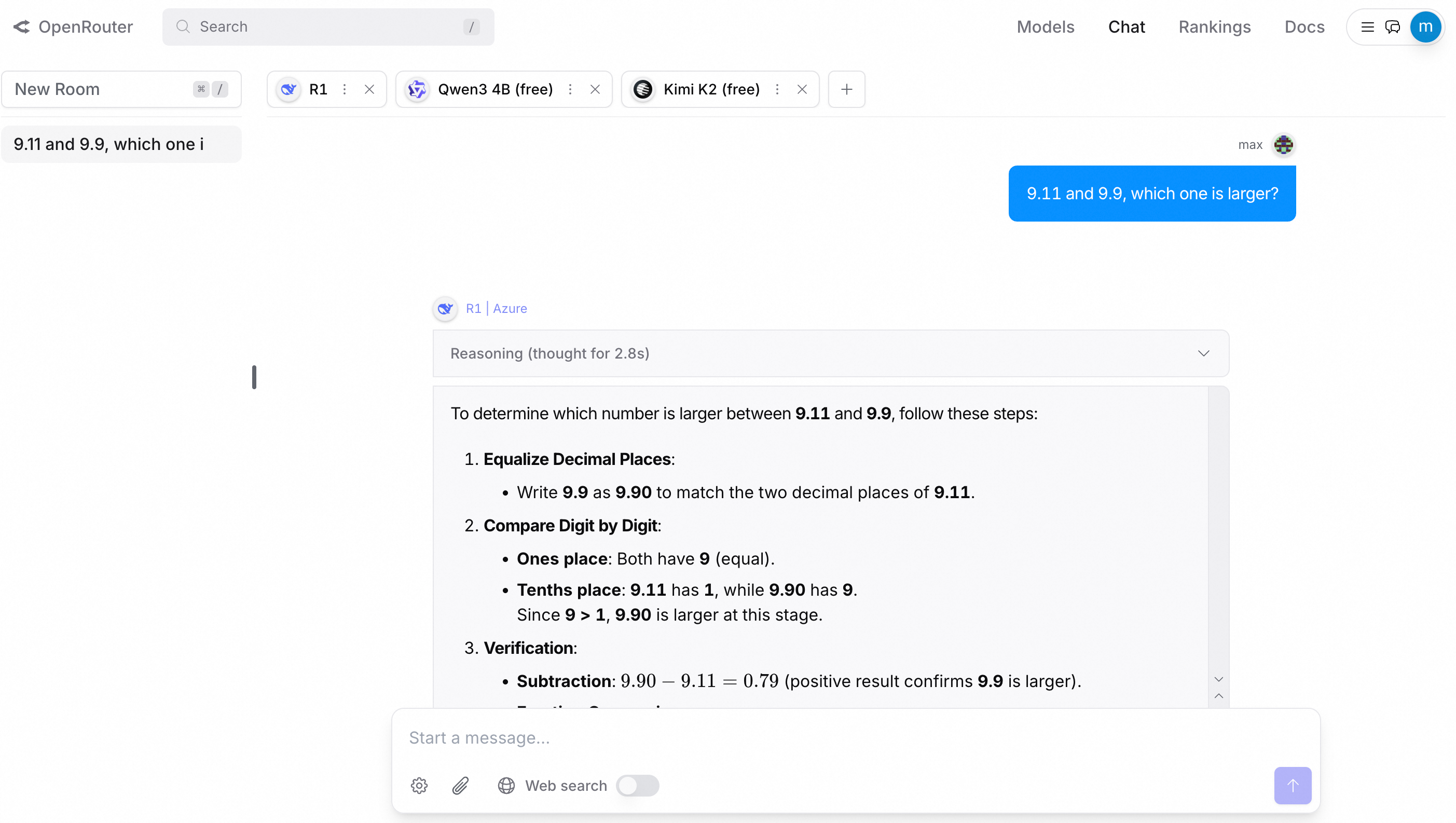
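Because the API follows OpenAI's request format, a call needs only a base URL, a bearer key, and a model ID. Below is a minimal standard-library sketch that builds such a request against OpenRouter's OpenAI-compatible chat completions endpoint; the helper names, the model ID `openai/gpt-4o`, and the environment variable `OPENROUTER_API_KEY` are illustrative assumptions.

```python
import json
import urllib.request

# OpenAI-compatible chat completions endpoint exposed by OpenRouter
OPENROUTER_CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (needs network access)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    import os
    # OPENROUTER_API_KEY is a variable name chosen for this example
    req = build_chat_request(os.environ["OPENROUTER_API_KEY"], "openai/gpt-4o", "Hello!")
    print(send(req))
```

With the official `openai` Python package, the equivalent is to create the client with `base_url="https://openrouter.ai/api/v1"` and your key, then call `client.chat.completions.create(...)` as usual; switching vendors means changing only the `model` string.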

Invocation Experience (Chat): An online multi-model conversation tool that provides a unified conversation interface, making it easy for developers to view output differences, compare model response effects, debug prompts, and evaluate context performance.

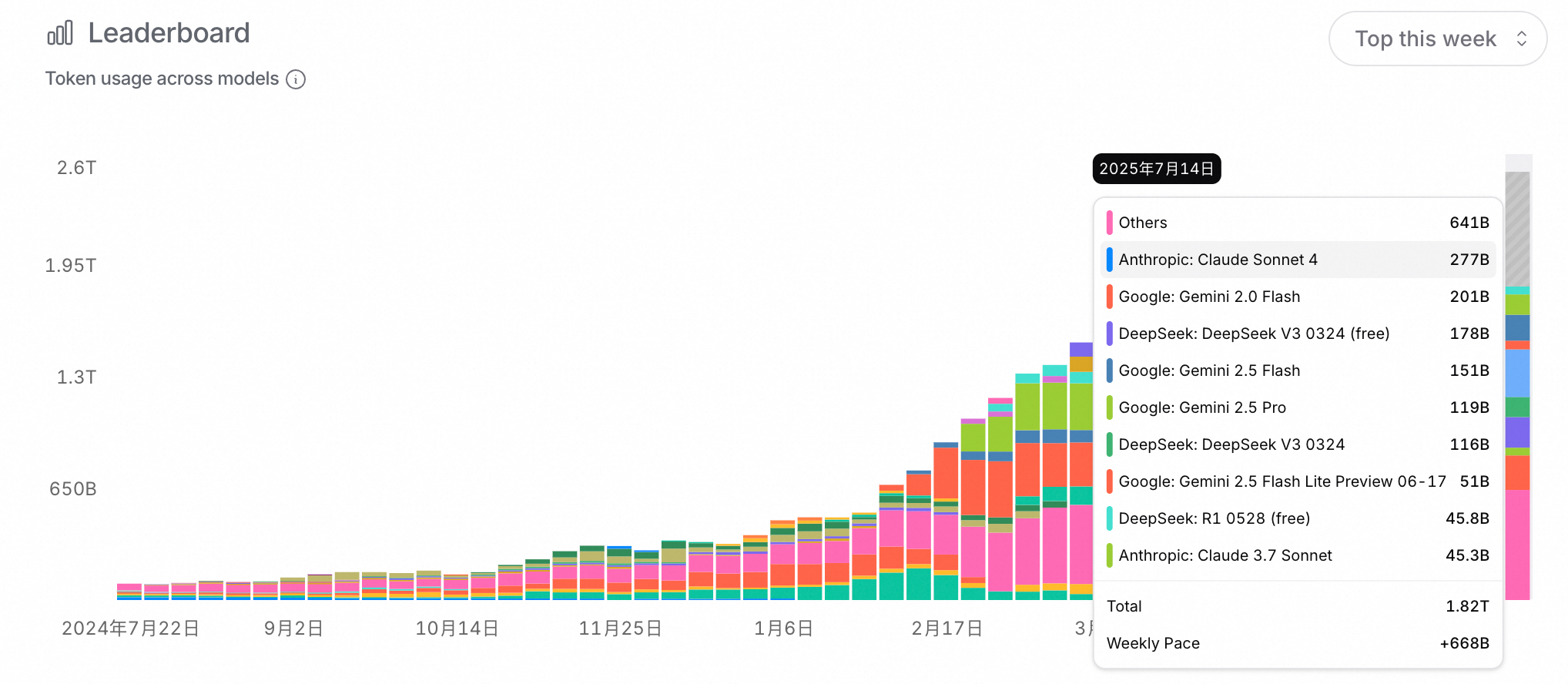

Ranking: It ranks invocation volume, measured in tokens, across three dimensions: general models, coding models, and Agents.
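Token-based rankings like these boil down to aggregating usage records per category and sorting by total tokens. Here is a minimal sketch of that aggregation; the record shape `(category, model_id, tokens)` and the function name `rank_by_tokens` are assumptions for illustration, not OpenRouter's data model.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def rank_by_tokens(
    records: Iterable[Tuple[str, str, int]],
) -> Dict[str, List[Tuple[str, int]]]:
    """Aggregate (category, model_id, tokens) usage records into per-category
    rankings, ordered by total tokens descending (ties broken by model id)."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for category, model_id, tokens in records:
        totals[category][model_id] += tokens
    return {
        category: sorted(per_model.items(), key=lambda kv: (-kv[1], kv[0]))
        for category, per_model in totals.items()
    }
```

Measuring by tokens rather than request count weights long-context and agentic workloads more heavily, which is why coding models and Agents can dominate such rankings.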

Moreover, it offers many experience optimizations around model invocation, such as:

Overall, OpenRouter is naturally suited to serving as an abstraction layer for model invocation; however, it still has significant gaps in lower-level network protocols, fine-grained security governance, and enterprise application integration.

Higress's positioning: a gateway built for enterprises delivering AI applications, supporting the production rollout of enterprise AI applications. Its customers are therefore not individual programmers but enterprises, and its job is to ensure that enterprises can reliably deliver model services to their users. Its three main usage scenarios are as follows:

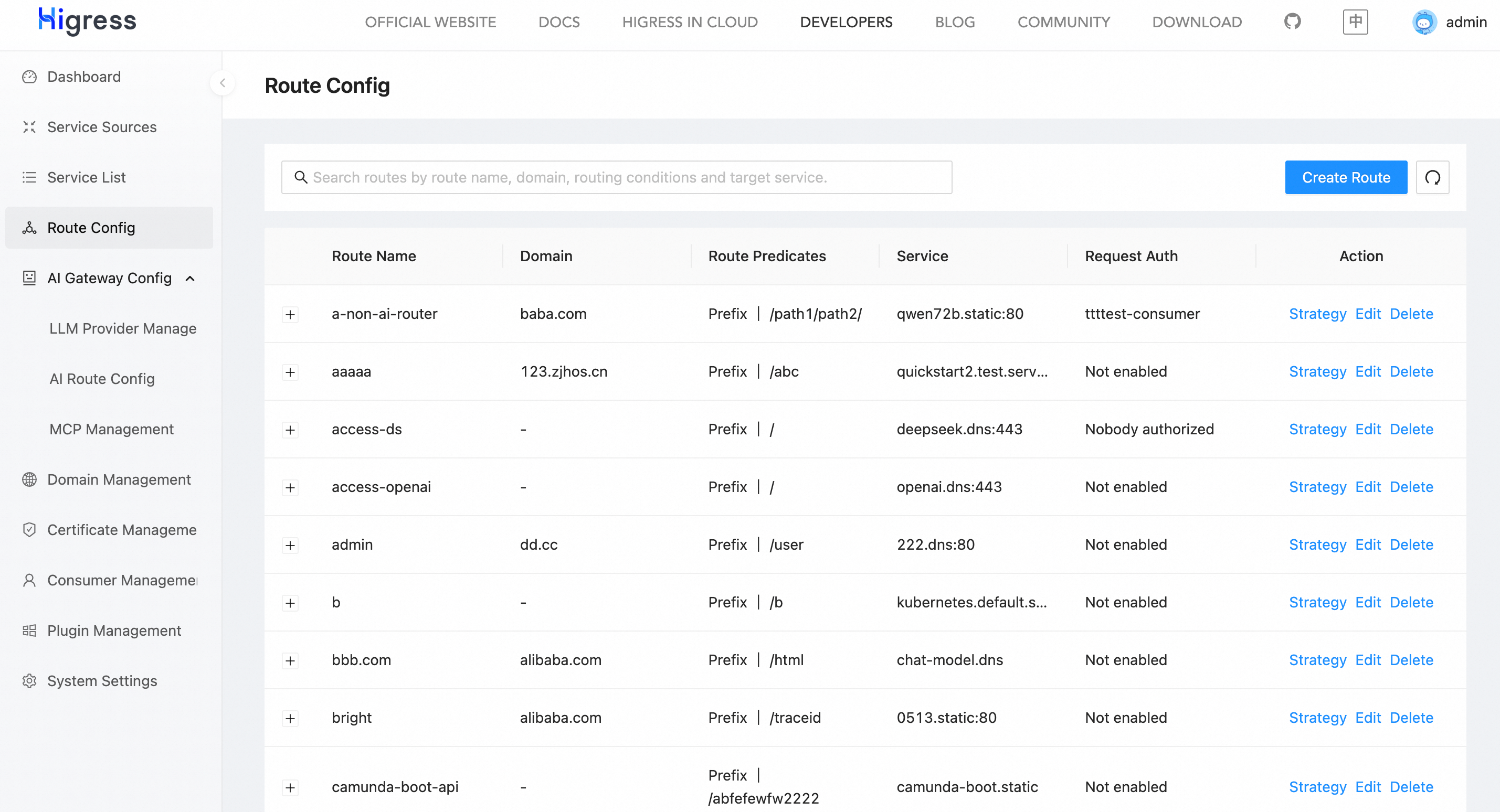

Accordingly, the Higress console provides route, domain, and service source configuration for integrating enterprise applications, along with consumer management, certificate management, and observability metrics for the gateway's CPU, memory, and user requests.

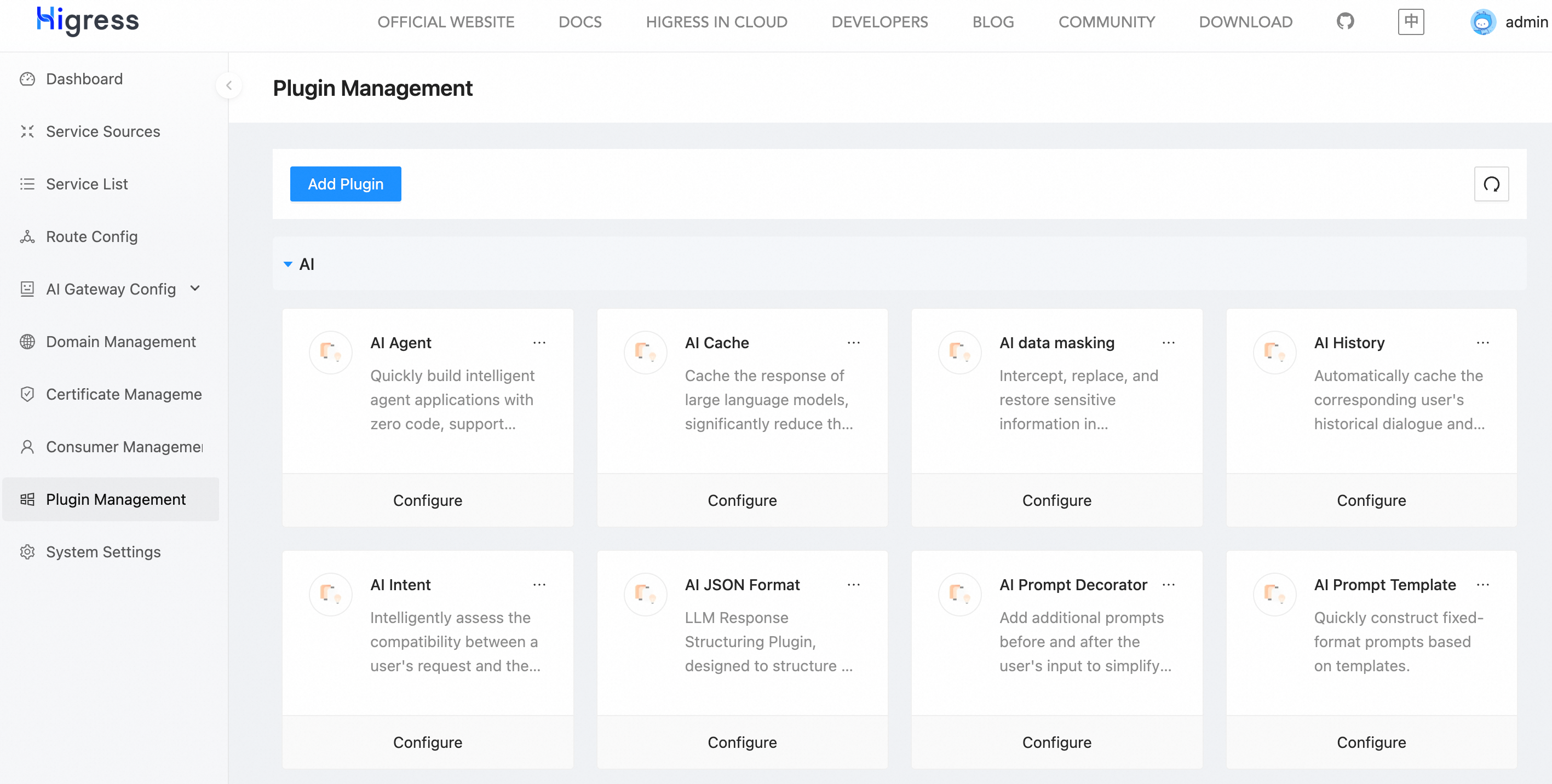
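Route and domain configuration ultimately determine which upstream service handles each request. The sketch below shows a common matching rule, exact host before wildcard and then longest path prefix, purely to illustrate the concept; it is not Higress's configuration schema or matching algorithm, and `Route`, `match_route`, and the service names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Route:
    name: str
    host: str          # exact domain to match, or "*" for any host
    path_prefix: str   # request path must start with this prefix
    service: str       # upstream service name (hypothetical)


def match_route(routes: List[Route], host: str, path: str) -> Optional[Route]:
    """Pick the most specific route: exact host beats "*", then longest prefix."""
    candidates = [
        r for r in routes
        if (r.host == host or r.host == "*") and path.startswith(r.path_prefix)
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda r: (r.host != "*", len(r.path_prefix)))


if __name__ == "__main__":
    routes = [
        Route("llm", "ai.example.com", "/v1/chat", "llm-proxy"),
        Route("default", "*", "/", "web-frontend"),
    ]
    print(match_route(routes, "ai.example.com", "/v1/chat/completions").service)
```

Preferring the most specific match lets a narrow AI route coexist with a catch-all route for ordinary web traffic on the same gateway.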

In addition, a rich set of plugins extends the gateway with capabilities such as rate limiting, intent recognition, and content moderation.
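Rate limiting for AI traffic is often applied per consumer, and the unit can be LLM tokens rather than requests. A token bucket is one standard way to implement it; the sketch below illustrates that algorithm only and is not the code of any Higress plugin. The class name and parameters are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-consumer token bucket holding up to `capacity` units and refilling at
    `rate` units per second. A unit could be one request or one LLM token."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        # consumer -> (units available, time of last refill)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, consumer: str, cost: float = 1.0) -> bool:
        """Deduct `cost` units and return True if enough are available."""
        now = self.clock()
        available, last = self._buckets.get(consumer, (self.capacity, now))
        # Refill for the elapsed time, never above capacity
        available = min(self.capacity, available + (now - last) * self.rate)
        if available >= cost:
            self._buckets[consumer] = (available - cost, now)
            return True
        self._buckets[consumer] = (available, now)
        return False
```

When the unit is LLM tokens, the completion length is only known after the response, so a gateway typically checks an estimate up front and reconciles the bucket once the actual usage is reported.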

In the Higress MCP marketplace, existing APIs can be converted into Remote MCP Servers and uploaded to the marketplace.
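Conceptually, turning an existing API into an MCP tool means describing the endpoint as a tool with a `name`, `description`, and JSON Schema `inputSchema` (the fields MCP uses to advertise tools), then mapping tool-call arguments back onto the original HTTP request. The sketch below illustrates that mapping under a hypothetical parameter format; Higress performs the conversion through its own configuration, not this code, and `rest_to_mcp_tool`, `build_upstream_call`, and the example endpoint are made up.

```python
import json
from typing import Any, Dict, List, Optional, Tuple
from urllib.parse import urlencode


def rest_to_mcp_tool(name: str, description: str, method: str, url: str,
                     params: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Describe one REST endpoint as an MCP tool (name, description, inputSchema)."""
    properties = {
        p["name"]: {"type": p.get("type", "string"), "description": p.get("description", "")}
        for p in params
    }
    required = [p["name"] for p in params if p.get("required", False)]
    return {
        "name": name,
        "description": f"{description} ({method.upper()} {url})",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def build_upstream_call(method: str, url: str,
                        arguments: Dict[str, Any]) -> Tuple[str, str, Optional[str]]:
    """Map MCP tool-call arguments onto the original REST request.
    GET arguments go in the query string; other methods send a JSON body."""
    method = method.upper()
    if method == "GET":
        return method, f"{url}?{urlencode(arguments)}", None
    return method, url, json.dumps(arguments)


if __name__ == "__main__":
    tool = rest_to_mcp_tool("get_weather", "Query current weather", "get",
                            "https://api.example.com/weather",
                            [{"name": "city", "required": True}, {"name": "unit"}])
    print(json.dumps(tool, indent=2))
```

Because the schema is generated from the API description, existing APIs can be exposed to Agents without rewriting the services themselves, which is the point of a zero-code conversion.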

It supports rapid integration with and invocation of mainstream open-source Agents in China.

Moreover, Higress is friendly to multi-cloud and private deployments. Its architecture supports a variety of deployment forms and can be integrated flexibly into an enterprise's existing application architecture. Higress is thus an enterprise-level AI gateway aimed at "complex access scenarios + high stability requirements," suited to serving as foundational infrastructure for rolling out AI applications inside enterprises.

From a positioning perspective, OpenRouter and Higress are two different kinds of AI Gateway that target different usage scenarios, so their service and billing models differ accordingly.

Since OpenRouter provides model invocation services, it operates under the billing rules of various model vendors:

The core of Higress is built on Istio and Envoy, and all of its core capabilities are open source under the Apache-2.0 license, with support for public cloud, private cloud, hybrid cloud, and other deployment modes. A cloud-hosted version is also available as Alibaba Cloud API Gateway, which adds enterprise-level enhancements in performance, stability, usability, security, cloud product integration, observability, and more.

If you want to learn more about Alibaba Cloud API Gateway (Higress), please click: https://higress.ai/en/
