2016: Self-sufficiency

If you have ever worked on a large-scale system, you understand the importance of monitoring. Like a pair of eyes, a monitoring system lets us track the status of the system it watches. Our databases have such a monitoring system. Through an agent deployed on each host, it regularly collects key host and database metrics, including CPU and I/O usage, database QPS, TPS, response time, and slow SQL logs, and stores them in a database for further analysis and presentation. Initially, that metrics store was MySQL.

As Alibaba's databases scaled out, the monitoring system itself became a bottleneck. For example, because of limited system capacity, the finest collection granularity we could initially achieve was one minute. After years of optimization, we improved the granularity to 10 seconds. What embarrassed us the most, however, was that before 00:00 on each Double Eleven we usually had a plan to degrade the monitoring system, for example by reducing the collection accuracy or disabling some metrics, because of the high traffic during peak hours.

Another business challenge came from the security department, which asked us to collect every SQL statement that ran on the database and send these statements to the big data computing platform for real-time analysis. This requirement seemed impossible to us, because an enormous number of SQL statements are running at any given moment. In most cases, the only practical solution is sampling. However, the security department required that every SQL statement be correctly recorded and analyzed, which was a huge challenge.

For Double Eleven in 2016, we launched a project to rebuild the entire monitoring system. The objective was to provide second-level monitoring and full-SQL collection and computing, with no degradation of monitoring during Double Eleven. The first task was to solve the storage and computation of massive monitoring data. For this purpose, we used TSDB, a time-series database developed by Alibaba for massive time-series data in scenarios such as IoT and APM. The second task was full-SQL collection and computing. For this purpose, we built a real-time SQL collection interface into AliSQL. After an SQL statement runs, it is sent directly through Message Queue to StreamCompute for real-time processing, without being written to a log. This made it possible to analyze and process every SQL statement. By solving these two technical problems, we met the business objective of second-level monitoring and full-SQL collection during Double Eleven in 2016.
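The full-SQL path described above can be sketched roughly as follows. This is only an illustration under stated assumptions: an in-process `queue.Queue` stands in for Message Queue, the `consume` function stands in for the stream-processing job, and the names `SqlCollector`, `on_statement_executed`, and `normalize` are hypothetical and are not AliSQL or StreamCompute APIs.

```python
import queue
import re
from collections import Counter


def normalize(sql):
    """Replace literals with '?' so statements group by template."""
    sql = re.sub(r"'[^']*'", "?", sql)      # string literals
    sql = re.sub(r"\b\d+\b", "?", sql)      # standalone numeric literals
    return re.sub(r"\s+", " ", sql).strip().lower()


class SqlCollector:
    """Hypothetical hook called by the database after every statement."""

    def __init__(self, mq):
        self.mq = mq  # stand-in for a real message queue

    def on_statement_executed(self, sql, elapsed_ms):
        # Publish every statement (no sampling, no log file in between).
        self.mq.put((sql, elapsed_ms))


def consume(mq):
    """Stand-in for the streaming job: aggregate per SQL template."""
    counts = Counter()
    total_ms = Counter()
    while True:
        try:
            sql, elapsed_ms = mq.get_nowait()
        except queue.Empty:
            break
        template = normalize(sql)
        counts[template] += 1
        total_ms[template] += elapsed_ms
    return counts, total_ms
```

In this sketch, statements that differ only in their literal values collapse into one template, which is one plausible way to make per-statement analysis tractable at full collection volume.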

Later, the monitoring data and full SQL statements became a treasure trove for us to explore. By combining data analysis with AI technology, we launched CloudDBA, an intelligent database diagnostic engine. We believe that the future of databases lies in self-driving databases with four capabilities: self-diagnosis, self-optimization, self-decision-making, and self-recovery. As mentioned earlier, we have made some progress toward this kind of intelligence.

TSDB is now a product available on Alibaba Cloud. In addition to serving tens of thousands of engineers at Alibaba, CloudDBA also provides database optimization services to users on the cloud. We not only achieved self-sufficiency, but also used Alibaba's varied scenarios to keep honing these technologies so that they can serve more users on the cloud. This is how Double Eleven drives technology.

2016 to 2017: Further development around the database and cache technologies

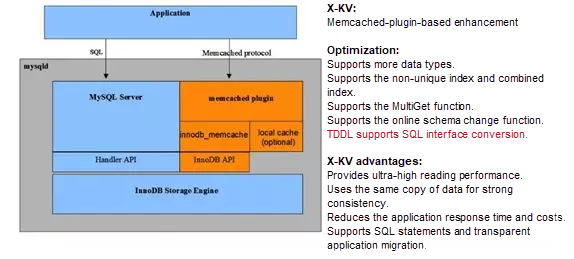

Tair, a distributed cache service developed by Alibaba, is a key technology for Double Eleven. With the help of Tair, the database can withstand the heavy data traffic of Double Eleven. While using Tair on a large scale, developers wanted the database itself to provide a high-performance KV interface, with data queried through the KV and SQL interfaces kept consistent, which would greatly simplify business development. X-KV, the KV component of X-DB, was developed in this context. By bypassing SQL parsing and accessing in-memory data directly, X-KV delivers outstanding performance, with a response time several times lower than that of the SQL interface. X-KV was first applied in 2016. It supports a QPS of over 100,000 and has been well received by users. During Double Eleven in 2017, we used another "secret technology": automatic conversion between the SQL and KV interfaces through the middleware TDDL. With this technology, developers no longer need to develop against both interfaces. Instead, they access the database through the SQL interface, and TDDL automatically converts SQL requests into KV requests in the background. This further improves development efficiency and reduces the load on the database.
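To make the idea of SQL-to-KV conversion concrete, here is a minimal sketch. It assumes that only primary-key point lookups of one fixed shape are eligible for the KV path and that everything else falls back to SQL. The `Router` class, the `POINT_LOOKUP` pattern, and the `(table, id)` key layout are hypothetical, chosen for illustration; they do not describe how TDDL or X-KV actually work.

```python
import re

# Hypothetical rule: only "SELECT * FROM <table> WHERE id = <int>" is
# converted to a KV get; every other statement takes the SQL path.
POINT_LOOKUP = re.compile(
    r"^\s*SELECT\s+\*\s+FROM\s+(\w+)\s+WHERE\s+id\s*=\s*(\d+)\s*;?\s*$",
    re.IGNORECASE,
)


class Router:
    """Middleware stand-in that picks the KV or SQL path per statement."""

    def __init__(self, kv_store, sql_executor):
        self.kv_store = kv_store          # dict keyed by (table, id)
        self.sql_executor = sql_executor  # callable taking the SQL text
        self.kv_calls = 0
        self.sql_calls = 0

    def execute(self, sql):
        match = POINT_LOOKUP.match(sql)
        if match:
            table, pk = match.group(1), int(match.group(2))
            self.kv_calls += 1
            # KV path: no SQL parsing or planning on the database side.
            return self.kv_store.get((table, pk))
        self.sql_calls += 1
        return self.sql_executor(sql)
```

The application only ever issues SQL; whether a statement is served through the KV path is decided inside the router, which mirrors the division of labor described above.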

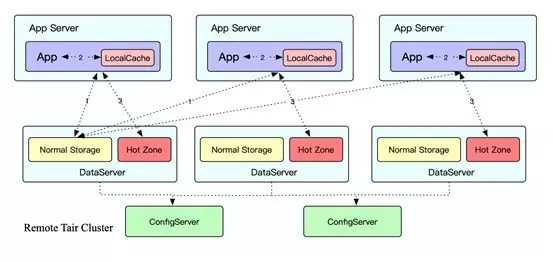

During Double Eleven in 2016, Tair encountered an industry-wide technical problem: hotspots. In a cache system, a given key is stored on only one machine. During Double Eleven, however, hotspots are concentrated and access volume is huge, so the load on a hot key can easily exceed the capacity of a single machine, and the CPU and the NIC become bottlenecks. Because hotspots are unpredictable, and can be either traffic hotspots or frequency hotspots, we spent 2016 rushing from one problem to the next and exhausting ourselves. In 2017, the Tair team set out to solve this industry-wide problem and eventually proposed a creative solution: adaptive hotspots. The first element of the solution is intelligent identification. Tair uses a multi-level LRU data structure. Keys are assigned weights based on their access frequency and size, and are placed on LRUs at different levels according to those weights. This ensures that high-weight keys are retained when keys are evicted. Retained keys that exceed the threshold are treated as hotspot keys. The second element is dynamic hashing. When a hotspot is detected, the application server and the Tair server cooperate to dynamically hash the hotspot data, based on a preset access model, into HotZone storage areas on other data nodes of the Tair server.
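The identification step can be illustrated with a small sketch. It is a simplified reading of the description above, not Tair's implementation: the class name `MultiLevelLRU`, the linear weight formula, and parameters such as `promote_step` and `hot_threshold` are assumptions made for this example.

```python
from collections import OrderedDict


class MultiLevelLRU:
    """Toy multi-level LRU: heavier keys live on higher levels."""

    def __init__(self, levels=3, capacity=4, freq_weight=1.0,
                 size_weight=0.001, promote_step=5.0, hot_threshold=10.0):
        # One LRU per level, each mapping key -> accumulated weight.
        self.levels = [OrderedDict() for _ in range(levels)]
        self.capacity = capacity          # max keys across all levels
        self.freq_weight = freq_weight    # weight added per access
        self.size_weight = size_weight    # weight added per byte accessed
        self.promote_step = promote_step  # weight needed per level
        self.hot_threshold = hot_threshold

    def access(self, key, size_bytes):
        weight = self.freq_weight + self.size_weight * size_bytes
        for level in self.levels:
            if key in level:
                weight += level.pop(key)
                break
        target = min(int(weight // self.promote_step), len(self.levels) - 1)
        self.levels[target][key] = weight  # re-inserted as most recent
        self._evict()

    def _evict(self):
        # Evict the least recently used key from the lowest non-empty
        # level, so high-weight keys survive key removal.
        while sum(len(level) for level in self.levels) > self.capacity:
            for level in self.levels:
                if level:
                    level.popitem(last=False)
                    break

    def hot_keys(self):
        return sorted(key for level in self.levels
                      for key, weight in level.items()
                      if weight >= self.hot_threshold)
```

With this layout, a burst of one-off keys only churns the lowest level, while a frequently accessed key climbs to a higher level, stays resident, and is eventually reported by `hot_keys()`.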

The hotspot hash technology was remarkably effective during Double Eleven in 2017. Hashing the hotspot data across the clusters brought the load (the "water level") of every cluster below the safety line. Without this capability, many Tair clusters could have run into problems during Double Eleven in 2017.
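The dynamic hashing step can be sketched in the same spirit. The example assumes that a normal key is served by a single home node and that a key marked hot is additionally served from HotZone areas on a fixed number of other nodes, with each client picking one of them by hash. `HotspotRouter`, `fanout`, and the MD5-based placement are hypothetical choices for illustration, not Tair's actual access model.

```python
import hashlib


def _hash(text):
    """Stable hash (Python's built-in hash() is salted per process)."""
    return int(hashlib.md5(text.encode("utf-8")).hexdigest(), 16)


class HotspotRouter:
    def __init__(self, nodes, fanout=3):
        self.nodes = list(nodes)
        self.fanout = fanout  # number of other nodes hosting a hot key
        self.hot = set()

    def home_node(self, key):
        return self.nodes[_hash(key) % len(self.nodes)]

    def mark_hot(self, key):
        self.hot.add(key)

    def hot_zone_nodes(self, key):
        # The next `fanout` nodes after the home node (home excluded,
        # provided fanout < number of nodes).
        start = _hash(key) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)]
                for i in range(1, self.fanout + 1)]

    def route_read(self, key, client_id):
        if key not in self.hot:
            return self.home_node(key)
        zone = self.hot_zone_nodes(key)
        return zone[_hash(client_id) % len(zone)]
```

Before a key is marked hot, every client reads it from the same node. Afterwards, reads are spread across several nodes, which is the effect that lowers the load on any single machine.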

Clearly, the database and the cache depend on and complement each other to support the storage and access of massive data during Double Eleven.

2016 to 2017: Achieving smooth transaction curves

With the introduction of full-link stress testing, we hoped that the transaction curve at 00:00 of every Double Eleven would be as smooth as possible. In practice, the opposite usually happened. After 00:00 of Double Eleven in 2016, the transaction curve fluctuated and climbed slowly to its peak. When we reviewed this issue later, we found that a major problem had occurred at 00:00 in the shopping cart database. Because the data in the buffer pool was "cold," the database had to "warm up" when a large number of requests arrived at 00:00. In other words, the data had to be read from the SSD into the buffer pool first, which led to long response times for a large number of requests and compromised the user experience.

After locating the cause, we proposed the "preheating" technology in 2017, which fully warms up each component, including Tair, databases, and applications, before Double Eleven opens. For this purpose, we developed a preheating system that has two parts: data preheating and application preheating. Data preheating covers database preheating and cache preheating. The preheating system simulates application access so that data is loaded into the cache and the database buffer pool (BP) ahead of time, ensuring a high hit rate in both. Application preheating consists of connection preheating and JIT preheating. Connection preheating establishes database connections before 00:00 of Double Eleven to eliminate connection overhead during peak hours. JIT preheating addresses a different cost: the business logic is very complicated, and Java code is interpreted until it has been JIT-compiled. If JIT compilation happens during peak hours, it consumes a large amount of CPU and prolongs the system response time. With JIT preheating, the code is fully compiled in advance.
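The effect of data preheating can be shown with a toy model. The sketch below assumes a simple read-through cache in front of a slower backing store and a known list of keys that peak traffic is expected to touch. `ReadThroughCache` and `preheat` are hypothetical names; the real preheating system replays simulated application access against Tair and the databases.

```python
class ReadThroughCache:
    """Toy cache: a miss loads the value from the backing store."""

    def __init__(self, backing_store):
        self.backing_store = backing_store  # stand-in for SSD/database
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.cache:
            self.hits += 1
        else:
            self.misses += 1  # slow path: read from the backing store
            self.cache[key] = self.backing_store[key]
        return self.cache[key]

    def reset_stats(self):
        self.hits = 0
        self.misses = 0

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def preheat(cache, expected_keys):
    """Simulate application reads before the peak, then reset counters."""
    for key in expected_keys:
        cache.get(key)
    cache.reset_stats()
```

A cold cache pays the slow path on the first request for every key at 00:00, whereas a preheated cache has already paid that cost before the peak begins.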

During Double Eleven in 2017, the transaction curve was smooth at 00:00 because the system was fully preheated.

2017 to 2018: Technological breakthrough in storage and computing separation

At the beginning of 2017, the Group's senior technical staff started a discussion on one question: is the separation of storage and computing necessary? The discussion went on for a long time. When I was taking Dr. Wang's class, we also held a debate on this topic. It was a close contest, and neither side could persuade the other. For databases, storage and computing separation is a complex technical topic. In the IOE era, minicomputers and storage devices were interconnected through a SAN, which was essentially a storage-and-computing-separation architecture. If we were now going back to this architecture, was it a step backwards in technology? In addition, I/O response latency directly affects database performance, so how could we solve the network latency problem? There were many open questions and no conclusion.

At that time, the database could already use ECS resources for auto scaling in large sales campaigns, and containerized deployment had also been implemented. However, one problem remained: if computing and storage are bound together, maximum flexibility cannot be achieved, because migrating a compute node requires moving its data. Moreover, by studying the growth curves of computing and storage demand, we found that during the peak hours of Double Eleven, the demand for computing capability increased sharply, while the demand for storage capability did not change significantly. Therefore, if storage and computing were separated, we would only need to scale the compute nodes during peak hours. In summary, the separation of storage and computing was a must.

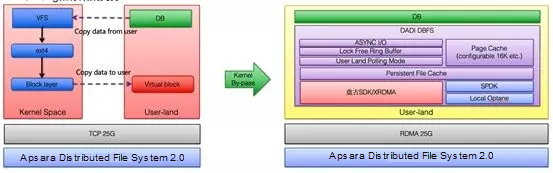

In 2017, to verify the feasibility of this idea, we first chose to optimize on top of Ceph, an open source distributed storage solution. At the same time, Apsara Distributed File System 2.0, Alibaba's high-performance distributed storage, was under development. Meanwhile, the database kernel team joined the effort and optimized the database kernel to mitigate the impact of network latency on database performance. With everyone's hard work, a computing-and-storage-separation solution based on Apsara Distributed File System 2.0 was launched in 2017, and the elastic solution for mounting shared storage with offline heads was verified. During that year's Double Eleven, we proved that database storage and computing separation is entirely feasible.

The successful implementation of storage and computing separation depends on an underlying technology: a high-performance, low-latency network. During Double Eleven in 2017, we used a 25 Gbit/s TCP network. To reduce latency further, during Double Eleven in 2018 we adopted RDMA technology on a large scale, which significantly reduced network latency. This was the first time in the industry that RDMA had been applied on such a large scale. To reduce I/O latency, we also prepared another key component, the file system DBFS. By using user-space technology and kernel bypass, DBFS implements zero copy on the I/O path. With these technologies, latency and throughput came close to those of local storage devices.

Double Eleven in 2018 saw the large-scale application of storage and computing separation technology, ringing in a new database era.

Between 2012 and 2018, I witnessed the constant increase in transaction amounts at 00:00, breakthroughs in database technologies, and the spirit of perseverance of Alibaba's employees. The progress from "impossibility" to "possibility" demonstrates the success of the constant pursuit of technological advances by Alibaba's technologists.

Thanks to this last decade of Double Elevens, we look forward to an even better decade of Double Elevens to come.
