By Wang Xun from Government Procurement Cloud

The Internet has developed rapidly in recent years, and new technologies have sprung up to keep pace with fast-growing businesses. Among the many promising technologies across the industry, cloud-native technologies centered on containers are growing quickly, and Kubernetes, the de facto standard for container orchestration, is undoubtedly the most notable.

Kubernetes solves the problems of large-scale application deployment, resource management, and scheduling, but it is not convenient for business delivery, and deploying Kubernetes itself is relatively complex. Among the applications emerging around the Kubernetes ecosystem, there has been no tool that packages business applications, middleware, and clusters for integrated delivery.

The Sealer open-source project was initiated by the Alibaba Cloud Intelligent Cloud-Native Application Platform Team and jointly built by Government Procurement Cloud and Harmony Cloud. The project fills the gap that Kubernetes leaves in integrated delivery: its design treats a cluster and the distributed applications running on it as a single unit of delivery. As a representative of the government procurement sector, Government Procurement Cloud has used Sealer to complete the overall privatized delivery of large-scale distributed applications. This delivery practice shows that Sealer is flexible and capable enough for integrated delivery.

The customers of Government Procurement Cloud's privatized delivery are governments and enterprises. Each delivery involves a large-scale business with 300-plus business components and 20-plus middleware components. The infrastructure of the delivery targets varies and is outside our control, and network restrictions are strict. In some sensitive scenarios, the environment is completely isolated from the network, and the biggest pain points of business delivery are handling deployment dependencies and keeping deliveries consistent. Delivering the business uniformly on Kubernetes makes the running environment consistent. However, problems such as the unified handling of all images, the various packages that deployment depends on, and the consistency of the delivery system still urgently need solving.

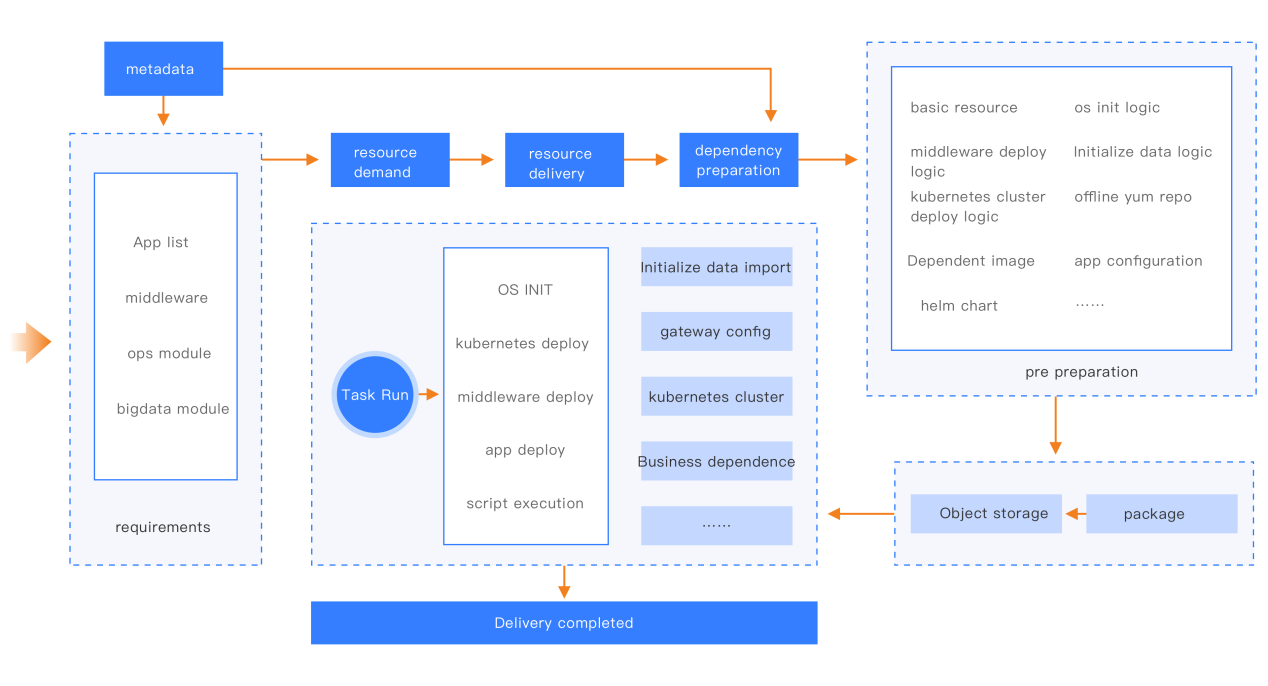

As shown in the preceding figure, the process of localization delivery of Government Procurement Cloud is divided into six steps:

Confirm user requirements → give resource demand to users → obtain a resource list provided by users → generate preparation configurations based on the resource list → prepare deployment scripts and dependency → complete delivery

Both the preparation stage and the delivery stage require a lot of workers and time.

In the cloud-native era, Docker solved the environment consistency and packaging problems of a single application, so business delivery no longer spends a lot of time deploying environment dependencies as traditional delivery did. Container orchestration systems such as Kubernetes then solved the unified scheduling of underlying resources and the unified orchestration of application runtimes. However, delivering a complex business is still a huge undertaking in itself. For example, Government Procurement Cloud needs to deploy and configure various resource objects, such as Helm charts, RBAC, Istio gateways, CNI, and middleware, and deliver more than 300 business components. Each privatized delivery incurs substantial workforce and time costs.

Government Procurement Cloud is in a period of rapid business development, so the demand for privatized deployment projects is constantly increasing. High-cost delivery methods make it increasingly difficult to keep up with that demand. Reducing delivery costs while ensuring delivery consistency is the most urgent problem for the O&M team to solve.

In the early days, Government Procurement Cloud used Ansible to deliver business. The Ansible solution achieves automation and reduces delivery costs to some extent. However, it causes the following problems:

Ansible is better suited to glue work and O&M tasks with simple logic. As localization projects kept being added, the disadvantages of Ansible-based delivery began to appear, and each localization project required a lot of time. The Government Procurement Cloud O&M Team therefore began to explore how to optimize the delivery system. We analyzed many technical solutions. Current Kubernetes delivery tools focus on delivering the cluster itself rather than the business layer. A solution can be built by wrapping a cluster deployment tool, but this is not fundamentally different from using Ansible to deploy upper-layer services after the cluster has been deployed.

Fortunately, we discovered the Sealer project. Sealer is a packaging and delivery solution for distributed applications. It solves the delivery problem of complex applications by packaging distributed applications together with their dependencies. Its design concept is very elegant: it manages the packaging and delivery of an entire cluster within the container image ecosystem.

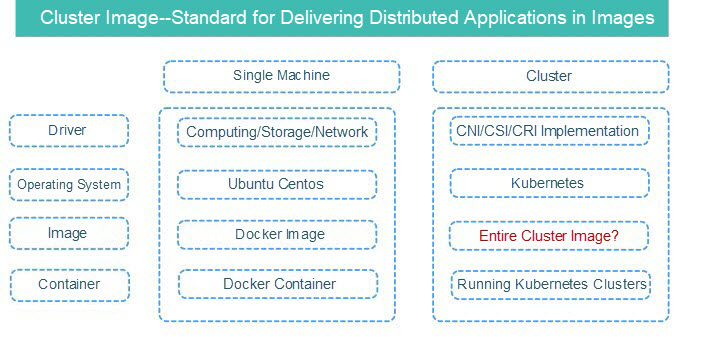

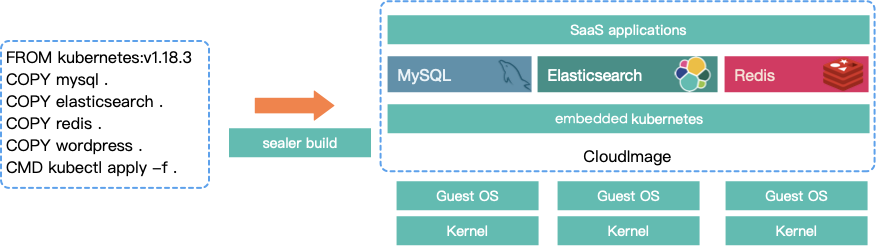

When using Docker, we use a Dockerfile to define the running environment and packaging of a single application. The technical principle of Sealer can be explained by analogy with Docker. The entire cluster can be regarded as a machine, and Kubernetes can be regarded as its operating system. The applications in this operating system are defined by a Kubefile and packaged into an image. Then, sealer run delivers the entire cluster and its applications, just as docker run delivers a single application.

We invited community partners to exchange ideas with us. Since Sealer was a new project, we encountered problems and pitfalls, and we also found many places where it did not meet our needs. However, we didn't give up because we had great expectations for and confidence in the design model of Sealer. We chose to work with the community and grow together. The final successful implementation proved that our choice was correct.

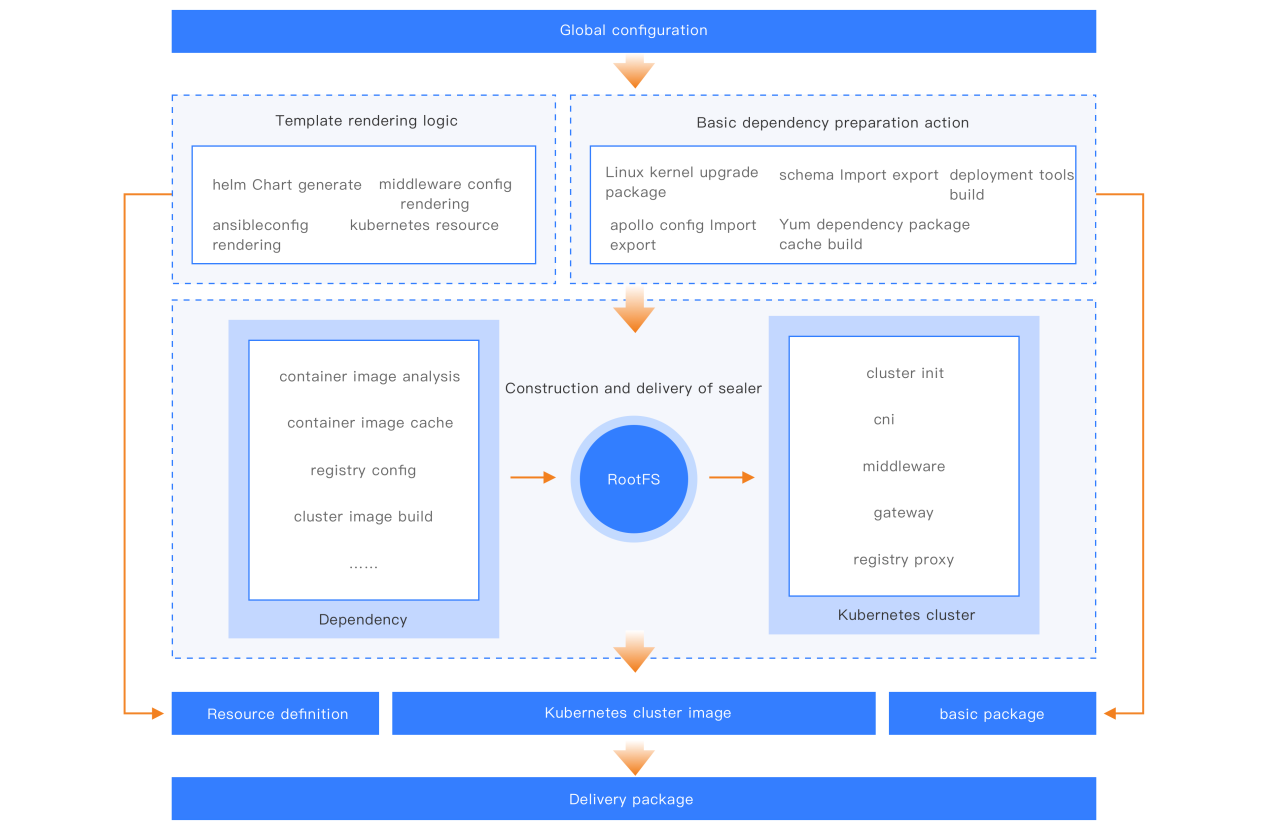

When deciding to cooperate with the community, we conducted a comprehensive evaluation of Sealer. Considering our requirements, here are the main problems:

In addition, it is necessary to talk about several practical and powerful Sealer features when we implement the Sealer solution:

We redefined the delivery process to use Sealer. The delivery of business components, containerized middleware, image cache, and other components is completed using Sealer through Kubefile. The Sealer lite build mode is used to automate the parsing and built-in caching of dependency images.
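To make the idea of automatically parsing dependency images more concrete, here is a minimal Python sketch. It is not Sealer's implementation and does not use any Sealer API; it only illustrates, under simplifying assumptions, how image references could be collected from Kubernetes manifests so they can be cached ahead of an offline delivery. The function name `collect_images`, the sample manifests, and the `registry.example.com` addresses are all hypothetical.

```python
import re

# Matches manifest lines such as `image: nginx:1.21` or `- image: "redis:6"`.
# Assumption: images appear on their own `image:` line, as in typical manifests.
IMAGE_LINE = re.compile(
    r"""^[ \t]*(?:-[ \t]*)?image:[ \t]*["']?([^\s"'#]+)""",
    re.MULTILINE,
)


def collect_images(manifests):
    """Return the sorted, de-duplicated image references found in manifest texts."""
    found = set()
    for text in manifests:
        found.update(IMAGE_LINE.findall(text))
    return sorted(found)


# Hypothetical sample manifests; the registry and image names are made up.
DEPLOYMENT = """
apiVersion: apps/v1
kind: Deployment
spec:
  template:
    spec:
      containers:
        - name: web
          image: registry.example.com/shop/web:1.4.2
          imagePullPolicy: IfNotPresent
        - name: sidecar
          image: "registry.example.com/mesh/proxy:0.9"
"""

JOB = """
apiVersion: batch/v1
kind: Job
spec:
  template:
    spec:
      containers:
        - name: migrate
          image: registry.example.com/shop/web:1.4.2
"""

if __name__ == "__main__":
    # Each unique image is listed once, even if several manifests use it.
    for image in collect_images([DEPLOYMENT, JOB]):
        print(image)
```

With several hundred business components, a de-duplicated list like this is what lets every image be pulled and cached once at build time, instead of being tracked by hand for each project.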

Sealer takes over much of the complex process logic and dependency handling involved in application delivery, which simplifies the implementation. This continuous simplification makes delivery on a large scale possible. We have used the new delivery system in real scenarios: the delivery effort was shortened from 15 person-days to 2 person-days, and we delivered a cluster with a 20 GB business image cache, more than 2,000 GB of memory, and 800-plus CPU cores. As a next step, we plan to keep simplifying the delivery process so that a novice can deliver an entire project after simple training.

The successful implementation of Sealer is the result of both the new delivery system and the power of open source. Along the way, we also explored a new model of cooperation with the community. In the future, Government Procurement Cloud will continue to support and participate in building the Sealer community and contribute more to it based on actual business scenarios.

As a new open-source project, Sealer is imperfect. It has problems that need solving and features that need optimizing. Also, there are still more requirements and business scenarios to be realized. We hope Sealer can serve more user scenarios through our continuous contribution. At the same time, we also hope more partners can participate in the community construction to make Sealer more promising.
