By Digoal.

The recent coronavirus outbreak (COVID-19) has been ruthless, severely affecting the economy worldwide. Most companies are frustrated as a result, especially small and medium-sized enterprises, even to the point of layoffs to cut costs. But for many, talent is the most valuable resource. Is there another way to cut costs without layoffs? How can businesses survive this crisis? The short answer - by using technology.

Today, when it comes to the question of how you can reduce the IT costs associated with enterprise–level databases, there happens to be new and interesting methods available for increasing output while reducing costs. Nowadays databases account for about half of an enterprise's IT expenditure.

However, most enterprises are not well equipped with it comes to databases, often lacking a team of specialist database administrators (DBA). So, as you can image, it's not that easy to make big changes about how your enterprise does databases, let alone using new methods of reducing costs.

Thankfully, though, by investing in a reliable cloud vendor, you can go and cut costs easily. Today, in this post, I'm going to show you how Alibaba Cloud can help in this regard. At Alibaba Cloud, I have figured out how you may be able to potentially cut your enterprise IT costs by a large margin.

Got your attention? So how do I do this? Well to start off, I would like to discuss what are the advantages of using PostgreSQL and MySQL on Alibaba Cloud, and then I'll cover what you'll want to do to save on costs, and show you how I figured this out.

Below is a list of some of the cost-effective and cost-saving advantages of ApsaraDB RDS for PostgreSQL, Alibaba Cloud's solution for using a PostgreSQL database on Alibaba Cloud. There are a lot of advantages, from good capabilities to convenience and cost-saving features.

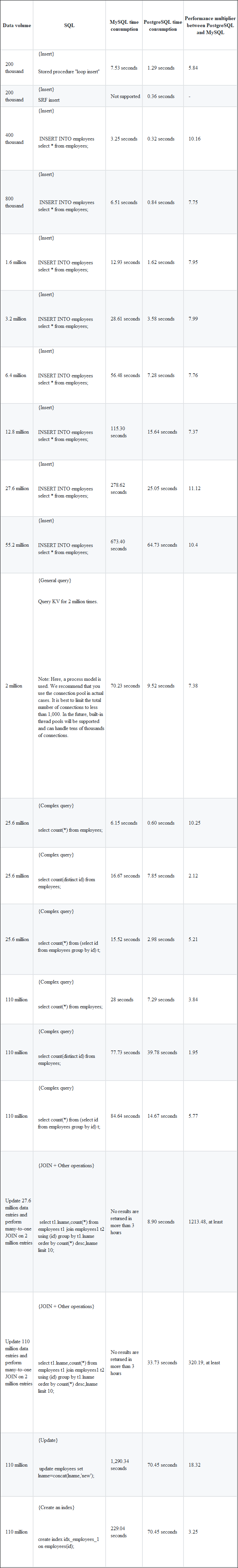

oss_fdw, making it possible to store the archived data of databases in Object Storage Service (OSS), which can in turn help you greatly reduce costs without changing access methods.So now that we've gone through the advantages of using ApsaraDB RDS for PostgreSQL. Here's the biggie as to why PostgreSQL can have you a lot in terms of your database expenses. I have found in a test, which I'll go through with you below, that the overall performance of PostgreSQL is an order of magnitude higher than that of MySQL. Yet, the combined use of PostgreSQL and MySQL can greatly reduce your enterprise's database expenses.

For the test, I will perform I need an Alibaba Cloud ApsaraDB RDS for PostgreSQL 12 instance. The instance is with the following specifications: 8 cores, 32 GB memory, and 1,500 GB ESSDs. Also, I will create an Alibaba Cloud ApsaraDB RDS for MySQL 8.0 instance with the same specifications. Set up a user password and database name. For the PostgreSQL database instance, I ran this command:

export PGPASSWORD=xxxxxx!

psql -h pgm-bp1z26gbo3gx893a129310.pg.rds.aliyuncs.com -p 1433 -U user123 db1And, for the MySQL database instance:

mysql -h rm-bp1wv992ym962k85888370.mysql.rds.aliyuncs.com -P 3306 -u user123 --password=xxxxxx! -D db1For me here, for the test, I've got an ECS instance running CentOS 7.x x64 client with MySQL and PostgreSQL installed.

yum install -y mysql-*

yum install https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm

yum install -y postgresql12CREATE TABLE employees (

id INT NOT NULL,

fname VARCHAR(30),

lname VARCHAR(30),

birth TIMESTAMP,

hired DATE NOT NULL DEFAULT '1970-01-01',

separated DATE NOT NULL DEFAULT '9999-12-31',

job_code INT NOT NULL,

store_id INT NOT NULL

);Insert stored procedures in batch. Note that you may need to scroll right and left to see all of the information here.

DROP PROCEDURE IF EXISTS BatchInsert;

delimiter // -- 把界定符改成双斜杠

CREATE PROCEDURE BatchInsert(IN init INT, IN loop_time INT) -- 第一个参数为初始ID号(可自定义),第二个位生成MySQL记录个数

BEGIN

DECLARE Var INT;

DECLARE ID INT;

SET Var = 0;

SET ID = init;

WHILE Var < loop_time DO

insert into employees

(id, fname, lname, birth, hired, separated, job_code, store_id)

values

(ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID);

SET ID = ID + 1;

SET Var = Var + 1;

END WHILE;

END;

//

delimiter ; -- 界定符改回分号Next, insert 200,000 entries in batch.

-- 开启事务插入,否则会很慢

begin;

CALL BatchInsert(1, 200000);

commit;

Query OK, 1 row affected (7.53 sec)Use "insert into" to continue with the batch insertion.

mysql> insert into employees select * from employees;

Query OK, 200000 rows affected (1.61 sec)

Records: 200000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 400000 rows affected (3.25 sec)

Records: 400000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 800000 rows affected (6.51 sec)

Records: 800000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 1600000 rows affected (12.93 sec)

Records: 1600000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 3200000 rows affected (28.61 sec)

Records: 3200000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 6400000 rows affected (56.48 sec)

Records: 6400000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 12800000 rows affected (1 min 55.30 sec)

Records: 12800000 Duplicates: 0 Warnings: 0Query the performance.

mysql> select count(*) from employees;

+----------+

| count(*) |

+----------+

| 25600000 |

+----------+

1 row in set (6.15 sec)Query the distinct performance.

mysql> select count(distinct id) from employees ;

+--------------------+

| count(distinct id) |

+--------------------+

| 200000 |

+--------------------+

1 row in set (16.67 sec)Query the group distinct performance.

mysql> select count(*) from (select id from employees group by id) t;

+----------+

| count(*) |

+----------+

| 200000 |

+----------+

1 row in set (15.52 sec)Insert another 2 million entries.

begin;

CALL BatchInsert(1, 2000000);

commit;Insert 2 million entries to test table 2. Note that, again, you may need to scroll right and left to see all the information.

CREATE TABLE employees1 (

id INT NOT NULL,

fname VARCHAR(30),

lname VARCHAR(30),

birth TIMESTAMP,

hired DATE NOT NULL DEFAULT '1970-01-01',

separated DATE NOT NULL DEFAULT '9999-12-31',

job_code INT NOT NULL,

store_id INT NOT NULL

);

DROP PROCEDURE IF EXISTS BatchInser1;

delimiter // -- 把界定符改成双斜杠

CREATE PROCEDURE BatchInsert1(IN init INT, IN loop_time INT) -- 第一个参数为初始ID号(可自定义),第二个位生成MySQL记录个数

BEGIN

DECLARE Var INT;

DECLARE ID INT;

SET Var = 0;

SET ID = init;

WHILE Var < loop_time DO

insert into employees1

(id, fname, lname, birth, hired, separated, job_code, store_id)

values

(ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID);

SET ID = ID + 1;

SET Var = Var + 1;

END WHILE;

END;

//

delimiter ; -- 界定符改回分号Use "loop insert" to insert 2 million rows.

-- 开启事务插入,否则会很慢

begin;

CALL BatchInsert1(1, 2000000);

commit;

Query OK, 1 row affected (1 min 7.06 sec)Update 25.6 million data entries, perform many-to-one JOIN on 2 million entries, and then perform grouping and sorting.

select t1.lname,count(*) from employees t1 join employees1 t2 using (id) group by t1.lname order by count(*) desc,lname limit 10;Query the performance. Since no results are returned for the preceding query for several hours, another table with 2 million entries has to be created for the query test.

CREATE TABLE employees2 (

id INT NOT NULL,

fname VARCHAR(30),

lname VARCHAR(30),

birth TIMESTAMP,

hired DATE NOT NULL DEFAULT '1970-01-01',

separated DATE NOT NULL DEFAULT '9999-12-31',

job_code INT NOT NULL,

store_id INT NOT NULL

);

DROP PROCEDURE IF EXISTS BatchInser2;

delimiter // -- 把界定符改成双斜杠

CREATE PROCEDURE BatchInsert2(IN init INT, IN loop_time INT) -- 第一个参数为初始ID号(可自定义),第二个位生成MySQL记录个数

BEGIN

DECLARE Var INT;

DECLARE ID INT;

SET Var = 0;

SET ID = init;

WHILE Var < loop_time DO

insert into employees2

(id, fname, lname, birth, hired, separated, job_code, store_id)

values

(ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID);

SET ID = ID + 1;

SET Var = Var + 1;

END WHILE;

END;

//

delimiter ; -- 界定符改回分号

-- 开启事务插入,否则会很慢

begin;

CALL BatchInsert2(1, 2000000);

commit;

Query OK, 1 row affected (1 min 7.06 sec)Create an index.

create index idx_employees2_1 on employees2(id);Create a query stored procedure to query 2 million times.

DROP PROCEDURE IF EXISTS select1;

delimiter // -- 把界定符改成双斜杠

CREATE PROCEDURE select1(IN init INT, IN loop_time INT) -- 第一个参数为初始ID号(可自定义),第二个位生成MySQL记录个数

BEGIN

DECLARE Var INT;

DECLARE ID1 INT;

DECLARE vid INT;

DECLARE vfname VARCHAR(30);

DECLARE vlname VARCHAR(30);

DECLARE vbirth TIMESTAMP;

DECLARE vhired DATE;

DECLARE vseparated DATE;

DECLARE vjob_code INT;

DECLARE vstore_id INT;

SET Var = 0;

SET ID1 = init;

WHILE Var < loop_time DO

select t.id,t.fname,t.lname,t.birth,t.hired,t.separated,t.job_code,t.store_id

into

vid,vfname,vlname,vbirth,vhired,vseparated,vjob_code,vstore_id

from employees2 t

where t.id=id1;

SET ID1 = ID1 + 1;

SET Var = Var + 1;

END WHILE;

END;

//

delimiter ; -- 界定符改回分号Perform a simple query based on KEY, query 2 million times of the time consuming.

-- 开启事务查询

begin;

CALL select1(1, 2000000);

commit;

Query OK, 1 row affected (1 min 10.23 sec)Continue to test 100 million data entries.

mysql> insert into employees select * from employees;

Query OK, 27600000 rows affected (4 min 38.62 sec)

Records: 27600000 Duplicates: 0 Warnings: 0

mysql> insert into employees select * from employees;

Query OK, 55200000 rows affected (11 min 13.40 sec)

Records: 55200000 Duplicates: 0 Warnings: 0

mysql> select count(*) from employees;

+-----------+

| count(*) |

+-----------+

| 110400000 |

+-----------+

1 row in set (28.00 sec)

mysql> select count(distinct id) from employees ;

+--------------------+

| count(distinct id) |

+--------------------+

| 2000000 |

+--------------------+

1 row in set (1 min 17.73 sec)

mysql> select count(*) from (select id from employees group by id) t;

+----------+

| count(*) |

+----------+

| 2000000 |

+----------+

1 row in set (1 min 24.64 sec)Perform a full update of 110 million entries.

mysql> update employees set lname=lname||'new';

Query OK, 110400000 rows affected, 65535 warnings (21 min 30.34 sec)

Rows matched: 110400000 Changed: 110400000 Warnings: 220800000Update 110 million entries, perform many-to-one JOIN on 2 million entries, and then perform grouping and sorting. However, no query results are returned in more than 3 hours.

select t1.lname,count(*) from employees t1 join employees1 t2 using (id) group by t1.lname order by count(*) desc,lname limit 10;Create an index for 110 million entries.

mysql> create index idx_employees_1 on employees(id);

Query OK, 0 rows affected (3 min 49.04 sec)

Records: 0 Duplicates: 0 Warnings: 0Create a test table.

CREATE TABLE employees (

id INT NOT NULL,

fname VARCHAR(30),

lname VARCHAR(30),

birth TIMESTAMP,

hired DATE NOT NULL DEFAULT '1970-01-01',

separated DATE NOT NULL DEFAULT '9999-12-31',

job_code INT NOT NULL,

store_id INT NOT NULL

);Use srf to quickly insert 200,000 data entries.

\timing

insert into employees

(id, fname, lname, birth, hired, separated, job_code, store_id)

select

ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID

from generate_series(1,200000) id;

INSERT 0 200000

Time: 355.652 msYou can also use the same "loop insert" method as that for MySQL to insert 200,000 entries.

create or replace function BatchInsert(IN init INT, IN loop_time INT) -- 第一个参数为初始ID号(可自定义),第二个位生成记录个数

returns void as $$

DECLARE

Var INT := 0;

begin

for id in init..init+loop_time-1 loop

insert into employees

(id, fname, lname, birth, hired, separated, job_code, store_id)

values

(ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID);

end loop;

end;

$$ language plpgsql strict;

db1=# select batchinsert(1,200000);

batchinsert

-------------

(1 row)

Time: 1292.559 ms (00:01.293)Use "insert into" to continue with the batch insertion.

db1=> insert into employees select * from employees ;

INSERT 0 400000

Time: 322.335 ms

db1=> insert into employees select * from employees ;

INSERT 0 800000

Time: 835.365 ms

db1=> insert into employees select * from employees ;

INSERT 0 1600000

Time: 1622.475 ms (00:01.622)

db1=> insert into employees select * from employees ;

INSERT 0 3200000

Time: 3583.787 ms (00:03.584)

db1=> insert into employees select * from employees ;

INSERT 0 6400000

Time: 7277.764 ms (00:07.278)

db1=> insert into employees select * from employees ;

INSERT 0 12800000

Time: 15639.482 ms (00:15.639)

db1=> \dt+ employees

List of relations

Schema | Name | Type | Owner | Size | Description

--------+-----------+-------+---------+---------+-------------

public | employees | table | user123 | 2061 MB |

(1 row)Query the performance.

db1=> select count(*) from employees ;

count

----------

25600000

(1 row)

Time: 604.982 msRequest the distinct performance.

db1=> select count(distinct id) from employees ;

count

--------

200000

(1 row)

Time: 7852.604 ms (00:07.853)Query the group distinct performance.

db1=> select count(*) from (select id from employees group by id) t;

count

--------

200000

(1 row)

Time: 2982.907 ms (00:02.983)Insert another 2 million entries.

CREATE TABLE employees1 (

id INT NOT NULL,

fname VARCHAR(30),

lname VARCHAR(30),

birth TIMESTAMP,

hired DATE NOT NULL DEFAULT '1970-01-01',

separated DATE NOT NULL DEFAULT '9999-12-31',

job_code INT NOT NULL,

store_id INT NOT NULL

);

insert into employees1

(id, fname, lname, birth, hired, separated, job_code, store_id)

select

ID, CONCAT('chen', ID), CONCAT('haixiang', ID), Now(), Now(), Now(), 1, ID

from generate_series(1,2000000) id;

INSERT 0 2000000

Time: 3037.777 ms (00:03.038)Insert 2 million entries to test table 2.

select t1.lname,count(*) from employees t1 join employees1 t2 using (id) group by t1.lname order by count(*) desc,lname limit 10;

lname | count

----------------+-------

haixiang1 | 129

haixiang10 | 129

haixiang100 | 129

haixiang1000 | 129

haixiang10000 | 129

haixiang100000 | 129

haixiang100001 | 129

haixiang100002 | 129

haixiang100003 | 129

haixiang100004 | 129

(10 rows)

Time: 8897.907 ms (00:08.898)Update 25.6 million data entries, perform many-to-one JOIN on 2 million entries, and then perform grouping and sorting.

select t1.lname,count(*) from employees t1 join employees1 t2 using (id) group by t1.lname order by count(*) desc,lname limit 10;

lname | count

----------------+-------

haixiang1 | 129

haixiang10 | 129

haixiang100 | 129

haixiang1000 | 129

haixiang10000 | 129

haixiang100000 | 129

haixiang100001 | 129

haixiang100002 | 129

haixiang100003 | 129

haixiang100004 | 129

(10 rows)

Time: 8897.907 ms (00:08.898)Query the performance of index creation.

create index idx_employees1_1 on employees1(id);

CREATE INDEX

Time: 1436.346 ms (00:01.436)Query the time of querying 2 million entries based on keys.

do language plpgsql $$

declare

begin

for i in 1..2000000 loop

perform * from employees1 where id=i;

end loop;

end;

$$;

DO

Time: 9515.728 ms (00:09.516)

db1=> select 9515.728/2000000;

?column?

------------------------

0.00475786400000000000

(1 row)INSERT 0 27600000

Time: 25050.665 ms (00:25.051)

db1=> INSERT INTO employees select * from employees;

INSERT 0 55200000

Time: 64726.430 ms (01:04.726)

db1=> select count(*) from employees;

count

-----------

110400000

(1 row)

Time: 7286.152 ms (00:07.286)

db1=> select count(distinct id) from employees;

count

---------

2000000

(1 row)

Time: 39783.068 ms (00:39.783)

db1=> select count(*) from (select id from employees group by id) t;

count

---------

2000000

(1 row)

Time: 14668.305 ms (00:14.668)

db1=> select t1.lname,count(*) from employees t1 join employees1 t2 using (id) group by t1.lname order by count(*) desc,lname limit 10;

lname | count

----------------+-------

haixiang1 | 516

haixiang10 | 516

haixiang100 | 516

haixiang1000 | 516

haixiang10000 | 516

haixiang100000 | 516

haixiang100001 | 516

haixiang100002 | 516

haixiang100003 | 516

haixiang100004 | 516

(10 rows)

Time: 33731.431 ms (00:33.731)Update 110 million entries.

db1=> update employees set lname=lname||'new';

UPDATE 110400000

Time: 385372.063 ms (06:25.372)Create an index.

db1=> create index idx_employees_1 on employees(id);

CREATE INDEX

Time: 70450.491 ms (01:10.450)Now, here's a comprehensive performance comparison between MySQL 8.0 and PostgreSQL 12. Both of these database have the same specifications of 8 cores, 32 GB memory, and 1,500 GB ESSDs.

The above tests show that in most scenarios, the overall performance of Alibaba Cloud ApsaraDB RDS for PostgreSQL is an order of magnitude higher than MySQL, so by using PostgreSQL and MySQL wisely in a combined fashion, understanding their strengths and weaknesses, you can greatly reduce your enterprise's database expenses.

While continuing to wage war against the worldwide outbreak, Alibaba Cloud will play its part and will do all it can to help others in their battles with the coronavirus. Learn how we can support your business continuity at https://www.alibabacloud.com/campaign/supports-your-business-anytime

How Is Alibaba DAMO Academy Helping to Fight the Outbreak of the Novel Coronavirus?

How Does DingTalk Cope with Traffic Spikes during the COVID-19 Outbreak Using ROS?

2,605 posts | 747 followers

FollowAlibaba Clouder - April 23, 2020

Alibaba Clouder - December 28, 2020

Alibaba Clouder - April 9, 2020

Alibaba Clouder - April 30, 2020

Alibaba Clouder - March 17, 2020

Alibaba Clouder - March 19, 2020

2,605 posts | 747 followers

Follow Database Overview

Database Overview

ApsaraDB: Faster, Stronger, More Secure

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn MoreMore Posts by Alibaba Clouder