By Afzaal Ahmad Zeeshan, Alibaba Cloud Community Blog author and Alibaba Cloud MVP.

Even with logical modularity and segmentation inside our monolith applications, overall maintainability can be quite challenging. Moreover, deploying a single large application or package that couples multiple modules introduces several issues: complexity, difficulty finding the problem area when an exception or dependency conflict occurs, resource consumption, and so on. This makes continuous deployment a nightmare of epic proportions: these applications need time to boot and to set up caching, logging, and other services, so frequent deployments hurt performance.

This article is part 2 of our previously published article, Scalable Serverless APIs on Alibaba Cloud, which covers the basics of serverless development; you can review that material there. Before we dive into the architectural design of serverless applications, let us quickly review how we arrived at serverless, starting from the old days of monolith applications and their development and deployment designs.

Embracing microservices to deal with scalability issues in a monolith architecture is a common business practice, because microservices solve the scalability problems of a monolith application quite effectively. Fundamentally, this means splitting the monolith application into independently deployable subservices, each with its own architectural flow and business logic. For instance, think about scaling only the components that serve a specialized, task-specific feature. Unlike a monolith architecture, microservices let us scale only the required components (individual, autonomous services). This way, we can scale, change the technology of, and refactor individual services without disturbing or risking the overall performance of our solution.

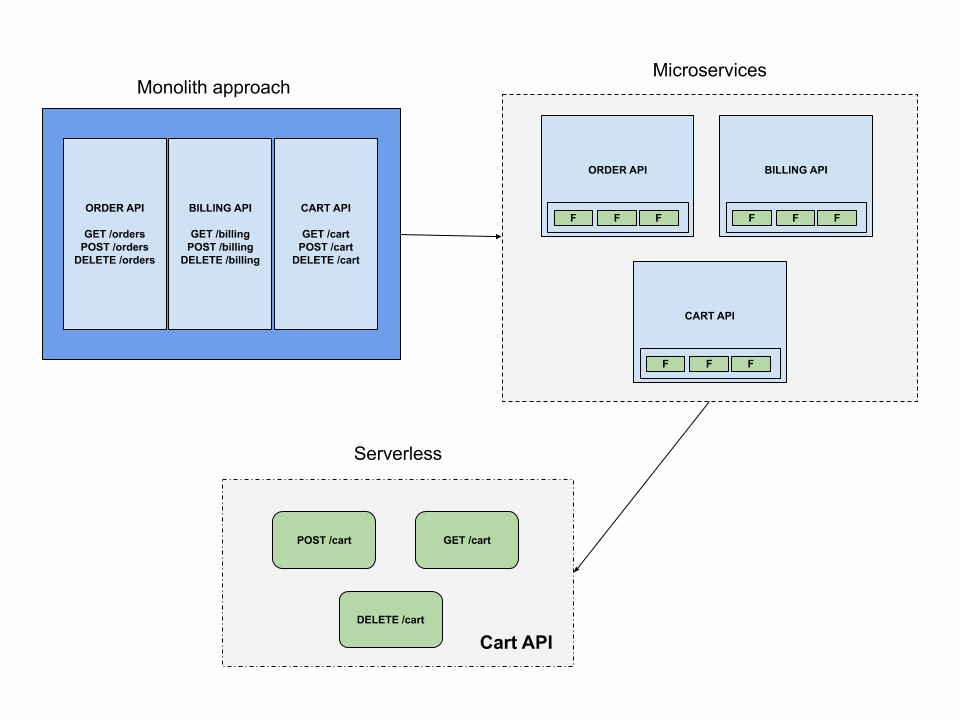

The figure above shows how a monolith routes every interaction through one process to each service component, and then to the individual endpoints within those components. This design is poorly suited to modern solutions and leads to scalability issues. That is why such systems are often updated to service-oriented patterns, such as microservices.

Microservices allow each part of your solution to be deployed as a separate process and let your developers focus on the core business logic of each individual service. Consider a solution deployed for an e-commerce website: your catalogue endpoints have nothing in common with your cart or user profile endpoints, so they can be deployed separately and given separate hardware and infrastructure quotas.

Furthermore, moving your solution to a microservices-based architecture solves other major problems by supporting a well-organized architecture, logical and modular decoupling, and the freedom to work in multiple languages. For example, suppose our logging API is best written in Python; that does not mean we should force the other modules to use the same language or ecosystem.

Unfortunately, moving your application from a monolith to microservices does not solve every problem. At larger scale, a microservices-based architecture must deal with scalability in its own right. The process of scaling a microservices application is entirely different from scaling a monolith application; it demands highly skilled engineers and is a challenging phase for any application.

Scaling microservices includes scaling individual components and services while accounting for the complexity of the overall system. Because the system is distributed, one of the biggest challenges during scaling is resource allocation, along with understanding the requirements of the intermediate layer that handles communication across the system.

Assume we have an e-commerce site, like the one discussed above, made up of different services such as a booking API, cart API, checkout API, and billing API. In the legacy monolith approach, we would have one large package containing all the modules, and our users would interact with this one large process regardless of how much each module is actually used. This creates a single point of failure in our system, and scaling the solution requires redeploying the complete application.

On the other hand, in a microservices-based architecture, we break this large process into small services: booking, cart, checkout, and billing become individual services, each with its own ecosystem and architectural dependencies, so each can handle load and traffic spikes efficiently according to user demand.
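To make the intermediate communication layer mentioned above more concrete, here is a minimal Python sketch of path-prefix routing that forwards each request path to the service that owns it. The service names and internal URLs are hypothetical placeholders; in practice this role is usually filled by a managed gateway rather than hand-written code.

```python
from typing import Optional

# Hypothetical mapping from URL prefix to the base URL of each independent service.
SERVICE_ROUTES = {
    "/booking": "http://booking.internal",
    "/cart": "http://cart.internal",
    "/checkout": "http://checkout.internal",
    "/billing": "http://billing.internal",
}


def resolve(path: str) -> Optional[str]:
    """Return the upstream URL for a request path, or None if no service owns it."""
    for prefix, base_url in SERVICE_ROUTES.items():
        # Match the prefix exactly or as a whole path segment,
        # so "/cartoon" is not routed to the cart service.
        if path == prefix or path.startswith(prefix + "/"):
            return base_url + path[len(prefix):]
    return None
```

Because each prefix maps to its own service, the cart service can be scaled or redeployed without touching booking, checkout, or billing.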

To push scalability further than microservices allow, we turn to serverless architecture. This means we no longer deal with processes or services; instead, we work directly with endpoints and functions. In the previous post, we discussed how a serverless function is created and deployed on Alibaba Cloud; in this post, we discuss how we can lift and shift our existing Web APIs and HTTP modules to benefit from serverless architecture.

As a result, from the user's perspective, requests are served directly according to the actual load and requirements. Even inside a microservice, only a couple of endpoints are called often, and a few endpoints receive most of the HTTP traffic. In the context of the example above, we no longer work with individual service instances but with standalone functions. Working with standalone functions is more rational, cost-effective, and business-friendly, because each function receives its own hardware quota and infrastructure support. Instead of paying for the memory of functions or endpoints that never get called, we remove them from the process and load them only as needed.
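The cost argument can be made concrete with some arithmetic. The sketch below measures compute in GB-seconds (memory multiplied by execution time), a unit commonly used in serverless billing. The traffic figures are hypothetical and no prices are assumed, so multiply the results by your provider's current rates; per-request fees and cold starts are not modelled here.

```python
def function_gb_seconds(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Compute consumed by a function that runs only when it is invoked."""
    return invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)


def always_on_gb_seconds(hours: float, memory_mb: int) -> float:
    """Compute reserved by a process that stays up whether or not it is called."""
    return hours * 3600 * (memory_mb / 1024)


# Hypothetical month: one hot endpoint, one rarely used endpoint, and the
# always-on process that would otherwise host both of them.
hot = function_gb_seconds(invocations=1_000_000, avg_duration_ms=100, memory_mb=256)
cold = function_gb_seconds(invocations=10_000, avg_duration_ms=200, memory_mb=512)
server = always_on_gb_seconds(hours=720, memory_mb=1024)
print(f"functions: {hot + cold:,.0f} GB-s, always-on process: {server:,.0f} GB-s")
```

With these made-up inputs, the two functions consume about 26,000 GB-seconds, while a 1,024 MB process kept up for a 30-day month (720 hours) reserves 2,592,000 GB-seconds, most of it idle.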

The key principle of the serverless approach is that we only execute functions, without caring about the overall infrastructure and all the headaches of maintenance, tooling, scaling, and so on. You should opt for serverless computing mainly when your application needs to run code only when certain events trigger it, and when you do not want to pay unnecessarily for resources you are not using or are underusing. Moving to serverless may seem to oversimplify things, but it is significantly beneficial for overall business development.

Alibaba Cloud Function Compute takes the operational load off your hands entirely. Everything seems magical because the management is fully abstracted away from you, although under the hood the platform still performs the steps needed to support all the operational overhead of your system. All you need to do is write code for the standalone functions that get triggered and manage a repository for that code.

Function Compute supports an event-based architecture, which gives you flexible resource consumption and a fast development and deployment process that scales automatically and boosts your business productivity.
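For event-triggered (non-HTTP) functions, Function Compute's Python runtime calls a handler with the signature `handler(event, context)`, where `event` is the raw payload, typically delivered as bytes. The sketch below assumes a hypothetical JSON order event; the `order_id` and `action` fields are illustrative only, and the business logic is a placeholder.

```python
import json


def handler(event, context):
    """Event-triggered entry point.

    `event` is assumed to be a JSON payload such as
    {"order_id": "A-1001", "action": "created"} (a hypothetical shape).
    """
    payload = json.loads(event)  # json.loads accepts both bytes and str
    # Only do work for the event types this function cares about.
    if payload.get("action") != "created":
        return json.dumps({"skipped": True})
    # Placeholder for real business logic, such as sending a confirmation.
    return json.dumps({"processed": payload["order_id"]})
```

The function runs, and is billed, only when an event arrives; between events nothing is kept running on your behalf.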

The serverless approach can cut the cost of functions that are not used frequently. It also helps you balance costs during seasonal peaks, because you can identify the most-accessed functions and cut unnecessary spending.

Now, let us discuss a simple scenario in which we lift and shift an existing Web API to the Alibaba Cloud Function Compute platform. A typical API has several HTTP endpoints. A catalogue microservice, for example, might have endpoints that return the complete list of items in the database, show the available categories, show the most recently viewed items, or show a single item. If you look for a pattern here, you will notice that most users will not visit all of these pages at any time of day. At most, they visit the home page; sometimes they review the categories; and they rarely visit the recently viewed pages, because they have already found the product or have moved on to other services, such as a search service in our solution.

In this case, a microservice solves a couple of problems for us, but it still carries traces of the ancestral problem: scaling endpoints and modules that are barely used. So, we create multiple functions and deploy our code in them.
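One low-effort way to lift and shift is to keep the existing endpoint logic untouched and wrap it in a thin HTTP adapter, one per function. The sketch below assumes the WSGI-style `handler(environ, start_response)` signature that Function Compute's Python runtime uses for HTTP-triggered functions; `make_http_handler`, `get_category`, and `category_handler` are hypothetical names chosen for illustration.

```python
import json
from typing import Callable, Dict, Iterable
from urllib.parse import parse_qs


def make_http_handler(endpoint: Callable[[Dict[str, str]], object]) -> Callable:
    """Wrap an existing endpoint function as a WSGI-style HTTP handler."""

    def handler(environ, start_response) -> Iterable[bytes]:
        # Flatten the query string, keeping the first value for each key.
        query = {k: v[0] for k, v in parse_qs(environ.get("QUERY_STRING", "")).items()}
        try:
            status, payload = "200 OK", endpoint(query)
        except KeyError as exc:
            # Simplification: a KeyError from the endpoint means a missing parameter.
            status, payload = "400 Bad Request", {"error": f"missing parameter {exc}"}
        body = json.dumps(payload).encode("utf-8")
        start_response(status, [("Content-Type", "application/json")])
        return [body]

    return handler


# Existing endpoint logic from the Web API, left unchanged (hypothetical example).
def get_category(params: Dict[str, str]) -> dict:
    return {"category": params["name"]}


# The callable that the function's handler setting would point at.
category_handler = make_http_handler(get_category)
```

The design choice here is that business logic stays framework-agnostic, so the same `get_category` could still be served by the original Web API while it is being migrated.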

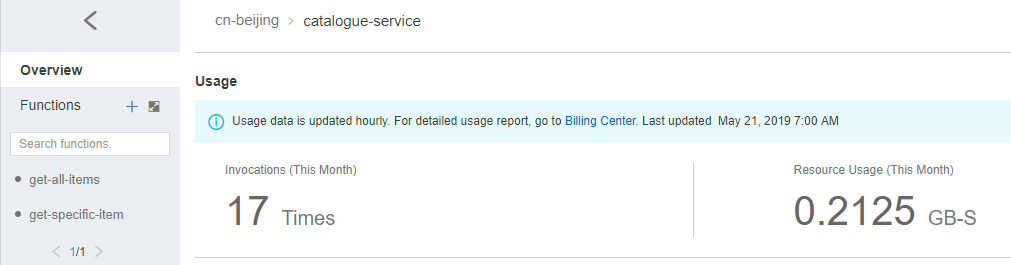

Inside the service, you can see our two functions and an overview of the service utilization. To keep things simple, I wrote two functions: one endpoint lists the entire product catalogue, and the other returns a single item.
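The two functions could look roughly like the following WSGI-style handlers. This is a sketch, not the code shown in the screenshots: the in-memory `CATALOGUE`, the `id` query parameter, and the names `list_items` and `get_item` are assumptions, and a real deployment would read from a shared data store. Each handler would be deployed as its own function with its own HTTP trigger, so each is scaled and billed separately.

```python
import json
from urllib.parse import parse_qs

# Hypothetical in-memory catalogue standing in for the real data store.
CATALOGUE = [
    {"id": 1, "name": "Laptop", "price": 899.0},
    {"id": 2, "name": "Headphones", "price": 59.0},
]


def _json_response(start_response, status, payload):
    """Serialize `payload` as JSON and send it with the given status line."""
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def list_items(environ, start_response):
    """Function 1: return the whole catalogue."""
    return _json_response(start_response, "200 OK", CATALOGUE)


def get_item(environ, start_response):
    """Function 2: return one item, selected by an ?id= query parameter."""
    params = parse_qs(environ.get("QUERY_STRING", ""))
    try:
        item_id = int(params["id"][0])
    except (KeyError, ValueError):
        return _json_response(start_response, "400 Bad Request",
                              {"error": "id must be an integer"})
    for item in CATALOGUE:
        if item["id"] == item_id:
            return _json_response(start_response, "200 OK", item)
    return _json_response(start_response, "404 Not Found", {"error": "item not found"})
```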

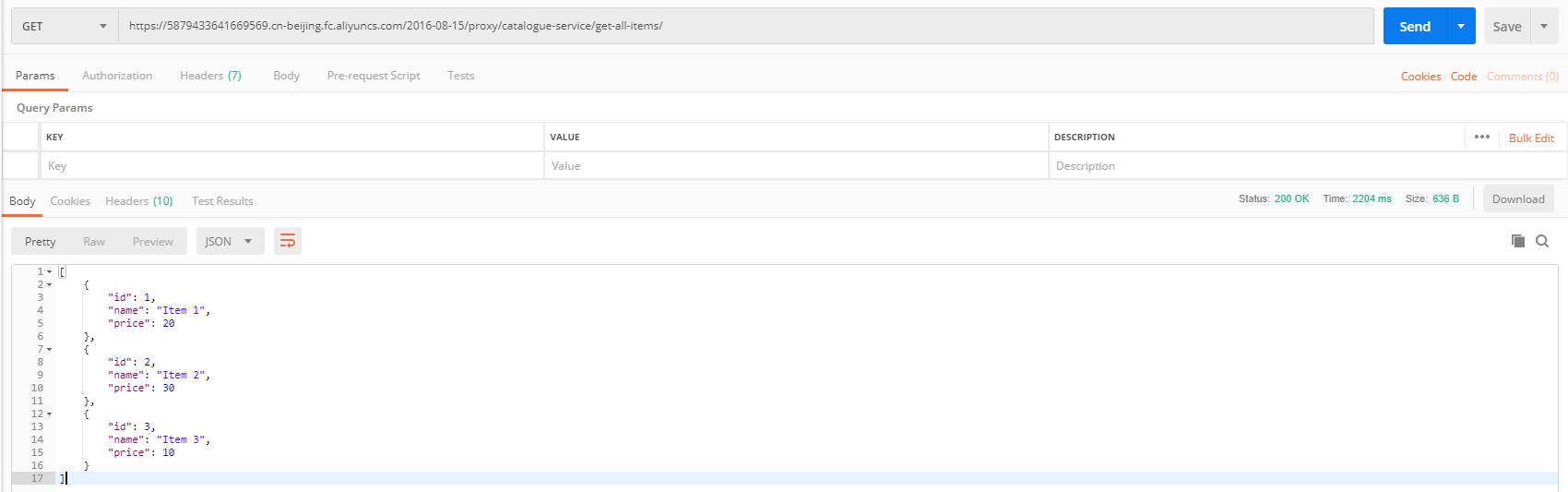

Using Postman, if we send a request to our service, this is what we get:

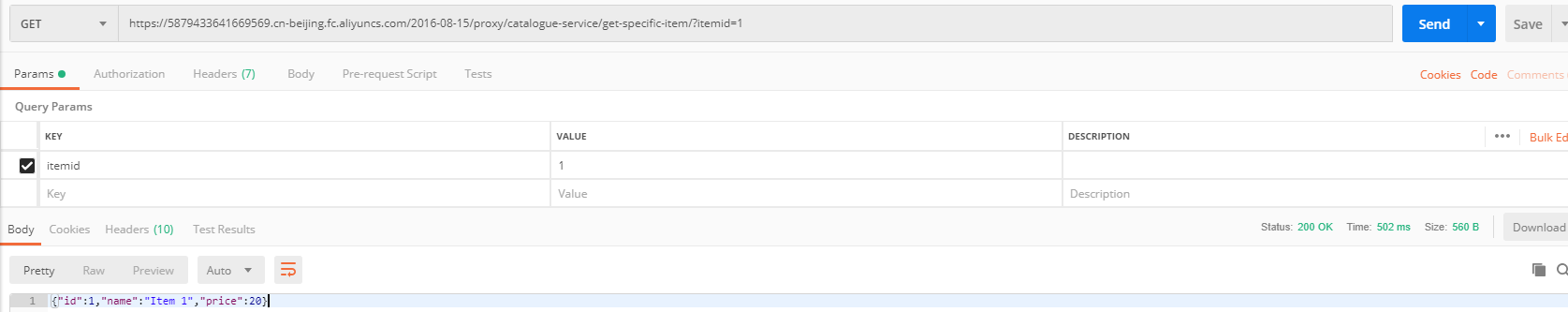

Similarly, if we send a request to the endpoint that returns an individual item, we get the following response:

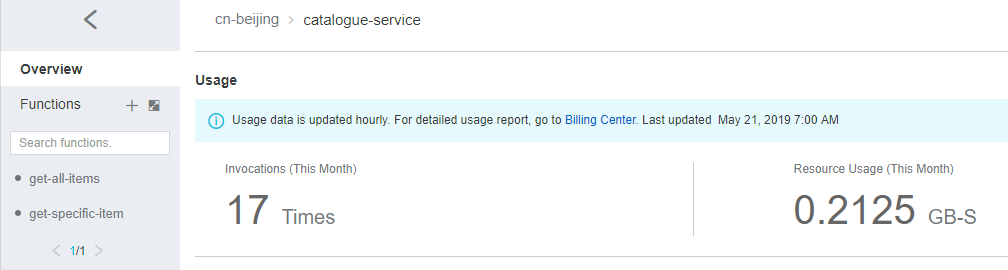
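If you want to exercise a handler the way Postman does before deploying it, Python's standard `wsgiref` server can host it locally. This sketch serves a hypothetical `get_item` handler on a free local port, sends one GET request with `http.client`, and returns the status and decoded JSON body. It only approximates the Function Compute HTTP trigger and does not replace testing the deployed function.

```python
import http.client
import json
import threading
from wsgiref.simple_server import WSGIRequestHandler, make_server


class QuietRequestHandler(WSGIRequestHandler):
    """Request handler that suppresses the default per-request access log."""

    def log_message(self, format, *args):
        pass


def get_item(environ, start_response):
    """Hypothetical stand-in for the single-item function."""
    body = json.dumps({"id": 1, "name": "Laptop"}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json")])
    return [body]


def call_locally(app, path="/"):
    """Serve `app` on a free local port, send one GET request, return (status, JSON body)."""
    server = make_server("127.0.0.1", 0, app, handler_class=QuietRequestHandler)
    server.timeout = 5  # stop waiting if no request arrives
    # handle_request() serves exactly one request, then the thread exits.
    thread = threading.Thread(target=server.handle_request)
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        result = (response.status, json.loads(response.read()))
        conn.close()
    finally:
        thread.join()
        server.server_close()
    return result
```

For example, `call_locally(get_item, "/?id=1")` returns the status code and the parsed body, much like the Postman response above.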

How does this change the overall system? Each of our endpoints is now invoked directly by HTTP requests, and we can explore how each one is utilized as a standalone instance. See the following images:

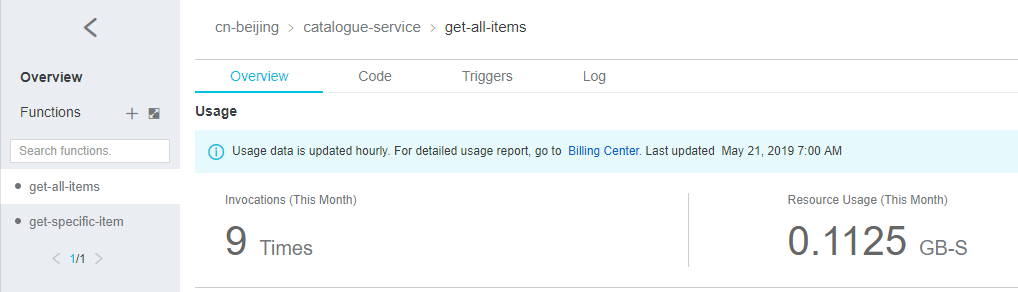

This shows the overall usage of our web solution: 17 requests and a small amount of infrastructure usage. Now we can explore how each of our functions uses infrastructure. Here is the first function, which returns every item:

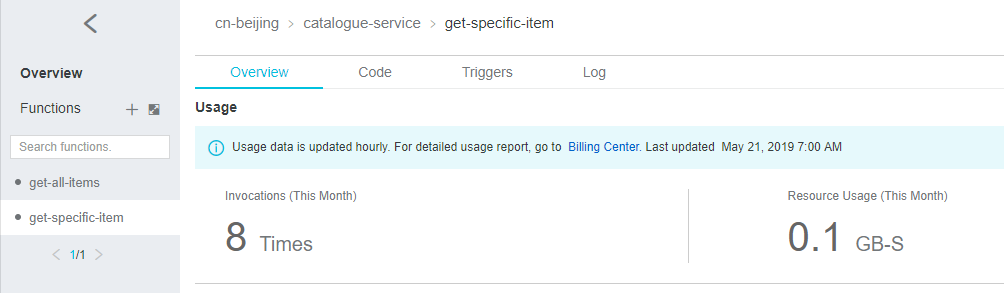

The second function shows a different infrastructure consumption.
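The console already breaks usage down per function. As a rough illustration of how such a breakdown is computed, the sketch below aggregates a hypothetical list of invocation records into request counts and GB-seconds per function; the `Invocation` record format is an assumption, not Function Compute's log schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Invocation:
    """One hypothetical invocation record."""
    function: str
    duration_ms: float
    memory_mb: int


def usage_by_function(records: Iterable[Invocation]) -> Dict[str, Dict[str, float]]:
    """Sum request counts and GB-seconds (memory x execution time) per function."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"requests": 0, "gb_seconds": 0.0}
    )
    for record in records:
        entry = totals[record.function]
        entry["requests"] += 1
        entry["gb_seconds"] += (record.duration_ms / 1000) * (record.memory_mb / 1024)
    return dict(totals)
```

Keeping the totals separate per function is what makes it possible to see which endpoint actually drives the bill.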

This is how we can separate modules and functions into their own instances. Our cost is now tied to the functions that are actually executed, not to the extra memory or CPU charges that idle functions or microservices might incur. You might argue that the overall cost is still the same, but because the code base and the variable or parameter requirements differ between these functions, once your functions have been executed a couple of million times you will start to see improvements in both performance and cost.

That is how we can extend our existing applications and solutions to serverless and improve performance, while also controlling the associated costs.
