By Sun Xiangzheng

Alibaba Cloud Elastic High Performance Computing (E-HPC) provides users with HPC services over the public cloud based on the Alibaba Cloud infrastructure. In addition to the computing resources, E-HPC also provides many stand-alone functional modules, such as job management, user management, cluster command execution, and CloudMetrics. CloudMetrics allows users to view the usage of cluster resources on the performance dashboard and monitor the status of all cluster nodes.

For node performance, users can view the change curves and historical data of node-specific metrics and associate this information with the scheduled job information. For process performance, users can view the historical information of specific processes to decide which process needs to be analyzed. Based on the performance analysis, users can obtain the function distribution and call stack information of hotspots and learn the execution status of applications.

Weather Research and Forecasting (WRF) is open source numerical weather prediction (NWP) software that adopts the next-generation mesoscale forecasting model and is widely used in the meteorological industry. As meteorological and climatic computing gradually migrates to the cloud, WRF must be optimized to adapt to the cloud supercomputing environment. This article describes how to use CloudMetrics to analyze the performance characteristics of WRF running on the cloud and optimize WRF step by step.

The example used for computing in this article is based on Chinese weather forecast data. The platform used is ecs.scch5.16xlarge, which is equipped with 32 cores, 64 vCPUs, 192 GB of memory, a 10 Gbit/s Ethernet adapter, and a 46 Gbit/s RoCE network adapter.

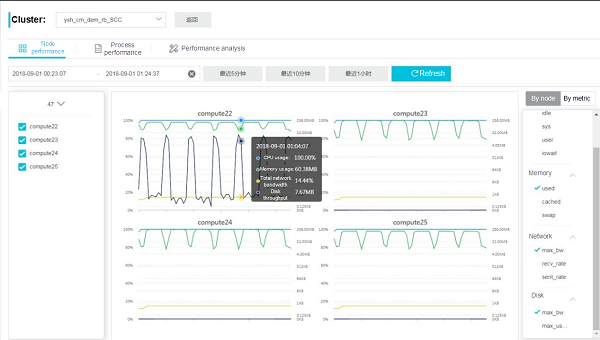

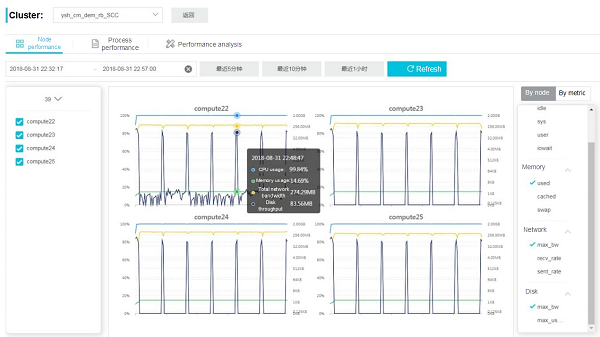

CloudMetrics is connected to the cluster scheduling system and stores historical data automatically. You can therefore submit jobs directly in the scheduling system and, after they are completed, view their runtime performance characteristics at any time. Figure 1 displays the runtime performance characteristics of WRF.

Figure 1a. Performance characteristics displayed by node

Figure 1b. Performance characteristics displayed by metric

Figure 1a shows the changes of different metrics of a single node, which exhibit the obvious periodic nature of the program. The storage bandwidth increases significantly at regular intervals, accompanied by a decrease in network communication between nodes. In this example, a total of seven result files are generated, corresponding to the seven peaks of the monitored storage bandwidth. In addition, each result file is written after an hourly forecast completes, so no pressure is imposed on the storage bandwidth while the forecast itself is being computed.
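The link between result files and storage peaks can be checked programmatically once the monitoring samples are exported. The following is a minimal sketch, not a CloudMetrics API: the trace values, the threshold, and the function name `count_bursts` are all hypothetical and only illustrate how write bursts in a bandwidth series could be counted.

```python
def count_bursts(samples, threshold):
    """Count contiguous runs of samples that exceed the threshold."""
    bursts = 0
    in_burst = False
    for value in samples:
        if value > threshold and not in_burst:
            # Rising edge: a new burst of storage activity starts here.
            bursts += 1
            in_burst = True
        elif value <= threshold:
            in_burst = False
    return bursts


# Hypothetical storage bandwidth trace (MB/s): mostly idle, with seven
# short write bursts, one per result file.
idle = [2.0] * 10
burst = [150.0, 180.0, 160.0]
trace = (idle + burst) * 7 + idle

print(count_bursts(trace, threshold=50.0))  # prints 7
```

If the number of bursts matches the number of result files, the periodic storage peaks are very likely the output writes and not some other I/O activity.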

Figure 1b shows the changes of network performance between nodes, which exhibit unbalanced loads between nodes. The unbalanced loads can be explained by the application logic. Weather forecasting depends on terrain (such as land or ocean), and the information exchanged on the process boundaries may vary with dynamic changes in load (such as cloud movement). Therefore, network communication between nodes is imbalanced. However, the network bandwidth of every node is relatively low. Because two network types are configured on the platform, you need to check whether the high-speed RoCE network is actually used.
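One simple way to put a number on this kind of imbalance is the ratio of the busiest node to the average node. The sketch below assumes per-node average bandwidths have already been read off the dashboard; the node names and values are hypothetical, and `imbalance_ratio` is an illustrative helper, not part of CloudMetrics.

```python
from statistics import mean


def imbalance_ratio(per_node):
    """Return max/mean of the per-node values; 1.0 means perfectly balanced."""
    values = list(per_node.values())
    avg = mean(values)
    if avg == 0:
        return 1.0  # No traffic at all: treat as balanced.
    return max(values) / avg


# Hypothetical average network bandwidth per node (Mbit/s).
bandwidth = {"node-a": 180.0, "node-b": 240.0, "node-c": 200.0, "node-d": 220.0}

ratio = imbalance_ratio(bandwidth)
print(f"imbalance ratio: {ratio:.2f}")
```

A ratio close to 1.0 indicates evenly distributed communication, while larger values indicate that some nodes exchange noticeably more boundary data than others.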

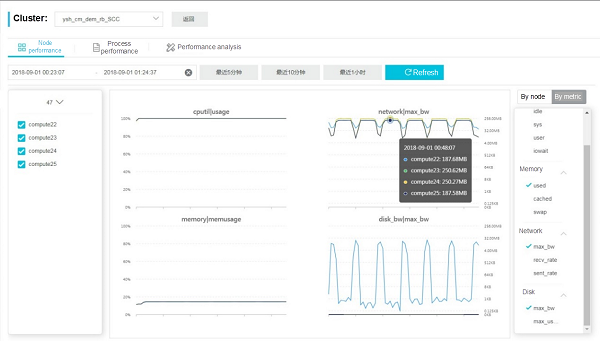

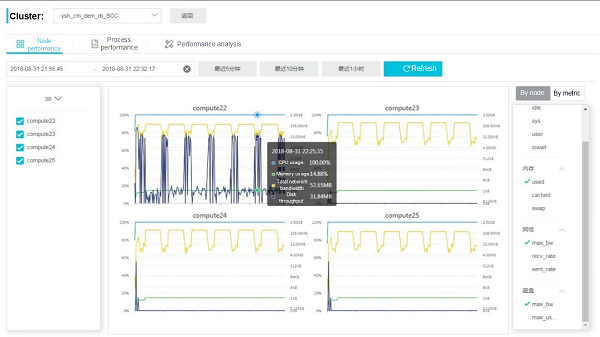

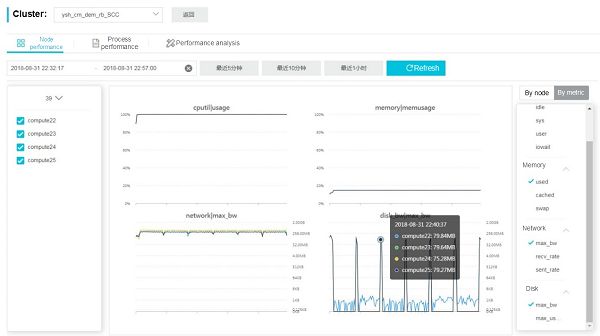

The run configuration indicates that MPI communication is set to use Ethernet. You therefore need to modify the network settings to use the high-speed RoCE network instead and resubmit the job for monitoring. Figure 2 shows the runtime performance characteristics of WRF obtained after MPI communication is changed to RoCE.

Figure 2a. Performance characteristics displayed by node

Figure 2b. Performance characteristics displayed by metric

Both the network bandwidth between nodes and the storage bandwidth increase while the program is running. This is because the RoCE network now carries the communication during computing, which frees the Ethernet bandwidth for storage operations. The network communication bandwidth increases from the original 180 Mbit/s and 240 Mbit/s to 320 Mbit/s and 450 Mbit/s.
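The relative gain implied by these figures is easy to work out. The sketch below uses only the four bandwidth values quoted above; the text does not say what the two figures in each pair represent, so they are simply paired in the order given, and the labels `first` and `second` are placeholders.

```python
def gain(before, after):
    """Return the ratio of the new value to the old value."""
    return after / before


# (Ethernet, RoCE) network communication bandwidth in Mbit/s, as quoted above.
# Pairing the figures in order is an assumption.
reported = {"first": (180, 320), "second": (240, 450)}

for label, (ethernet, roce) in reported.items():
    print(f"{label}: {gain(ethernet, roce):.2f}x")
```

Under that pairing, the observed communication bandwidth rises by roughly 1.8x to 1.9x after the switch to RoCE.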

The monitoring results indicate that the storage bandwidth is not balanced between nodes. Only the compute22 node handles the file operations. Therefore, it is difficult to effectively utilize the overall bandwidth resources of NAS.

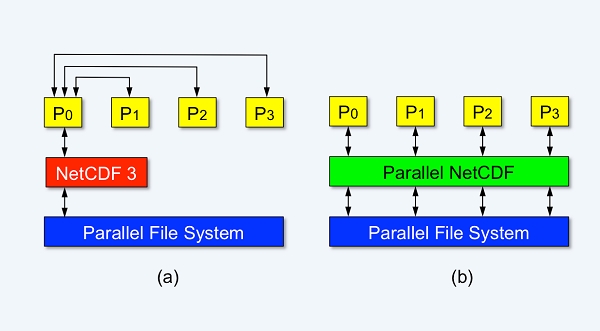

The logs show that file I/O operations are time consuming: about 25% more time is needed in certain examples, and this share may change with the computing scale. WRF uses the NetCDF file format. In the NetCDF classic mode, the primary process collects the result data from all secondary processes and completes the file writing operations, as shown in Figure 3a. This behavior is consistent with the network and storage monitoring data described above.

Figure 3. Comparison of NetCDF I/O schemes (3a. Serial I/O; 3b. Parallel I/O)

To improve the running efficiency, you can use the optimized parallel NetCDF (PNetCDF) scheme, that is, all nodes participate in I/O operations to reduce the wait time, as shown in Figure 3.b.
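To see why the parallel scheme shortens the wait, a rough cost model can help. The sketch below is a simplification under stated assumptions: all sizes and bandwidths are hypothetical, each node is assumed to reach the shared storage at the same rate regardless of how many nodes write at once, and contention and metadata overhead are ignored. Neither function corresponds to a NetCDF or PNetCDF API.

```python
def serial_write_time(total_mb, nodes, net_mb_s, storage_mb_s):
    """Primary node gathers data from the others, then writes it all alone."""
    gathered_mb = total_mb * (nodes - 1) / nodes  # Data that must cross the network.
    return gathered_mb / net_mb_s + total_mb / storage_mb_s


def parallel_write_time(total_mb, nodes, storage_mb_s):
    """Every node writes its own share of the data at the same time."""
    return (total_mb / nodes) / storage_mb_s


# Hypothetical numbers: 8000 MB of output, 4 nodes, 1000 MB/s network,
# 200 MB/s from each node to the shared storage.
serial = serial_write_time(8000, 4, net_mb_s=1000, storage_mb_s=200)
parallel = parallel_write_time(8000, 4, storage_mb_s=200)

print(f"serial: {serial:.1f} s, parallel: {parallel:.1f} s")
```

In this idealized model the parallel write time shrinks with the number of nodes, while the serial scheme is bounded by a single node's storage bandwidth. Real gains depend on how the storage back end scales with concurrent writers.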

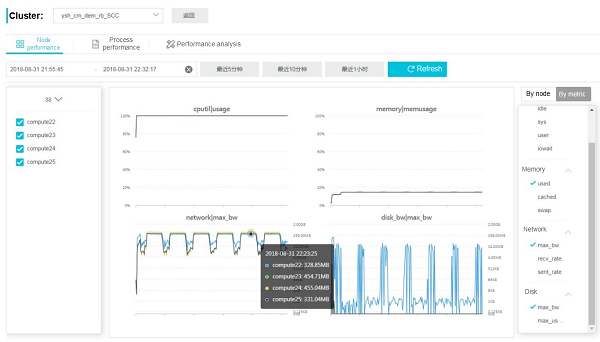

You can use the PNetCDF scheme to enhance WRF performance and resubmit the job for monitoring. Figure 4 shows the runtime performance characteristics of WRF obtained after both RoCE and the PNetCDF scheme are used.

Figure 4a. Performance characteristics displayed by node

Figure 4b. Performance characteristics displayed by metric

As shown in Figure 4, every node has monitoring data for metrics related to I/O operations, which is consistent with the working principle of the PNetCDF scheme. The monitoring results indicate that the file operation time is significantly reduced after each hourly forecast task is completed, and performance is further improved.

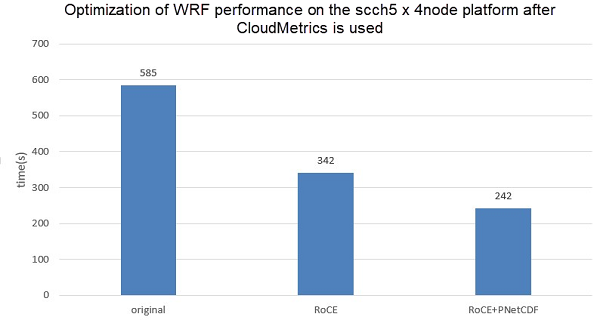

CloudMetrics on the E-HPC platform is a cluster operation monitoring and application performance analysis module. In the past, you had to rely on the timestamps in the WRF log to observe the running status of WRF. Now, you can fully monitor the runtime performance characteristics of WRF with the help of CloudMetrics. In addition, based on the runtime performance characteristics of the application, you can deploy the high-speed RoCE network and the optimized PNetCDF scheme to improve the operational efficiency of WRF step by step and eventually improve WRF performance by a factor of 2.4, as shown in Figure 5.

Figure 5. WRF performance optimization results (average time required to complete hourly forecasting)
