Recent reports on Kubernetes GPU scheduling and operation mechanisms suggest that the Kubernetes community will deprecate the legacy alpha.kubernetes.io/nvidia-gpu code in version 1.11 and completely remove GPU-specific scheduling and deployment code from the core codebase.

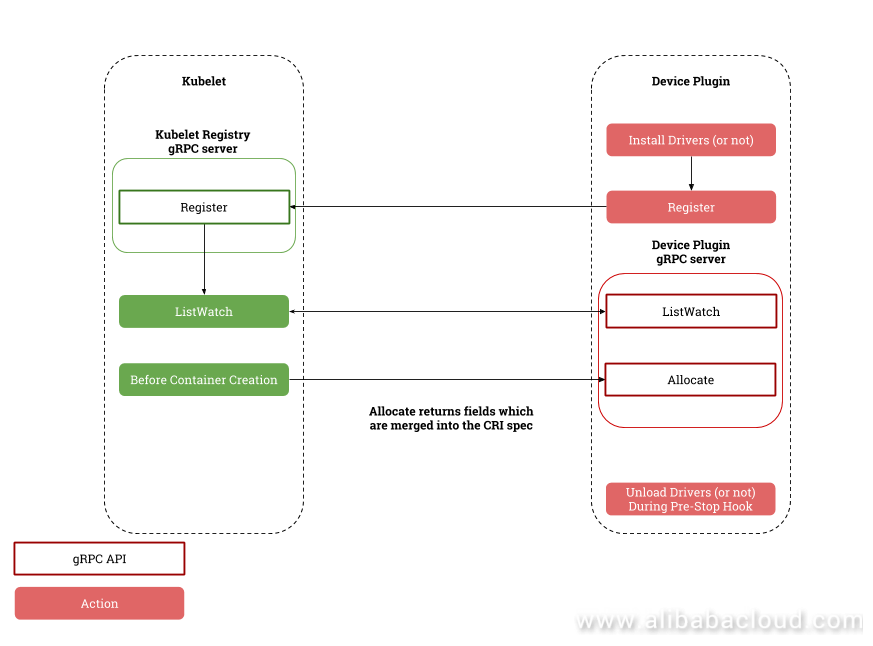

Instead, two built-in Kubernetes modules, Extended Resource and Device Plugin, will work together with device plugins developed by hardware vendors to schedule devices onto worker nodes in the cluster and then bind those devices to containers.

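Let me briefly introduce the two Kubernetes modules:

- Extended Resource: a mechanism that lets a node advertise custom node-level resources under a fully qualified name outside the kubernetes.io domain, such as nvidia.com/gpu. Pods request these resources the same way they request CPU and memory, and the scheduler accounts for them as integer quantities.
- Device Plugin: a generic framework that lets device vendors expose hardware to the Kubelet without changing Kubernetes code. It is enabled with the Kubelet flag --feature-gates=DevicePlugins=true. A device plugin is a gRPC service that implements ListAndWatch and Allocate and listens on a Unix socket under /var/lib/kubelet/device-plugins/, such as /var/lib/kubelet/device-plugins/nvidia.sock:

```protobuf
service DevicePlugin {
    // ListAndWatch returns a stream of device lists; the plugin sends
    // a new list whenever a device's state changes or a device disappears
    rpc ListAndWatch(Empty) returns (stream ListAndWatchResponse) {}

    // Allocate is called during container creation so that the plugin can
    // run device-specific setup and tell the Kubelet how to expose the devices
    rpc Allocate(AllocateRequest) returns (AllocateResponse) {}
}
```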
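To make the two RPCs more concrete, here is a minimal in-memory sketch in Python. It assumes no real gRPC transport: `SketchDevicePlugin`, `list_and_watch`, `allocate`, and `mark_unhealthy` are hypothetical stand-ins for the plugin side of ListAndWatch and Allocate. The `NVIDIA_VISIBLE_DEVICES` environment variable in the Allocate result is modeled on how NVIDIA's plugin tells the container runtime which GPUs to expose; other plugins may return mounts or device nodes instead.

```python
from collections import deque
from dataclasses import dataclass

HEALTHY = "Healthy"
UNHEALTHY = "Unhealthy"


@dataclass
class Device:
    id: str
    health: str = HEALTHY


class SketchDevicePlugin:
    """Hypothetical in-memory plugin; list_and_watch/allocate stand in for the two RPCs."""

    def __init__(self, device_ids):
        self.devices = {d: Device(d) for d in device_ids}
        # ListAndWatch first sends the full device list, then a new list on every change
        self._pending = deque([self._snapshot()])

    def _snapshot(self):
        # copy the devices so later health changes do not alter already-queued messages
        return [Device(d.id, d.health) for d in self.devices.values()]

    def mark_unhealthy(self, device_id):
        # e.g. a failed health check; the change is queued as a fresh full list
        self.devices[device_id].health = UNHEALTHY
        self._pending.append(self._snapshot())

    def list_and_watch(self):
        # drains queued updates; a real plugin keeps the gRPC stream open indefinitely
        while self._pending:
            yield self._pending.popleft()

    def allocate(self, device_ids):
        unknown = [d for d in device_ids if d not in self.devices]
        if unknown:
            raise ValueError(f"unknown devices: {unknown}")
        # modeled on NVIDIA's plugin: tell the runtime which GPUs the container may see
        return {"envs": {"NVIDIA_VISIBLE_DEVICES": ",".join(device_ids)}}
```

Sending the whole device list on every change, rather than a diff, keeps the receiving side simple: it can replace its view of the devices wholesale with each message.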

The two mechanisms work together as follows.

When the plugin starts, it registers with the Kubelet over gRPC through /var/lib/kubelet/device-plugins/kubelet.sock, providing its listening Unix socket, its API version, and the resource name (for example, nvidia.com/gpu). The Kubelet then exposes the devices in the Node status and reports them to the API server as an Extended Resource. The scheduler uses this information to place pods that request the devices.
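The registration and reporting steps can be sketched on the Kubelet side as follows. This is a simplified model, not Kubelet code: `SketchKubelet`, `on_list_and_watch`, and `node_status` are hypothetical names, the version allow-list is an assumption (the real version string depends on the Kubernetes release, for example v1alpha in 1.8/1.9 and v1beta1 from 1.10), and the resource-name check is reduced to "has a domain prefix outside kubernetes.io". The sketch counts every reported device in capacity and only healthy devices in allocatable; treat that split as an assumption as well.

```python
from dataclasses import dataclass

# Hypothetical allow-list and directory for this sketch
SUPPORTED_VERSIONS = {"v1alpha", "v1beta1"}
DEVICE_PLUGIN_DIR = "/var/lib/kubelet/device-plugins"


@dataclass
class RegisterRequest:
    version: str        # device plugin API version the plugin speaks
    endpoint: str       # plugin socket name, relative to DEVICE_PLUGIN_DIR
    resource_name: str  # extended resource name, e.g. "nvidia.com/gpu"


def is_extended_resource_name(name):
    # simplified rule: a "domain/name" pair whose domain is not kubernetes.io
    domain, sep, short = name.partition("/")
    if not (sep and domain and short):
        return False
    return not (domain == "kubernetes.io" or domain.endswith(".kubernetes.io"))


class SketchKubelet:
    def __init__(self):
        self.endpoints = {}  # resource name -> plugin socket path
        self.health = {}     # resource name -> {device id: health}

    def register(self, req):
        if req.version not in SUPPORTED_VERSIONS:
            raise ValueError(f"unsupported device plugin API version {req.version!r}")
        if not is_extended_resource_name(req.resource_name):
            raise ValueError(f"invalid extended resource name {req.resource_name!r}")
        # remember where to dial the plugin for ListAndWatch and Allocate
        self.endpoints[req.resource_name] = f"{DEVICE_PLUGIN_DIR}/{req.endpoint}"
        self.health.setdefault(req.resource_name, {})

    def on_list_and_watch(self, resource_name, devices):
        # each ListAndWatch message replaces the full device list for that resource
        self.health[resource_name] = dict(devices)

    def node_status(self):
        # what would be reported in Node.status for the scheduler to read
        capacity = {r: len(d) for r, d in self.health.items()}
        allocatable = {
            r: sum(h == "Healthy" for h in d.values()) for r, d in self.health.items()
        }
        return {"capacity": capacity, "allocatable": allocatable}
```

For example, after a plugin registers nvidia.sock for nvidia.com/gpu and reports one healthy and one unhealthy GPU, `node_status()` returns a capacity of 2 and an allocatable count of 1 for nvidia.com/gpu.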

After registration, the Kubelet opens a long-lived ListAndWatch stream to the plugin. When the plugin detects an unhealthy device, it reports the change to the Kubelet over this stream. If the device is idle, the Kubelet removes it from the allocatable list; if the device is in use by a pod, the Kubelet kills the pod.
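The health-handling rules in the previous paragraph can be sketched as a small function. Everything here is hypothetical: `apply_health_update` and its plain set/dict arguments are invented for illustration, and the function mutates its inputs for brevity. It also keeps an in-use unhealthy device out of the allocatable set after its pod is killed; that detail is an assumption rather than something stated above.

```python
def apply_health_update(allocatable, assignments, update):
    """Hypothetical sketch of the Kubelet's reaction to a health update.

    allocatable: set of device IDs the node currently advertises
    assignments: dict of device ID -> name of the pod using it
    update:      dict of device ID -> "Healthy" or "Unhealthy"
    Returns the sorted names of pods that should be killed.
    """
    pods_to_kill = set()
    for device_id, health in update.items():
        if health == "Unhealthy":
            # idle or not, an unhealthy device is no longer offered to the scheduler
            allocatable.discard(device_id)
            pod = assignments.pop(device_id, None)
            if pod is not None:
                # the device was in use, so the pod running on it is killed
                pods_to_kill.add(pod)
        else:
            # a recovered device becomes schedulable again
            allocatable.add(device_id)
    return sorted(pods_to_kill)
```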

The plugin monitors the Kubelet's state through the Kubelet socket. If the Kubelet restarts, the plugin restarts as well and registers with the Kubelet again.
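A plugin can notice a Kubelet restart by watching kubelet.sock, because the Kubelet recreates that socket when it starts. The sketch below uses plain polling with `os.stat` to stay portable; real plugins such as NVIDIA's typically rely on file-system notifications instead. `KubeletSocketWatcher` and the `register` callback are hypothetical names for illustration.

```python
import os


class KubeletSocketWatcher:
    """Hypothetical helper: re-registers when kubelet.sock is (re)created."""

    def __init__(self, socket_path, register):
        self.socket_path = socket_path
        self.register = register          # callback that performs registration
        self._last_id = self._identity()  # initial registration is handled elsewhere

    def _identity(self):
        # inode plus modification time identifies one incarnation of the socket file
        try:
            st = os.stat(self.socket_path)
        except FileNotFoundError:
            return None
        return (st.st_ino, st.st_mtime_ns)

    def poll(self):
        """Call periodically; returns True if a re-registration was triggered."""
        current = self._identity()
        # re-register when the socket reappears or is replaced by a new file
        changed = current is not None and current != self._last_id
        self._last_id = current
        if changed:
            self.register()
        return changed
```

Polling has a known gap: a restart that completes entirely between two polls may be missed if the new file happens to reuse the same inode and timestamp. File-system notifications avoid that race, which is one reason to prefer them in a real plugin.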

A device plugin can be deployed either as a DaemonSet or as a non-containerized process on each node; the official recommendation is to deploy it as a DaemonSet.

NVIDIA Official GPU Device Plugin

NVIDIA provides a user-friendly GPU device plugin, NVIDIA/k8s-device-plugin, built on the Device Plugin interface. Unlike the legacy alpha.kubernetes.io/nvidia-gpu approach, you no longer need to mount volumes to provide the libraries that CUDA requires; a pod only needs to request the nvidia.com/gpu resource in its limits, as in the following Deployment:

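```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tf-notebook
  labels:
    app: tf-notebook
spec:
  selector:              # required by apps/v1; must match the pod template labels
    matchLabels:
      app: tf-notebook
  template:              # define the pod specification
    metadata:
      labels:
        app: tf-notebook
    spec:
      containers:
      - name: tf-notebook
        image: tensorflow/tensorflow:1.4.1-gpu-py3
        resources:
          limits:
            nvidia.com/gpu: 1   # request one GPU through the device plugin
```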
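How the scheduler uses the advertised GPU count for a pod like this can be sketched as a simple feasibility filter. This is a hypothetical simplification, not kube-scheduler code: `requested` and `feasible_nodes` are invented names, init containers are ignored, and only a few rules are modeled: extended resources are whole numbers, they cannot be overcommitted, a container's request defaults to its limit when no request is given, and if both are set they must be equal.

```python
GPU = "nvidia.com/gpu"


def requested(containers, resource=GPU):
    """Total amount of an extended resource requested by a pod's containers."""
    total = 0
    for c in containers:
        limits = c.get("limits", {})
        requests = c.get("requests", {})
        if resource in limits and resource in requests and limits[resource] != requests[resource]:
            raise ValueError("extended resource requests must equal limits")
        # when no request is given, the request defaults to the limit
        qty = requests.get(resource, limits.get(resource, 0))
        if not isinstance(qty, int) or qty < 0:
            raise ValueError("extended resources must be non-negative integers")
        total += qty
    return total


def feasible_nodes(nodes, containers, resource=GPU):
    """Names of nodes whose unused allocatable amount covers the pod (no overcommit)."""
    need = requested(containers, resource)
    return [
        name
        for name, n in nodes.items()
        if n["allocatable"].get(resource, 0) - n["used"].get(resource, 0) >= need
    ]
```

For the tf-notebook pod, which requests one GPU through its limits, a node with two allocatable GPUs that are both in use is filtered out, while a node with two idle GPUs passes.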

Now that Kubernetes has secured its position in the container ecosystem, extensibility will be its main battlefield, and heterogeneous computing is an important new front. Heterogeneous computing, however, requires powerful compute and high-performance networking, so Kubernetes must integrate with high-performance hardware such as GPUs, FPGAs, NICs, and InfiniBand in a unified way. The Kubernetes Device Plugin mechanism is simple and elegant, and it is still evolving. Alibaba Cloud Container Service will launch Kubernetes 1.9.3 GPU clusters based on the Device Plugin mechanism.

More Posts by Alibaba Clouder