Abstract: Redis®* supports the MIGRATE command to transfer a key from a source instance to a destination instance. During migration, the source serializes the key's value with the DUMP command, and the target node then executes the RESTORE command to load the data into memory. In this article, we migrate an 800 MB key of type ZSET and compare the performance of the migration on a native Redis® environment with that on an optimized environment.

The test environment consists of two Redis® instances running on the same local development machine, so the impact of the network is ignored. Under these conditions, executing the RESTORE command takes 163 seconds on native Redis® but only 27 seconds on the optimized Redis®. This analysis was performed using Alibaba Cloud Tair (Redis® OSS-Compatible).

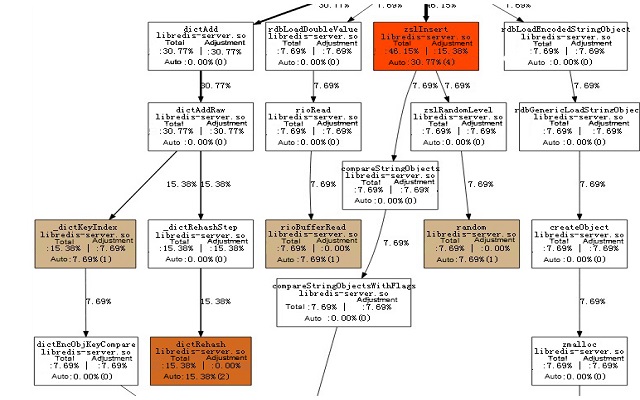

Our analysis shows the following CPU usage:

The source code shows that MIGRATE traverses the ZSET's hash table, serializes each member and its score, and then packages the result and sends it to the target node.

The target node then deserializes the data and rebuilds the ZSET structure, which includes running the zslInsert and dictAdd operations for every member. This process is time-consuming, and the rebuilding cost increases as the data size increases.
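The rebuild path described above can be modeled roughly in Python. This is a minimal sketch under stated assumptions, not Redis source: the `SkipList` class, the `MAX_LEVEL` and `P` constants, and the `steps` counter are illustrative names, and a plain Python `dict` stands in for the Redis hash table.

```python
import random

MAX_LEVEL = 32   # assumption: cap on node height
P = 0.25         # assumption: probability of promoting a node one level


class Node:
    def __init__(self, member, score, level):
        self.member = member
        self.score = score
        self.forward = [None] * level   # one forward pointer per level


class SkipList:
    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.header = Node(None, float("-inf"), MAX_LEVEL)
        self.level = 1
        self.length = 0
        self.steps = 0   # forward-pointer hops, a rough stand-in for CPU cost

    def _random_level(self):
        level = 1
        while level < MAX_LEVEL and self.rng.random() < P:
            level += 1
        return level

    def insert(self, member, score):
        # Walk down from the top level to find the predecessor on each level.
        update = [self.header] * MAX_LEVEL
        node = self.header
        for i in range(self.level - 1, -1, -1):
            while node.forward[i] is not None and (
                (node.forward[i].score, node.forward[i].member) < (score, member)
            ):
                node = node.forward[i]
                self.steps += 1
            update[i] = node
        level = self._random_level()
        self.level = max(self.level, level)
        new = Node(member, score, level)
        for i in range(level):
            new.forward[i] = update[i].forward[i]
            update[i].forward[i] = new
        self.length += 1


def naive_restore(pairs):
    """Rebuild both indexes one member at a time, as a plain RESTORE would."""
    table, zsl = {}, SkipList()
    for member, score in pairs:
        table[member] = score        # hash-table insert (dictAdd in Redis)
        zsl.insert(member, score)    # skip-list insert (zslInsert in Redis)
    return table, zsl
```

Every member pays for a search through the skip list plus a hash-table insert, which is why the total cost grows with the size of the key.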

Our analysis shows that the bottleneck is rebuilding the data model. To optimize the process, the source node can serialize and package the data model itself together with the data and send both to the target node. The target node then parses the data, pre-allocates the memory, and fills in the parsed members.

Because ZSET is a fairly complicated data structure in Redis®, we will briefly introduce the concepts used in ZSET.

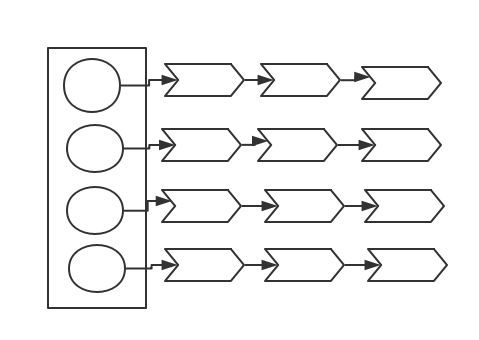

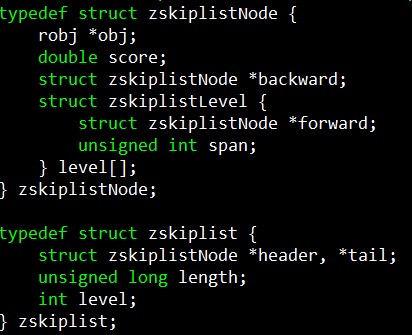

A ZSET consists of two data structures: a hash table (dict), which stores each member and its score, and a skip list (zsl), in which all members are kept in sorted order, as shown in the figure below:

In Redis®, the dict and the zsl of a ZSET share the memory that holds the members and scores, so each member and score is stored only once. Describing the same copy of data through both indexes in the serialization would therefore increase the cost.
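The idea of two indexes over one shared copy of the data can be illustrated with a small Python sketch. It is only an illustration: `Entry` and `TinyZSet` are hypothetical names, a sorted list stands in for the skip list, and `add` assumes the member is not already present.

```python
class Entry:
    """One member/score record, stored once and referenced by both indexes."""

    __slots__ = ("member", "score")

    def __init__(self, member, score):
        self.member = member
        self.score = score


class TinyZSet:
    def __init__(self):
        self.by_member = {}   # hash index: member -> Entry
        self.by_score = []    # ordered index (stand-in for the skip list)

    def add(self, member, score):
        # Assumption: member is new; updates are out of scope for this sketch.
        entry = Entry(member, score)
        self.by_member[member] = entry
        self.by_score.append(entry)
        self.by_score.sort(key=lambda e: (e.score, e.member))

    def score(self, member):
        return self.by_member[member].score        # lookup by member

    def range(self, start, stop):
        return [e.member for e in self.by_score[start:stop]]   # lookup by rank
```

Because both indexes point at the same `Entry` objects, a serializer only needs to write each member and score once, plus whatever is needed to describe the index layout.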

Looking at the CPU resource consumption, we can see that the hash table part consumes more CPU resources, which are spent on calculating the index, rehashing, and comparing keys. (A rehash happens when the pre-allocated hash table is too small: a larger table is allocated and the entries of the old table are moved to the new one. The key comparison happens while traversing a bucket's list to determine whether a key already exists.)

Based on this, the final (largest) hash table size is specified during serialization, which removes the need to rehash while the dict is generated during RESTORE.
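The effect of passing a size hint can be seen in a toy chained hash table. This sketch is a simplification under stated assumptions: `ChainedTable`, `size_hint`, and the counters are illustrative names, the table doubles when the load factor reaches 1, and the whole table is moved at once, whereas Redis rehashes incrementally.

```python
class ChainedTable:
    def __init__(self, size_hint=0):
        # Round the hint up to a power of two, starting from a small default.
        self.size = 4
        while self.size < size_hint:
            self.size *= 2
        self.buckets = [[] for _ in range(self.size)]
        self.used = 0
        self.rehashes = 0   # number of times the table had to grow
        self.moved = 0      # entries copied from an old table to a new one

    def _grow(self):
        old = self.buckets
        self.size *= 2
        self.buckets = [[] for _ in range(self.size)]
        for bucket in old:
            for key, value in bucket:
                self.buckets[hash(key) % self.size].append((key, value))
                self.moved += 1
        self.rehashes += 1

    def add(self, key, value):
        if self.used >= self.size:   # load factor reached 1: grow first
            self._grow()
        self.buckets[hash(key) % self.size].append((key, value))
        self.used += 1


def fill(table, count):
    for i in range(count):
        table.add(i, float(i))
    return table


grown = fill(ChainedTable(), 1000)                 # size unknown up front
hinted = fill(ChainedTable(size_hint=1000), 1000)  # size sent with the data
```

In this model, `grown` has to rebuild its table several times while `hinted` never does, which is the saving the serialized size is meant to provide.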

To restore the zsl structure, we need to deserialize each member and score, recalculate the member's index, and insert it into the table at that index. Because the members obtained by traversing the zsl contain no key conflicts, members with the same index can be added to the list directly, which eliminates the key comparison.
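A small sketch of why skipping the key comparison helps, assuming a chained hash table with a fixed number of buckets. The `Bucketed` class and its `add_checked` and `add_unchecked` methods are hypothetical names used only for this illustration.

```python
class Bucketed:
    def __init__(self, size):
        self.size = size
        self.buckets = [[] for _ in range(size)]
        self.compares = 0   # number of key comparisons performed

    def add_checked(self, key, value):
        """Regular insert: scan the bucket to make sure the key is new."""
        bucket = self.buckets[hash(key) % self.size]
        for existing, _ in bucket:
            self.compares += 1
            if existing == key:
                return False
        bucket.append((key, value))
        return True

    def add_unchecked(self, key, value):
        """Insert for keys already known to be unique: no scan needed."""
        self.buckets[hash(key) % self.size].append((key, value))


checked = Bucketed(8)
unchecked = Bucketed(8)
for i in range(100):   # members from a traversal are unique by construction
    checked.add_checked(i, float(i))
    unchecked.add_unchecked(i, float(i))
```

Both tables end up with the same contents, but only `checked` pays for scanning each bucket. The unchecked path is safe only when the input is guaranteed to be free of duplicates, as it is for members read from an existing ZSET.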

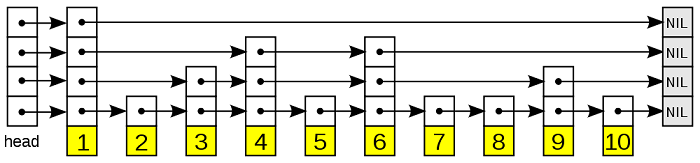

Zsl has a multi-layer structure as shown in the figure below.

The difficulty in describing this structure is that the number of zskiplistNode entries on each layer is not known in advance. We also need to describe the context of each node on each layer while keeping compatibility in mind.

Based on the above considerations, we decided to traverse from the highest level of zsl, and the serialized format is:

level | header span | level_len | [ span ( | member | score ... ) ]

| Item | Description |
| --- | --- |
| level | Level of the data |
| header span | The span value of the header node on this layer |
| level_len | Total number of nodes on this layer |
| span | The span value of the node on this layer |
| member \| score | A node that appears above Level 0 also appears on every lower layer, so redundant nodes may exist. We can add up the span values to determine whether a node is redundant. For a redundant node, member \| score is not serialized; for a non-redundant node, member \| score is included. The deserialization algorithm follows the same principle. |
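The format above can be sketched in Python. This is one possible reading of the description, not the actual Tair encoding: the token stream is a plain Python list rather than bytes, the skip list is given as a non-empty list of `(member, score, height)` tuples sorted by score, and the names `serialize` and `deserialize` are illustrative. We also assume that the last node on a layer carries the number of nodes after it as its span.

```python
def serialize(nodes):
    """nodes: non-empty list of (member, score, height), sorted by (score, member)."""
    top = max(height for _, _, height in nodes)
    out = []
    emitted = set()   # ranks whose member/score were already written
    for level in range(top - 1, -1, -1):          # highest layer first
        ranks = [r for r, (_, _, h) in enumerate(nodes, start=1) if h > level]
        out += [level, ranks[0], len(ranks)]      # level | header span | level_len
        for pos, rank in enumerate(ranks):
            nxt = ranks[pos + 1] if pos + 1 < len(ranks) else len(nodes)
            out.append(nxt - rank)                # span of this node on this layer
            if rank not in emitted:               # non-redundant: write the payload
                member, score, _ = nodes[rank - 1]
                out += [member, score]
                emitted.add(rank)
    return out


def deserialize(tokens):
    it = iter(tokens)
    found = {}   # rank -> (member, score, height)
    while True:
        level = next(it, None)
        if level is None:
            break
        rank = next(it)        # header span is the rank of the first node
        level_len = next(it)
        for _ in range(level_len):
            span = next(it)
            if rank not in found:   # first time this rank is seen: payload follows
                member = next(it)
                score = next(it)
                found[rank] = (member, score, level + 1)
            rank += span            # summing spans gives the next node's rank
    return [found[r] for r in sorted(found)]


nodes = [("a", 1.0, 1), ("b", 2.0, 3), ("c", 2.0, 1), ("d", 5.0, 2)]
tokens = serialize(nodes)
restored = deserialize(tokens)
```

Because the running sum of spans gives each node's rank, the reader can tell without any extra flag whether a node was already described on a higher layer, and it learns each node's height from the first layer on which the node appears.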

With this, the description of the ZSET data model is complete and RESTORE runs faster. However, this optimization introduces a tradeoff: it consumes more bandwidth. The extra bandwidth comes from the fields that describe the nodes. After the optimization, the serialized data is 20 MB larger than the original 800 MB.
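To put the tradeoff in numbers, the figures reported in this article can be combined as follows. The variable names are ours; the values are the ones stated above.

```python
native_seconds = 163      # RESTORE on native Redis
optimized_seconds = 27    # RESTORE on the optimized build
base_mb = 800             # serialized size before the optimization
extra_mb = 20             # additional size after the optimization

speedup = native_seconds / optimized_seconds
overhead = extra_mb / base_mb

print(f"RESTORE speedup: {speedup:.2f}x")
print(f"Payload overhead: {overhead:.1%}")
```

In this test, a payload that is about 2.5% larger buys a RESTORE that is roughly six times faster.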

Tair (Redis® OSS-Compatible) is a stable, reliable, and scalable database service with superb performance. It is structured on the Apsara Distributed File System and full SSD high-performance storage, and supports master-slave and cluster-based high-availability architectures. Tair (Redis® OSS-Compatible) offers a full range of database solutions including disaster switchover, failover, online expansion, and performance optimization. Try Tair (Redis® OSS-Compatible) today!

*Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by Alibaba Cloud is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Alibaba Cloud.
