By Zhang Lei and Deng Hongchao

Recently, the AWS ECS team released an open-source project called Amazon ECS for Open Application Model on the official GitHub website. More cloud providers have started to explore the Open Application Model (OAM) implementation. What are the advantages of OAM that have attracted so many cloud providers to embrace it?

Serverless was first mentioned in a 2012 article titled "Why the Future of Software and Apps is Serverless". However, if you have read this article, you will find that it discussed software engineering for continuous integration and code version control. This is completely different from the "scale to zero," "pay as you go," FaaS, and BaaS concepts that define serverless today.

In 2014, AWS released a product called Lambda. Its design is simple and rests on the belief that cloud computing ultimately provides services to applications. To deploy an application, all you need is a place to store the program you wrote to execute specific tasks. You do not have to care about which server or virtual machine the program runs on.

The release of Lambda raised the "serverless" paradigm to new heights. Serverless provides a brand-new system architecture for deploying and running applications on the cloud. You no longer have to worry about complex server configurations. Instead, you only need to pay attention to your own code and how to package the code into an "operational entity" that can be hosted by the cloud computing platform. After releasing a series of classic features, such as application instance scaling based on traffic and billing based on usage rather than pre-allocated resources, AWS gradually established the de facto standard in the serverless field.

In 2017, AWS released the Fargate service, extending serverless to container-based operational entities. Google Cloud Run and other platforms soon followed, kicking off a boom in next-generation, container-based serverless runtimes.

What relationship does OAM have with AWS and serverless?

First, OAM is an application description specification jointly initiated by Alibaba Cloud and Microsoft and maintained by the cloud-native community. The core approach of OAM is application-centric. It emphasizes that R&D and O&M personnel should collaborate using a set of declarative, flexible, and extensible upper-layer abstractions instead of through complex and obscure infrastructure-layer APIs.

For example, in the OAM specification, a container-based application that uses Kubernetes Horizontal Pod Autoscaler (HPA) for horizontal scaling is defined using the following two YAML files.

YAML file written by R&D personnel:

    apiVersion: core.oam.dev/v1alpha2
    kind: Component
    metadata:
      name: web-server
    spec:
      # Details of the application to deploy
      workload:
        apiVersion: core.oam.dev/v1alpha2
        kind: Server
        spec:
          containers:
            - name: frontend
              image: frontend:latest

YAML file written by O&M or PaaS platform personnel:

    apiVersion: core.oam.dev/v1alpha2
    kind: ApplicationConfiguration
    metadata:
      name: helloworld
    spec:
      components:
        - name: frontend
          # O&M capabilities required for running the application
          traits:
            - trait:
                apiVersion: autoscaling.k8s.io/v2beta2
                kind: HorizontalPodAutoscaler
                metadata:
                  name: scale-hello
                spec:
                  minReplicas: 1
                  maxReplicas: 10

We can see that the areas of concern to R&D and O&M personnel are completely separated under the OAM specifications. To define and release applications, R&D and O&M personnel only need to write a few relevant fields instead of complete Kubernetes Deployment and HPA objects. That is the benefit of upper-layer abstraction.

After the preceding YAML files are submitted to Kubernetes, the OAM plug-in automatically converts them into complete Deployment and HPA objects and runs them.
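For comparison, here is a rough sketch of the kind of raw Kubernetes objects that such a conversion might produce for the example above. It is not the output of an actual OAM plug-in: the labels, the initial replica count, and the CPU utilization target are assumptions added for illustration, and the HPA uses the built-in autoscaling/v2beta2 API version that was current when this article was written (newer clusters serve autoscaling/v2).

```yaml
# Illustrative only: roughly what R&D and O&M would otherwise write by hand.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
  labels:
    app: web-server            # assumed label, not from the OAM files
spec:
  replicas: 1                  # assumed starting point; the HPA adjusts it
  selector:
    matchLabels:
      app: web-server
  template:
    metadata:
      labels:
        app: web-server
    spec:
      containers:
        - name: frontend
          image: frontend:latest
---
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: scale-hello
spec:
  scaleTargetRef:              # ties the autoscaler to the Deployment above
    apiVersion: apps/v1
    kind: Deployment
    name: web-server
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80   # assumed target; not specified in the OAM files
```

Selectors, pod templates, and the scale target reference are exactly the infrastructure details that the two short OAM files leave out.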

OAM sets out a series of standard definitions for cloud-native application delivery, such as "application," "O&M capability," and "release boundary." Based on these specifications, developers of application management platforms can use simple YAML files to describe various applications and O&M policies and ultimately use the OAM plug-in to map these YAML files to Kubernetes resources, including CRDs.

OAM provides a de facto standard that defines upper-layer abstraction. This upper-layer abstraction prevents R&D personnel from having to deal with unnecessary and complex infrastructure details, such as HPA, ingress, containers, pods, and services. OAM is seen as a magic weapon for the development of Kubernetes application platforms.

It removes infrastructure-layer details from application descriptions to provide an extremely friendly upper-layer abstraction to developers. This meshes with the infrastructure-free approach of serverless.

More specifically, OAM is naturally serverless.

That's why OAM has attracted attention in the serverless field, including from AWS.

At the end of March 2020, the AWS ECS team released an open-source project called "Amazon ECS for Open Application Model" on the official GitHub website.

This project is the AWS team's attempt to support OAM based on serverless services. The underlying runtime of this project is the serverless container service Fargate that was mentioned before. This project provides an interesting experience for developers, so let's take a look.

Project preparation includes the following three steps:

1. Run the aws configure command through the official AWS client to generate the AWS account authentication information locally.
2. Obtain the oam-ecs executable file.
3. Run the oam-ecs env command to prepare the deployment environment. After this command is run, AWS automatically creates a VPC with the corresponding public or private subnets.

After preparation is complete, you only need to define an OAM application YAML file locally, just like in the preceding helloworld application. Then, you can run the following command to deploy a complete application with HPA on Fargate. The application can be accessed through the public network.

    oam-ecs app deploy -f helloworld-app.yaml

How easy is this?

The official documentation for the AWS ECS for OAM project gives a more complicated example.

The application to deploy consists of three YAML files, which are still classified according to R&D and O&M.

- server-component.yaml: This file contains the first component of the application and describes the application container to deploy.
- worker-component.yaml: This file contains the second component of the application and describes a cyclic job that checks whether the environment network is unobstructed.
- example-app.yaml: This file contains the complete application component topology and O&M traits of components. It describes a manual-scaler policy, which is used to scale out the worker component.

The content of the example-app.yaml file is as follows.

    apiVersion: core.oam.dev/v1alpha1
    kind: ApplicationConfiguration
    metadata:
      name: example-app
    spec:
      components:
        - componentName: worker-v1
          instanceName: example-worker
          traits:
            - name: manual-scaler
              properties:
                replicaCount: 2
        - componentName: server-v1
          instanceName: example-server
          parameterValues:
            - name: WorldValue
              value: Everyone

We can see that it defines two components, worker-v1 and server-v1, and the worker-v1 component has a manual-scaler policy.
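The two component files are not reproduced in this article. To give a rough idea of how the WorldValue parameter above could be consumed, here is a minimal sketch of what a server-v1 component might look like in the v1alpha1 format. This is not the file from the project repository: the image name, the port, the environment variable name, and the default value are all hypothetical.

```yaml
# Hypothetical sketch of a v1alpha1 component; see the project repository for the real file.
apiVersion: core.oam.dev/v1alpha1
kind: ComponentSchematic
metadata:
  name: server-v1
spec:
  workloadType: core.oam.dev/v1alpha1.Server
  parameters:
    - name: WorldValue          # overridden by parameterValues in example-app.yaml
      type: string
      default: World            # assumed default
  containers:
    - name: server
      image: example/hello-server:latest   # placeholder image name
      env:
        - name: WORLD           # assumed variable name read by the container
          fromParam: WorldValue
      ports:
        - name: http
          containerPort: 8080   # assumed port
          protocol: TCP
```

With a component shaped like this, R&D declares which values are configurable, and the ApplicationConfiguration written by O&M supplies them at deployment time.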

To deploy the preceding complex application, you only need to run a single command.

oam-ecs app deploy \

-f examples/example-app.yaml \

-f examples/worker-component.yaml \

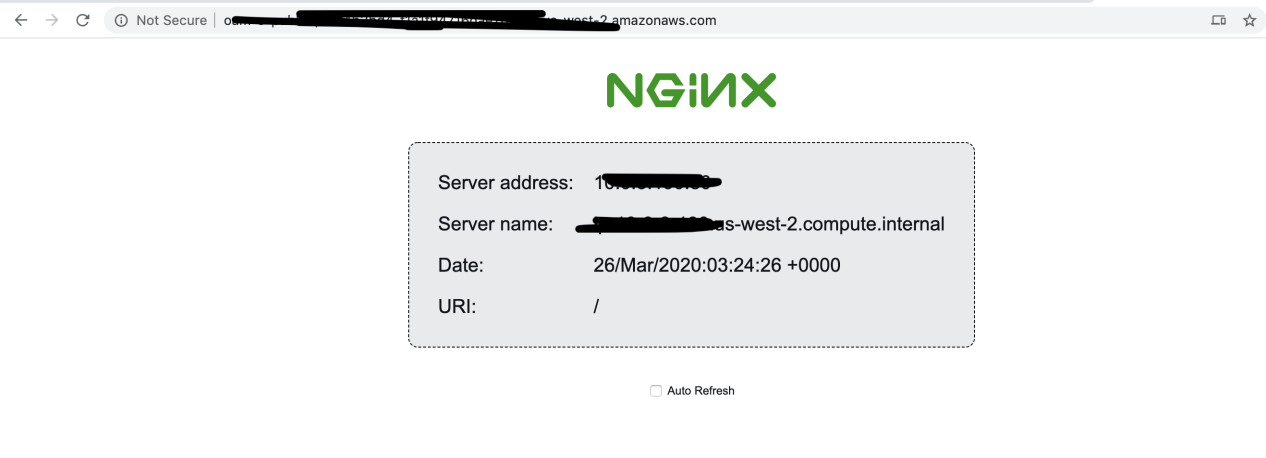

-f examples/server-component.yamlAfter the preceding command is run, you can run the oam-ecs app show command to view the application access information and DNS name. It may take a longer time to run the command in China due to network issues, so please have some patience. Open your browser, enter the access information in the address bar, and press Enter. Then, you can then access the application, as shown in the following figure.

To modify the application configuration, for example, to update the image or change the replicaCount value, you only need to modify the preceding YAML files and redeploy them. This is a fully declarative management method.
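As an illustration, scaling out the worker component would mean changing a single field in example-app.yaml and then running the same oam-ecs app deploy command again. The new value of 3 is an arbitrary example.

```yaml
# Excerpt of example-app.yaml after the edit (only the worker entry is shown)
spec:
  components:
    - componentName: worker-v1
      instanceName: example-worker
      traits:
        - name: manual-scaler
          properties:
            replicaCount: 3   # was 2; illustrative value
```

The file describes the desired state rather than the steps to reach it, so the edit and the redeployment are all that change.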

If you want to perform the same operation in the AWS console, you need to switch between at least five cloud product pages to configure data, or learn the CloudFormation syntax and write a large CloudFormation template to provision the resources required to run the application, such as the Fargate instance, LoadBalancer, network, and DNS configuration.

Through the OAM specifications, the preceding application definition and deployment processes are simplified. In addition, original process-based cloud service operations are converted to simpler and more friendly declarative YAML files. To implement the OAM specifications, only several hundred lines of code are required.

When you combine the AWS serverless Fargate service with OAM's developer-friendly application definitions, you will experience the simplicity, efficiency, and ease of use that serverless offers to developers.

OAM has had a major influence on the cloud-native application delivery ecosystem. Alibaba Cloud Enterprise Distributed Application Service (EDAS) has become the first OAM-based production-level application management platform and will soon launch a next-generation application-centric product experience. In the CNCF community, the Crossplane project, a well-known cross-cloud application management and delivery platform, has become an important adopter and maintainer of the OAM specifications.

EDAS official website: https://help.aliyun.com/product/29500.html

Crossplane: https://github.com/crossplane/crossplane

In addition to AWS Fargate, all serverless services in the cloud computing ecosystem can easily use OAM as a developer-oriented presentation layer and application definition to simplify and abstract complex infrastructure APIs and upgrade complex process-based operations to the Kubernetes-style declarative application management method with one click. Thanks to the high extensibility of OAM, you can deploy container applications on Fargate and also use OAM to describe functions, virtual machines, WebAssembly, and any other types of workloads. Then, you can easily deploy them to serverless services and seamlessly migrate them between different cloud services. These "magical" capabilities are possible due to the combination of OAM's standardized and extensible application model and a serverless platform.

OAM + serverless has become one of the hottest topics in the cloud-native ecosystem. We invite you to join the CNCF cloud-native application delivery team (SIG App Delivery) and help push the cloud computing ecosystem to adopt application-centric methodologies.

AWS ECS on OAM project: https://github.com/awslabs/amazon-ecs-for-open-application-model/

Open Application Model project: https://github.com/oam-dev/spec

CNCF SIG App Delivery: https://github.com/cncf/sig-app-delivery

The OAM specifications and model have resolved many known issues. However, its journey is just beginning. OAM is a neutral open-source project. We welcome more people to participate in the project and help define the future of cloud-native application delivery.

You can join us by:

Zhang Lei is a Senior Technical Expert at Alibaba Cloud. He is one of the maintainers of the Kubernetes project. He works on the Alibaba Kubernetes team on Kubernetes and cloud-native application management systems.

Deng Hongchao is a Container Platform Technical Expert at Alibaba Cloud. He previously worked at CoreOS and was one of the core authors of the Kubernetes Operator project.
