Soul is a virtual social networking platform for young people, built around interest graphs and gameplay. Founded in 2016, Soul is committed to creating a social metaverse for young people, with the ultimate vision of "no more lonely people." On Soul, users can freely express themselves, get to know others, explore the world, exchange interests and opinions, find spiritual resonance and a sense of identity, obtain information through communication, and build new, high-quality relationships.

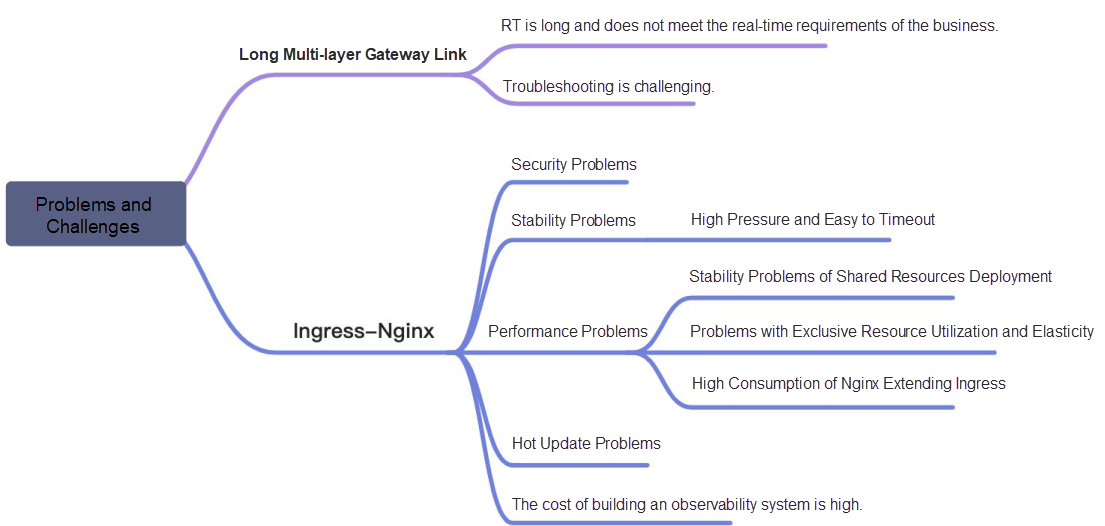

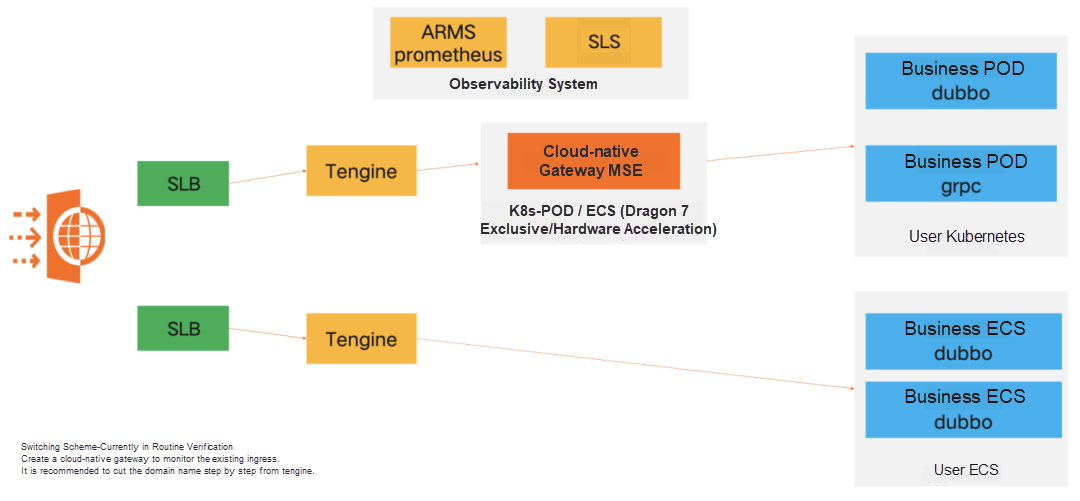

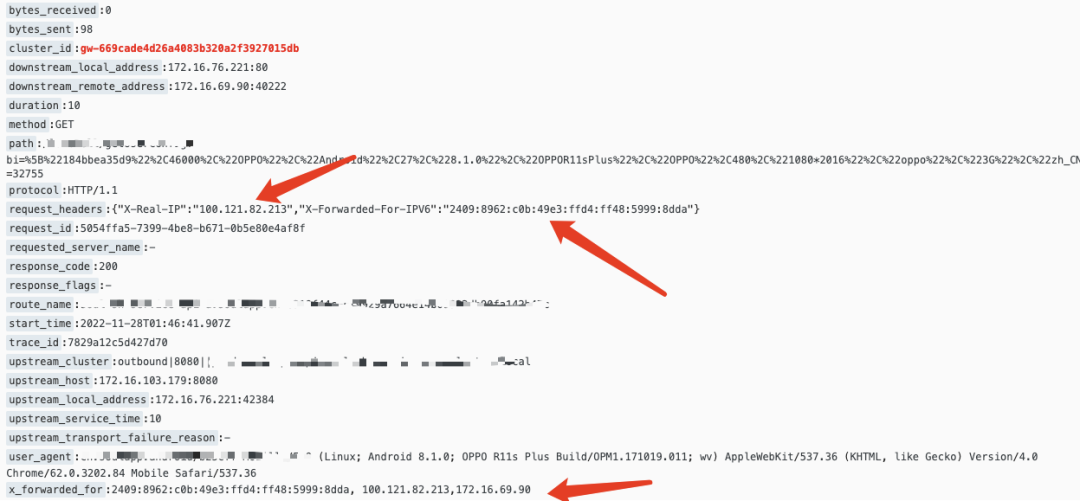

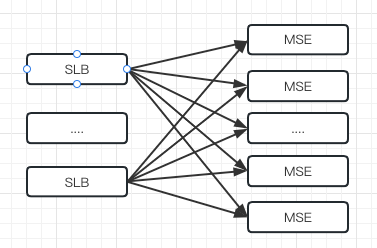

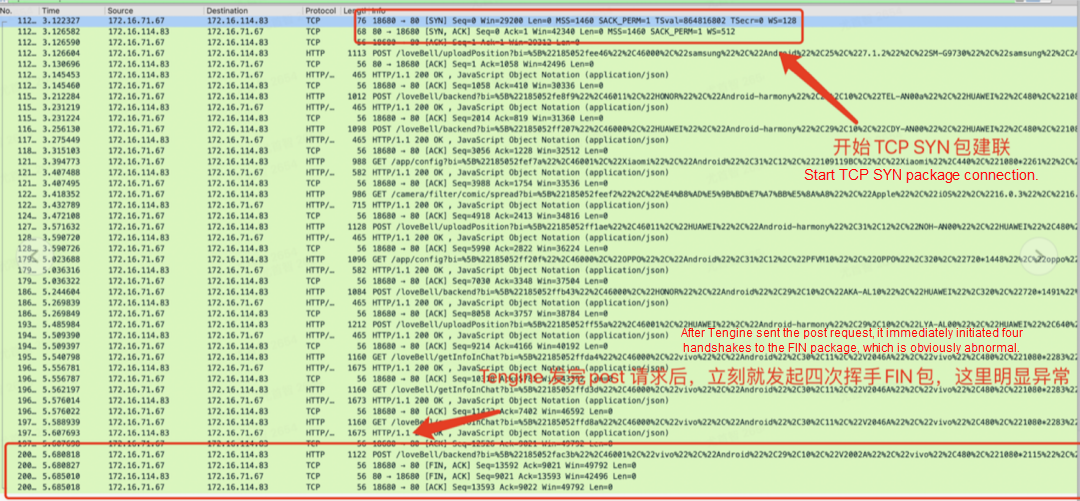

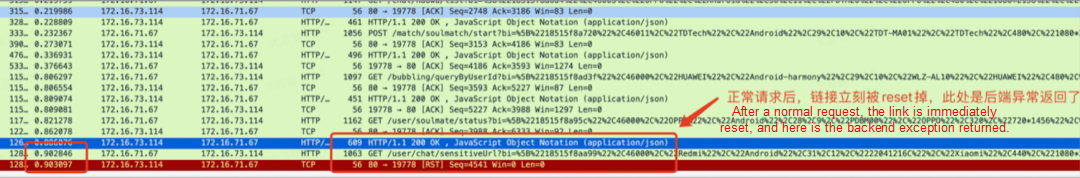

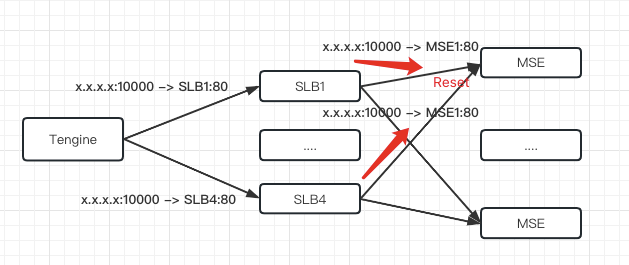

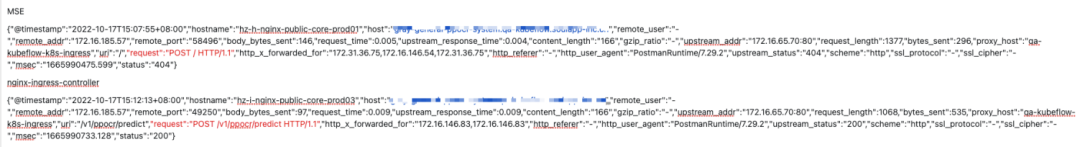

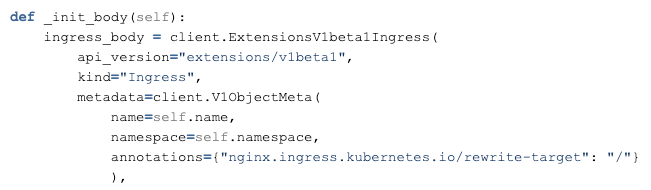

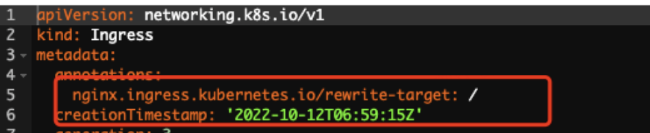

Soul began adopting container services in 2020. During the migration from ECS to containers, several gateways accumulated: the container ingress gateway (Ingress-Nginx), a microservice gateway, and SLB + Tengine at the unified access layer. The result was a multi-gateway architecture. The long request path increased both cost and response time (RT). Investigating a single abnormal request took considerable manpower, and locating problems was costly.

In 2023, the Ingress-Nginx community received a growing number of reports about stability and security problems and temporarily stopped accepting new features, which posed a major hidden risk for Soul.

The open-source Ingress-Nginx had many problems, and with our huge online traffic it was difficult to locate and fix its intermittent timeouts. We therefore had to decide whether to invest more R&D staff in solving these problems or to switch to the Envoy gateway, the ASM ingress gateway, or the MSE cloud-native gateway. We evaluated all three options comprehensively.

| Nginx vs. Envoy | Benefit and Drawback |

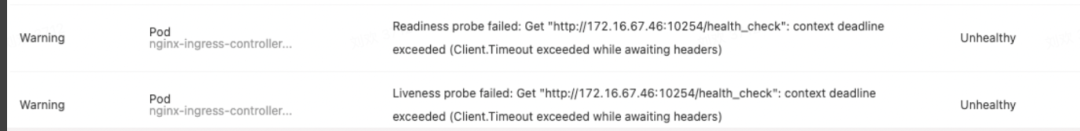

| Nginx Ingress Controller (Default) | 1. It is easy for Nginx ingress to connect to Kubernetes clusters with many files. 2. The configuration loading depends on the original Nginx config reload, and the use of lua reduces the number of reloads. 3. The plug-in capabilities and scalability of Nginx ingress are poor. 4. Unreasonable Deployment Architecture: The control plane controller and the data plane Nginx process run together in one container, and CPU preemption will be carried out. When Prometheus is turned on to collect monitoring metrics, OOM will occur under high load, the container will be killed, and the health check times out. (The control plane process is responsible for a health check and monitoring metric collection.) |

| MSE Cloud-Native Gateway/ASM Ingress Gateway | 1. Envoy is the third project that graduated from the Cloud Native Computing Foundation (CNCF), which is suitable for the data plane of the ingress controller in Kubernetes. 2. Based on C++, many advanced functions required by the proxy are implemented, such as advanced load balancing, fusing, throttling, fault injection, traffic replication, and observability. 3. XDS protocol dynamic hot update - no "reload" required 4. Control plane and data plane are separated. 5. The managed component has a high SLA. |

| ASM Envoy Listio Ingress Gateway) | 1. Install a complete set of istio components (at least ingressgateway and virtualservice are required). 2. Relatively complex 3. ASM does not support direct transfer from http to Dubbo but supports transfer from http to gRPC. 4. Managed Dubbo service https://help.aliyun.com/document_detail/253384.html |

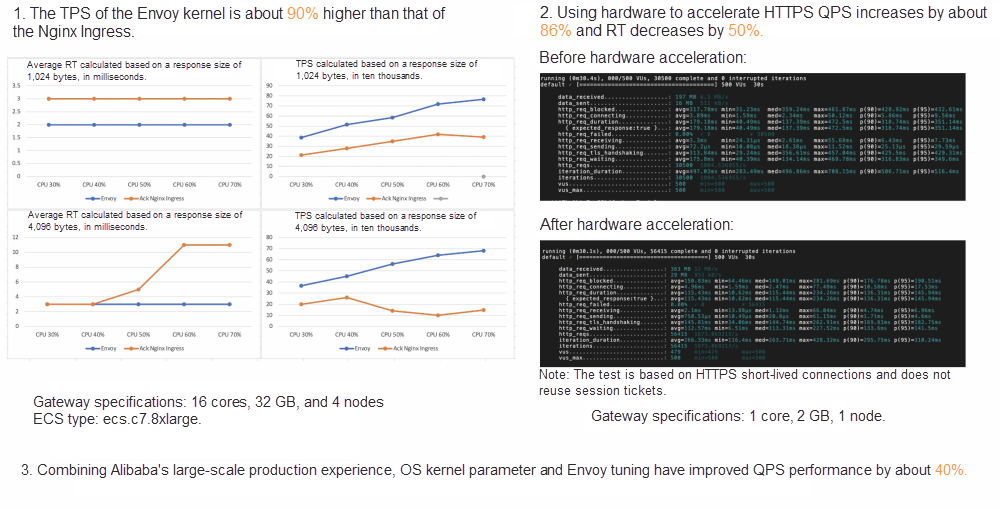

In summary, Envoy is a good choice for the data plane at this stage, since it can solve the performance and stability problems of the existing Nginx ingress controller. Because of our high performance requirements, we prioritized performance stress testing.

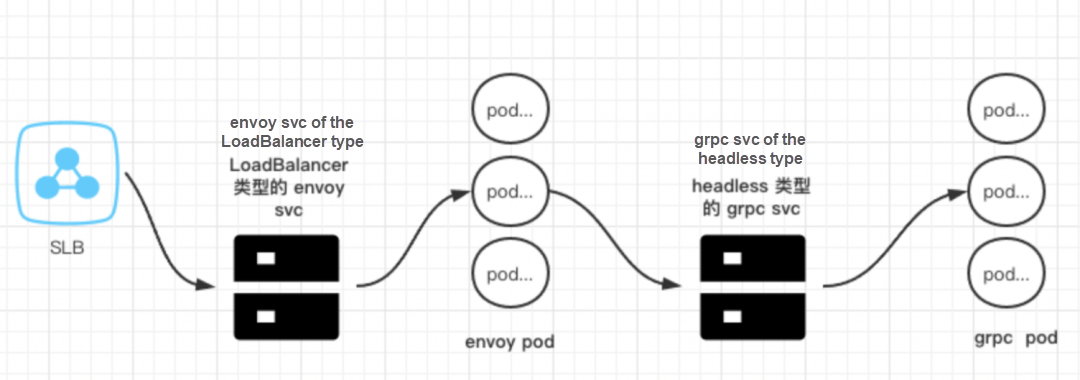

We mainly tested performance and gRPC load balancing by comparing stress testing data for online services under three schemes: SLB + Envoy + headless svc, ALB, and MSE. The data shows that the MSE cloud-native gateway has advantages in RT and success rate and can meet Soul's gRPC forwarding needs. But can MSE meet all of Soul's business requirements? Can it solve the timeout problem in our largest cluster? To answer these questions, we evaluated MSE more comprehensively.

| Dimension | ACK Scheme | ALB Scheme | MSE Scheme |
| --- | --- | --- | --- |
| Scenario Documentation | Use an existing SLB instance to expose an application (istio + envoy) | Ingress proxy gRPC based on the Cloud Network Management Platform | Introduction to MSE Ingress (istio + envoy) |
| Trace | client → SLB → Envoy → headless svc → pod | client → ALB Ingress → gRPC svc → pod | client → MSE Ingress → gRPC svc → pod |
| Conclusion | Benefits: · Lower response time in stress tests, with no errors. · Stable service and even traffic distribution during scaling. Drawbacks: · You need to build an Envoy service and a headless service. | Benefits: · Only a Deployment plus an ALB service binding is required. Drawbacks: · Unstable response time, with error responses. | Benefits: · Only a Deployment plus an MSE service binding is required. · Response time is further optimized from 6 ms to 2 ms, with no errors. Drawbacks: · MSE instances and SLB instances are billed separately. |
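A likely reason the SLB + Envoy + headless svc scheme keeps traffic even during scaling is that gRPC multiplexes many requests over a few long-lived HTTP/2 connections. Balancing per connection (as an L4 ClusterIP service does) keeps existing connections pinned to the pods that existed when they were opened, while a proxy that resolves a headless service sees every pod IP and can balance per request. The toy simulation below is an illustrative sketch under these assumptions; the pod names, connection count, and round-robin policy are hypothetical and do not reflect Soul's measured traffic.

```python
import itertools
from collections import Counter


def connection_level(pods_at_connect, new_pods, requests, connections):
    """Per-connection (L4) balancing: each long-lived HTTP/2 connection is
    pinned to the pod chosen when it was opened, so pods added later by a
    scale-out receive nothing over the existing connections."""
    rr = itertools.cycle(pods_at_connect)
    pinned = [next(rr) for _ in range(connections)]
    counts = Counter({pod: 0 for pod in pods_at_connect + new_pods})
    for i in range(requests):
        # Requests are multiplexed round-robin over the open connections.
        counts[pinned[i % connections]] += 1
    return counts


def request_level(all_pods, requests):
    """Per-request (L7) balancing: the proxy knows every pod IP (for example
    from a headless service) and round-robins each request across them."""
    rr = itertools.cycle(all_pods)
    counts = Counter({pod: 0 for pod in all_pods})
    for _ in range(requests):
        counts[next(rr)] += 1
    return counts
```

With two original pods, two pods added by a scale-out, four connections, and 1,000 requests, `connection_level` sends 500 requests each to the original pods and none to the new ones, whereas `request_level` sends 250 to each of the four pods.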

| Comparison Items | MSE Cloud-Native Gateway | Self-Built Ingress-Nginx |

| O&M Costs | Resources are fully managed and O&M-free. Ingress and microservice gateway are integrated. In container and microservice scenarios, save 50% of costs. Built-in Free Prometheus Monitoring and Log Analysis Capabilities |

In microservice scenarios, you must build a microservice gateway separately. You must pay for additional resources and products To implement metric monitoring and log analysis. Manual O&M costs are high. |

| Ease of Use | You can perform operations by connecting the backend services of ACK and MSE Nacos. | The operations not connected to microservice registries are error-prone. |

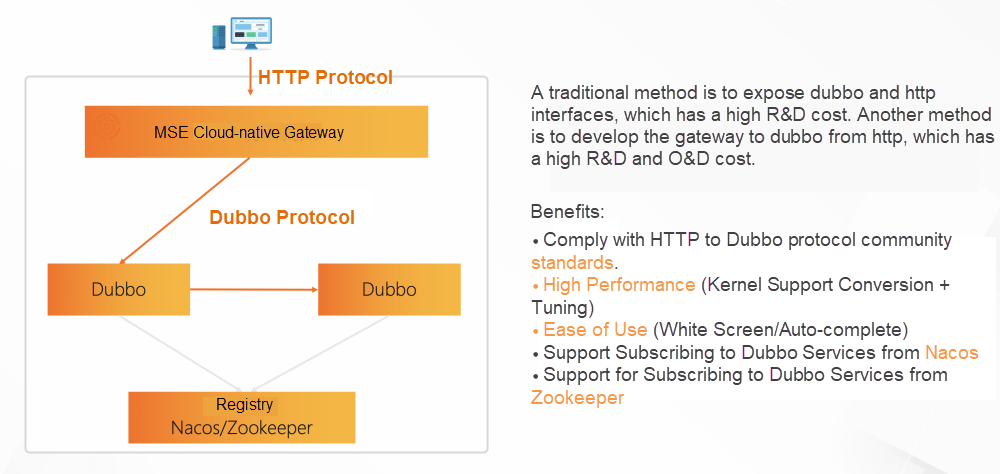

| Convert HTTP to Dubbo | One method is to expose Dubbo/http interfaces, which has high research and development cost. Another method is to develop http to Dubbo gateway with high research and development and operation costs. | Not supported. You need to develop your service or forward the service. The performance is deeply optimized. TPS is about 80% higher than Ingress-Nginx and Spring Cloud Gateway. Manual performance optimization is required. |

| Performance | The performance is deeply optimized. TPS is about 80% higher than Ingress-Nginx and Spring Cloud gateways. | Manual performance optimization is required. |

| Monitoring and Alerting | It is deeply integrated with Prometheus, Log Service, and Tracing Analysis, provides various dashboards and monitoring data at the service level, and supports custom alert rules and alert notifications using DingTalk messages, phone calls, and text messages. | Not supported. You must build your monitoring and alerting system. |

| Gateway Security | Multiple authentication methods are supported, including blacklist and whitelist, JWT, OIDC, and IDaaS. You can also use a custom authentication method. | You must perform complex security and authorization configurations by yourself |

| Routing | It supports the hot update of rules and provides precheck and canary release of routing rules. | Rule updates are detrimental to long links, and routing rules take a long time to precheck and take effect slowly. |

| Service Governance | It supports lossless connection and disconnection of backend applications and fine-grained governance features (such as service-level timeout retries). | Not supported. |
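The service-level timeouts and retries listed under service governance can be pictured as a per-service policy: each backend service gets its own per-attempt timeout and retry budget instead of one global setting. The sketch below is hypothetical. `RetryPolicy`, the service names, and the values are illustrative assumptions, not MSE's configuration API, and for simplicity it retries only on timeouts.

```python
import concurrent.futures as cf
from dataclasses import dataclass


@dataclass(frozen=True)
class RetryPolicy:
    per_try_timeout_s: float  # hypothetical: timeout for a single attempt
    max_retries: int          # hypothetical: extra attempts after the first


# Hypothetical per-service policies; a gateway would look these up by route.
POLICIES = {
    "order-service": RetryPolicy(per_try_timeout_s=0.5, max_retries=2),
    "feed-service": RetryPolicy(per_try_timeout_s=0.2, max_retries=0),
}
DEFAULT_POLICY = RetryPolicy(per_try_timeout_s=1.0, max_retries=1)


def call_with_policy(service, fn, executor):
    """Call fn(attempt) under the service's policy, retrying only on timeout."""
    policy = POLICIES.get(service, DEFAULT_POLICY)
    last_exc = None
    for attempt in range(policy.max_retries + 1):
        future = executor.submit(fn, attempt)
        try:
            return future.result(timeout=policy.per_try_timeout_s)
        except cf.TimeoutError as exc:
            # This attempt exceeded its timeout; try again if budget remains.
            last_exc = exc
    raise TimeoutError(
        f"{service}: all {policy.max_retries + 1} attempts timed out"
    ) from last_exc
```

Keeping the policy per service means a slow but tolerant backend can get a longer timeout and more retries, while a latency-sensitive one fails fast without retrying.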

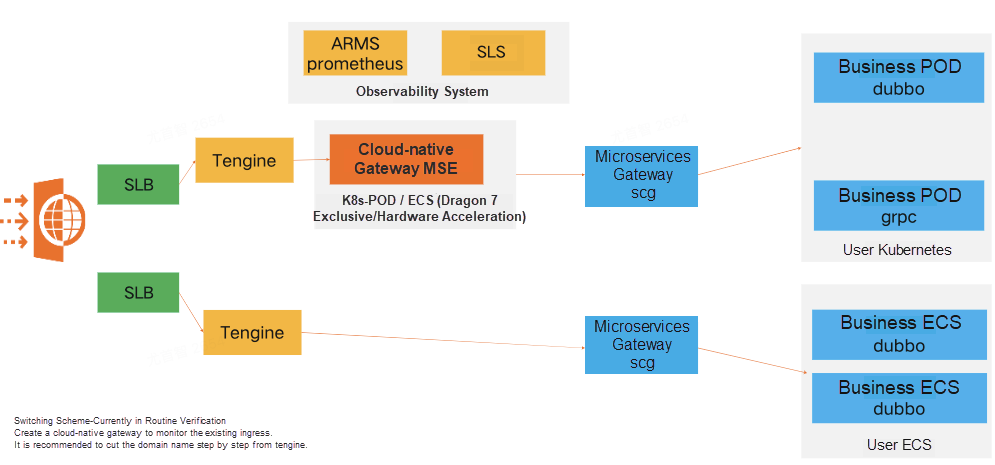

Soul's gateway path is relatively long, and the most urgent problems were timeouts and the lack of warm-up during service releases. Therefore, in the first phase, we replaced Ingress-Nginx and merged the container ingress gateway with the microservice gateway.

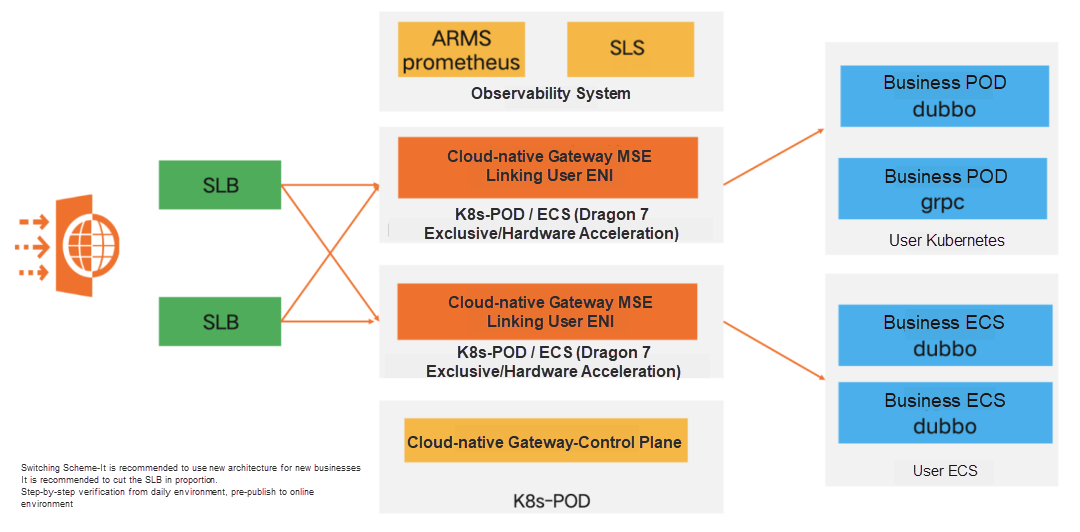

Next, shorten the gateway path as much as possible: remove the microservice gateway and hand HTTP-to-RPC forwarding over to MSE, then remove Tengine and hand forwarding to ECS over to MSE. The final path is SLB → MSE → Pod/ECS.

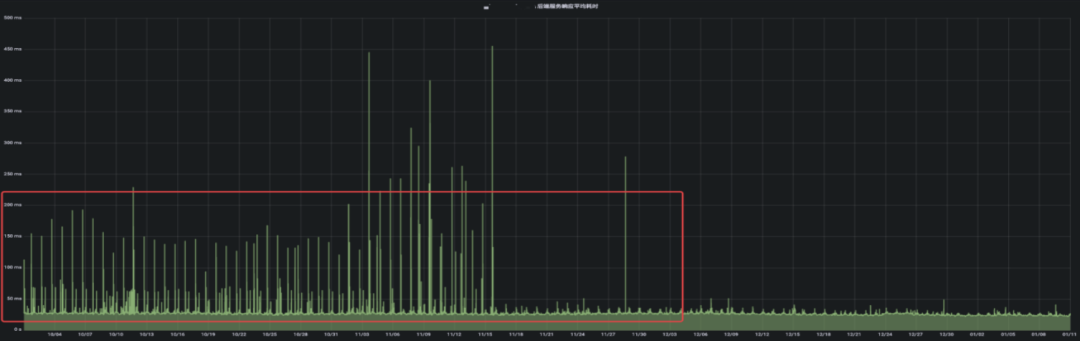

After switching to MSE, request processing and response time became stable, with the peak dropping from 500 ms to 50 ms.

Comparing the error codes of Ingress-Nginx and MSE: 502 errors during service releases dropped to 0, and 499 errors decreased by 10% on average.

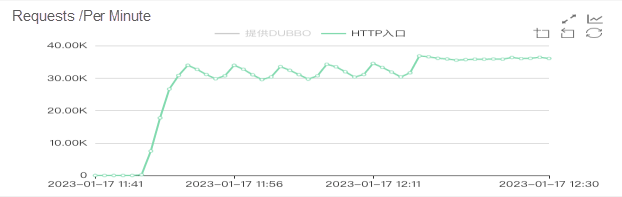

The rollout solved most of the timeout problems, but timeouts caused by the slow startup of Java applications during releases remained. We therefore enabled the service warm-up feature: a newly started service receives traffic gradually, which prevents a sudden surge of traffic from causing timeouts in the newly started Java process.

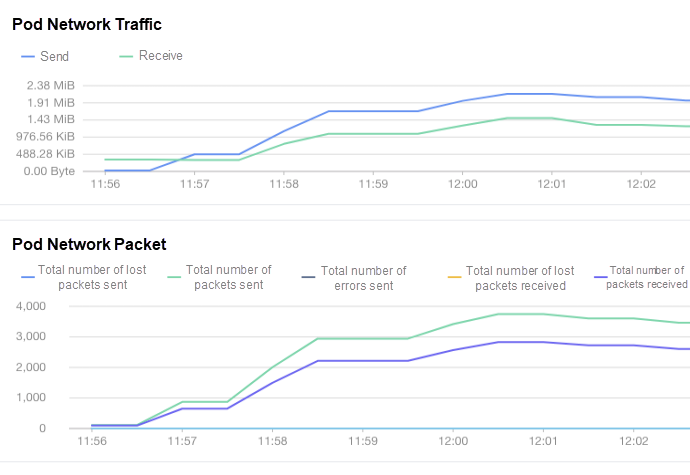

Effect of enabling warm-up: as shown in the figure, a Pod does not receive its full share of traffic immediately after starting; instead, it warms up gradually over five minutes. This is visible in the number of HTTP ingress requests to the service, the Pod's inbound and outbound network traffic, and the Pod's CPU utilization. With Nginx, monitoring had to be built layer by layer from the bottom up. The cloud-native gateway provides a comprehensive observability view and rich gateway Prometheus metrics, which make it easier to observe and troubleshoot complex problems.
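The warm-up described above can be sketched as a load-balancing weight that grows with a Pod's uptime: a new Pod starts with a small share of traffic and reaches full weight at the end of the warm-up window (five minutes in the figure). The linear ramp, the 1% floor, and the weighted random pick below are assumptions for illustration; the exact curve MSE uses is not stated here.

```python
import random

WARMUP_S = 300.0      # five-minute warm-up window, matching the figure
MIN_FRACTION = 0.01   # assumption: a cold Pod still gets a small non-zero share


def warmup_weight(uptime_s, base_weight=100, warmup_s=WARMUP_S):
    """Linear ramp: weight grows from a small floor to base_weight over warmup_s."""
    if uptime_s >= warmup_s:
        return float(base_weight)
    fraction = max(uptime_s / warmup_s, MIN_FRACTION)
    return base_weight * fraction


def pick_pod(pods, rng=random):
    """Weighted random choice; pods is a list of (name, uptime_s) pairs."""
    names = [name for name, _ in pods]
    weights = [warmup_weight(uptime) for _, uptime in pods]
    return rng.choices(names, weights=weights, k=1)[0]
```

For example, with one long-running Pod (weight 100) and one Pod that started 30 seconds ago (weight 10), the new Pod receives roughly 10/110, or about 9%, of requests until its weight catches up.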

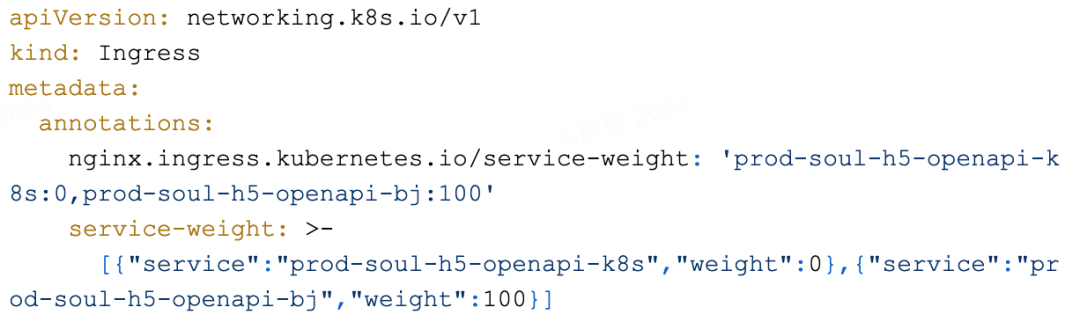

Use canary-* annotations to configure blue-green and canary releases. The canary-* annotations are the canary release method implemented by the community.
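The community canary-* annotations (such as `nginx.ingress.kubernetes.io/canary`, `canary-by-header`, `canary-by-header-value`, `canary-by-cookie`, and `canary-weight`) are evaluated in order of precedence: header, then cookie, then weight. The sketch below models that decision for a single request. It is a simplified illustration, not the controller's code: it ignores options such as `canary-by-header-pattern`, and it assumes header names are already normalized to match the annotation value.

```python
import random
from typing import Mapping

PREFIX = "nginx.ingress.kubernetes.io/"


def route_to_canary(headers: Mapping[str, str], cookies: Mapping[str, str],
                    annotations: Mapping[str, str], rng=random) -> bool:
    """Return True if this request should go to the canary backend."""
    if annotations.get(PREFIX + "canary") != "true":
        return False
    # 1. Header rule: highest precedence.
    header = annotations.get(PREFIX + "canary-by-header")
    if header is not None and header in headers:
        value = headers[header]
        expected = annotations.get(PREFIX + "canary-by-header-value")
        if expected is not None:
            if value == expected:
                return True
        elif value == "always":
            return True
        elif value == "never":
            return False
        # Any other value: fall through to the cookie and weight rules.
    # 2. Cookie rule: only "always" and "never" are meaningful.
    cookie = annotations.get(PREFIX + "canary-by-cookie")
    if cookie is not None and cookie in cookies:
        if cookies[cookie] == "always":
            return True
        if cookies[cookie] == "never":
            return False
    # 3. Weight rule: percentage of the remaining traffic (0 to 100).
    weight = int(annotations.get(PREFIX + "canary-weight", "0"))
    return rng.random() * 100 < weight
```

Because the header rule wins, testers can pin themselves to the canary (or away from it) with a header while ordinary users are split by weight.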

Use service-* annotations to configure blue-green and canary releases. The service-* annotations are an early implementation in the ACK Nginx Ingress Controller.
