In the past few years, the cloud-native field, represented by container technology, has attracted great attention and developed rapidly. Containerization is an important means for enterprises to reduce costs and increase efficiency. Dewu has now completed containerization across all of its business domains. In the process, service deployment and O&M switched from the previous ECS mode to the container mode, and the company has benefited from improved resource utilization and R&D efficiency.

As a trendy online shopping community, Dewu relies on an AI- and big-data-based search engine and a personalized recommendation system to support business development, so algorithm applications account for a large proportion of its business applications. The R&D process of algorithm application services differs from that of common services. Therefore, after fully investigating the needs of algorithm developers, we built KubeAI, a cloud-native AI platform for Dewu, for algorithm R&D scenarios. After several feature iterations and an expansion of supported scenarios, KubeAI has completed the containerization of the business domains that involve AI capabilities (such as CV, search and recommendation, risk control algorithms, and data analysis) and has achieved good results in improving resource utilization and R&D efficiency. This article explains the implementation and practice of KubeAI.

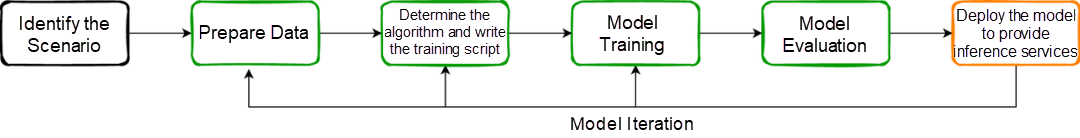

AI business development centers on model iteration. The development process of a model can be summarized in the following steps:

Identify the Scenario: This step clarifies the problem to be solved, the scenario the feature serves, and the inputs and outputs of the feature or service. For example: which brand of shoes is to be identified or inspected, what the product features of the brand are, and what the dimensions and types of the sample features are. Different scenarios have different requirements for sample data and processing algorithms.

Prepare Data: Various methods (online/offline/mock) can be used to obtain sample data based on the analysis of the scenario requirements. The obtained data must be cleaned and labeled. This process is the foundation of AI business development because all subsequent processes are carried out based on the data.

Determine the Algorithm and Write the Training Script: Based on their understanding of the business objectives, developers select an appropriate algorithm and write a model training script, drawing on past experience or on research and experiment results for the scenario.

Train the Model: An algorithm model can be understood as a complex mathematical formula with many parameters (like w and b in f(x)=wx+b). Training uses a large amount of sample data to find the parameter values that give the model a high recognition rate. Model training is an important part of AI business development because achieving the business goals depends on the accuracy of the model. This step therefore takes more time, effort, and resources than the others and requires repeated experiments to reach the best model and prediction accuracy. Training is not a one-time process: it is repeated periodically as the business scenario and data are updated. Mainstream AI engines (such as TensorFlow, PyTorch, and MXNet) are available for developing and training algorithm models. Their APIs make it convenient for algorithm developers to run distributed training on complex models or to optimize for specific hardware to improve training efficiency. The result of model training is a set of model files, whose content can be understood as the parameters of the model.
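As a small illustration of the idea that training searches for the parameters w and b, the sketch below fits f(x) = wx + b to synthetic samples with plain batch gradient descent. The function name `train_linear`, the learning rate, the epoch count, and the sample data are assumptions made for this example; real training jobs would rely on an engine such as TensorFlow or PyTorch.

```python
def train_linear(samples, lr=0.05, epochs=2000):
    """Fit f(x) = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic, noise-free samples generated from y = 3x + 1 (illustrative data).
samples = [(float(x), 3.0 * x + 1.0) for x in range(5)]
w, b = train_linear(samples)
print(f"w={w:.4f}, b={b:.4f}")
```

With these noise-free samples, the fitted values approach w = 3 and b = 1. The pair (w, b) plays the role that the model file plays for a real model.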

Evaluate the Model: Some evaluation metrics are usually required to guide developers in evaluating the generalization capability of the model to prevent model underfitting due to excessive bias or overfitting due to excessive variance. Commonly used evaluation metrics are precision, recall rate, ROC curve/AUC, and PR curve.
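To make these metrics concrete, the following sketch computes precision, recall, and AUC for a binary classifier in plain Python. The labels, scores, and the 0.5 decision threshold are made-up values for illustration, and the AUC is computed by the pairwise-ranking definition rather than by any particular library.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    # Ties between a positive and a negative score count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Illustrative labels and model scores.
y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

precision, recall = precision_recall(y_true, y_pred)
model_auc = auc(y_true, scores)
print(precision, recall, model_auc)
```

Precision and recall depend on the chosen threshold, while AUC summarizes ranking quality across all thresholds, which is why the two are usually reported together.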

Deploy the Model: After repeated training and evaluation, a satisfactory model is obtained and can be used to process online or production data. The model must be deployed as a service or a task that receives input data and returns inference results. We call this a model service: an online service script that loads the model and, once ready, performs inference on preprocessed data.

After a model service is launched, it must be iterated as data features change, algorithms and online inference service scripts are upgraded, and the business raises new requirements for inference metrics. Note: An iteration may require retraining and reevaluating the model, or it may only upgrade the inference service script.

Since 2022, we have been pushing forward the containerization of business services in various domains of Dewu. To keep the change in deployment method from disrupting users' operation habits, we kept the deployment process of the release platform, which shields users from the differences between container deployment and ECS deployment.

In the CI process, we customize compilation and build templates for each development language. The container platform layer handles every step from source code compilation to container image creation, so developers who lack container knowledge no longer need to work out how to package their engineering code into a container image. In the CD process, we manage configurations hierarchically at the application type level, the environment level, and the environment group level. During deployment, we merge these configuration layers into the values.yaml of the Helm Chart and submit the orchestration files to the container cluster. Users only need to set the environment variables they require and submit the deployment to obtain application cluster instances (container instances, similar to ECS service instances).
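The layered configuration merge described above can be sketched as a recursive dictionary merge. This is a minimal illustration, not the release platform's actual code: the function names, the configuration keys, and the precedence order (application type first, then environment, then environment group, with later layers overriding earlier ones) are all assumptions.

```python
import copy


def deep_merge(base, override):
    """Return a new dict in which values from `override` win over `base`, recursively."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def build_values(*layers):
    """Merge configuration layers in order; later layers take precedence."""
    values = {}
    for layer in layers:
        values = deep_merge(values, layer)
    return values


# Hypothetical configuration layers.
app_type_cfg = {
    "replicaCount": 1,
    "resources": {"cpu": "1", "memory": "2Gi"},
    "env": {"LOG_LEVEL": "info"},
}
environment_cfg = {"replicaCount": 2, "env": {"LOG_LEVEL": "debug"}}
env_group_cfg = {"resources": {"memory": "4Gi"}}

values = build_values(app_type_cfg, environment_cfg, env_group_cfg)
print(values)
```

The merged dictionary is what would be serialized into values.yaml. Merging nested keys instead of replacing whole sections lets a narrower layer change a single field, such as memory, without repeating the rest of the block.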

For the O&M of application clusters, the container platform allows logging on to the container instance through WebShell (like logging on to the ECS instance). This feature facilitates troubleshooting issues related to application processes. The platform also provides O&M features (such as uploading and downloading files, restarting and reconstructing instances, and monitoring resources).

AI businesses (such as CV, search and recommendation, and risk control algorithm services) are large businesses in their own right and were containerized together with common business services. We have gradually completed the service migration of core scenarios represented by communities, transaction waterfalls, and quick-access areas. After containerization, resource utilization in the testing and production environments has improved significantly, and O&M efficiency has doubled.

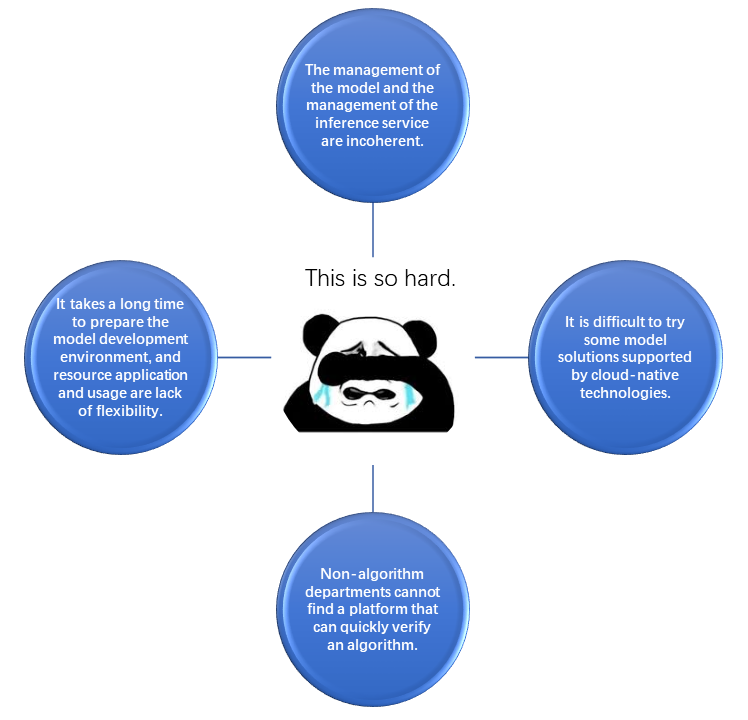

Dewu's containerization has proceeded alongside the rapid development of its technical system. During this period, the R&D of AI services, which was initially immature, placed more and more requirements on containerization. This led us, who had focused only on containerizing online inference services, to see the pain points and difficulties that algorithm developers face in model development.

Pain Point 1: Model management and inference service management are disconnected. Most CV models are trained on desktops and manually uploaded to OSS. Then, the address of the model file on OSS is configured in the online inference service. Most search and recommendation models are trained on PAI, but they are also stored in OSS manually, and their release is similar to that of CV models. As a result, model management is disconnected between training and release. It is impossible to track which services a model is deployed on or to see at a glance which models are deployed on a service, so managing model versions is inconvenient.

Pain Point 2: Preparing the model development environment takes a long time, and resource application and usage lack flexibility. Before containerization, resources were generally provided as ECS instances. After applying for resources, developers had to do various initialization work (such as installing software, installing dependencies, and transferring data), and most of the software libraries used in algorithm research are large and complex to install. If the resources of one ECS instance were insufficient, the same process had to be repeated to apply for more, which was inefficient. In addition, resource applications were constrained by quotas (budgets), and there was no mechanism for self-service management or for applying for and releasing resources flexibly on demand.

Pain Point 3: It is difficult to try model solutions supported by cloud-native technologies. As cloud-native technologies are adopted in various fields, solutions such as Kubeflow and Argo Workflow provide good support for AI scenarios. For example, tfjob-operator manages TensorFlow-based distributed training jobs in the form of a CRD. Users only need to set the parameters of the corresponding components (such as Chief, PS, and Worker) to submit training tasks to Kubernetes clusters. Before containerization, algorithm developers who wanted to try this solution had to master container-related knowledge (such as Docker and Kubernetes) and could not use this capability as ordinary users.
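To show what setting the parameters of Chief, PS, and Worker looks like in practice, the sketch below assembles a TFJob manifest as a Python dictionary and prints it as JSON. The field names follow the Kubeflow training operator's v1 TFJob schema as commonly documented and should be checked against the operator version installed in the cluster. The job name, image, and command are hypothetical placeholders.

```python
import json


def replica_spec(replicas, image, command):
    """Build one tfReplicaSpecs entry; the training container is named 'tensorflow'."""
    return {
        "replicas": replicas,
        "restartPolicy": "OnFailure",
        "template": {
            "spec": {
                "containers": [
                    {"name": "tensorflow", "image": image, "command": command}
                ]
            }
        },
    }


def build_tfjob(name, image, command, ps_replicas=1, worker_replicas=2):
    """Assemble a TFJob manifest with one Chief plus configurable PS and Worker counts."""
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "TFJob",
        "metadata": {"name": name},
        "spec": {
            "tfReplicaSpecs": {
                "Chief": replica_spec(1, image, command),
                "PS": replica_spec(ps_replicas, image, command),
                "Worker": replica_spec(worker_replicas, image, command),
            }
        },
    }


# Hypothetical job name, image, and entry point.
tfjob = build_tfjob(
    name="demo-train",
    image="registry.example.com/demo/train:latest",
    command=["python", "train.py"],
)
manifest = json.dumps(tfjob, indent=2)
print(manifest)
```

Even this small manifest assumes familiarity with Pod templates and container images, which is the kind of knowledge a platform can hide behind a form or an API.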

Pain Point 4: When a non-algorithm department wants to quickly verify an algorithm, it cannot find a platform that can support the verification well. The capabilities of AI are used in various business domains, especially some mature algorithms that business teams can easily use to do some baseline metric prediction or classification prediction work to help achieve better business results. In this case, a platform that can provide AI-related capabilities is needed to meet the need for heterogeneous resources (such as CPU, GPU, storage, and network) in these scenarios and meet the need for algorithm model management to provide users with ready-to-use features.

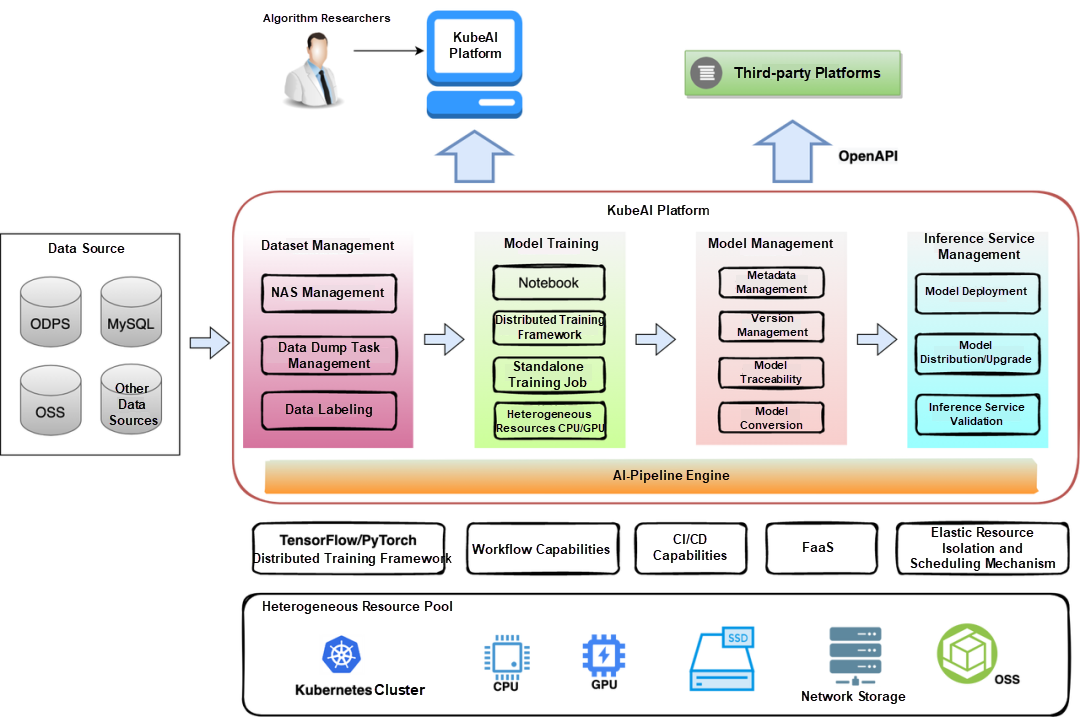

Based on the pain points above and the requirements that algorithm developers raised for the container platform during containerization (such as unified model management, log collection, resource pools, and data persistence), we discussed and solved the problems one by one while keeping the long-term functional planning of the platform in mind. In this way, we gradually built KubeAI, a platform for AI businesses, on top of the container platform.

After thoroughly studying the basic architecture and product forms of AI platforms in the industry, the container technology team focused on AI business scenarios and their requirements and designed and gradually implemented KubeAI, a cloud-native AI platform, during Dewu's containerization. KubeAI focuses on solving the pain points of algorithm developers and provides the function modules they need. The platform covers the whole process of model development, release, and O&M and helps algorithm developers access and use AI infrastructure resources quickly and cost-effectively to design, develop, and test algorithm models efficiently.

KubeAI provides the following function modules around the entire lifecycle of a model:

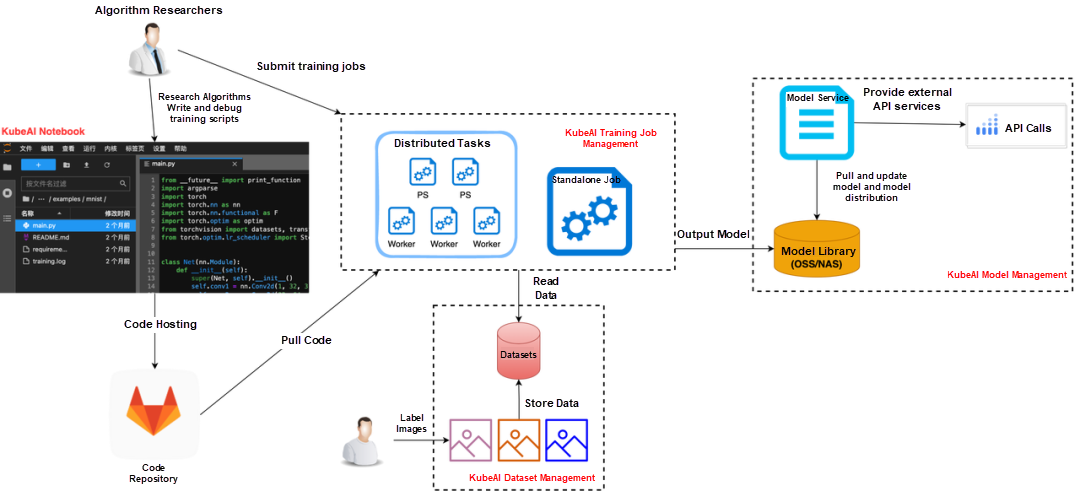

KubeAI supports individual users and platform users. Individual developers use the KubeAI Notebook to develop model scripts. Small models can be trained in Notebook, while complex models are trained through training jobs. After a model is produced, it is managed on KubeAI, which includes releasing it as an inference service and iterating on it. Third-party business platforms use OpenAPI to access KubeAI's capabilities for upper-layer business innovation.

Let's focus on the functions of the following four modules: dataset management, model training, model service management, and AI-Pipeline engine.

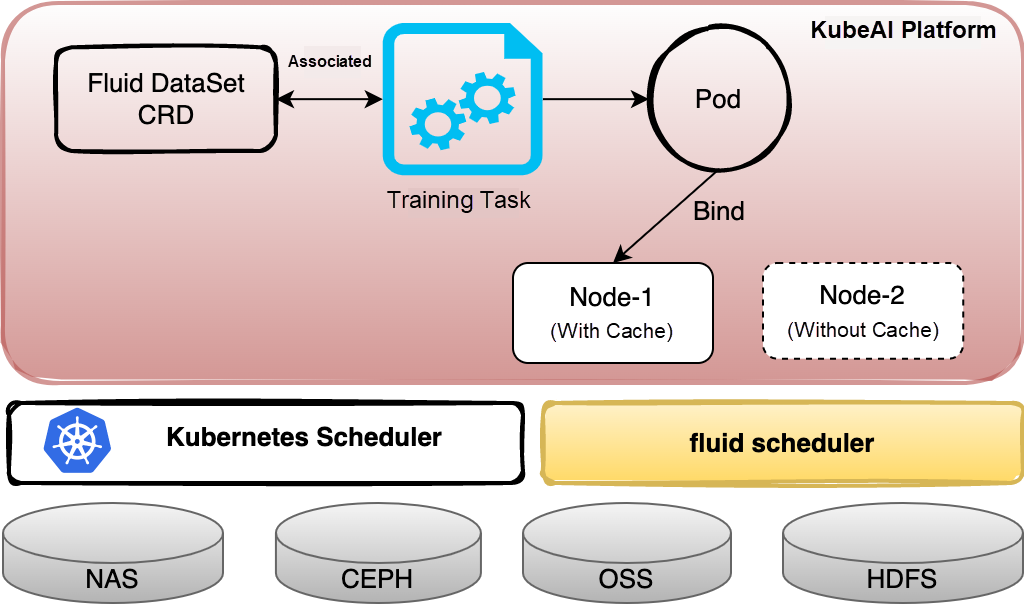

Our analysis shows that the data used by algorithm developers is stored in NAS, read from ODPS, or pulled from OSS. For unified data management, KubeAI provides users with the concept of datasets, which is based on the Kubernetes Persistent Volume Claim (PVC) and is compatible with different data source formats. In addition, we use Fluid to configure a cache service for datasets, which reduces the data access overhead caused by an architecture that separates computing from storage. This way, data can be cached locally for the next round of iterative computing, and tasks can be scheduled to the computing nodes where the datasets are already cached.
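The benefit of scheduling tasks to nodes that already hold a cached dataset can be illustrated with a toy node-selection function. This is not Fluid's scheduler or API. The node records, the field names, and the tie-breaking rule are assumptions chosen only to show the preference for cache locality.

```python
def pick_node(nodes, dataset, cpu_needed):
    """Pick a node with enough free CPU, preferring nodes that cache `dataset`."""
    candidates = [n for n in nodes if n["free_cpu"] >= cpu_needed]
    if not candidates:
        return None
    # Sort key: cache hit first (True > False), then the most free CPU.
    best = max(candidates, key=lambda n: (dataset in n["cached"], n["free_cpu"]))
    return best["name"]


# Hypothetical cluster state.
nodes = [
    {"name": "node-a", "free_cpu": 16, "cached": set()},
    {"name": "node-b", "free_cpu": 8, "cached": {"train-set"}},
    {"name": "node-c", "free_cpu": 2, "cached": {"train-set"}},
]

print(pick_node(nodes, "train-set", cpu_needed=4))   # cache-local node with capacity
print(pick_node(nodes, "other-set", cpu_needed=4))   # no cache hit, most free CPU wins
```

In this example, node-b is chosen for `train-set` even though node-a has more free CPU, because reading from a local cache avoids a remote fetch from separated storage.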

We have done the following for model training:

(1) Notebook, based on JupyterLab, lets users develop algorithm models through a shell or Web IDE in the same way as in local development.

(2) Model training is performed in the form of jobs, which can apply for and release resources more flexibly. This improves resource utilization and significantly reduces the cost of model training. Thanks to the extensibility of Kubernetes, various TrainingJob CRDs in the industry can be connected easily, and training frameworks such as TensorFlow, PyTorch, and XGBoost are supported. Batch task scheduling and task priority queues are also supported.

(3) In collaboration with the algorithm team, we have optimized the training framework and TensorFlow, improving the efficiency of batch data reading and the communication rate between PSs and Workers. We also provide solutions for unbalanced loads on PSs and for slow Workers.

Compared with common services, the most distinctive feature of a model service is that the service needs to load one or more model files. In early containerization, most CV model services directly packaged model files and inference scripts into container images, resulting in large container images and cumbersome model updating.

KubeAI solves the preceding problems. Based on the standardized management of models, KubeAI associates model services with models through configurations. When a model is released, the platform pulls the corresponding model files based on the model configurations for inference scripts to load. This method relieves the pressure on algorithm model developers to manage images and versions of inference services, reduces storage redundancy, improves the efficiency of model update and rollback, increases the model reuse rate, and helps the algorithm team manage models and associated online inference services in a more convenient and efficient manner.
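The association between model services and models through configuration can be pictured as a small registry that maps each service to the model versions it should load. The sketch below is a hypothetical in-memory illustration, not KubeAI's data model. The class names, the service name, and the `oss://` paths are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: str
    uri: str  # location of the model file, for example an OSS path


class ModelRegistry:
    """Tracks registered model versions and which version each service is bound to."""

    def __init__(self):
        self._versions = {}
        self._bindings = {}

    def register(self, model):
        self._versions[(model.name, model.version)] = model

    def bind(self, service, name, version):
        """Point a service at a model version; rebinding acts as update or rollback."""
        if (name, version) not in self._versions:
            raise KeyError(f"unknown model {name}:{version}")
        self._bindings.setdefault(service, {})[name] = version

    def files_for(self, service):
        """Model files the platform would pull before the inference script starts."""
        bound = self._bindings.get(service, {})
        return sorted(self._versions[(n, v)].uri for n, v in bound.items())

    def services_using(self, name, version):
        return sorted(s for s, b in self._bindings.items() if b.get(name) == version)


registry = ModelRegistry()
registry.register(ModelVersion("shoe-detector", "v1", "oss://example-bucket/shoe-detector/v1/model.pt"))
registry.register(ModelVersion("shoe-detector", "v2", "oss://example-bucket/shoe-detector/v2/model.pt"))

registry.bind("cv-inference", "shoe-detector", "v1")
registry.bind("cv-inference", "shoe-detector", "v2")   # model update
updated = registry.files_for("cv-inference")
registry.bind("cv-inference", "shoe-detector", "v1")   # rollback
rolled_back = registry.files_for("cv-inference")
print(updated, rolled_back)
```

Because the image contains only the inference script, an update or rollback changes one binding instead of rebuilding an image, and the registry can answer which services run a given model version.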

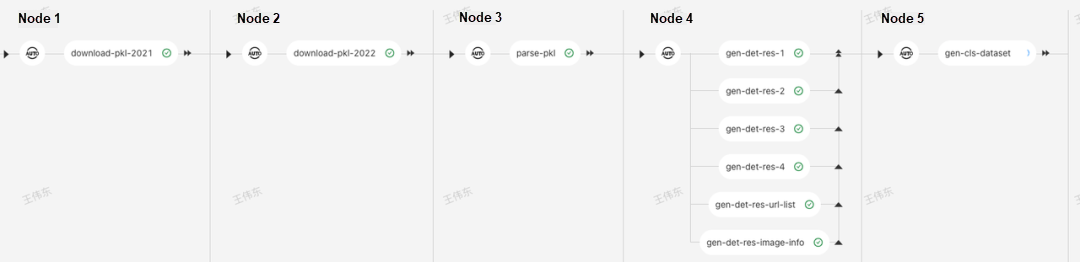

A real business scenario is rarely a single task node. For example, a complete model iteration roughly includes data processing, model training, updating online inference services with the new model, small traffic verification, and formal release. KubeAI provides a workflow orchestration engine based on Argo Workflow. Workflow nodes support custom tasks, platform preset template tasks, and various deep learning training jobs (such as TFJob and PyTorchJob).
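The model iteration steps listed above form a dependency graph. The sketch below models them with Python's standard `graphlib` module and runs them in dependency order, stopping at the first failed step. It only illustrates the orchestration idea and is not Argo Workflow syntax. The step names and handler functions are assumptions.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on.
PIPELINE = {
    "data-processing": set(),
    "model-training": {"data-processing"},
    "update-inference-service": {"model-training"},
    "small-traffic-verification": {"update-inference-service"},
    "formal-release": {"small-traffic-verification"},
}


def run_pipeline(dag, handlers):
    """Run steps in dependency order; stop after the first step whose handler fails."""
    executed = []
    for step in TopologicalSorter(dag).static_order():
        handler = handlers.get(step, lambda: True)  # steps without a handler succeed
        succeeded = handler()
        executed.append(step)
        if not succeeded:
            break
    return executed


# All steps succeed.
full_run = run_pipeline(PIPELINE, {})

# Verification fails, so the formal release is never reached.
failed_run = run_pipeline(PIPELINE, {"small-traffic-verification": lambda: False})
print(full_run)
print(failed_run)
```

Gating the formal release on small traffic verification is the main reason to express the iteration as a workflow instead of a script that runs every step unconditionally.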

CV algorithm models are generally developed by studying theoretical algorithms while building models for engineering practice. Therefore, these models can be debugged at any time. Because CV algorithm models are small, their training cost is lower than that of search and recommendation models, so CV developers are more accustomed to writing training scripts in Notebook and training directly there. Users can configure resources for Notebook (such as CPU, GPU, and network storage disk).

Once the training script meets the requirements through debugging, users can use the KubeAI job management feature to configure the training script as a standalone training job or a distributed training job and submit it to the KubeAI platform for execution. KubeAI schedules the task to a resource pool with sufficient resources for running. After successful execution, KubeAI pushes the model to the model repository and registers it in the model list of KubeAI or saves the model in a specified location for users to make a selection and confirmation.

After the model is generated, users can directly deploy the model as an inference service in the model service management of KubeAI. When a new version of the model is generated later, users can configure a new model version for the inference service. Then, according to whether the inference engine supports hot model updates, users can upgrade the model in the inference service by redeploying the service or creating a model upgrade task.

In the machine authentication scenario, AI-Pipeline orchestrates the process above and performs periodic model iteration, which improves the efficiency of model iteration by about 65%. After CV scenarios were connected to KubeAI, the previous local training method was no longer used, and resource utilization improved significantly because resources are obtained flexibly on KubeAI on demand. In terms of model management, inference service management, and model iteration, R&D efficiency improved by about 50%.

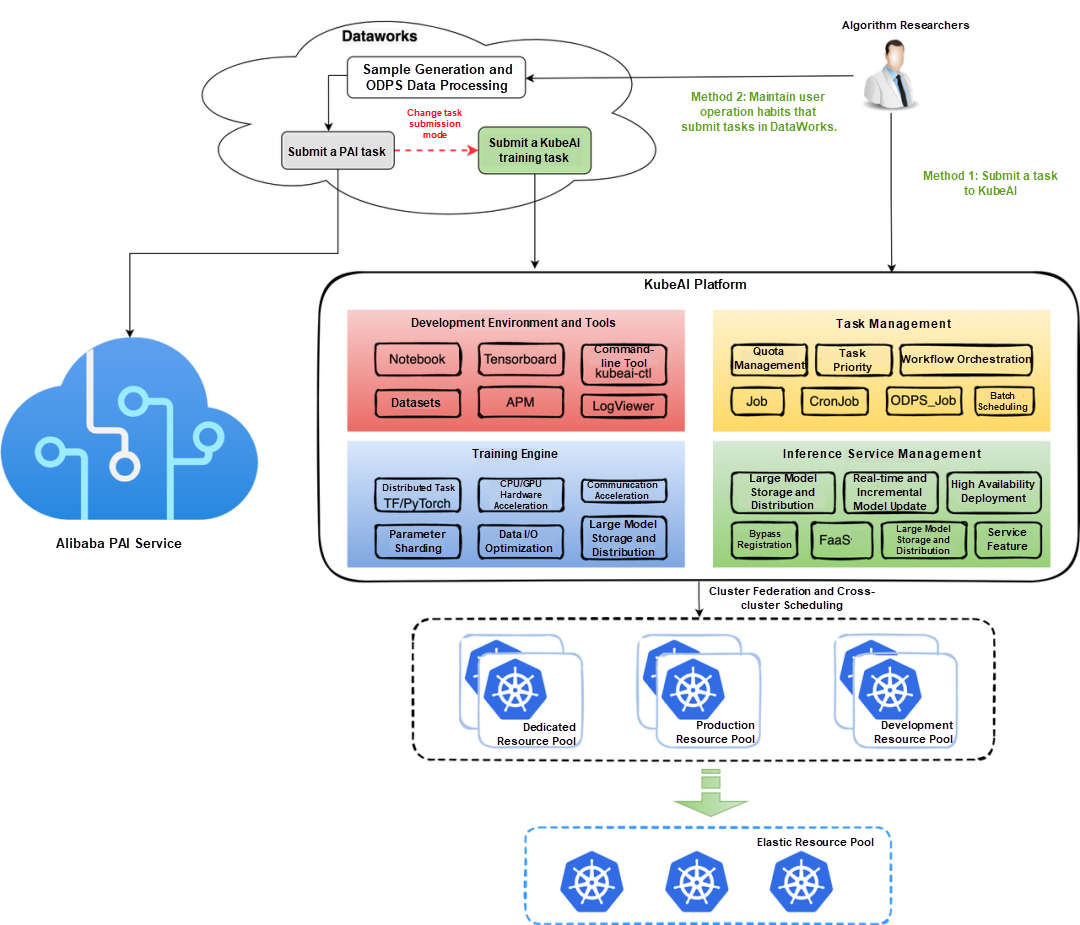

Compared with CV models, search and recommendation models have a higher training cost, which is caused by large data samples, long training time, and large amounts of resources for a single task. Before KubeAI was implemented, since our data was stored on ODPS (a data warehouse solution provided by Alibaba Cloud, now renamed MaxCompute), algorithm developers of search and recommendation models built data processing tasks on the DataWorks console (an ODPS-based big data development and governance platform) and submitted model training jobs to PAI. However, PAI is a public cloud product, and the cost of a single task submitted to it is higher than the cost of resources required by the task. The higher part can be understood as the technical service fee. In addition, this public cloud product cannot meet the internal cost control requirements of enterprises.

After KubeAI was gradually implemented, we migrated the model training jobs of the search and recommendation scenario from PAI to KubeAI in two ways. The first preserves users' habit of working on DataWorks: some SQL tasks are still executed on DataWorks, which then submits tasks to KubeAI through shell commands. The second submits tasks to KubeAI directly. We expect that as the data warehouse infrastructure improves, the second method will become the only choice.

The KubeAI development environment and tools are used throughout the development of search and recommendation models. With our self-developed training framework, CPU-only training can take the same time as GPU training on PAI. The training engine also supports large model training and real-time training. It works with multiple storage types (OSS and file storage) and with model distribution solutions to ensure a high success rate for large model training jobs and efficient model updates for online services.

In terms of resource scheduling and management, KubeAI uses technical means such as cluster federation, overcommitment, and binding tasks to CPU cores to gradually move training jobs from dedicated resource pools to elastic resources allocated per task Pod, and then schedules them to online resource pools and public resource pools. KubeAI also takes advantage of the fact that production tasks run periodically and development tasks run mainly in the daytime to implement staggered scheduling and differentiated scheduling (for example, elastic resources for small tasks and conventional resources for large tasks). Data from recent months shows that we have consistently absorbed a relatively large increase in tasks with little increase in total resources.
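The differentiated and staggered scheduling rules mentioned above can be sketched as a simple planning function. The 8-core cutoff for a small task, the pool names, and the rule that periodic production tasks run in an off-peak window are assumptions made for illustration and are not KubeAI's actual policy.

```python
def plan_tasks(tasks, small_task_cpu=8):
    """Assign each task a resource pool (by size) and a time window (by kind)."""
    planned = []
    for task in tasks:
        # Differentiated scheduling: small tasks use elastic resources.
        pool = "elastic" if task["cpu"] <= small_task_cpu else "conventional"
        # Staggered scheduling (assumed rule): periodic jobs avoid daytime development load.
        window = "off-peak" if task["kind"] == "periodic" else "daytime"
        planned.append((task["name"], pool, window))
    return planned


# Hypothetical tasks.
tasks = [
    {"name": "daily-retrain", "kind": "periodic", "cpu": 64},
    {"name": "notebook-debug", "kind": "development", "cpu": 4},
]
plan = plan_tasks(tasks)
print(plan)
```

Separating the pool decision from the time-window decision keeps the two policies independent, so either threshold can be tuned without touching the other.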

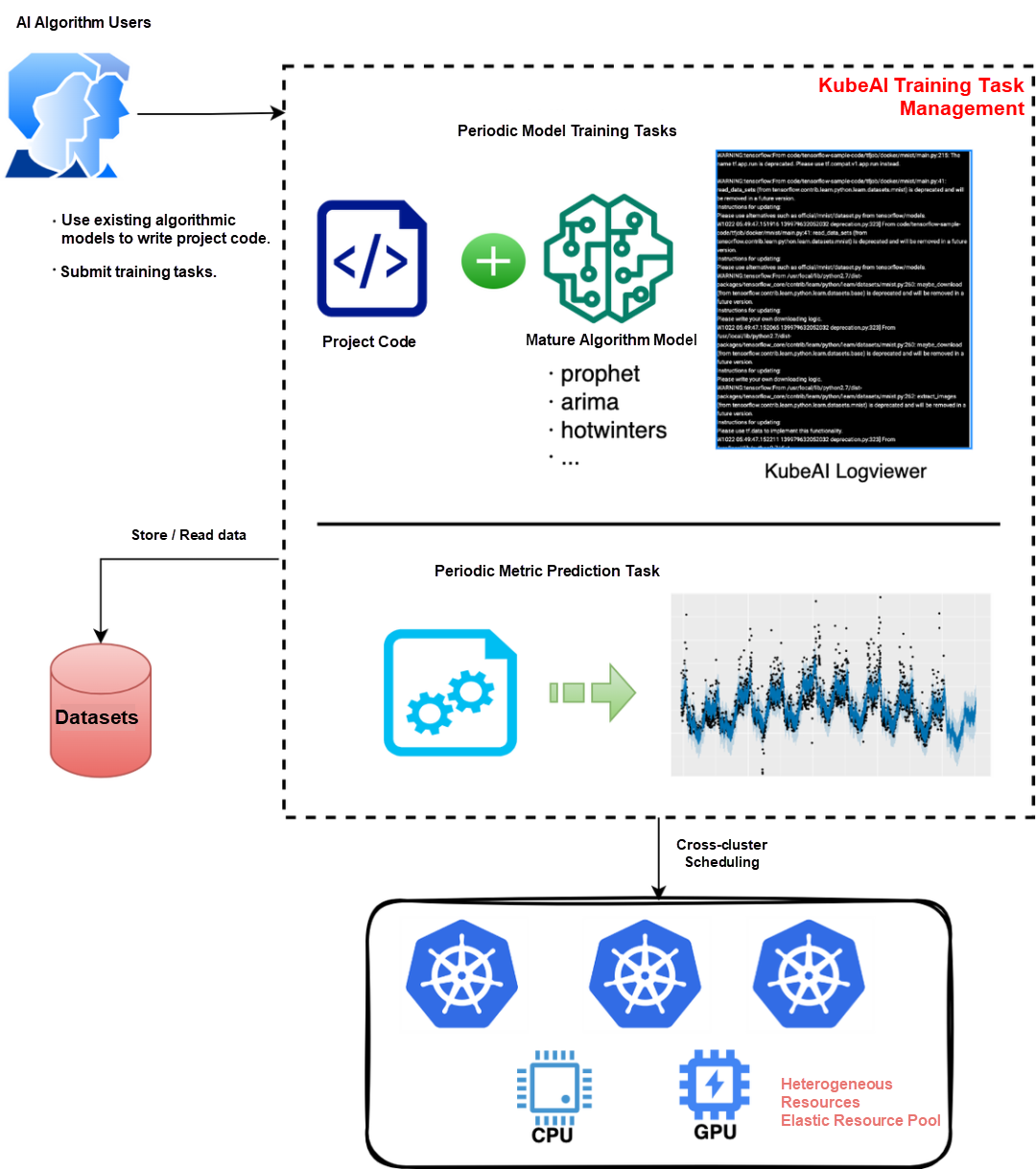

This is a typical scenario in which a non-algorithm business uses AI capabilities, for example, using the Facebook Prophet algorithm to predict the baseline of a business metric. KubeAI provides basic AI capabilities for these scenarios and meets the need to verify mature algorithms quickly. Users only need to implement the algorithm model in engineering code (using existing best practices or secondary development), create a container image, submit a task on KubeAI, and start the execution to obtain the desired results. Alternatively, users can run training and inference periodically to obtain baseline prediction results.

Users can use computing resources or other heterogeneous resources for task execution. These resources are configured as needed. Taking the 12 metrics of an online business scenario as an example, there are nearly 20,000 tasks executed every day. Compared with the previous resource costs of similar requirements, KubeAI helps save nearly 90% of the cost and improve the R&D efficiency by about three times.

Dewu containerized its business in a relatively short period. This was possible thanks to increasingly mature cloud-native technology on the one hand and, on the other, our in-depth understanding of our business scenarios and our ability to provide targeted solutions. After analyzing the pain points and requirements of algorithm business scenarios in depth, we implemented KubeAI with the goals of improving engineering efficiency and resource utilization in AI business scenarios and lowering the threshold for developing AI models and services.

In the future, we will continue to make efforts in training engine optimization, fine-grained scheduling of AI tasks, and elastic model training to improve the efficiency of AI model training and iteration and increase resource utilization.

Disclaimer: This is a translated article from Dewu, all rights reserved to the original author. The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
