By Xuefu Zhang, a Senior Technical Expert in Hologres Research and Development at Alibaba

Hologres is a real-time data warehouse developed by Alibaba Cloud. This cloud-native system combines real-time serving and big data analytics. It is compatible with the PostgreSQL protocol and integrates seamlessly with the big data ecosystem. A single data architecture supports real-time writes, real-time queries, and offline federated analysis. This simplifies business architectures, provides real-time decision-making capabilities, and helps big data deliver greater commercial value. Hologres began inside Alibaba Group and was later commercialized on the cloud, and it has kept strengthening its core competitiveness as business needs and technology evolve. To help everyone learn more about Hologres, we plan to publish a series of articles on its underlying technical principles. Topics will include the high-performance storage engine, the high-efficiency query engine, high-throughput writes, and high-QPS queries. Please stay tuned.

This article analyzes how the Hologres high-performance analytics engine accelerates queries on data managed by Alibaba Cloud Data Lake Formation (DLF).

As cloud services gain wider acceptance, cloud users increasingly store the data they collect in low-cost object storage services such as OSS and S3. Cloud-based data management has also spread, and metadata is increasingly stored in Alibaba Cloud DLF. The combination of OSS and DLF has created a new way to build data lakes. As these cloud data lakes grow, demand for the data lake house is rising. The data lake house architecture combines external storage in open formats with high-performance query engines, which makes the data architecture flexible, scalable, and pluggable.

In data lake house scenarios, data is stored in OSS and managed by DLF. Hologres integrates seamlessly with DLF and can query this data without importing or exporting it. Hologres is compatible with all file types supported by DLF and enables second-level and sub-second-level interactive analysis of petabytes of offline data. Two components make this possible. The DLF-Access engine of Hologres accesses DLF metadata and OSS data. The high-performance distributed execution engine HQE further improves the performance of accessing DLF and OSS.

Hologres DLF/OSS query acceleration provides the following benefits:

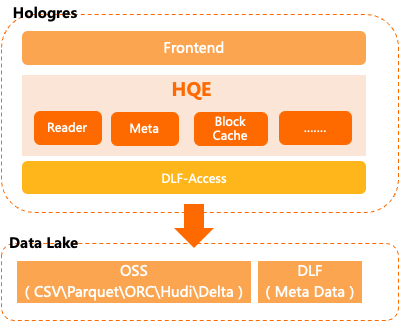

As the figure shows, the data lake house architecture that Hologres uses to access DLF/OSS is concise.

In the cloud data lake, data is stored in OSS and metadata is stored in DLF. When Hologres runs a query that accelerates access to DLF or OSS data, the architecture is as follows:

DLF-Access is a distributed data access engine that consists of multiple peer processes and can scale out. Any process can play either of two roles:

On top of the DLF-Access architecture, Hologres delivers high-performance queries on DLF/OSS data through the following technical innovations:

1) Abstract Distributed External Tables

Hologres builds on the distributed nature of DLF/OSS and abstracts a distributed external table for data access. This works whether the data resides in a traditional data lake or a cloud data lake.
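To make the idea of a distributed external table concrete, here is a minimal sketch under a loudly stated assumption. It assumes that each data lake file is cut into fixed-size byte-range splits, and that those splits are spread round-robin across several reader processes. The split size, the round-robin policy, and the names `Split`, `make_splits`, and `assign_splits` are hypothetical illustrations, not the actual Hologres or DLF-Access planner.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Split:
    path: str    # object path in the data lake (hypothetical)
    offset: int  # first byte covered by this split
    length: int  # number of bytes covered by this split


def make_splits(file_sizes: Dict[str, int], split_size: int) -> List[Split]:
    """Cut every file into byte ranges of at most split_size bytes (assumed policy)."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    splits: List[Split] = []
    for path in sorted(file_sizes):
        size = file_sizes[path]
        for offset in range(0, size, split_size):
            splits.append(Split(path, offset, min(split_size, size - offset)))
    return splits


def assign_splits(splits: List[Split], workers: int) -> List[List[Split]]:
    """Hand out splits round-robin so that every reader process gets a share."""
    plan: List[List[Split]] = [[] for _ in range(workers)]
    for i, split in enumerate(splits):
        plan[i % workers].append(split)
    return plan
```

For example, two files of 250 and 100 bytes with a 100-byte split size yield four splits. Two workers then receive two splits each. A real planner would also weigh data locality and file format boundaries, which this sketch ignores.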

2) Seamless Interoperability with DLF Meta

DLF-Access interoperates seamlessly with the metadata of Data Lake Formation, so both metadata and data are available in real time. You can use the IMPORT FOREIGN SCHEMA command to synchronize DLF metadata into Hologres and create the corresponding external tables automatically.
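The following sketch builds the text of a PostgreSQL-style IMPORT FOREIGN SCHEMA statement with safe identifier and literal quoting. You could then run the statement against Hologres through any PostgreSQL client. The server name `dlf_server`, the option `if_table_exist`, and the DLF database name `dlf_db` used below are assumptions for illustration. Check the Hologres documentation for the exact server and option names your instance uses.

```python
from typing import Dict, Optional, Sequence


def quote_ident(name: str) -> str:
    """Quote an identifier the PostgreSQL way: wrap in double quotes, double embedded ones."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a string literal: wrap in single quotes, double embedded ones."""
    return "'" + value.replace("'", "''") + "'"


def build_import_foreign_schema(
    remote_schema: str,
    server: str,
    local_schema: str,
    limit_to: Optional[Sequence[str]] = None,
    options: Optional[Dict[str, str]] = None,
) -> str:
    """Build an IMPORT FOREIGN SCHEMA statement (standard PostgreSQL clause order)."""
    parts = [f"IMPORT FOREIGN SCHEMA {quote_ident(remote_schema)}"]
    if limit_to:
        tables = ", ".join(quote_ident(t) for t in limit_to)
        parts.append(f"LIMIT TO ({tables})")
    parts.append(f"FROM SERVER {quote_ident(server)}")
    parts.append(f"INTO {quote_ident(local_schema)}")
    if options:
        # Option names are assumed to be trusted, plain lowercase identifiers.
        opts = ", ".join(f"{key} {quote_literal(val)}" for key, val in options.items())
        parts.append(f"OPTIONS ({opts})")
    return " ".join(parts) + ";"


if __name__ == "__main__":
    # Hypothetical names: dlf_db, dlf_server, and if_table_exist are assumptions.
    print(build_import_foreign_schema("dlf_db", "dlf_server", "public",
                                      options={"if_table_exist": "update"}))
```

Quoting every identifier keeps mixed-case or unusual DLF table names intact. It also prevents names from being misread as SQL syntax.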

3) Vectorized Data Reading and Conversion

DLF-Access makes full use of the characteristics of data lake storage files to read and convert data in a vectorized way, which improves performance.
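As a rough illustration of column-at-a-time conversion, the sketch below decodes a dictionary-encoded column chunk in one batch, producing a single contiguous int64 buffer. Dictionary encoding is only an example of a file-level feature that a reader can exploit. Whether DLF-Access uses this particular encoding, and the function name `decode_column_batch`, are assumptions. Python cannot show SIMD speedups, so the point here is the shape of the work: one call per column chunk instead of one call per row.

```python
from array import array
from typing import Sequence


def decode_column_batch(dictionary: Sequence[int], indices: Sequence[int]) -> array:
    """Decode a whole dictionary-encoded int64 column chunk into one contiguous buffer."""
    lookup = array("q", dictionary)  # dictionary values as a typed, contiguous array
    for idx in indices:
        if not 0 <= idx < len(lookup):
            raise IndexError(f"dictionary index {idx} out of range")
    # One pass over the index vector fills the output buffer for the entire chunk.
    return array("q", map(lookup.__getitem__, indices))
```

For example, `decode_column_batch([100, 200, 300], [2, 0, 0, 1])` yields an int64 array holding 300, 100, 100, 200. A native engine would run this same loop over the index vector with tight, branch-free code.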

4) Returning Data in a Shared Format

The converted data is returned in the shared Apache Arrow format. Hologres can use the returned data directly, which avoids the overhead of additional serialization and deserialization.
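Why a shared format saves work can be seen in a simplified, stdlib-only model of Arrow's fixed-width column layout. The model has a validity bitmap with least-significant-bit numbering, where 1 means the value is non-null, plus a contiguous values buffer. The consumer reads values through a typed `memoryview` over the raw bytes, with no parsing step. This is an illustration of the layout idea, not the `pyarrow` API. The class name `Int64Column` is hypothetical, and producer and consumer share one process here.

```python
from array import array
from typing import List, Optional


class Int64Column:
    """Arrow-style int64 column: a validity bitmap plus a contiguous values buffer."""

    def __init__(self, values: List[Optional[int]]):
        self.length = len(values)
        bitmap = bytearray((self.length + 7) // 8)
        data = array("q", [0] * self.length)  # null slots keep a placeholder 0
        for i, v in enumerate(values):
            if v is not None:
                bitmap[i // 8] |= 1 << (i % 8)  # LSB-first bit numbering, 1 = valid
                data[i] = v
        self.validity = bytes(bitmap)
        self.values = data.tobytes()  # raw bytes, as they would sit in a shared buffer

    def view(self) -> memoryview:
        """Typed view over the values buffer: no copy and no deserialization."""
        return memoryview(self.values).cast("q")

    def is_valid(self, i: int) -> bool:
        return bool((self.validity[i // 8] >> (i % 8)) & 1)


if __name__ == "__main__":
    col = Int64Column([7, None, 42])
    vals = col.view()
    print(sum(vals[i] for i in range(col.length) if col.is_valid(i)))
```

A consumer that understands the layout can compute directly on the producer's buffers. A row-oriented wire format would first have to be decoded value by value.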

5) Block-Mode I/O

DLF-Access and Hologres transmit data in blocks to reduce network latency and load. By default, each block contains 8192 rows.
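The batching idea can be sketched in a few lines. Only the 8192-row default comes from this article. The generator name `iter_blocks` and the assumption that each block maps to one network transfer are illustrative.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

DEFAULT_BLOCK_ROWS = 8192  # default block size stated in the article


def iter_blocks(rows: Iterable[T], block_rows: int = DEFAULT_BLOCK_ROWS) -> Iterator[List[T]]:
    """Group a row stream into blocks so that one transfer carries many rows."""
    if block_rows <= 0:
        raise ValueError("block_rows must be positive")
    block: List[T] = []
    for row in rows:
        block.append(row)
        if len(block) == block_rows:
            yield block
            block = []
    if block:
        yield block  # final, partially filled block
```

For 20,000 rows, this produces blocks of 8192, 8192, and 3616 rows. That is three transfers instead of 20,000 per-row round trips. Larger blocks amortize per-message overhead but increase memory use and the time before the first rows arrive.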

6) Programming Language Isolation

Hologres is a cloud-native OLAP engine developed in C/C++. DLF cloud data lakes are highly compatible with traditional open-source data lakes, which mostly provide Java-based libraries. Running DLF-Access as an independent engine isolates the two programming languages. This avoids costly conversions between the native engine and the Java virtual machine while still supporting the flexible and diverse data formats of data lakes.

As described above, Hologres uses DLF-Access to accelerate queries on DLF external tables, and query performance is good. However, an extra layer of RPC interaction sits between Hologres and DLF-Access. When the data volume is large, the network can become a bottleneck.

Therefore, building on its existing capabilities, Hologres V1.3 optimizes the execution engine so that HQE can read DLF tables directly. This improves read performance by more than 30% compared with earlier versions.

The improvement comes mainly from the following aspects:

1) The RPC interaction and an extra data redistribution step are eliminated.

2) The Hologres Block Cache is reused. Repeated queries can read data directly from memory instead of issuing storage I/O, which further accelerates queries.

3) The existing filter pushdown capability is reused to reduce the amount of data that needs to be processed.

4) Pre-reading and caching are implemented at the underlying I/O layer to speed up scans.
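Points 2 and 4 above can be illustrated with a generic block cache. The sketch below is an LRU cache of file blocks with simple sequential read-ahead, so a repeated scan is served from memory without touching storage. The article does not describe the internals of the Hologres Block Cache. The class name `BlockCache`, the LRU policy, and the synchronous read-ahead are all assumptions made for illustration.

```python
from collections import OrderedDict
from typing import Callable, Tuple

BlockKey = Tuple[str, int]  # (file path, block index)


class BlockCache:
    """Generic LRU cache of file blocks with sequential read-ahead (illustrative)."""

    def __init__(self, read_block: Callable[[str, int], bytes], capacity: int, read_ahead: int = 1):
        self._read_block = read_block  # fetches one block from remote storage
        self._capacity = capacity
        self._read_ahead = read_ahead
        self._blocks: "OrderedDict[BlockKey, bytes]" = OrderedDict()
        self.storage_reads = 0  # counts how often storage was actually accessed

    def _load(self, key: BlockKey) -> bytes:
        if key in self._blocks:
            self._blocks.move_to_end(key)  # cache hit: mark as most recently used
            return self._blocks[key]
        data = self._read_block(*key)  # cache miss: go to storage
        self.storage_reads += 1
        self._blocks[key] = data
        if len(self._blocks) > self._capacity:
            self._blocks.popitem(last=False)  # evict the least recently used block
        return data

    def get(self, path: str, index: int, num_blocks: int) -> bytes:
        """Return one block and pre-read the following blocks of the same file."""
        data = self._load((path, index))
        # Assume a sequential scan; a real engine would prefetch asynchronously.
        for nxt in range(index + 1, min(index + 1 + self._read_ahead, num_blocks)):
            self._load((path, nxt))
        return data
```

During a first scan of a four-block file, each block is fetched from storage exactly once, partly through read-ahead. A second scan of the same file is served entirely from memory, as long as the cache capacity covers the file.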

Currently, DLF supports transactional data lake table formats such as Hudi and Delta. Hologres uses DLF-Access to read data from these tables directly, without any additional operations. This meets the requirements of a real-time data lake house architecture.

Hologres integrates with DLF/OSS through DLF-Access and makes full use of their strengths. Aiming for extreme performance, Hologres accelerates queries directly on data in cloud data lakes. This makes interactive analytics easier and more efficient for users, reduces analysis costs, and delivers a data lake house solution.

We will launch a series of articles in the future to reveal the underlying principles of Hologres. Please stay tuned!
