PolarDB for PostgreSQL is an enterprise-level database product developed by Alibaba Cloud. It uses a compute-storage separation architecture and is compatible with PostgreSQL and Oracle. Storage and computing capacity can each be scaled out. PolarDB provides enterprise-level database features such as high reliability, high availability, and elastic scaling. It also has large-scale parallel computing capabilities that handle mixed OLTP and OLAP workloads, plus multi-model features (such as spatio-temporal, vector, search, and graph) that meet new enterprise requirements for rapid data processing.

PolarDB supports multiple deployment modes: storage and computing separation deployment, X-Paxos three-node deployment, and local disk deployment.

As user business data becomes larger and more complex, traditional database systems face huge challenges:

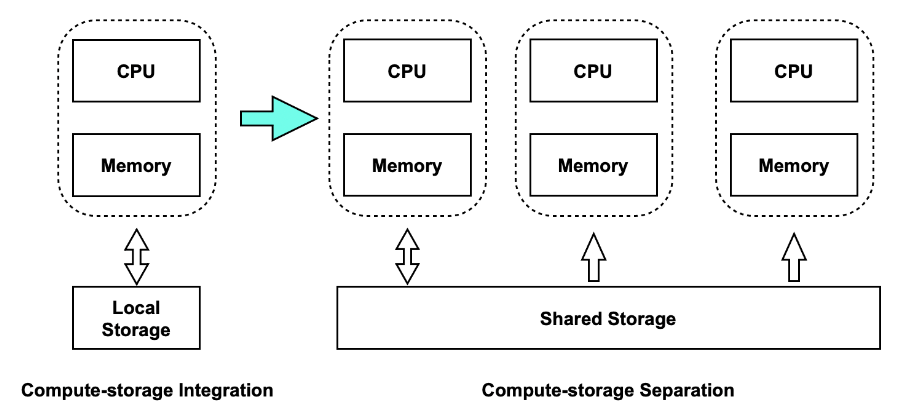

The PolarDB cloud-native database was developed to solve the preceding problems of traditional databases. It adopts a self-developed architecture that separates computing clusters from storage clusters. It has the following advantages:

The following section describes the architecture of PolarDB from two aspects: storage-computing separation architecture and HTAP architecture.

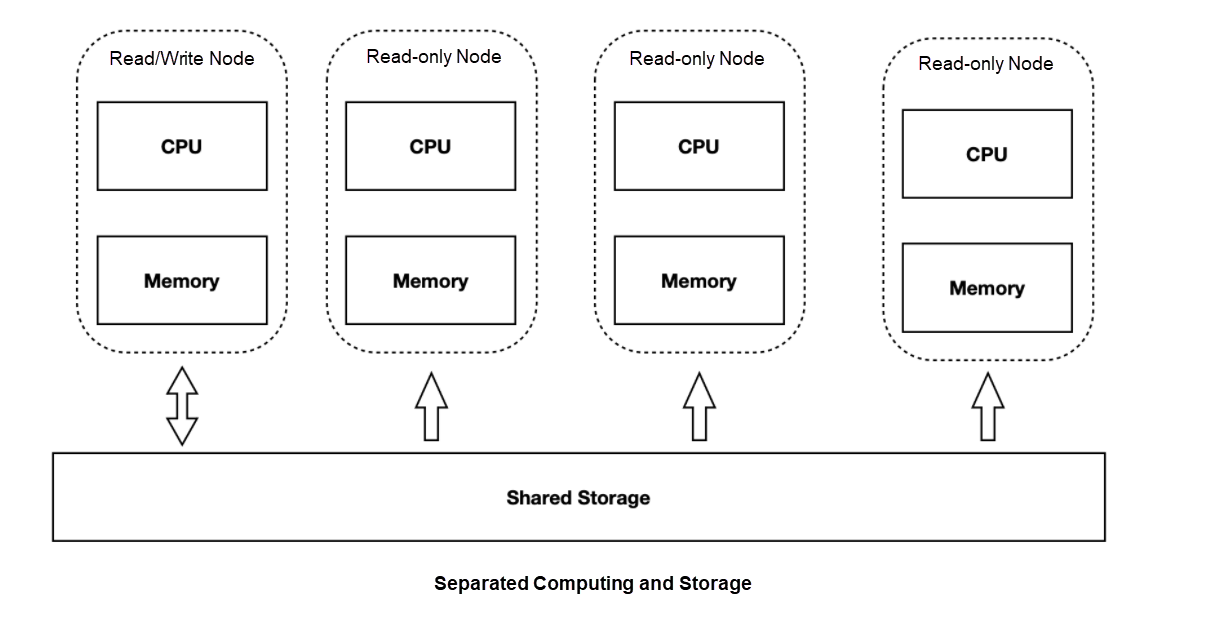

PolarDB is designed to separate storage and computing. Storage clusters and computing clusters can be expanded independently:

Based on shared storage, the primary node shares its stored data with multiple read-only nodes. The primary node can therefore no longer flush dirty pages the way a traditional database does. Otherwise:

The first problem requires multi-version pages. The second requires the primary node to control how fast it flushes dirty pages.

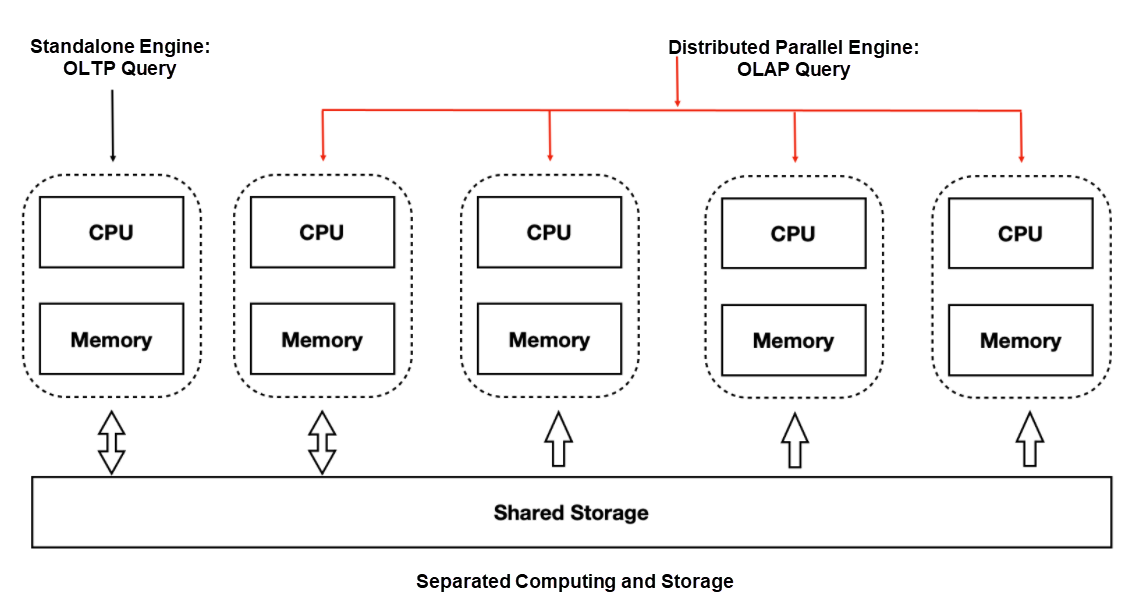

After read/write splitting, a single compute node can neither exploit the large I/O bandwidth on the storage side nor accelerate large queries by adding computing resources. We developed MPP-style distributed parallel execution on top of Shared-Storage to accelerate OLAP queries in OLTP scenarios. PolarDB lets one set of OLTP data be used by the following two types of computing engines:

On the same hardware resources, performance reaches 90% of traditional Greenplum. PolarDB also has SQL-level elasticity: when computing power is insufficient, the number of CPUs that participate in an OLAP query can be increased at any time without redistributing data.

With shared storage, the database moves from the traditional shared-nothing architecture to a shared-storage architecture. The following issues need to be resolved:

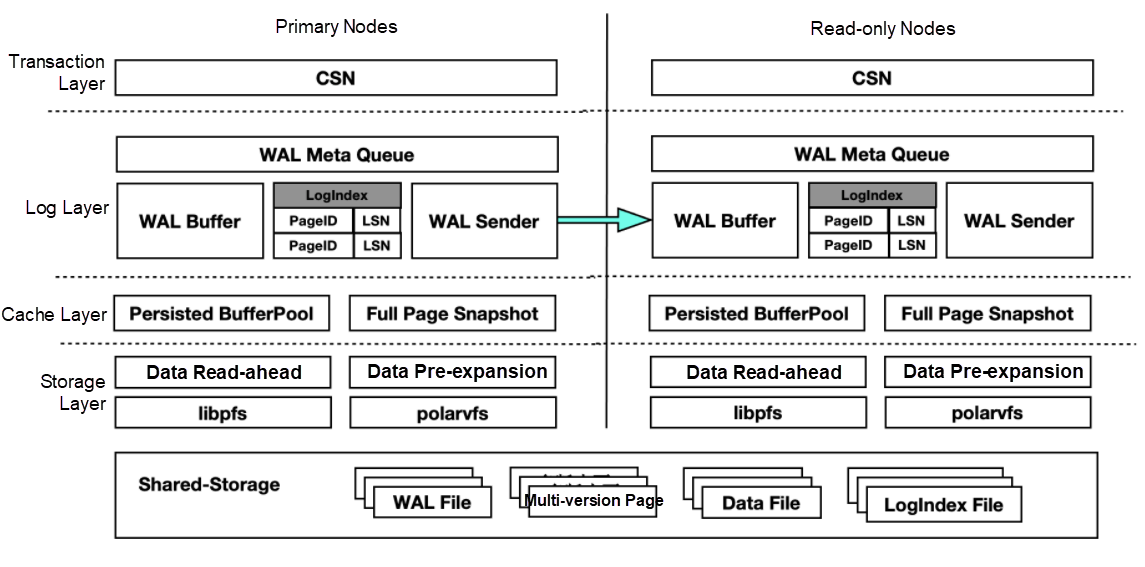

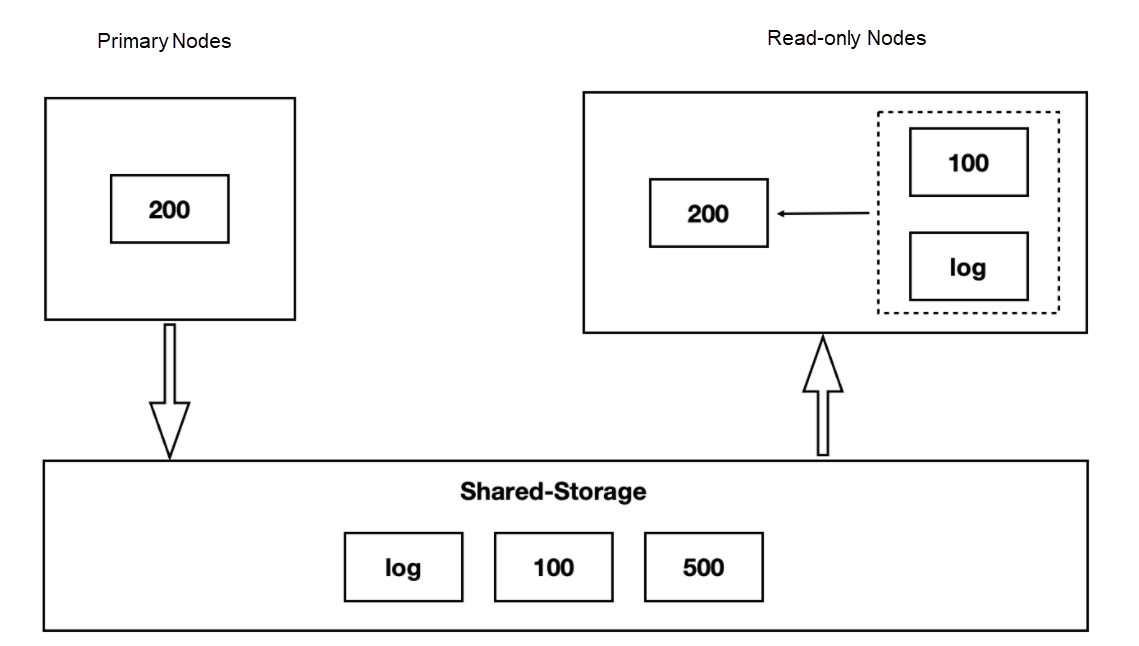

First, let's look at the architecture of Shared-Storage-based PolarDB:

In a traditional shared-nothing database, the primary node and each read-only node have their own memory and storage. You only need to copy WAL logs from the primary node to the read-only node and replay them on the read-only node in sequence. This is also the basic principle of a replicated state machine.

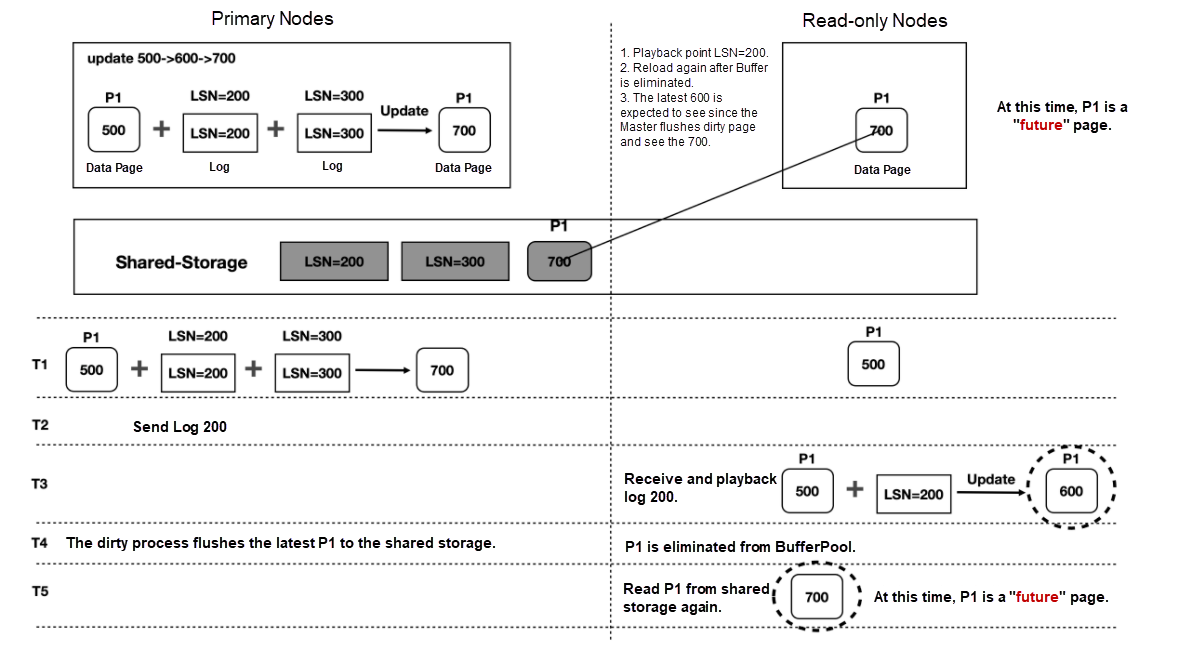
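The replay-in-sequence idea can be sketched in a few lines. This is a minimal illustration of a replicated state machine, not PostgreSQL's actual redo code: `WalRecord`, `replay`, and the dictionary standing in for the replica's pages are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WalRecord:
    lsn: int        # log sequence number; defines the replay order
    page_id: int    # page the record modifies
    value: str      # new page content (stand-in for a real redo payload)


def replay(pages: dict, records: list, applied_lsn: int = 0) -> int:
    """Apply records newer than applied_lsn in LSN order; return the new applied LSN."""
    for rec in sorted(records, key=lambda r: r.lsn):
        if rec.lsn <= applied_lsn:
            continue  # already applied, skip it
        pages[rec.page_id] = rec.value
        applied_lsn = rec.lsn
    return applied_lsn


# The primary's log, shipped to a replica that starts empty.
primary_log = [WalRecord(1, 10, "a"), WalRecord(2, 11, "b"), WalRecord(3, 10, "c")]
replica_pages = {}
replica_lsn = replay(replica_pages, primary_log)
```

Because every replica applies the same records in the same order, each one ends in the same state as the primary.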

As mentioned earlier, after the separation of storage and computing, the pages read on Shared-Storage are consistent, and the memory state is obtained by reading the latest WAL from Shared-Storage and playing it back, as shown in the following figure:

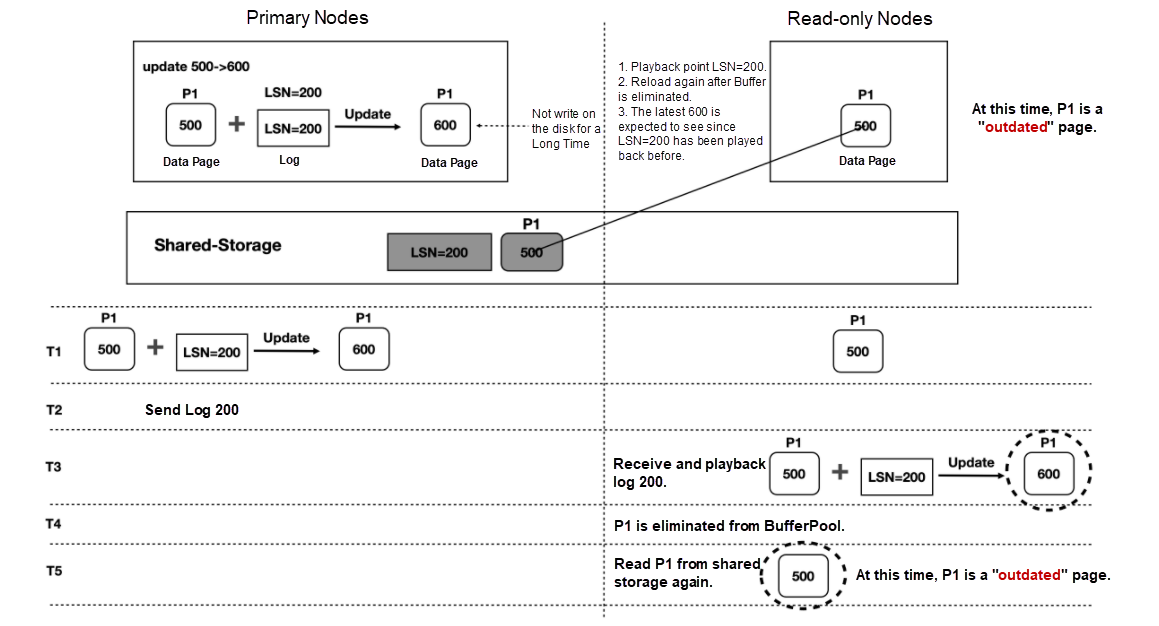

In the preceding process, pages that a read-only node has replayed from logs can be evicted from memory. The node must then read them from storage again, and the page it reads may be an older version. Such pages are called outdated pages. Please see the following figure:

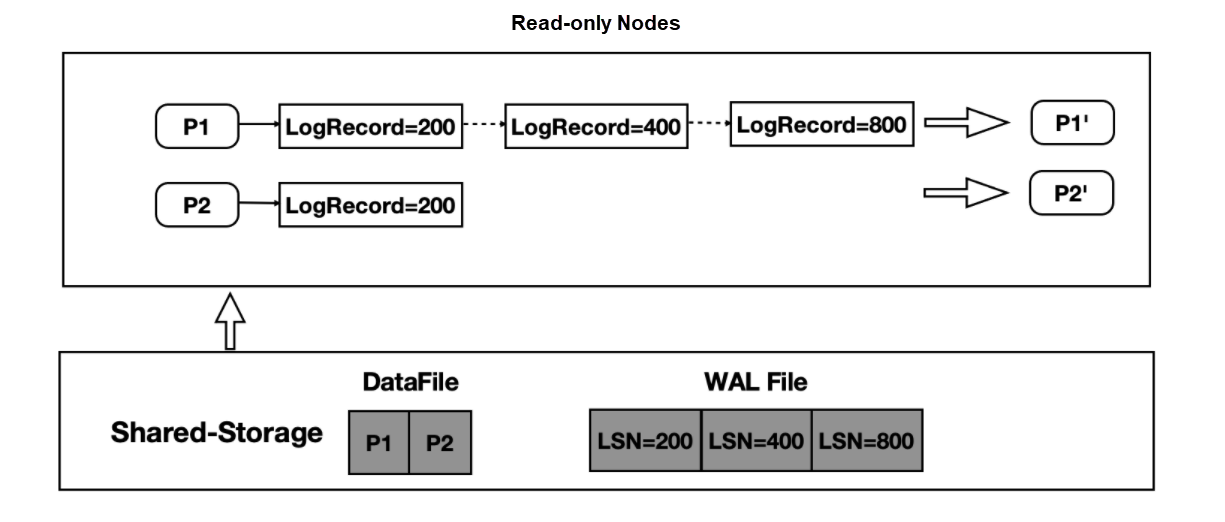

When a read-only node reads a page at any time, it needs to find the corresponding Base page and the logs of the corresponding starting point and play them back in sequence (as shown in the following figure):

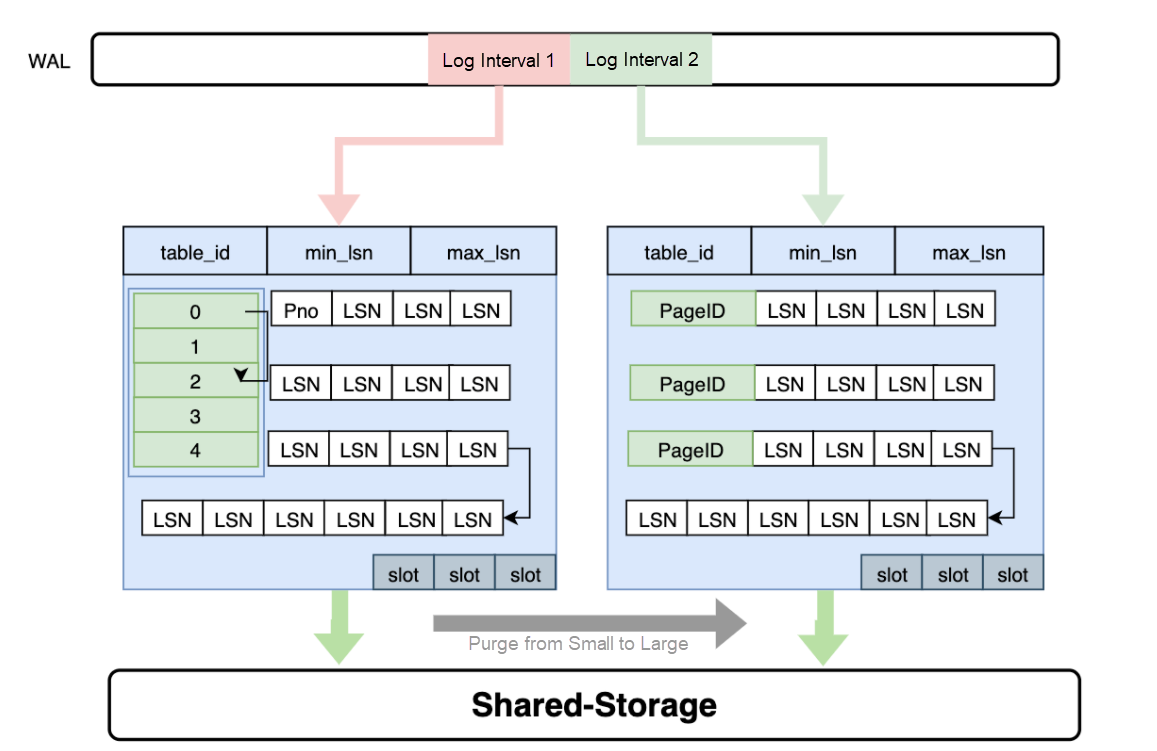

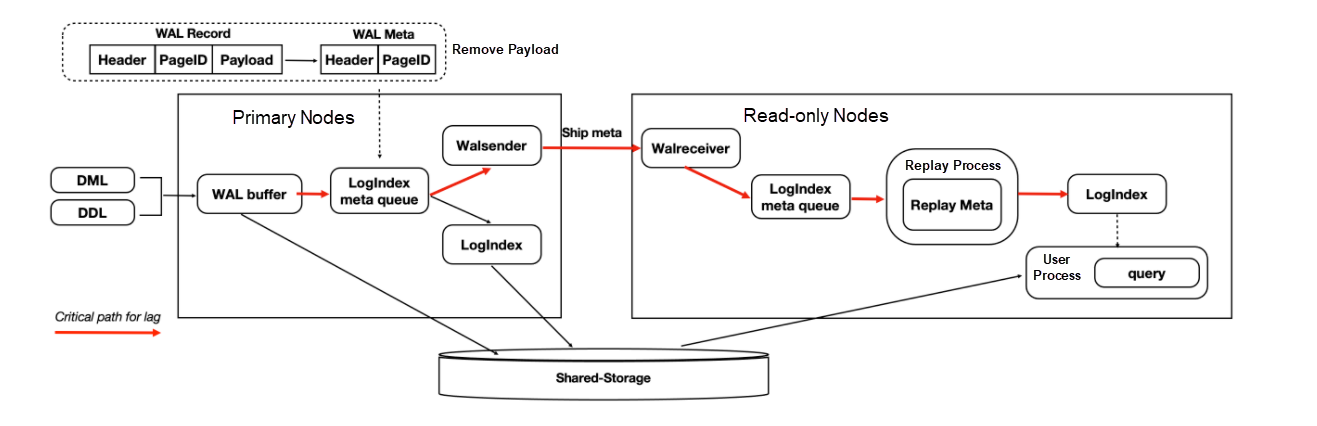

The preceding analysis shows that an inverted index from each page to its logs must be maintained. Because the memory of a read-only node is limited, this page-to-log index must be persisted. PolarDB has designed a persistent index structure for this purpose: LogIndex. LogIndex is a persistent hash data structure.

LogIndex solves the problem of dirty-page flushing depending on outdated pages. Replay on read-only nodes becomes Lazy replay: only the meta information of the log needs to be replayed.
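The page lookup described above can be sketched as follows. This is a minimal in-memory illustration, not PolarDB's implementation: the real LogIndex is a persistent hash structure, while the `LogIndex` class, `read_page`, and the dictionaries standing in for shared storage and the WAL are hypothetical names chosen for the example.

```python
from collections import defaultdict


class LogIndex:
    """Maps page_id -> LSNs of the WAL records that modify that page."""

    def __init__(self) -> None:
        self._index = defaultdict(list)

    def add(self, page_id: int, lsn: int) -> None:
        # Records arrive in LSN order, so each list stays sorted.
        self._index[page_id].append(lsn)

    def lsns_after(self, page_id: int, base_lsn: int) -> list:
        return [lsn for lsn in self._index.get(page_id, []) if lsn > base_lsn]


def read_page(page_id, storage, wal, log_index):
    """Return the current page: base page from storage plus newer WAL applied in order."""
    base_lsn, content = storage[page_id]       # possibly an outdated page
    for lsn in log_index.lsns_after(page_id, base_lsn):
        content = wal[lsn](content)            # apply one redo step
    return content


# Shared storage holds page 7 as of LSN 1; the primary has since logged LSN 2 and 3.
storage = {7: (1, "v1")}
wal = {2: lambda old: old + "+v2", 3: lambda old: old + "+v3"}
index = LogIndex()
index.add(7, 2)
index.add(7, 3)
current = read_page(7, storage, wal, index)
```

The replay process only has to call `add`, which is cheap; the cost of applying redo is paid later by whichever reader actually needs the page.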

After storage and computing are separated, dirty-page flushing still has a second dependency problem: future pages. Please see the following figure:

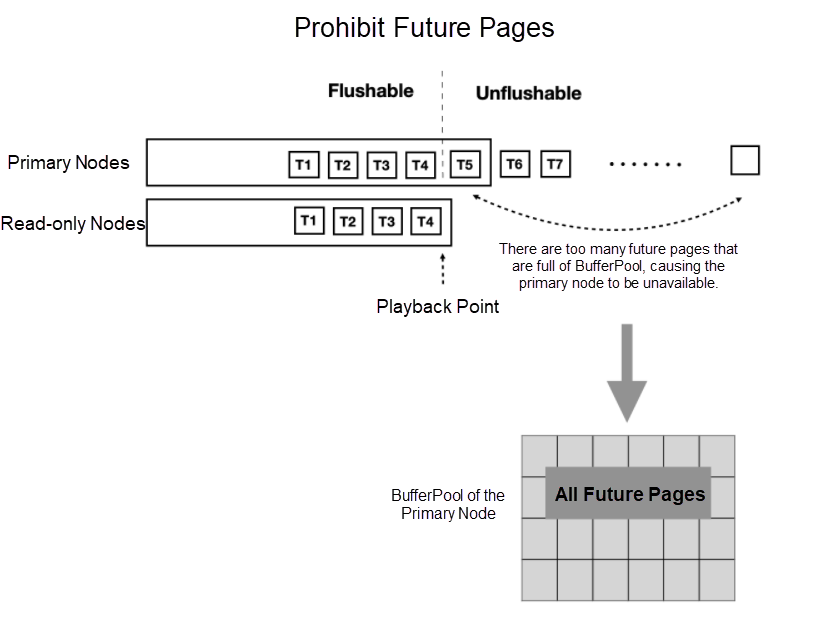

A future page appears when the primary node flushes dirty pages faster than a read-only node replays logs (even though Lazy replay on read-only nodes is already very fast). The solution is therefore to throttle flushing on the primary node: it must not flush past the replay point of the slowest read-only node.

Please see the following figure:

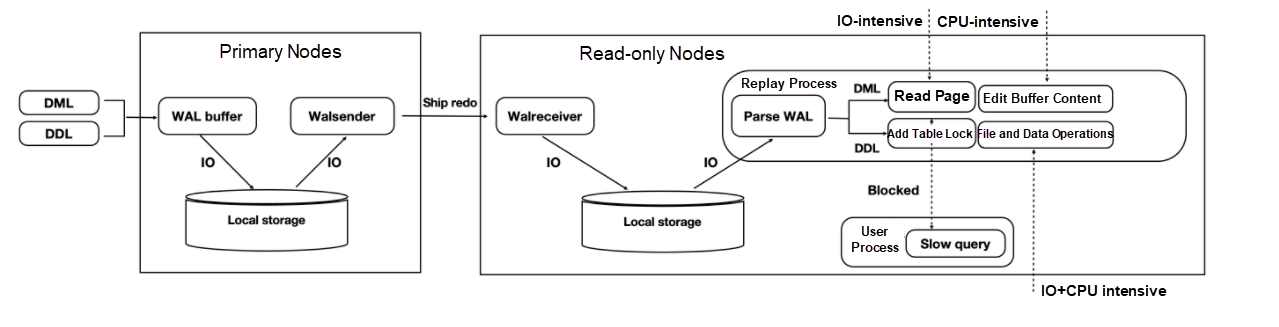
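The flush constraint can be written as a one-line check. The sketch below is an assumption-laden illustration rather than PolarDB code: `can_flush`, the list of replay points, and the page-to-LSN dictionary are hypothetical names.

```python
def can_flush(page_lsn: int, ro_replay_lsns: list) -> bool:
    """A dirty page may be flushed only if no read-only node would see it as a future page."""
    if not ro_replay_lsns:
        return True  # no read-only nodes, nothing to wait for
    return page_lsn <= min(ro_replay_lsns)


ro_replay_lsns = [120, 95, 140]          # replay points reported by three read-only nodes
dirty_pages = {1: 90, 2: 95, 3: 110}     # page_id -> LSN of the page's latest modification
flushable = sorted(p for p, lsn in dirty_pages.items() if can_flush(lsn, ro_replay_lsns))
```

Page 3 has to wait until the slowest node (currently at LSN 95) has replayed past LSN 110.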

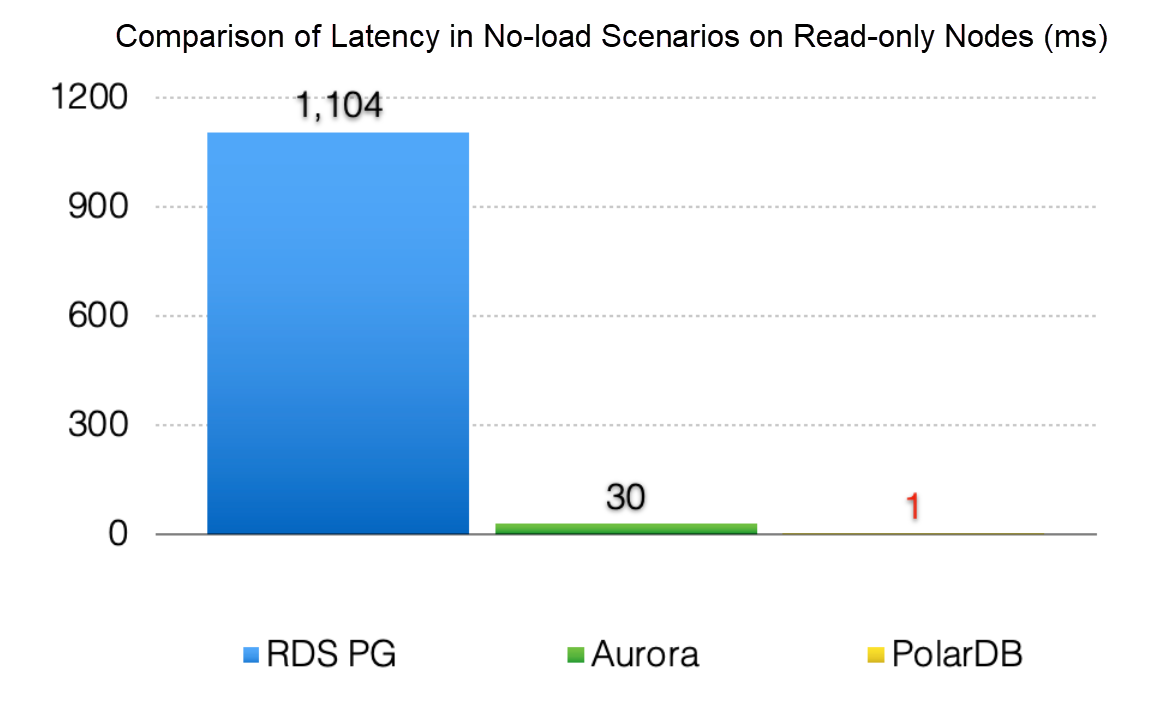

As you can see, the replication path is very long and the latency of read-only nodes is high, which hurts load balancing when the user's service relies on read/write splitting.

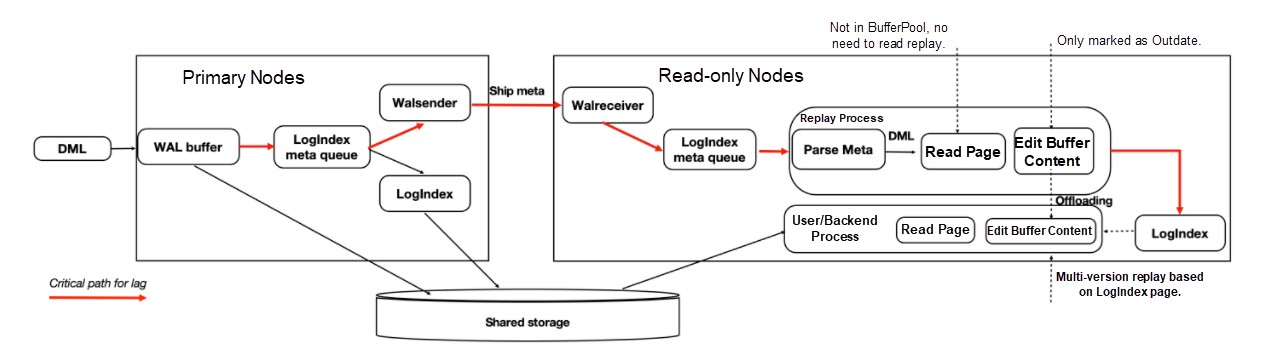

The underlying layer is Shared-Storage, so read-only nodes can read the WAL data they need directly from it. The primary node therefore copies only the metadata of each WAL record (with the payload removed) to the read-only nodes. As a result, the network transmission volume is small, and the I/O on the critical path is reduced. Please see the following figure:

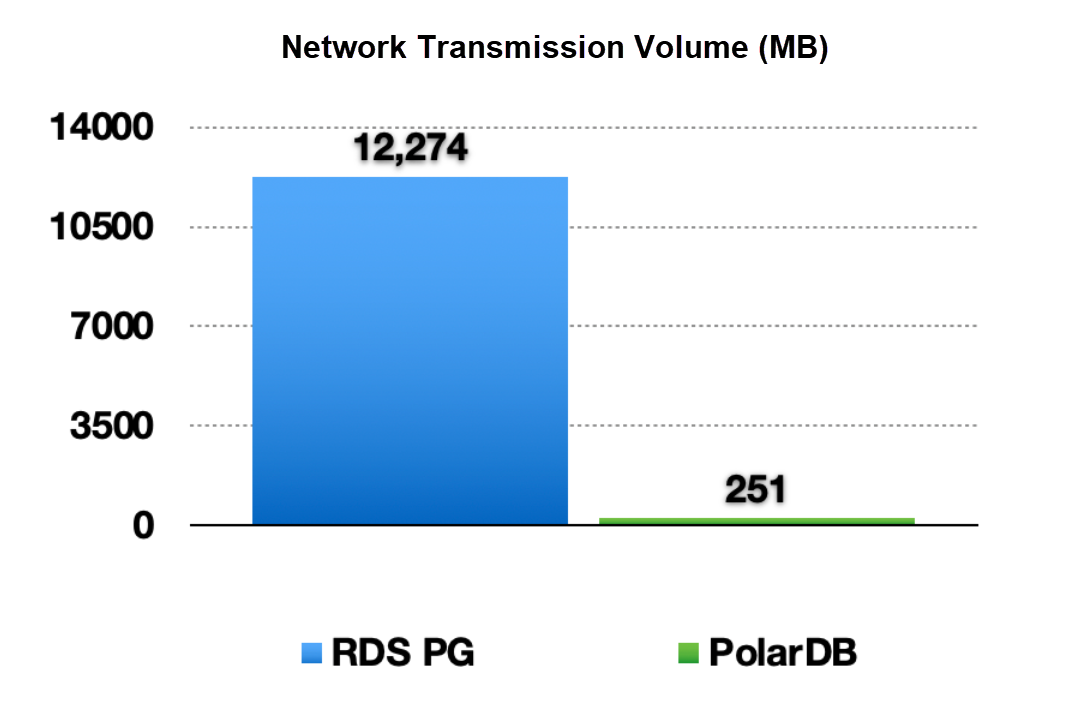
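As a rough illustration of shipping metadata only, the sketch below strips the payload from a record before it is sent. The field layout is an assumption made for the example; `WalRecord`, `WalMeta`, and `to_meta` are hypothetical names, not PolarDB's wire format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WalRecord:
    lsn: int
    page_id: int
    payload: bytes   # redo data; already durable in shared storage


@dataclass(frozen=True)
class WalMeta:
    lsn: int
    page_id: int
    length: int      # size of the payload the read-only node can fetch on demand


def to_meta(record: WalRecord) -> WalMeta:
    """Keep only what a read-only node needs to locate the record later."""
    return WalMeta(record.lsn, record.page_id, len(record.payload))


record = WalRecord(lsn=42, page_id=7, payload=b"x" * 8000)
meta = to_meta(record)
```

The metadata is a few integers regardless of how large the payload is, which is why the transmitted volume drops so sharply.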

The preceding optimization significantly reduces the amount of network transmission between the primary node and read-only nodes. As the following figure shows, the network transmission volume is reduced by 98%.

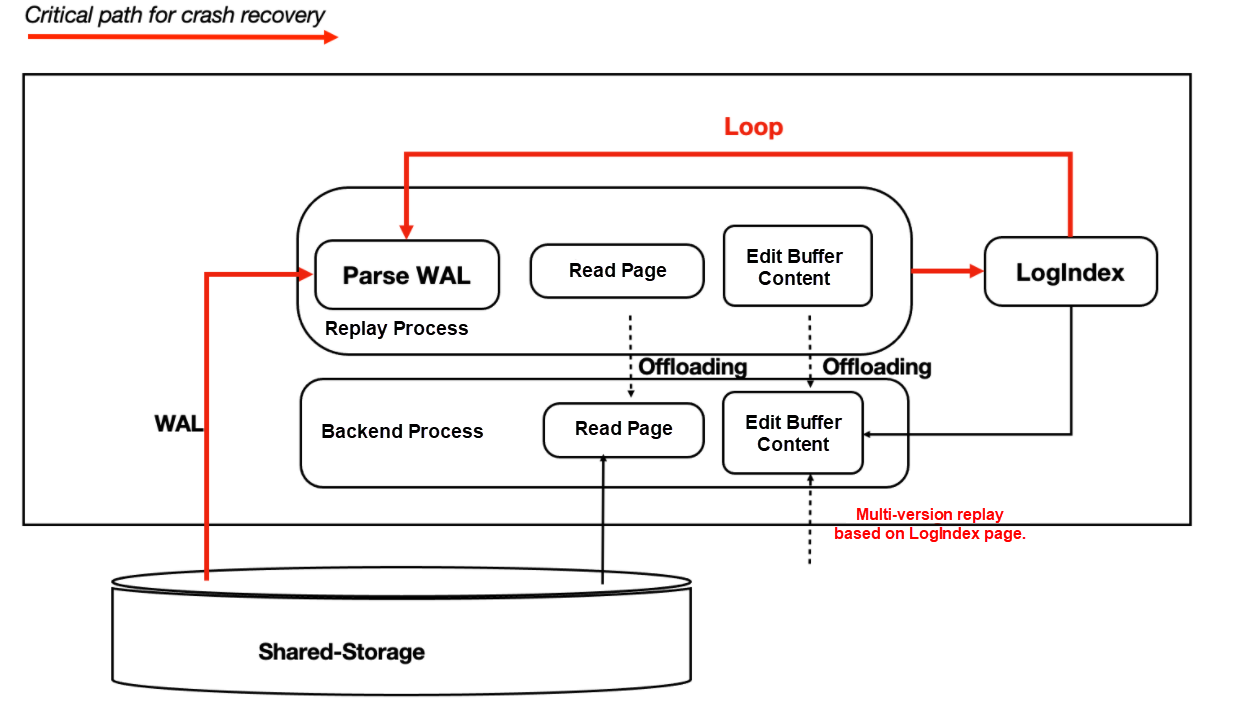

During log replay in a traditional database, a large number of pages are read, applied one by one, and written to disk. This work sits on the critical path of user read I/O. With storage and computing separated, you can do the following: if a page is not in the BufferPool of the read-only node, replay generates no I/O and only records the entry in LogIndex.

The following IO operations in the replay process can be offloaded to the session process:

As shown in the following figure, when applying a WAL meta in the replay process on the read-only node:

With the optimizations above, you can significantly reduce the latency of replay.
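A replay step that avoids I/O might look like the sketch below. The rule for a page that is not cached (record it in LogIndex only) comes from the text above. How a cached page is handled here, marking it outdated so that a session process applies the redo later, is an assumption made for this example. `apply_wal_meta` and the dictionary-based BufferPool and LogIndex are hypothetical names.

```python
def apply_wal_meta(page_id, lsn, buffer_pool, log_index):
    """Replay one WAL meta entry without reading any page from storage."""
    log_index.setdefault(page_id, []).append(lsn)   # always remember page -> LSN
    page = buffer_pool.get(page_id)
    if page is None:
        return "indexed"                # page not cached: no I/O, index entry only
    page["outdated"] = True             # assumed policy: a session replays it on access
    return "marked_outdated"


buffer_pool = {1: {"content": "p1", "outdated": False}}   # only page 1 is cached
log_index = {}
results = [apply_wal_meta(pid, lsn, buffer_pool, log_index)
           for pid, lsn in [(1, 10), (2, 11), (1, 12)]]
```

Either branch is a constant-time memory operation, so the replay process never waits on storage.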

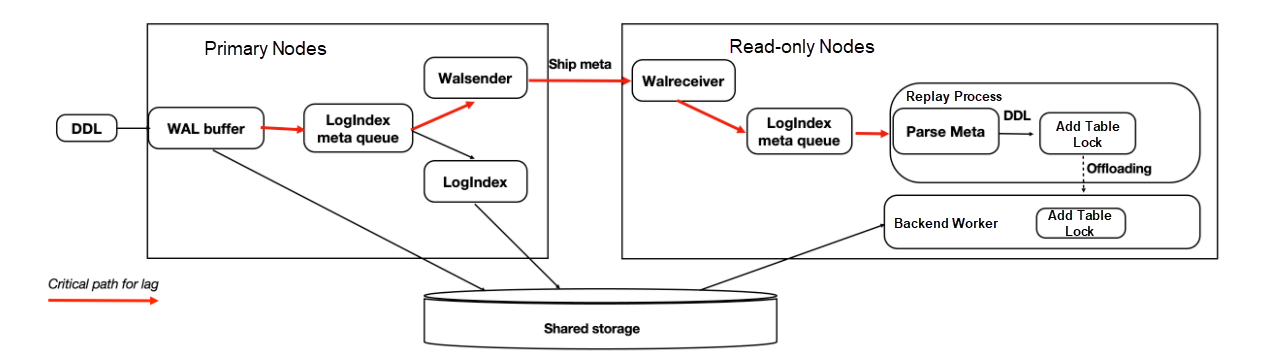

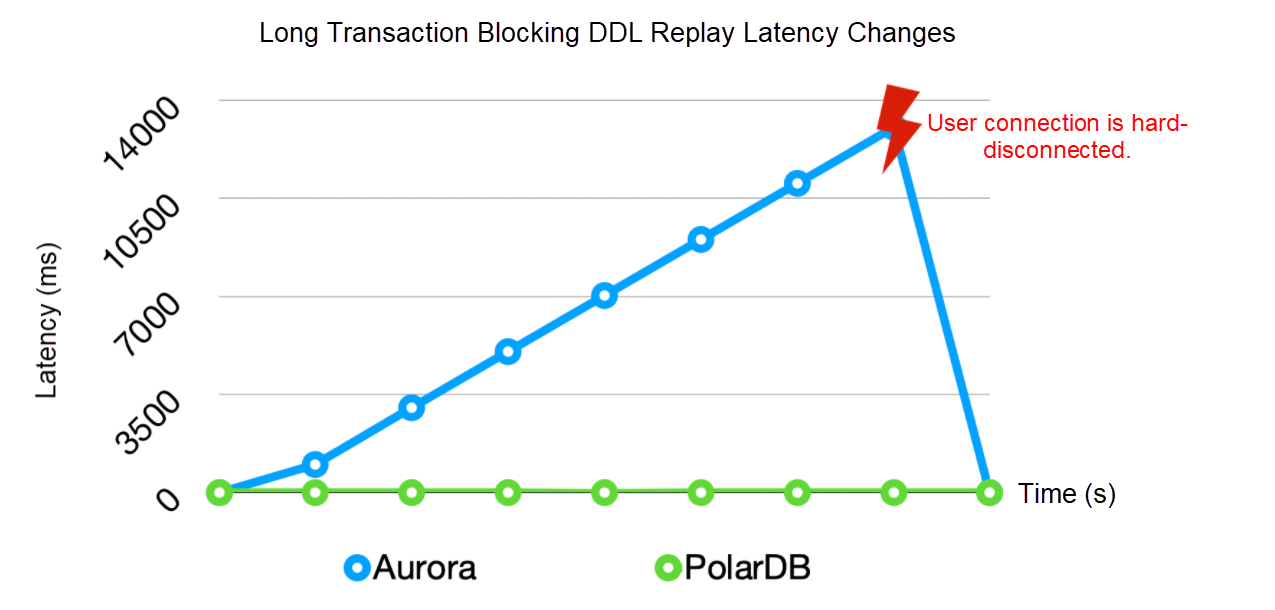

When you execute a DDL statement on the primary node (drop table, for example), the table must be locked on all nodes. This ensures that the primary node does not delete the table file while a read-only node is reading it (only one copy of the file is stored in Shared-Storage). The exclusive table lock is replicated to all read-only nodes through WAL, and each read-only node replays the DDL lock.

When the replay process replays the DDL lock, acquiring the table lock may block for a long time. Therefore, you can shorten the critical path of the replay process by offloading the DDL lock to other processes.

Through the preceding optimization, the replay process is always in a smooth state, and the critical path of replay is not blocked by waiting for DDL.

The preceding three optimizations significantly reduce the replication latency and bring the following advantages:

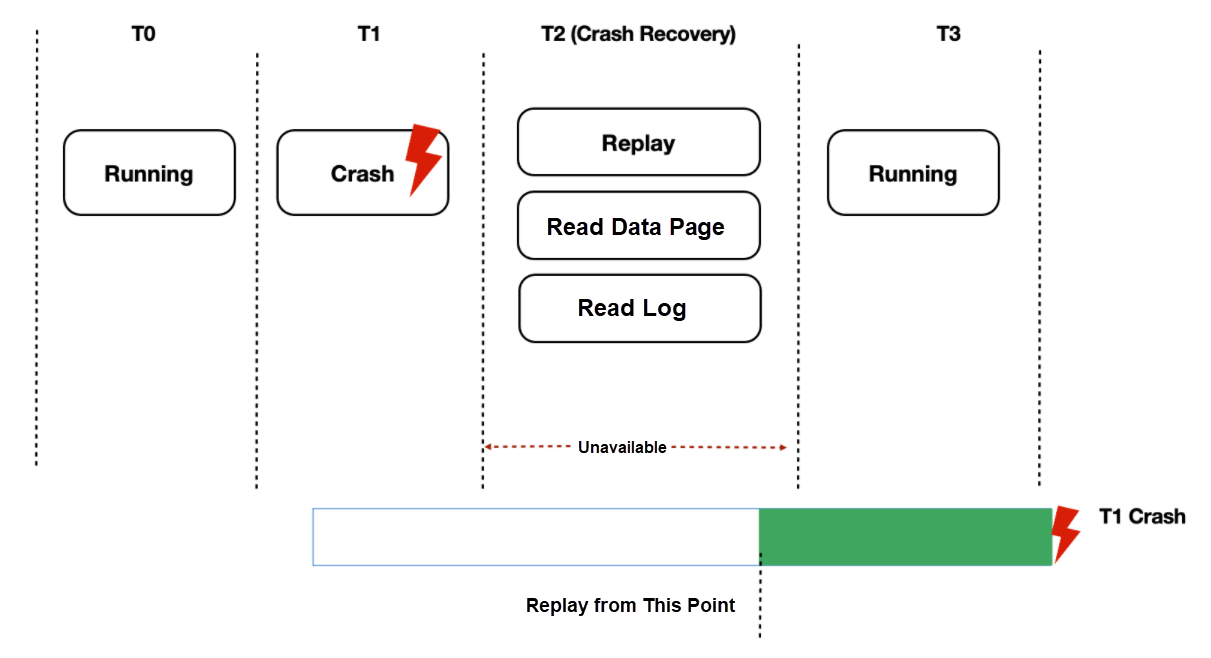

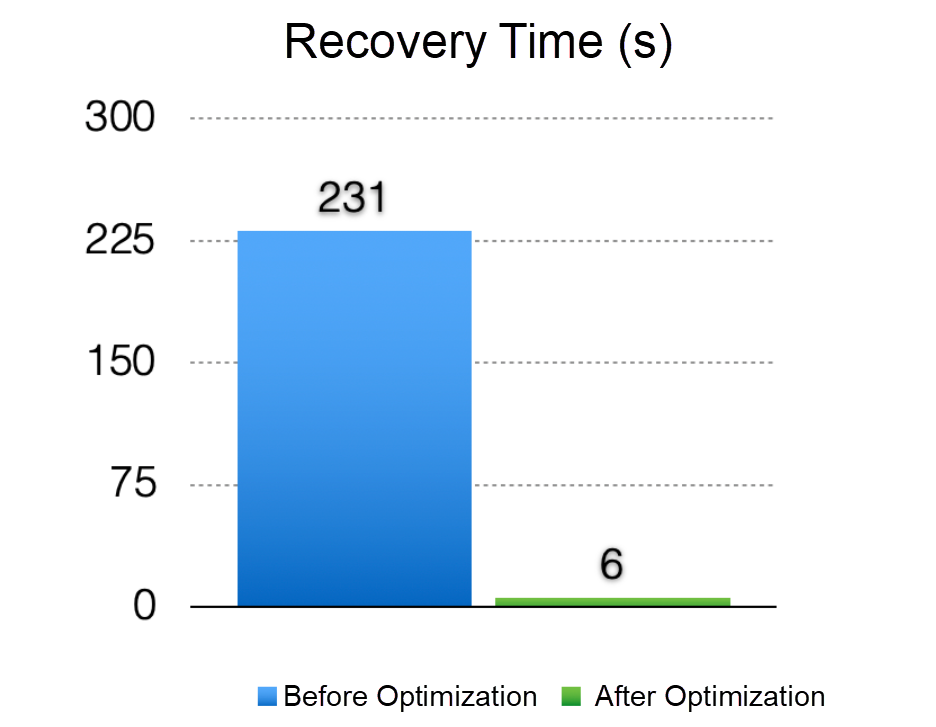

Recovery takes a long time after events such as a database OOM or crash. The root cause is slow log replay, and the problem is even more prominent under the Direct-IO model of shared storage.

As mentioned earlier, LogIndex gives read-only nodes Lazy replay. Recovery after a restart of the primary node is also, in essence, log replay, so Lazy replay can accelerate the recovery process as well:

After optimization (500MB log volume is played back):

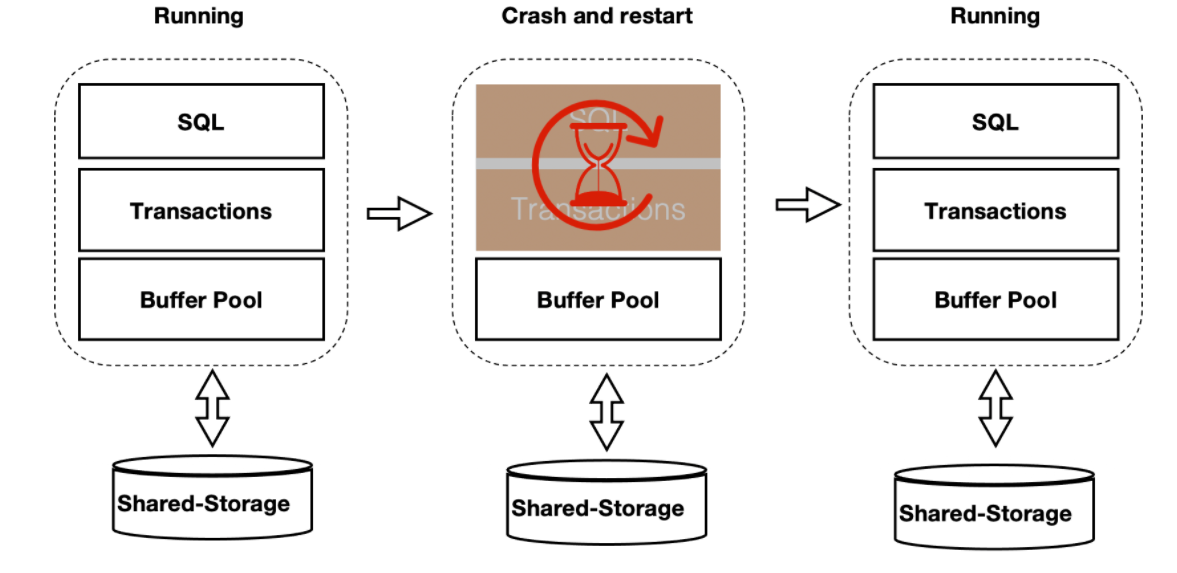

The preceding solution speeds up the restart during recovery. After the restart, however, session processes still have to read WAL and replay it to bring each page they need up to date, so responses are slow for a short time after recovery. The optimization is to keep the BufferPool alive across a crash and restart, as shown in the following figure.

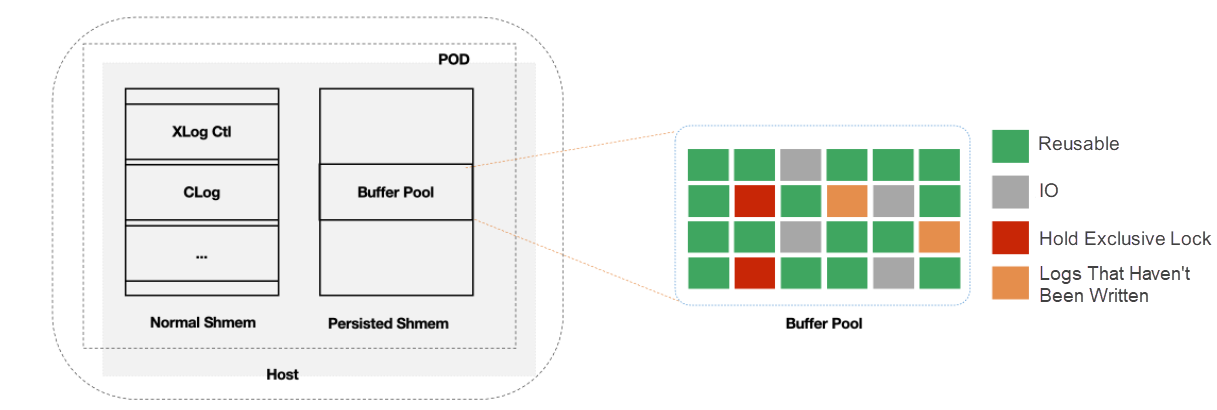

The shared memory in the kernel is divided into two parts:

However, not every page in the BufferPool can be reused. For example, a process may hold an X lock on a page before the restart and then crash, leaving no process to release the lock. Therefore, after a crash and restart, you need to traverse the entire BufferPool and remove the pages that cannot be reused. In addition, the recycling of the BufferPool depends on Kubernetes.
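The traversal can be pictured as a simple filter over buffer descriptors. This is only a sketch under the single condition the text names (an orphaned X lock); `Buffer` and `sweep_after_crash` are hypothetical names, and a real sweep would likely check more state than this.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    page_id: int
    x_locked: bool = False   # exclusive lock was held when the process crashed


def sweep_after_crash(pool: list) -> list:
    """Keep only buffers that are safe to reuse; orphaned X-locked pages are dropped."""
    return [buf for buf in pool if not buf.x_locked]


pool = [Buffer(1), Buffer(2, x_locked=True), Buffer(3)]
reusable = sweep_after_crash(pool)
```

Page 2 is discarded and will be rebuilt from storage and WAL on its next access, while pages 1 and 3 stay warm.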

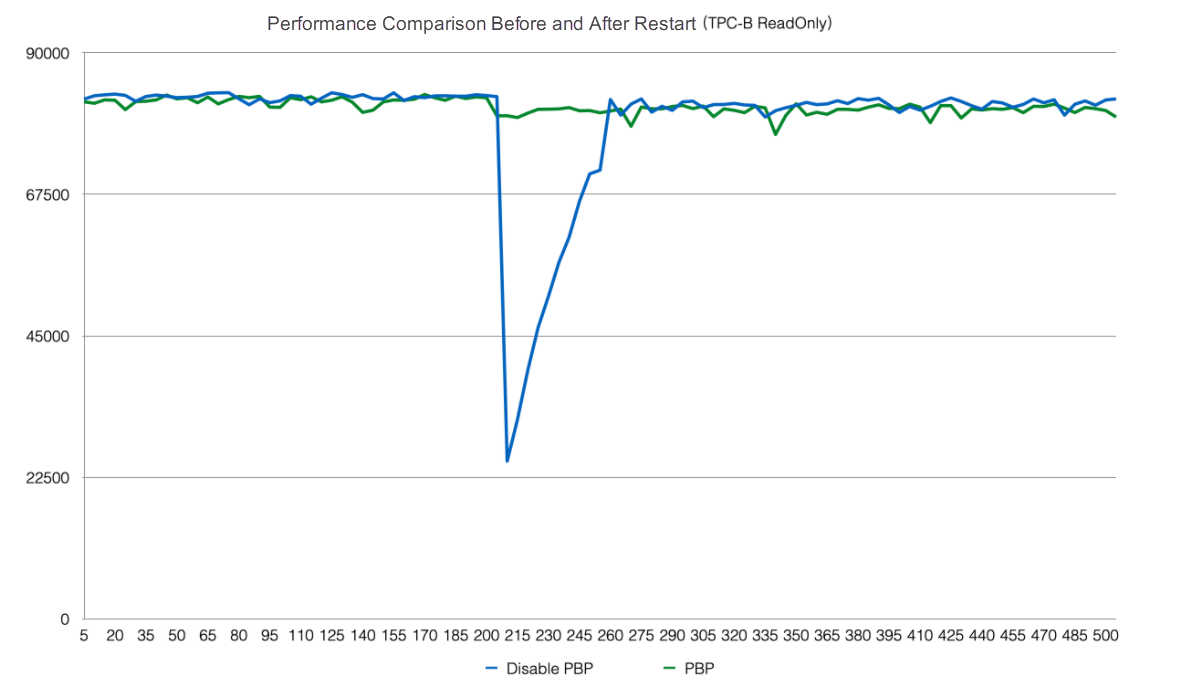

After this optimization, the performance is stable before and after the restart.

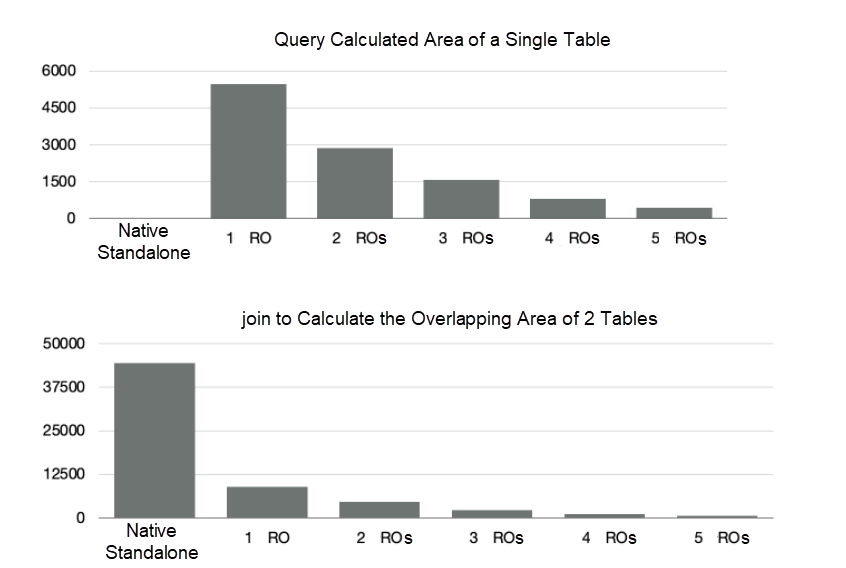

After read/write splitting, the layer beneath PolarDB is a storage pool whose I/O throughput is, in theory, unlimited. However, a large query can only run on a single compute node, whose CPU, memory, and I/O are limited. A single compute node therefore can neither exploit the large I/O bandwidth on the storage side nor accelerate large queries by adding computing resources. We developed MPP-style distributed parallel execution on top of Shared-Storage to accelerate OLAP queries in OLTP scenarios.

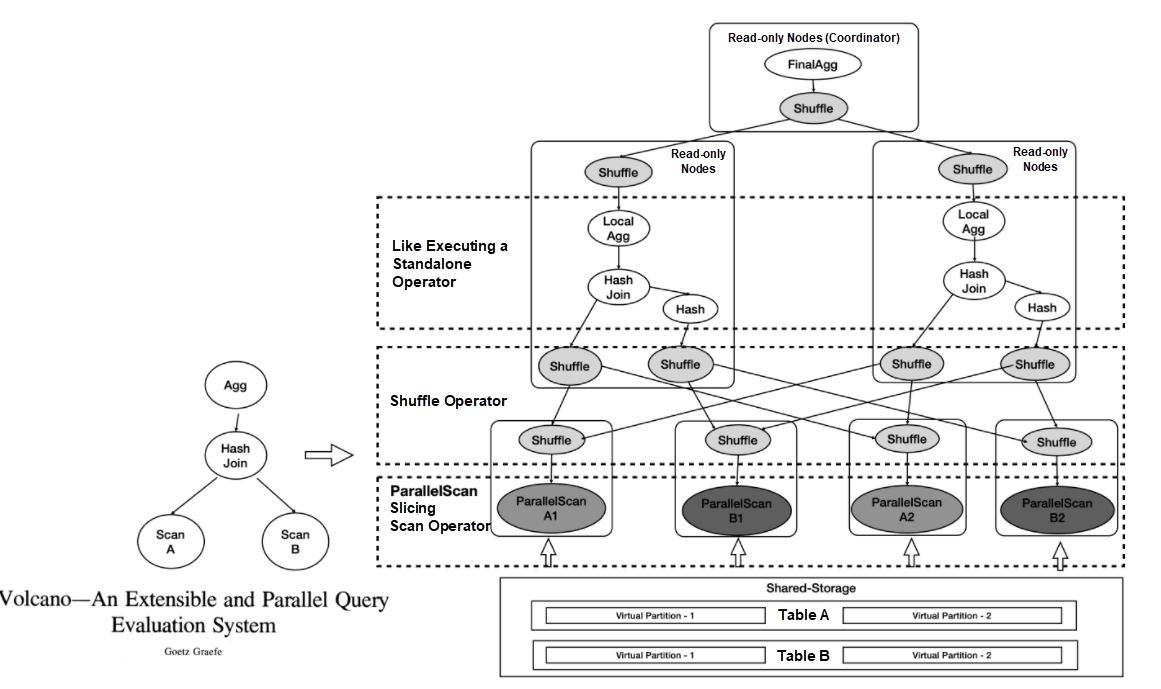

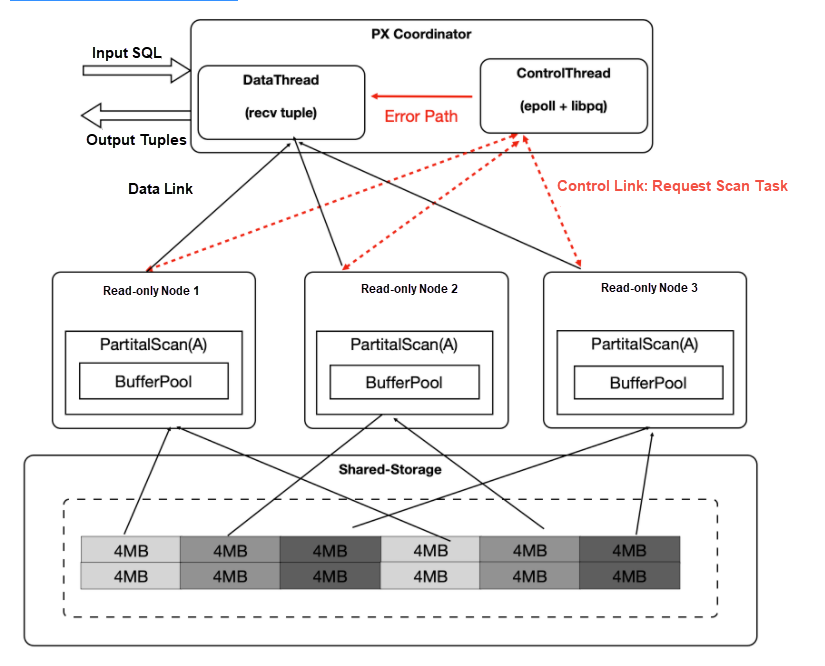

The underlying storage of PolarDB is shared across nodes, so tables cannot be scanned directly the way traditional MPP scans them. We added MPP distributed parallel execution to the original standalone execution engine and optimized it for Shared-Storage. This Shared-Storage-based MPP is the first of its kind in the industry. Its principle is as follows:

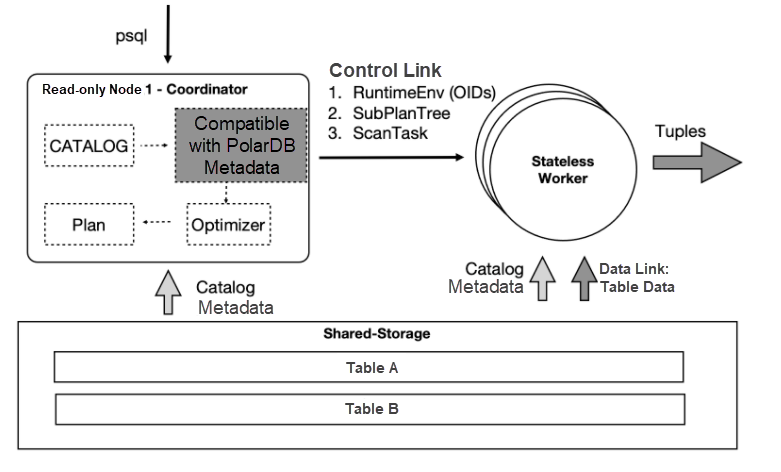

As shown in the figure:

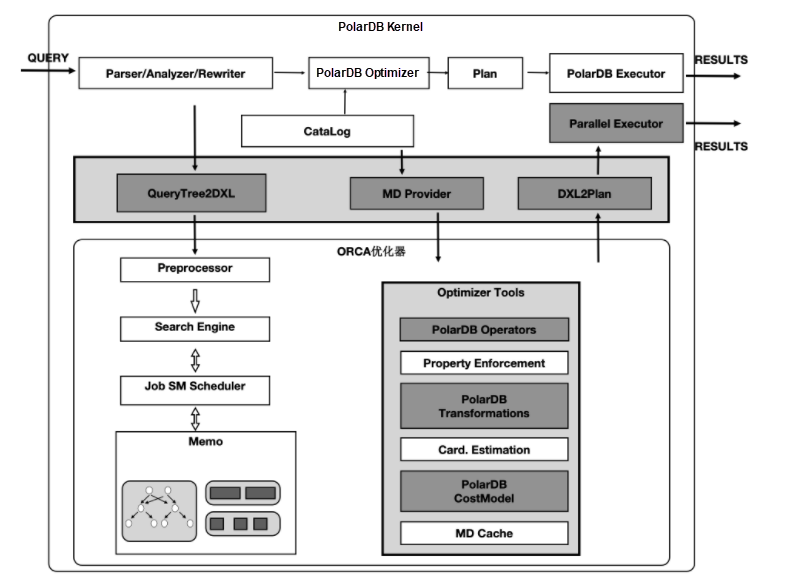

We extended the community GPORCA optimizer with Transformation Rules that are aware of shared storage characteristics. This lets the optimizer explore the plan space that is unique to shared storage. For example, a table in PolarDB can be scanned in full or scanned region by region. This is the essential difference from traditional MPP.

In the figure, the gray part is the adaptation part between the PolarDB kernel and the GPORCA optimizer.

The lower part is the ORCA kernel, and the gray module is an extension of the shared storage features in the ORCA kernel.

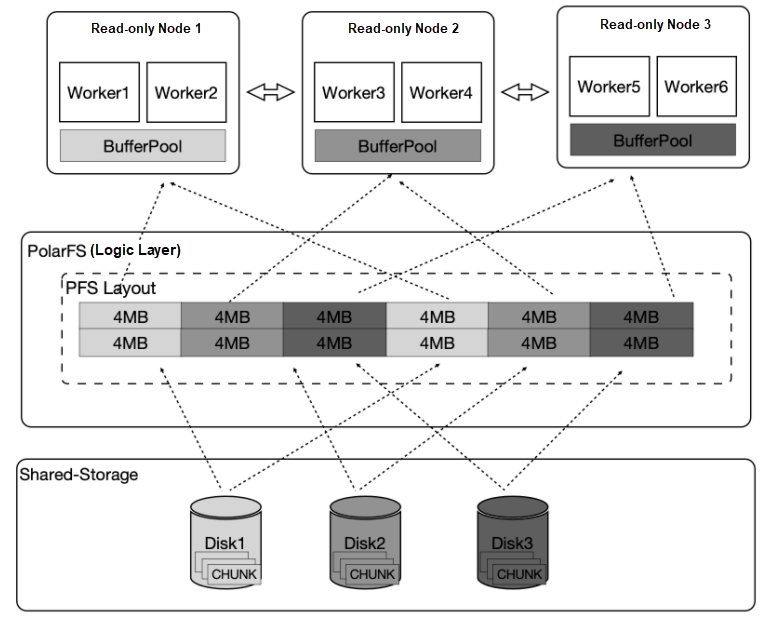

Four types of operators in PolarDB need to be parallelized. The following describes the parallelization of a representative seqscan operator. Logical splitting is performed in units of 4MB during sequential scanning to make maximum use of the large IO bandwidth of storage. IO is scattered to different disks as much as possible to achieve the effect that all disks provide read services at the same time. The advantage is that each read-only node only scans part of the table files, so the final table size that can be cached is the sum of the BufferPool of all read-only nodes.
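To make the 4 MB logical split concrete, the sketch below computes which block ranges each worker would scan. The 8 KB block size is PostgreSQL's default. Dealing units to workers round-robin is an assumption for the example, and `units_for_worker` is a hypothetical helper.

```python
BLOCK_SIZE = 8 * 1024                       # PostgreSQL's default block size
UNIT_SIZE = 4 * 1024 * 1024                 # 4 MB logical scan unit from the text
BLOCKS_PER_UNIT = UNIT_SIZE // BLOCK_SIZE   # 512 blocks per unit


def units_for_worker(total_blocks: int, worker: int, n_workers: int) -> list:
    """Block ranges scanned by one worker when 4 MB units are dealt round-robin."""
    n_units = -(-total_blocks // BLOCKS_PER_UNIT)   # ceiling division
    return [
        range(u * BLOCKS_PER_UNIT, min((u + 1) * BLOCKS_PER_UNIT, total_blocks))
        for u in range(worker, n_units, n_workers)
    ]


# A 10 MB table (1280 blocks) scanned by 2 workers.
plan = [units_for_worker(1280, w, 2) for w in range(2)]
```

Each worker touches a disjoint set of blocks, so its BufferPool caches a different slice of the table.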

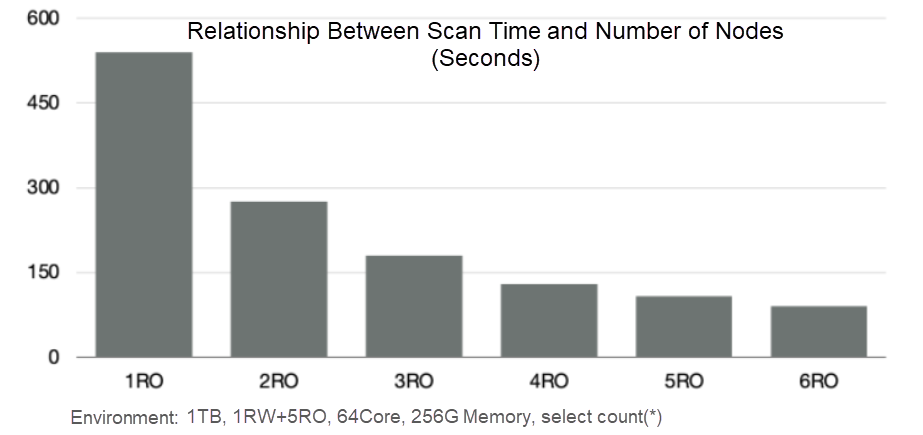

In the following chart:

| Buffer | Testing Scenario | Time | Read Throughput | Speedup |
|---|---|---|---|---|
| Off | Single RO, 64 concurrent | 47 minutes | 360 MB/s | 30 times |
| Off | 6 RO, 15 concurrent | 1.5 minutes | 11.34 GB/s in total | 30 times |
| On | Single RO, 64 concurrent | 37 minutes | 280 MB/s | 600 times |
| On | 6 RO, 40 concurrent | 3.75 seconds | 0 | 600 times |
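The rounded speedup figures in the table can be checked from its own time and throughput columns. The short calculation below uses only numbers from the table; treating 1 GB as 1000 MB is an assumption.

```python
def speedup(baseline_seconds: float, parallel_seconds: float) -> float:
    return baseline_seconds / parallel_seconds


no_buffer = speedup(47 * 60, 1.5 * 60)     # 47 minutes vs 1.5 minutes
with_buffer = speedup(37 * 60, 3.75)       # 37 minutes vs 3.75 seconds
throughput_ratio = (11.34 * 1000) / 360    # 11.34 GB/s vs 360 MB/s
```

The three ratios come out at roughly 31, 592, and 31.5, consistent with the "30 times" and "600 times" reported.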

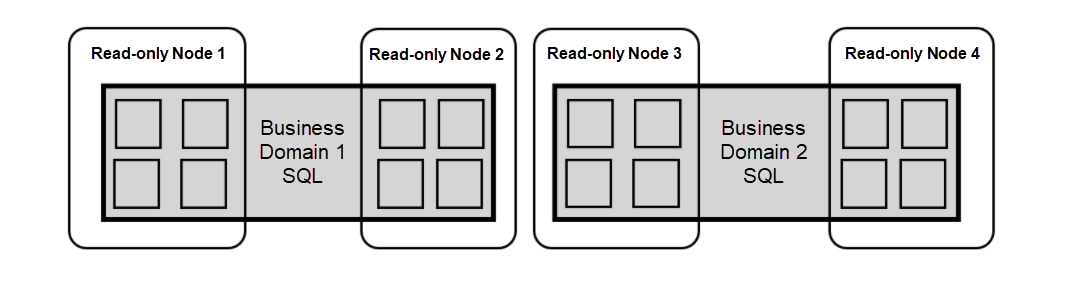

Data skew is an inherent problem in traditional MPP.

In PolarDB, large objects in heap tables are stored in associated TOAST tables, so no matter which table is used as the unit of splitting, the split cannot be balanced. In addition, jitter in the transaction, buffer, network, and I/O load of different read-only nodes can cause long-tail processes during distributed execution.

Note: Although it is dynamically allocated, try to maintain the affinity of the buffer. In addition, the context of each operator is stored in the worker's private memory, and the Coordinator does not store the information of specific tables.
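One way to combine dynamic allocation with buffer affinity is sketched below. Each worker first drains a fixed preferred set of scan units, which keeps its cache warm across runs, and only takes units from another worker once its own set is empty. This work-stealing policy, the `DynamicScanCoordinator` class, and `next_unit` are assumptions made for illustration, not PolarDB's actual scheduler.

```python
from collections import deque


class DynamicScanCoordinator:
    def __init__(self, n_units: int, n_workers: int) -> None:
        # Preferred units per worker; the same layout on every run preserves affinity.
        self._queues = [deque(range(w, n_units, n_workers)) for w in range(n_workers)]

    def next_unit(self, worker: int):
        """Return the next unit for this worker, or None when the scan is finished."""
        if self._queues[worker]:
            return self._queues[worker].popleft()    # affinity hit
        victim = max(self._queues, key=len)          # longest remaining queue
        return victim.pop() if victim else None      # take a unit from its tail


coord = DynamicScanCoordinator(n_units=6, n_workers=2)
# Worker 0 is fast and asks five times; worker 1 is slow and asks once.
fast = [coord.next_unit(0) for _ in range(5)]
slow = [coord.next_unit(1)]
```

The fast worker ends up scanning five of the six units, so a slow node no longer dictates the total scan time. The coordinator tracks only unit numbers and holds no table data.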

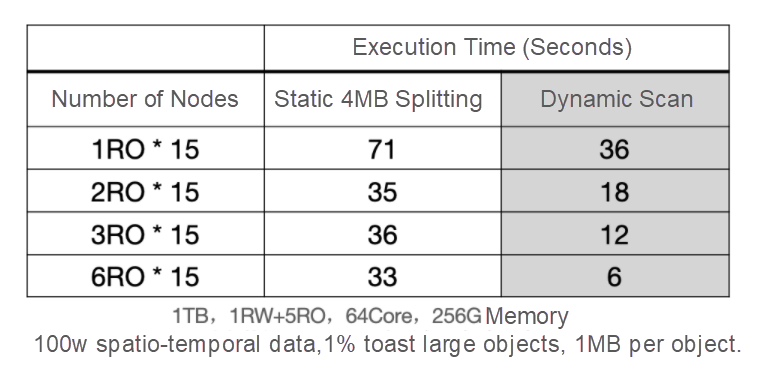

In the following table, when large objects are present, static splitting suffers from data skew, while dynamic scanning still scales linearly.

Taking advantage of data sharing, we can also support the extreme elasticity that cloud-native deployments require. Every external dependency of the modules on the coordinator path lives in shared storage, and the runtime parameters needed on the worker path are synchronized from the coordinator over the control link. This makes both the coordinator and the workers stateless.

Thus:

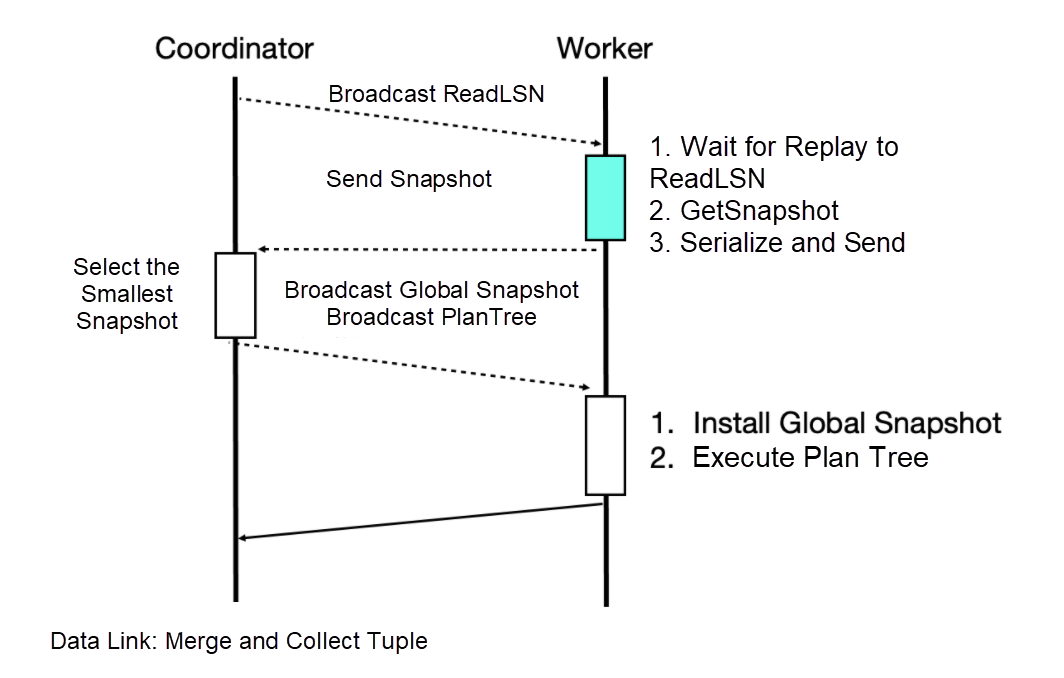

Data consistency across multiple compute nodes is achieved by waiting for replay and by the global snapshot mechanism. Waiting for replay ensures that every worker can see the required data version, while the global snapshot ensures that all workers select the same version.
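The interplay of the two mechanisms can be sketched as follows. The coordinator picks one snapshot, and each worker blocks until its own replay has reached it. This is a simplified model: `GlobalSnapshot` with a single `read_lsn` field, the `Worker` class, and its methods are hypothetical names, and a real snapshot carries more than one LSN.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class GlobalSnapshot:
    read_lsn: int   # every worker must have replayed WAL up to this point


class Worker:
    def __init__(self, replay_lsn: int) -> None:
        self._replay_lsn = replay_lsn
        self._cond = threading.Condition()

    def advance_replay(self, lsn: int) -> None:
        """Called by the replay process as it applies WAL."""
        with self._cond:
            self._replay_lsn = max(self._replay_lsn, lsn)
            self._cond.notify_all()

    def wait_for_replay(self, snapshot: GlobalSnapshot, timeout: float) -> bool:
        """Block until this worker has replayed up to the snapshot, or time out."""
        with self._cond:
            return self._cond.wait_for(
                lambda: self._replay_lsn >= snapshot.read_lsn, timeout
            )


snapshot = GlobalSnapshot(read_lsn=100)        # chosen once by the coordinator
workers = [Worker(replay_lsn=120), Worker(replay_lsn=90)]
before = [w.wait_for_replay(snapshot, timeout=0.01) for w in workers]
workers[1].advance_replay(105)                 # the lagging node catches up
after = [w.wait_for_replay(snapshot, timeout=0.01) for w in workers]
```

Because every worker reads with the same snapshot, a node that has replayed further ahead still returns the version the coordinator chose.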

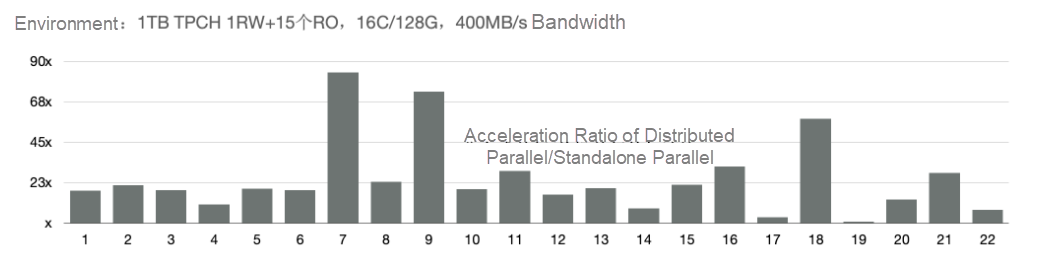

We used 1 TB of TPC-H data for testing. First, we compared PolarDB's new distributed parallel execution with single-machine parallel execution. Three SQL statements were accelerated by 60 times, and 19 SQL statements were accelerated by more than ten times.

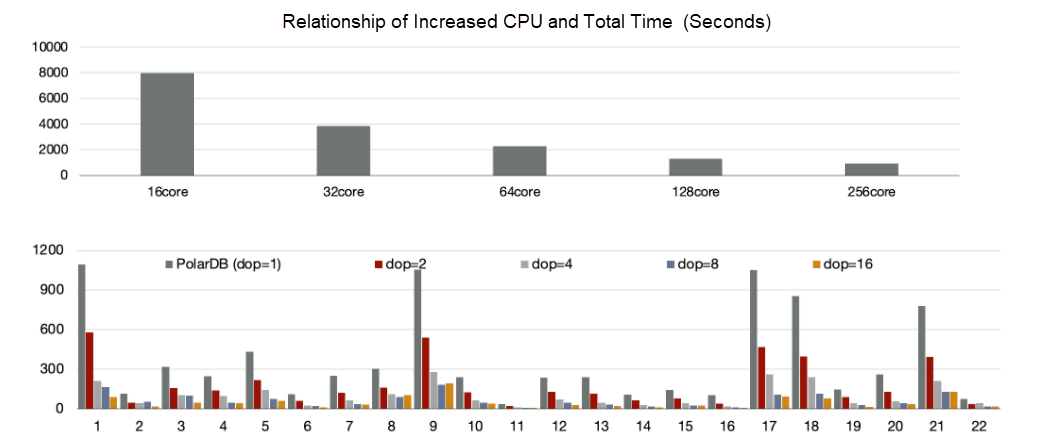

In addition, we tested the distributed execution engine with increasing numbers of CPU cores. Performance improves linearly from 16 to 128 cores. Looking at the 22 SQL statements individually, the performance of each statement also improves linearly as CPUs are added.

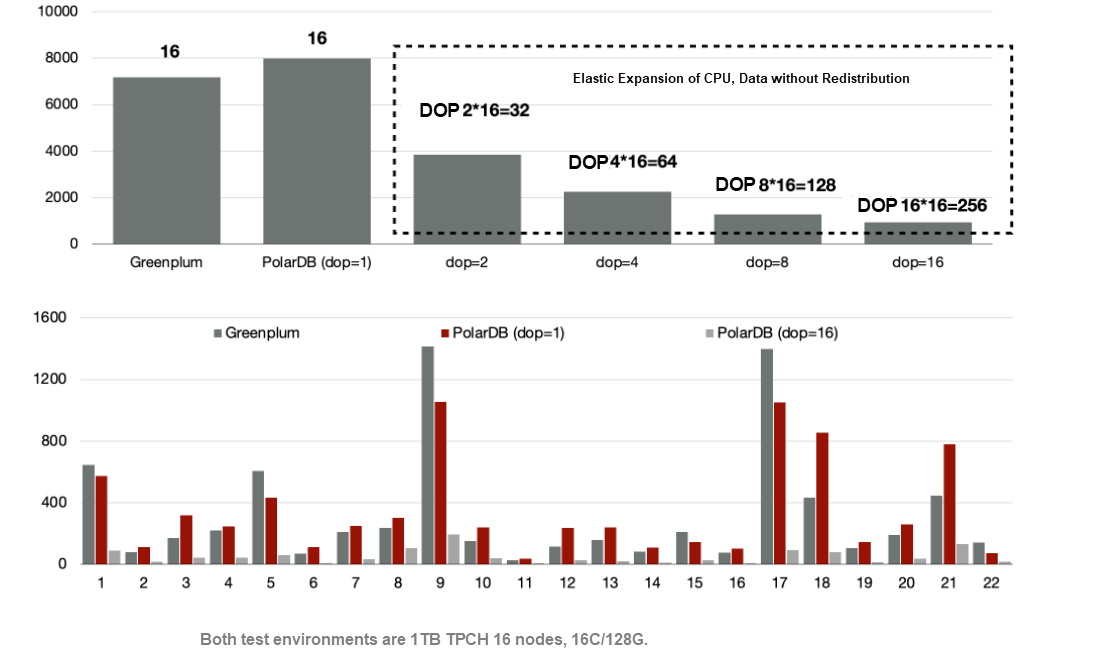

We also compared PolarDB with Greenplum, a traditional MPP database, with both using 16 nodes. The performance of PolarDB is 90% that of Greenplum.

As mentioned earlier, the distributed engine of PolarDB is elastic, and data does not need to be redistributed. When dop=8, the performance is 5.6 times that of Greenplum.

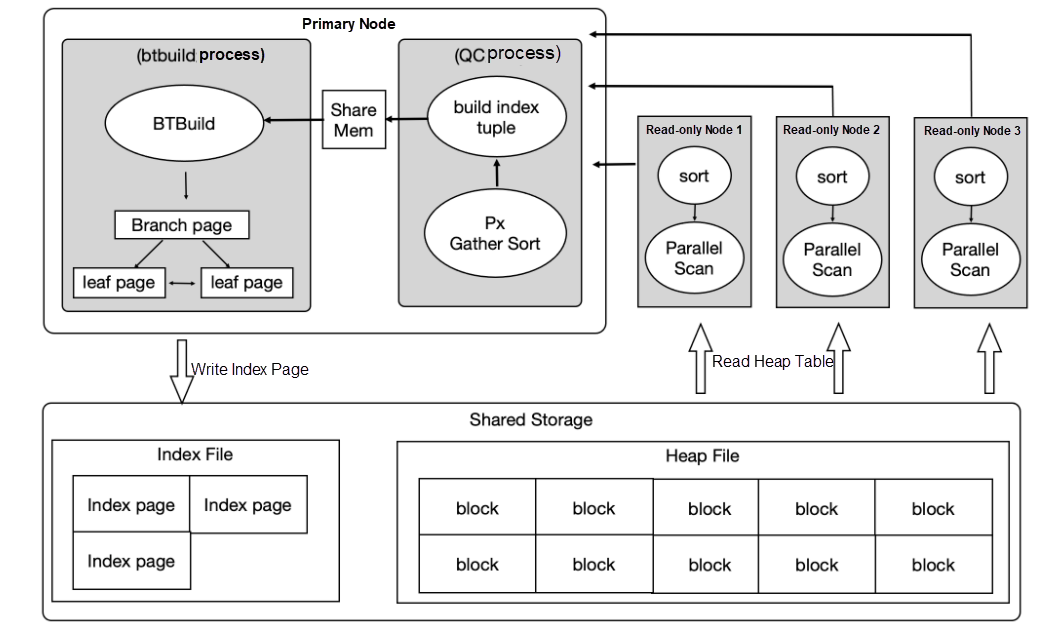

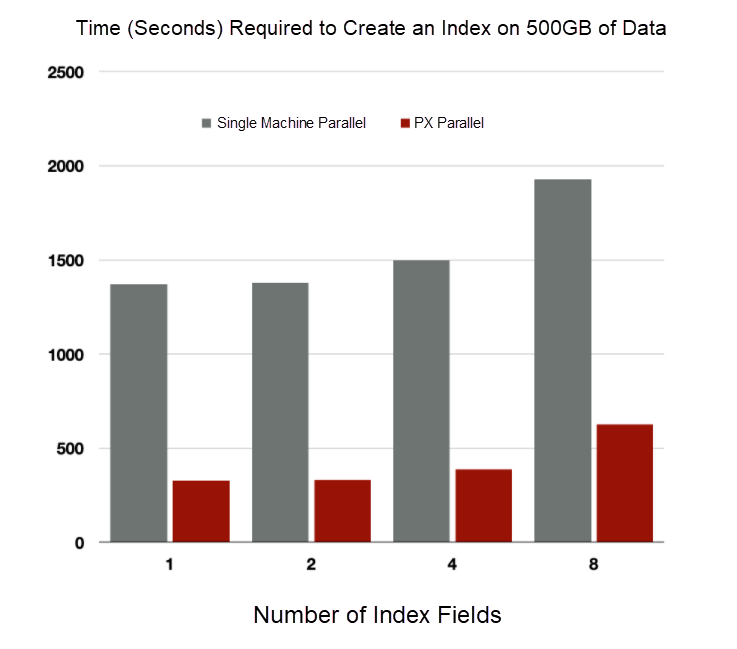

OLTP businesses build a large number of indexes. Our analysis of index creation shows that 80% of the time is spent sorting and building index pages, and 20% is spent writing index pages. Distributed parallelism accelerates the sorting phase, and batch writes are supported as well.

The preceding optimizations can improve index creation by four to five times.
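As a rough sanity check on that figure, Amdahl's law gives the speedup when only part of a job is accelerated. The sketch below assumes, purely for the estimate, that only the 80% sorting-and-building share is parallelized and that the 20% write share is unchanged. The parallel factors are illustrative, not measurements.

```python
def amdahl_speedup(parallel_fraction: float, factor: float) -> float:
    """Overall speedup when parallel_fraction of the work runs `factor` times faster."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / factor)


estimates = {n: round(amdahl_speedup(0.8, n), 2) for n in (4, 8, 16)}
```

Under this assumption the speedup is about 4 times at a factor of 16 and can never exceed 5 times, which is in line with the four-to-five-times improvement reported.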

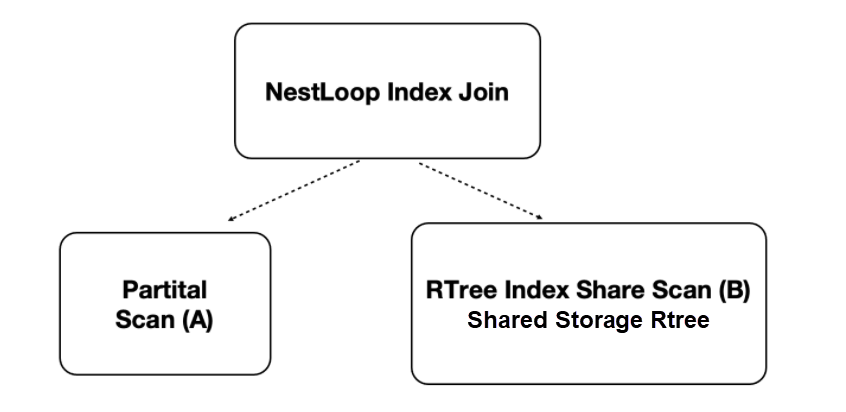

PolarDB is a multi-model database that supports spatio-temporal data. Spatio-temporal workloads are both compute-intensive and I/O-intensive, so they benefit from distributed execution. We have developed the ability to scan shared R-tree indexes on shared storage.

Performance:

This article analyzes the technical points of PolarDB at the architecture level:

Subsequent articles will discuss more technical details specifically, including how to use a shared-storage-based query optimizer, how LogIndex achieves high performance, how to flash back to any point in time, how to support MPP on shared-storage, and how to combine with X-Paxos to build high availability. Please stay tuned!
